PCI Bus

Total pages: 16

File type: PDF, size: 1020Kb

Recommended publications

QuickSpecs PACStation Plus

QuickSpecs PACStation Plus Overview
PACStation Plus | FL – Worldwide | Version 7 | January 1, 2017

1. Power Button Array
2. Internal System Cooling
3. USB Port with QUAD Access
4. Rear access Panel
5. Side access Panel. Support Access only.
6. Internal CPU PODS
7. Front Grill and faceplate
8. Video Interface Module
9. External Video System Controller
10. Video Workflow Manager
11. Primary Cable Harness
12. Internal Voltage Regulator
13. USB Transfer Ports
14. External Video Array (See Placement on page 10 for installation process)
15. Network Management Module
16. Screen Management Controller Hot plug.

Form Factor: Tower

Operating Systems
Preinstalled: Windows 10 Pro 64-bit; Windows 10 Pro 64-bit to Windows 7 Professional 64-bit; Windows 7 Professional 64-bit; Windows 8.1 Pro 64-bit
OS Supported: Windows 8/8.1 Enterprise 64-bit; Windows 7 Enterprise 64-bit; Windows 10 Pro 64-bit; Windows 10 Pro 64-bit to Windows 7 Professional 64-bit

Available Processors

| Name | Clock Speed | Cores | Cache (MB) | Memory Speed (MT/s) | QPI Speed (GT/s) | Featuring Intel® vPro™ Technology | Intel® Turbo Boost Technology¹ | Hyper-Threading | TDP (W) |
|---|---|---|---|---|---|---|---|---|---|
| Intel Core™ i3-5010U | 2.1 GHz | 2 | 3 | 1600 | NO | YES | NO | YES | 65 |
| Intel Core™ i5-5300U | 2.3-2.9 GHz | 2 | 3 | 1600 | NO | YES | YES | YES | 65 |
| Intel Core™ i7-5557U | 3.1-3.4 GHz | 2 | 4 | 1600 | NO | YES | YES | YES | 65 |

¹ The specifications shown in this column represent the following: (all core maximum turbo steps, one core maximum turbo steps).

FireWire 800 ExpressCard 34

FireWire 800 ExpressCard 34: Two FireWire 800 Ports for Your Laptop

Quick and Easy Upgrade: Now you can affordably upgrade your Mac® or PC laptop to fast FireWire 800 connectivity with the LaCie FireWire 800 ExpressCard 34*. It provides two independent 9-pin 1394b bilingual ports so you can connect two external FireWire 800 devices simultaneously. Since it's plug & play and hot-pluggable, you can quickly attach your favorite peripherals such as hard drives, CD/DVD drives, scanners, printers, etc. and use them instantly.

Simple and Mobile: Installation is extremely easy; there's no need to install any driver software because the LaCie FireWire 800 ExpressCard 34 is driver-free for computers running on Windows® XP, Vista®, or 7 or Mac OS X 10.5 or higher. It's small and lightweight for easy transporting and provides a communication distance of up to 100 meters. Whether you and your laptop are at home, at work or on the road, just plug it into an ExpressCard 34 or 54 slot and enjoy making new connections.

Connect FireWire Devices: The LaCie FireWire 800 ExpressCard 34 can run at 800Mbits/s or 400Mbits/s because it's also FireWire 400 (IEEE 1394a) backward compatible. In addition, it can support 200Mbits/s and 100Mbits/s devices. Based on the IEEE 1394b chipset from Texas Instruments, it's a versatile FireWire solution that's i.LINK compatible and OHCI compliant.

Multiple Uses: This "all-in-one" FireWire host solution is the ideal combination of wide compatibility and speedy performance. Whether you want to back up data from your laptop to your external LaCie hard disk, capture video from your camcorder, or download photos from your camera, the LaCie FireWire 800 ExpressCard 34 is the right choice
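The backward-compatibility claim above (800, 400, 200 and 100 Mbit/s devices on one card) can be illustrated with a simplified model. The sketch below assumes that a transfer runs no faster than the slowest node on the path, and that a node supporting a speed class also supports all lower ones; `path_speed` and `transfer_seconds` are hypothetical helper names, and the time estimate ignores all protocol overhead, so it is an idealized lower bound rather than a measured figure.

```python
"""Simplified model of IEEE 1394 speed selection (illustrative sketch)."""

# Speed classes in Mbit/s: S100/S200/S400 (IEEE 1394a) and S800 (IEEE 1394b).
SPEEDS_MBPS = (100, 200, 400, 800)


def path_speed(max_speeds):
    """Return the speed (Mbit/s) usable along a path of nodes.

    Each entry is the maximum speed of one node on the path. Because a node
    that supports a given speed also supports all lower ones, the usable
    speed is the slowest node's maximum.
    """
    if not max_speeds:
        raise ValueError("path must contain at least one node")
    for speed in max_speeds:
        if speed not in SPEEDS_MBPS:
            raise ValueError(f"unsupported speed class: {speed}")
    return min(max_speeds)


def transfer_seconds(size_bytes, speed_mbps):
    """Idealized transfer time at the raw bit rate (no protocol overhead)."""
    return size_bytes * 8 / (speed_mbps * 1_000_000)


if __name__ == "__main__":
    card = 800  # FireWire 800 ExpressCard port
    for device in SPEEDS_MBPS:  # peripherals at 100/200/400/800 Mbit/s
        speed = path_speed([card, device])
        print(f"{device:>3} Mbit/s device -> link at {speed} Mbit/s, "
              f"1 GB in ~{transfer_seconds(1_000_000_000, speed):.0f} s")
```

Under this model, plugging a FireWire 400 drive into the card gives a 400 Mbit/s link, so an ideal 1 GB copy takes twice as long as with a FireWire 800 drive.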

ECESATUSB1: ExpressCard Power eSATA Port Controller Card

1 Port ExpressCard Power eSATA Controller Adapter Card (StarTech ID: ECESATUSB1)

This ExpressCard Power eSATA port controller card can be installed in an available ExpressCard 34/54 mm slot to provide a powered eSATA connection. When used with standard eSATA or USB devices, the same uniquely designed port can instead provide external SATA (data only) or USB 2.0 connectivity. An ideal solution for using an eSATA SSD flash drive on your laptop, the Power eSATA card delivers both a high-speed eSATA connection and power from the combined USB port. A versatile connectivity solution, the card features built-in port multiplier support, allowing multi-drive eSATA storage enclosures to be connected to the host computer using a single eSATA cable. Taking advantage of the transfer speed of the eSATA connection and the 5V power output of the USB 2.0 port, the ExpressCard Power eSATA adapter is the perfect answer for connecting compatible mobile drive enclosures, similar to the built-in Power eSATA port provided by the following laptop computers: Toshiba: Satellite E105, A350, Satellite Pro P300; Qosmio G50, X305, Portege A600, M750, R500, R600; and Tecra M10, R10, A10. Dell: Studio 15, 17; Latitude E6400, E6500; Precision M2400, M4400, M6400, M6400 Covet.

Applications:
• Connects to eSATA SSD flash drives, such as OCZ Throttle, Kangaru e-Flash drives and Ridata Racer series flash drives
• Provides connectivity between notebooks and PCs with ExpressCard slots and external drive enclosures with a Power eSATA (eSATA+USB) port, or with regular eSATA

Practice Test Version 1.8 LPI 117-101: Practice Exam

LPI 117-101: LPI 101 General Linux, Part I. Practice Test Version 1.8 (Test-King.com)

QUESTION NO: 1 CORRECT TEXT
You suspect that a new Ethernet card might be conflicting with another device. Which file should you check within the /proc tree to learn which IRQs are being used by which kernel drivers?
Answer: interrupts

QUESTION NO: 2
How many SCSI IDs for peripherals can SCSI-1 support?
A. 5
B. 6
C. 7
D. 8
Answer: C
Explanation: SCSI-1 supports a total of 7 peripherals: its 8-bit bus provides 8 IDs (0 to 7), and one of them is taken by the host adapter. There are several different types of SCSI devices. The original SCSI specification is commonly referred to as SCSI-1. The newer specification, SCSI-2, offers increased speed and performance, as well as new commands. Fast SCSI increases throughput to 10MB per second. Fast-Wide SCSI provides a wider (16-bit) data path, throughput of up to 20MB per second, and up to 15 devices. Then there are Ultra-SCSI and Ultra-Wide-SCSI.

QUESTION NO: 3
You need to install a fax server. Which type of fax/modem should you install to ensure Linux compatibility?
A. External Serial Fax/modem
B. External USB Fax/modem
C. Internal ISA Fax/modem
D. Internal PCI Fax/modem
Answer: A

QUESTION NO: 4
You are running Linux 2.0.36 and you need to add a USB mouse to your system. Which of the following statements is true?
A. You need to rebuild the kernel.
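Question 1 points at /proc/interrupts. As a sketch of how that file can be read programmatically, the Python below parses its tabular layout (a header of CPU columns, then one row per IRQ with per-CPU counts and a free-form description) and looks up which IRQ lists a given driver. The exact columns differ between kernel versions and architectures, the `SAMPLE` text is made up for illustration, and `parse_interrupts` and `find_driver` are hypothetical helper names.

```python
"""Minimal parser for the /proc/interrupts table (illustrative sketch)."""

# Made-up sample in the usual layout; real output varies by kernel and arch.
SAMPLE = """\
           CPU0       CPU1
  0:         45          0   IO-APIC   2-edge      timer
  1:          9          0   IO-APIC   1-edge      i8042
 16:       1234         56   IO-APIC  16-fasteoi   ehci_hcd:usb1, eth0
 NMI:         0          0   Non-maskable interrupts
 ERR:         0
"""


def parse_interrupts(text):
    """Return a list of (irq_label, per_cpu_counts, description) tuples."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    ncpu = len(lines[0].split())  # header line: CPU0 CPU1 ...
    rows = []
    for line in lines[1:]:
        if ":" not in line:
            continue
        irq, rest = line.split(":", 1)  # split only at the IRQ label's colon
        fields = rest.split()
        # Consume leading numeric fields, at most one per CPU column.
        i = 0
        while i < ncpu and i < len(fields) and fields[i].isdigit():
            i += 1
        counts = [int(x) for x in fields[:i]]
        description = " ".join(fields[i:])  # chip, trigger type, device names
        rows.append((irq.strip(), counts, description))
    return rows


def find_driver(rows, name):
    """Return the IRQ labels whose description lists `name` as a token."""
    hits = []
    for irq, _counts, description in rows:
        tokens = [tok.strip(",") for tok in description.split()]
        if name in tokens:
            hits.append(irq)
    return hits


if __name__ == "__main__":
    try:
        with open("/proc/interrupts") as f:
            text = f.read()
    except OSError:
        text = SAMPLE  # not on Linux: fall back to the made-up sample
    for irq, counts, description in parse_interrupts(text):
        print(f"{irq:>5} total={sum(counts):<10} {description}")
```

When an IRQ is shared, its row lists several device names separated by commas (IRQ 16 in the sample), which is a useful first clue when checking whether a new card collides with another device.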

Express Complete

PCI Express Cards. Made by RME.
Express Complete: HDSPe MADI, 128-Channel 192 kHz MADI PCI Express Card

While many MADI devices require an additional clock line, HDSPe MADI uses SteadyClock™ to extract the reference clock at lowest jitter directly from the MADI signal, making long-distance connections even more convenient. RME offers several options to build up powerful yet affordable I/O systems. For example, in combination with the ADI-648 an 8 x ADAT optical computer interface is realized, unbeatable in price and performance. The ADI-6432 serves as a bidirectional AES/EBU frontend with 64 channels I/O. On a powerful PC/Mac, three HDSPe MADI cards can be used at the same time, providing up to 192 inputs and 192 outputs on a single workstation.

What is MADI?
MADI (Multichannel Audio Digital Interface) is the perfect solution for the transmission of multiple audio channels. A thin cable transmits up to 64 audio channels, lossless, over a distance of up to 2000 meters! All signals in one direction go through one cable. Only two MADI cables can interface a stage, a theatre or a conference hall.

Specifications
▪ 1 Lane PCI Express endpoint device (no PCI Express to PCI bridge)
▪ 2.5 Gbps line speed
▪ Packet-based full-duplex communication (up to 500 MB/s transfer rate)
▪ All settings changeable in real-time
▪ Automatic and intelligent master/slave clock control
▪ Sync sources: MADI coaxial, MADI optical, word clock, internal
▪ Varipitch: by input signal or word clock
▪ Sample rates: 44.1 kHz, 48 kHz, 88.2 kHz, 96 kHz, 176.4 kHz, 192 kHz,
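The 2.5 Gbps line speed and the 500 MB/s transfer rate quoted above are consistent once PCI Express 1.x line coding is taken into account. A minimal sketch, assuming 8b/10b encoding (8 payload bits per 10 bits on the wire) and decimal megabytes, and ignoring packet (TLP/DLLP) overhead: one lane carries 250 MB/s in each direction, and 500 MB/s is the full-duplex sum. The function names are hypothetical; the 64-channels-per-card figure comes from the text.

```python
"""Back-of-the-envelope PCI Express 1.x and MADI arithmetic (illustrative sketch)."""


def pcie_gen1_throughput_mb_s(lanes=1, line_rate_gbps=2.5):
    """Return (per_direction, full_duplex_total) in MB/s.

    Assumes 8b/10b line coding, as used by PCI Express 1.x, and ignores
    packet overhead, so real payload throughput is somewhat lower.
    """
    data_gbps = line_rate_gbps * 8 / 10           # remove 8b/10b coding overhead
    per_direction = data_gbps * 1000 / 8 * lanes   # Gbit/s -> MB/s (decimal)
    return per_direction, 2 * per_direction       # full duplex: both directions at once


def madi_channels(cards, channels_per_card=64):
    """Total channels per direction for several MADI cards (64 each)."""
    return cards * channels_per_card


if __name__ == "__main__":
    one_way, total = pcie_gen1_throughput_mb_s()
    print(f"x1 Gen1: {one_way:.0f} MB/s per direction, {total:.0f} MB/s total")
    print(f"3 cards: {madi_channels(3)} inputs and {madi_channels(3)} outputs")
```

The same helper shows why three cards give the 192 inputs and 192 outputs mentioned above: 3 × 64 channels per direction.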

cifX PC Cards for Real-Time Ethernet and Fieldbus

cifX PC cards for Real-Time Ethernet and Fieldbus: all major industrial protocols; all common PC card formats; one hardware for all Real-Time Ethernet protocols; master and slave; wide range of device drivers; same application interface for all networks.

PC cards in all formats for all protocols: The cifX PC card family is the unified standard supporting all Real-Time Ethernet and Fieldbus systems for PC-based automation. The protocol stack is executed autonomously on the PC card, and process data exchange with the host is done via Dual-Port Memory or DMA (Direct Memory Access). Thanks to the common Hilscher Platform Strategy, all PC cards use the same driver and tools, independent of protocol and card format. A change of communication protocol is done by just loading a different firmware. A rotary switch provides easy and reliable slot assignment for the PCI and PCI Express card types. In addition, special types are available, e.g. with integrated NVRAM, 2-channel cards or a detached network interface. A complete software package is always included in the scope of delivery. This package consists of one FDT-based configuration tool (IEC standard) for all products and networks, loadable firmware, documentation and a driver toolkit. Because the cards are built on Hilscher's own netX network controller, a 10-year delivery guarantee is granted.

cifX – Communication for PC based Automation. Same Function – Same API – Same Tools.

NVRAM included: Especially for compact Box PCs, Hilscher offers specific types with integrated NVRAM for secure data storage.

Spectra GmbH & Co. KG (Austrian branch); Spectra (Schweiz) AG

Adaptec SAS RAID

Adaptec SAS RAID Configuration and the Windows OS Installation Instructions

After all the hardware has been installed, you must first configure the SAS RAID before you install the Windows Operating System and other software drivers.

1 The Adaptec SAS RAID Controller Driver
If you do not wish to configure Adaptec SAS RAID functions, please go directly to Section 2 for the Windows OS Installation instructions.

Introduction to SAS (Serial Attached SCSI)
In addition to SATA (Serial ATA), which is supported by the Intel ESB2 South Bridge, your motherboard has a built-in Adaptec SAS (Serial Attached SCSI) 9410W Controller. SAS supports serial link data transfer rates up to 3Gbps. With the dynamic SAS infrastructure built in, your motherboard supports both SATA and SAS, providing the user with unparalleled data storage expansion and interconnectivity capability.

Using the Adaptec RAID Configuration Utility
The onboard SAS Controller is enabled by default. To disable it, please set the SAS Enable Jumper to Pins 2-3 (see the Jumper Section in Chapter 2 of your motherboard manual for details). When the system is detecting the SAS Controller BIOS, make sure that the 16-digit Adapter WWN address displays. If this number is not shown, you will not be able to use the controller. Once the WWN address appears, press the <Ctrl> and <A> keys simultaneously when prompted to access the Adaptec SAS RAID BIOS. (Note: Use the arrow keys to highlight an item and then press <Enter> to select an option.

Dell EMC PowerEdge T340 Technical Guide

Dell EMC PowerEdge T340 Technical Guide
Regulatory Model: E60S; Regulatory Type: E60S001; Dec. 2020, Rev. A07

Notes, cautions, and warnings
NOTE: A NOTE indicates important information that helps you make better use of your product.
CAUTION: A CAUTION indicates either potential damage to hardware or loss of data and tells you how to avoid the problem.
WARNING: A WARNING indicates a potential for property damage, personal injury, or death.

© 2018 - 2020 Dell Inc. or its subsidiaries. All rights reserved. Dell, EMC, and other trademarks are trademarks of Dell Inc. or its subsidiaries. Other trademarks may be trademarks of their respective owners.

1 Product Overview
Topics: Introduction; New technologies

Introduction
The Dell EMC PowerEdge T340 is a reliable, easy-to-manage, and scalable 1-socket tower server for growing businesses and remote offices/branch offices.

New technologies
The PowerEdge T340 supports Intel® Xeon® E-2100 and E-2200 product family processors to help run applications faster, and it supports full-featured remote management (iDRAC9). The T340 is versatile enough to address many customer segments and workloads. Target workloads include:
● Small and medium businesses and organizations: collaboration/sharing productivity applications, databases, web serving, backup/recovery, and mail and messaging.
● ROBO (remote office/branch office): applications and workloads specific to the particular industry, e.g. Retail, Healthcare, Finance, Education, etc.

The following table shows the list of new technologies offered by the PowerEdge T340:

| New technologies | Detailed descriptions |
|---|---|
| Intel® C246 series chipset | Please refer to the chipset section for details. |
| Intel® Xeon® processor E-2100 and E-2200 Product Family | The Intel® processor that works with Intel® C246 series chipset. |

Focus on Your Core Competency: The COM Express Standard

Computer-On-Modules

The COM Express Standard – Adaptable to Your Specific Needs
COM Express was developed and is maintained by PICMG (PCI Industrial Computer Manufacturers Group). COM Express was released in the summer of 2005 and is the most widely used COM standard. The standard defines the physical size, interconnect, and thermal interface for a COM. The original COM Express specification was written to support peripherals that were available at the time of release, including USB 2.0, SATA, PATA, Ethernet, VGA, LVDS, SDVO, PCI, and PCI Express Gen 1. Several pinout types were defined by PICMG, each one having a specific combination of peripherals, expansion interfaces and connector layout. The most widely used COM Express module is Type 2, followed by Type 1.

Focus on your Core Competency
A Computer-On-Module (COM) provides a convenient solution for OEMs that need computing functionality but are not interested in investing the time and resources into designing a single board computer. There are several COM standards, one of the more popular being COM Express (also referred to as COM.0). COM Express modules contain the CPU, memory, common peripherals (USB, SATA) and an I/O interface (PCI and PCI Express). OEMs that use COM Express modules design a carrier board that contains any required I/O interfaces not found on the COM Express module as well as connectors for external I/O. A COM based solution allows an OEM to focus on their core competency and not the design and maintenance of a single board computer. A COM Express based solution with a custom carrier board offers

i.MX Linux® Reference Manual

i.MX Linux® Reference Manual
Document Number: IMXLXRM, Rev. 1, 01/2017, NXP Semiconductors

Contents
Chapter 1 About this Book
1.1 Audience
1.1.1 Conventions
1.1.2 Definitions, Acronyms, and Abbreviations
Chapter 2 Introduction
2.1 Overview
2.1.1 Software Base
2.1.2 Features
Chapter 3 Machine-Specific Layer (MSL)
3.1 Introduction
3.2 Interrupts (Operation)

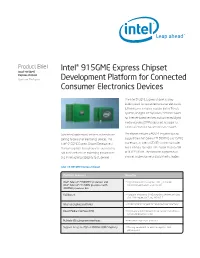

Intel® 915GME Express Chipset Development Platform for Connected Consumer Electronics Devices

Product Brief: Intel® 915GME Express Chipset
Consumer Electronics Development Platform for Connected Consumer Electronics Devices

The Intel 915GME Express chipset is a key building block for connected consumer electronics (CE) platforms, including modular digital TV subsystems, IP digital set top boxes, "set back" boxes for Internet-based services and connected digital media recorders (DMRs) designed to support a variety of emerging connected usage models.

Connected applications, services and media are coming to consumer electronics devices. The Intel® 915GME Express Chipset Development Platform provides the components you need to add Intel® architecture processing performance and IP networking capability to CE devices.

The chipset includes a 400 MHz system bus to support the Intel® Celeron® M 900MHz and 1.5GHz processors, an internal 2D/3D graphics controller and a memory controller that supports up to 2GB of DDR2 SDRAM. The controller supports dual-channel, single-channel and asymmetric modes.

Intel® CE 915GME Express Chipset Platform

| Features | Benefits |
|---|---|
| Intel® Celeron® M 900MHz processor and Intel® Celeron® M 1.5GHz processor with 400MHz processor bus | High performance to support next-generation connected applications and services |
| SDVO port | Supports third-party SDVO compliant devices including DVI, TV-Encoder, LVDS, and HDMI 1.2 |
| Internal graphics controller | 2D/3D graphics support for advanced user interfaces |
| Direct Media Interface (DMI) | High-bandwidth chip-to-chip interconnect for optimum system-level performance |
| Multiple I/O subsystem interfaces | Flexible platform configurations |
| Support for up to 2GB of 400MHz DDR2 memory | Memory bandwidth for optimum system-level performance |

[Figure: Intel® 915GME Express Chipset Block Diagram]
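The "Memory bandwidth" benefit listed above can be put into numbers with the usual peak-bandwidth formula: transfers per second × bytes per transfer × channels. A minimal sketch, assuming standard 64-bit DDR2 channels and reading "400MHz DDR2" as DDR2-400 (400 MT/s); `peak_bandwidth_gb_s` is a hypothetical helper, the results are theoretical ceilings rather than measured figures, and the asymmetric mode is not modelled.

```python
"""Theoretical peak DRAM bandwidth (illustrative sketch)."""


def peak_bandwidth_gb_s(mega_transfers_per_s, bus_width_bits=64, channels=1):
    """Peak bandwidth in GB/s (decimal).

    Assumes each channel is a standard 64-bit DDR2 channel and that the bus
    never idles (refresh, bank conflicts and turnarounds are ignored).
    """
    bytes_per_transfer = bus_width_bits // 8
    return mega_transfers_per_s * 1e6 * bytes_per_transfer * channels / 1e9


if __name__ == "__main__":
    # DDR2-400: 400 million transfers per second on each channel.
    for ch in (1, 2):
        print(f"{ch} channel(s): {peak_bandwidth_gb_s(400, channels=ch):.1f} GB/s")
```

Under these assumptions, single-channel mode peaks at 3.2 GB/s and dual-channel mode doubles that to 6.4 GB/s.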

Intel FPGA Programmable Acceleration Card N3000

Intel® Acceleration Stack User Guide: Intel FPGA Programmable Acceleration Card N3000
Updated for Intel® Acceleration Stack for Intel® Xeon® CPU with FPGAs: 1.1
UG-20244 | 2021.06.14

Contents
1. About this Document
1.1. Acronym List
2. System Requirements
2.1. Cooling Requirements
3. Hardware Installation
3.1. Installing the Intel FPGA PAC N3000
4. Installing the OPAE Software
4.1. Install Additional Packages
4.2. Install the Release Package
4.2.1. Remove Previous OPAE Packages
4.2.2. Install the Acceleration Stack for Runtime