Assessing the Standard-Compliance for Multi-Threading Primitives in C Compilers

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

User's Manual

rBOX610 Linux Software User’s Manual Disclaimers This manual has been carefully checked and believed to contain accurate information. Axiomtek Co., Ltd. assumes no responsibility for any infringements of patents or any third party’s rights, and any liability arising from such use. Axiomtek does not warrant or assume any legal liability or responsibility for the accuracy, completeness or usefulness of any information in this document. Axiomtek does not make any commitment to update the information in this manual. Axiomtek reserves the right to change or revise this document and/or product at any time without notice. No part of this document may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written permission of Axiomtek Co., Ltd. Trademarks Acknowledgments Axiomtek is a trademark of Axiomtek Co., Ltd. ® Windows is a trademark of Microsoft Corporation. Other brand names and trademarks are the properties and registered brands of their respective owners. Copyright 2014 Axiomtek Co., Ltd. All Rights Reserved February 2014, Version A2 Printed in Taiwan ii Table of Contents Disclaimers ..................................................................................................... ii Chapter 1 Introduction ............................................. 1 1.1 Specifications ...................................................................................... 2 Chapter 2 Getting Started ...................................... -

LTIB Quick Start: Targeting the ColdFire MCF54418 Tower Board, by Soledad Godinez and Jaime Hueso, Guadalajara, Mexico

Freescale Semiconductor. Document Number: AN4426. Application Note, Rev. 0, December 2011. LTIB Quick Start: Targeting the ColdFire MCF54418 Tower Board, by Soledad Godinez and Jaime Hueso, Guadalajara, Mexico. Contents: 1 Introduction; 2 LTIB; 2.1 Introduction; 2.2 Installing the BSP; 2.3 Running LTIB; 3 Target deployment; 3.1 The Tower kit; 3.2 Constructing the Tower kit; 3.3 Programming U-boot, kernel, and root file system; 3.4 Configuring U-boot; 3.5 Running; 4 Conclusions. 1 Introduction: The purpose of this document is to indicate step-by-step how to accomplish these tasks: • Install the BSP on a host development system. • Run Linux Target Image Builder (LTIB) to build target images. • Boot Linux on the ColdFire MCF54418 Tower board. This document is a guide for people who want to properly set up the Freescale Linux Target Image Builder (LTIB) for the ColdFire MCF54418 Tower Board Support Package (BSP). 2 LTIB, 2.1 Introduction: Freescale GNU/Linux -

A Type-Checking Preprocessor for Cilk 2, a Multithreaded C Language

A Typechecking Preprocessor for Cilk 2, a Multithreaded C Language, by Robert C. Miller. Submitted to the Department of Electrical Engineering and Computer Science in partial fulfillment of the requirements for the degrees of Bachelor of Science in Computer Science and Engineering and Master of Engineering in Electrical Engineering and Computer Science at the Massachusetts Institute of Technology, May. Copyright Massachusetts Institute of Technology. All rights reserved. Author: Department of Electrical Engineering and Computer Science, May. Certified by: Charles E. Leiserson, Thesis Supervisor. Accepted by: F. R. Morgenthaler, Chairman, Department Committee on Graduate Theses. Abstract: This thesis describes the typechecking optimizing translator that translates Cilk, a C extension language for multithreaded parallel programming, into C. The Cilk-to-C translator is based on C-to-C, a tool I developed that parses, type checks, analyzes, and regenerates a C program. With a translator based on C-to-C -

N2429 V 1 Function Failure Annotation

ISO/IEC JTC 1/SC 22/WG14 N2429, September 23, 2019, v 1. Function failure annotation. Niall Douglas (ned Productions Ltd, Ireland) and Jens Gustedt (INRIA & ICube, Université de Strasbourg, France). We have been seeing an evolution in proposals for the best syntax for describing how to mark how a function fails, such that compilers and linkers can much better optimise function calls than at present. These papers were spurred by [WG21 P0709] Zero-overhead deterministic exceptions, which proposes deterministic exception handling for C++, whose implementation could be very compatible with C code. P0709 resulted in [WG21 P1095] Zero overhead deterministic failure – A unified mechanism for C and C++, which proposed a C syntax and exception-like mechanism for supporting C++ deterministic exceptions. That was responded to by [WG14 N2361] Out-of-band bit for exceptional return and errno replacement, which proposed an even wider reform, whereby the most popular idioms for function failure in C code would each gain direct calling convention support in the compiler. This paper synthesises all of the proposals above into a common proposal, and does not duplicate some of the detail in the above papers for brevity and clarity. It hews closely to what [WG14 N2361] proposes, but with different syntax, and in addition it shows how the corresponding C++ features can be constructed on top of it, without losing call compatibility with C. Contents: 1 Introduction; 1.1 Differences between WG14 N2361 and WG21 P1095; 2 Proposed Design; 2.1 errno-related function failure editions; 2.2 When to choose which edition -

The C-- Language Reference Manual

The C-- Language Reference Manual. Simon Peyton Jones, Thomas Nordin, Dino Oliva, Pablo Nogueira Iglesias. April 23, 1998. Contents: 1 Introduction; 2 Syntax definition; 2.1 General; 2.2 Comments; 2.3 Names; 2.4 Name scope; 2.5 The import and export declarations; 2.6 Constants; 2.6.1 Integer and floating point numbers; 2.6.2 Characters and strings; 3 Fundamental concepts in C--; 3.1 Memory; 3.2 Data segment; 3.3 Code segment; 3.4 Types; 3.5 Local variables (or registers); 3.6 Addresses; 3.7 Names; 3.8 Foreign language interface; 4 Data layout directives; 4.1 Labels; 4.2 Initialisation; 4.3 Alignment; 5 Procedures; 5.1 Procedure definition; 5.2 Statements; 5.2.1 "skip;"; 5.2.2 Declaration; 5.2.3 Assignment; 5.2.4 -

Lesson 01 Programming with C

Lesson 01 Programming with C Pijushkanti Panigrahi What is C ? • The C is a programming Language, developed by Dennis Ritchie for creating system applications that directly interact with the hardware devices such as drivers, kernels, etc. • C programming is considered as the base for other programming languages, that is why it is known as mother language. • C is famous for its Compactness. • C language is case sensitive. 2 Features It can be defined by the following ways: Mother language System programming language Procedure-oriented programming language Structured programming language Mid-level programming language 3 1) C as a mother language ? • C language is considered as the mother language of all the modern programming languages because most of the compilers, JVMs, Kernels, etc. are written in C language , and most of the programming languages follow C syntax, for example, C++, Java, C#, etc. • It provides the core concepts like the array , strings , functions , file handling , etc . that are being used in many languages like C++ , Java , C# , etc. 4 2) C as a system programming language • A system programming language is used to create system software. • C language is a system programming language because it can be used to do low-level programming (for example driver and kernel) . • It is generally used to create hardware devices, OS, drivers, kernels, etc. For example, Linux kernel is written in C. 5 3) C as a procedural language • A procedure is known as a function, method, routine, subroutine, etc. A procedural language specifies a series of steps for the program to solve the problem. -

The Book of C Release 2018.01

The Book of C, Release 2018.01. Joel Sommers. Sep 16, 2018. Contents: 1 Introduction: The C Language; 2 Getting your feet wet in the C; 2.1 Hello, somebody; 2.2 Hello, clang; 3 Basic Types and Operators; 3.1 Integer types; 3.2 Floating point types; 3.3 Boolean type; 3.4 Basic syntactic elements; 4 Control Structures; 4.1 if Statement; 4.2 The conditional expression (ternary operator); 4.3 switch statement; 4.4 while loop; 4.5 do-while loop; 4.6 for loop; 5 Arrays and Strings; 5.1 Arrays; 5.2 Multidimensional Arrays; 5.3 C Strings; 6 Aggregate Data Structures; 6.1 The C struct; 7 Functions; 7.1 Function syntax; 7.2 Data types for parameters and return values; 7.3 Storage classes, the stack and the heap -

Using the GNU Compiler Collection (GCC)

Using the GNU Compiler Collection, for gcc version 6.1.0 (GCC). Richard M. Stallman and the GCC Developer Community. Published by: GNU Press, a division of the Free Software Foundation, 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA. Website: http://www.gnupress.org General: [email protected] Orders: [email protected] Tel 617-542-5942, Fax 617-542-2652. Last printed October 2003 for GCC 3.3.1. Printed copies are available for $45 each. Copyright © 1988-2016 Free Software Foundation, Inc. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with the Invariant Sections being "Funding Free Software", the Front-Cover Texts being (a) (see below), and with the Back-Cover Texts being (b) (see below). A copy of the license is included in the section entitled "GNU Free Documentation License". (a) The FSF's Front-Cover Text is: A GNU Manual. (b) The FSF's Back-Cover Text is: You have freedom to copy and modify this GNU Manual, like GNU software. Copies published by the Free Software Foundation raise funds for GNU development. Short Contents: Introduction; 1 Programming Languages Supported by GCC; 2 Language Standards Supported by GCC; 3 GCC Command Options; 4 C Implementation-Defined Behavior; 5 C++ Implementation-Defined Behavior; 6 Extensions to -

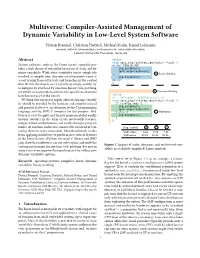

Multiverse: Compiler-Assisted Management of Dynamic Variability in Low-Level System Software

Multiverse: Compiler-Assisted Management of Dynamic Variability in Low-Level System Software. Florian Rommel, Christian Dietrich, Michael Rodin, Daniel Lohmann. {rommel, dietrich, lohmann}@sra.uni-hannover.de, [email protected] Leibniz Universität Hannover, Germany. Abstract: System software, such as the Linux kernel, typically provides a high degree of versatility by means of static and dynamic variability. While static variability can be completely resolved at compile time, dynamic variation points come at a cost arising from extra tests and branches in the control flow. Kernel developers use it (a) only sparingly and (b) try to mitigate its overhead by run-time binary code patching, for which several problem/architecture-specific mechanisms have become part of the kernel. We think that means for highly efficient dynamic variability should be provided by the language and compiler instead and present multiverse, an extension to the C programming language and the GNU C compiler for this purpose. Multiverse is easy to apply and targets program-global configuration switches in the form of (de-)activatable features, integer-valued configurations, and rarely-changing program modes. At run time, multiverse removes the overhead of evaluating them on every invocation. Microbenchmark results from applying multiverse to performance-critical features of the Linux kernel, cPython, the musl C-library and GNU grep show that multiverse can not only replace and unify the [Figure 1: three variants of spin_irq_lock(raw_spinlock_t *lock): (A) static binding via #ifdef CONFIG_SMP, (B) dynamic binding via if (config_smp), (C) multiverse, which is variant B marked with __attribute__((multiverse)). Average cycles for A / B / C: SMP=false 6.64 / 9.75 / 7.48; SMP=true 28.82 / 28.91 / 28.86.] -

Objective-C 2.0 Essentials Third Edition

Objective-C 2.0 Essentials – Third Edition. ISBN-13: 978-1480262102. © 2012 Neil Smyth. This book is provided for personal use only. Unauthorized use, reproduction and/or distribution strictly prohibited. All rights reserved. The content of this book is provided for informational purposes only. Neither the publisher nor the author offers any warranties or representation, express or implied, with regard to the accuracy of information contained in this book, nor do they accept any liability for any loss or damage arising from any errors or omissions. This book contains trademarked terms that are used solely for editorial purposes and to the benefit of the respective trademark owner. The terms used within this book are not intended as infringement of any trademarks. Find more eBooks online at http://www.eBookFrenzy.com. Rev. 3.0. Table of Contents: 1. About Objective-C Essentials; 1.1 Why are you reading this?; 1.2 Supported Platforms; 2. The History of Objective-C; 2.1 The C Programming Language -

Objective-C Fundamentals

Objective-C Fundamentals. Christopher K. Fairbairn, Johannes Fahrenkrug, Collin Ruffenach. Manning, Shelter Island. For online information and ordering of this and other Manning books, please visit www.manning.com. The publisher offers discounts on this book when ordered in quantity. For more information, please contact Special Sales Department, Manning Publications Co., 20 Baldwin Road, PO Box 261, Shelter Island, NY 11964. Email: [email protected] ©2012 by Manning Publications Co. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by means electronic, mechanical, photocopying, or otherwise, without prior written permission of the publisher. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in the book, and Manning Publications was aware of a trademark claim, the designations have been printed in initial caps or all caps. Recognizing the importance of preserving what has been written, it is Manning’s policy to have the books we publish printed on acid-free paper, and we exert our best efforts to that end. Recognizing also our responsibility to conserve the resources of our planet, Manning books are printed on paper that is at least 15 percent recycled -

Objective-C Language and GNUstep Base Library Programming Manual

Objective-C Language and GNUstep Base Library Programming Manual. Francis Botto (Brainstorm), Richard Frith-Macdonald (Brainstorm), Nicola Pero (Brainstorm), Adrian Robert. Copyright © 2001-2004 Free Software Foundation. Permission is granted to make and distribute verbatim copies of this manual provided the copyright notice and this permission notice are preserved on all copies. Permission is granted to copy and distribute modified versions of this manual under the conditions for verbatim copying, provided also that the entire resulting derived work is distributed under the terms of a permission notice identical to this one. Permission is granted to copy and distribute translations of this manual into another language, under the above conditions for modified versions. Table of Contents: 1 Introduction; 1.1 What is Object-Oriented Programming?; 1.1.1 Some Basic OO Terminology; 1.2 What is Objective-C?; 1.3 History; 1.4 What is GNUstep?; 1.4.1 GNUstep Base Library; 1.4.2 GNUstep Make Utility; 1.4.3 A Word on the Graphical Environment; 1.4.4 The GNUstep Directory Layout; 1.5 Building Your First Objective-C Program; 2 The Objective-C Language