Machine Learning Approaches to Human Body Shape Analysis

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Role of Body Fat and Body Shape on Judgment of Female Health and Attractiveness: an Evolutionary Perspective

View metadata, citation and similar papers at core.ac.uk brought to you by CORE Psychological Topics 15 (2006), 2, 331-350 Original Scientific Article – UDC 159.9.015.7.072 572.51-055.2 Role of Body Fat and Body Shape on Judgment of Female Health and Attractiveness: An Evolutionary Perspective Devendra Singh University of Texas at Austin Department of Psychology Dorian Singh Oxford University Department of Social Policy and Social Work Abstract The main aim of this paper is to present an evolutionary perspective for why women’s attractiveness is assigned a great importance in practically all human societies. We present the data that the woman’s body shape, or hourglass figure as defined by the size of waist-to-hip-ratio (WHR), reliably conveys information about a woman’s age, fertility, and health and that systematic variation in women’s WHR invokes systematic changes in attractiveness judgment by participants both in Western and non-Western societies. We also present evidence that attractiveness judgments based on the size of WHR are not artifact of body weight reduction. Then we present cross-cultural and historical data which attest to the universal appeal of WHR. We conclude that the current trend of describing attractiveness solely on the basis of body weight presents an incomplete, and perhaps inaccurate, picture of women’s attractiveness. “... the buttocks are full but her waist is narrow ... the one for who[m] the sun shines ...” (From the tomb of Nefertari, the favorite wife of Ramses II, second millennium B.C.E.) “... By her magic powers she assumed the form of a beautiful woman .. -

Understanding 7 Understanding Body Composition

PowerPoint ® Lecture Outlines 7 Understanding Body Composition Copyright © 2009 Pearson Education, Inc. Objectives • Define body composition . • Explain why the assessment of body size, shape, and composition is useful. • Explain how to perform assessments of body size, shape, and composition. • Evaluate your personal body weight, size, shape, and composition. • Set goals for a healthy body fat percentage. • Plan for regular monitoring of your body weight, size, shape, and composition. Copyright © 2009 Pearson Education, Inc. Body Composition Concepts • Body Composition The relative amounts of lean tissue and fat tissue in your body. • Lean Body Mass Your body’s total amount of lean/fat-free tissue (muscles, bones, skin, organs, body fluids). • Fat Mass Body mass made up of fat tissue. Copyright © 2009 Pearson Education, Inc. Body Composition Concepts • Percent Body Fat The percentage of your total weight that is fat tissue (weight of fat divided by total body weight). • Essential Fat Fat necessary for normal body functioning (including in the brain, muscles, nerves, lungs, heart, and digestive and reproductive systems). • Storage Fat Nonessential fat stored in tissue near the body’s surface. Copyright © 2009 Pearson Education, Inc. Why Body Size, Shape, and Composition Matter Knowing body composition can help assess health risks. • More people are now overweight or obese. • Estimates of body composition provide useful information for determining disease risks. Evaluating body size and shape can motivate healthy behavior change. • Changes in body size and shape can be more useful measures of progress than body weight. Copyright © 2009 Pearson Education, Inc. Body Composition for Men and Women Copyright © 2009 Pearson Education, Inc. -

Relationship Between Body Image and Body Weight Control in Overweight ≥55-Year-Old Adults: a Systematic Review

International Journal of Environmental Research and Public Health Review Relationship between Body Image and Body Weight Control in Overweight ≥55-Year-Old Adults: A Systematic Review Cristina Bouzas , Maria del Mar Bibiloni and Josep A. Tur * Research Group on Community Nutrition and Oxidative Stress, University of the Balearic Islands & CIBEROBN (Physiopathology of Obesity and Nutrition CB12/03/30038), E-07122 Palma de Mallorca, Spain; [email protected] (C.B.); [email protected] (M.d.M.B.) * Correspondence: [email protected]; Tel.: +34-971-1731; Fax: +34-971-173184 Received: 21 March 2019; Accepted: 7 May 2019; Published: 9 May 2019 Abstract: Objective: To assess the scientific evidence on the relationship between body image and body weight control in overweight 55-year-old adults. Methods: The literature search was conducted ≥ on MEDLINE database via PubMed, using terms related to body image, weight control and body composition. Inclusion criteria were scientific papers, written in English or Spanish, made on older adults. Exclusion criteria were eating and psychological disorders, low sample size, cancer, severe diseases, physiological disorders other than metabolic syndrome, and bariatric surgery. Results: Fifty-seven studies were included. Only thirteen were conducted exclusively among 55-year-old ≥ adults or performed analysis adjusted by age. Overweight perception was related to spontaneous weight management, which usually concerned dieting and exercising. More men than women showed over-perception of body image. Ethnics showed different satisfaction level with body weight. As age increases, conformism with body shape, as well as expectations concerning body weight decrease. Misperception and dissatisfaction with body weight are risk factors for participating in an unhealthy lifestyle and make it harder to follow a healthier lifestyle. -

Refining the Abdominoplasty for Better Patient Outcomes

Refining the Abdominoplasty for Better Patient Outcomes Karol A Gutowski, MD, FACS Private Practice University of Illinois & University of Chicago Refinements • 360o assessment & treatment • Expanded BMI inclusion • Lipo-abdominoplasty • Low scar • Long scar • Monsplasty • No “dog ears” • No drains • Repurpose the fat • Rapid recovery protocols (ERAS) What I Do and Don’t Do • “Standard” Abdominoplasty is (almost) dead – Does not treat the entire trunk – Fat not properly addressed – Problems with lateral trunk contouring – Do it 1% of cases • Solution: 360o Lipo-Abdominoplasty – Addresses entire trunk and flanks – No Drains & Rapid Recovery Techniques Patient Happy, I’m Not The Problem: Too Many Dog Ears! Thanks RealSelf! Take the Dog (Ear) Out! Patients Are Telling Us What To Do Not enough fat removed Not enough skin removed Patient Concerns • “Ideal candidate” by BMI • Pain • Downtime • Scar – Too high – Too visible – Too long • Unnatural result – Dog ears – Mons aesthetics Solutions • “Ideal candidate” by BMI Extend BMI range • Pain ERAS protocols + NDTT • Downtime ERAS protocols + NDTT • Scar Scar planning – Too high Incision markings – Too visible Scar care – Too long Explain the need • Unnatural result Technique modifications – Dog ears Lipo-abdominoplasty – Mons aesthetics Mons lift Frequent Cause for Reoperation • Lateral trunk fullness – Skin (dog ear), fat, or both • Not addressed with anterior flank liposuction alone – need posterior approach • Need a 360o approach with extended skin excision (Extended Abdominoplasty) • Patient -

Depth Assisted Composition of Synthetic and Real 3D Scenes

©2016 Society for Imaging Science and Technology DOI: 10.2352/ISSN.2470-1173.2016.21.3DIPM-399 Depth Assisted Composition of Synthetic and Real 3D Scenes Santiago Cortes, Olli Suominen, Atanas Gotchev; Department of Signal Processing; Tampere University of Technology; Tampere, Finland Abstract In media production, previsualization is an important step. It Prior work allows the director and the production crew to see an estimate of An extensive description and formalization of MR systems the final product during the filmmaking process. This work focuses can be found in [1]. After the release of the ARToolkit [2] by the in a previsualization system for composite shots, which involves Nara Institute of Technology, the research community got much real and virtual content. The system visualizes a correct better access to real time blend of real and virtual data. Most of the perspective view of how the real objects in front of the camera AR applications running on web browsers produced during the operator look placed in a virtual space. The aim is to simplify the early 2000s were developed using the ARToolkit. MR has evolved workflow, reduce production time and allow more direct control of with the technology used to produce it, and the uses for it have the end result. The real scene is shot with a time-of-flight depth multiplied. Nowadays, in the embedded system era, a single device camera, whose pose is tracked using a motion capture system. can have most of, if not all, the sensors and computing power to Depth-based segmentation is applied to remove the background run a good MR application. -

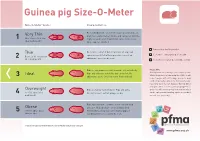

Guinea Pig Size-O-Meter Will 3 Abdominal Curve

Guinea pig Size-O- Meter Size-O-Meter Score: Characteristics: Each individual rib can be felt easily, hips and spine are Very Thin prominent and extremely visible and can be felt with the 1 More than 20% below slightest touch. Under abdominal curve can be seen. ideal body weight Spine appears hunched. Your pet is a healthy weight Thin Each rib is easily felt but not prominent. Hips and spine are easily felt with no pressure. Less of an Seek advice about your pet’s weight Between 10-20% below 2 abdominal curve can be seen. ideal body weight Seek advice as your pet could be at risk Ribs are not prominent and cannot be felt individually. Please note Hips and spine are not visible but can be felt. No Getting hands on is the key to this simple system. Ideal Whilst the pictures in Guinea pig Size-O-Meter will 3 abdominal curve. Chest narrower then hind end. help, it may be difficult to judge your pet’s body condition purely by sight alone. Some guinea pigs have long coats that can disguise ribs, hip bones and spine, while a short coat may highlight these Overweight Ribs are harder to distinguish. Hips and spine areas. You will need to gently feel your pet which 4 10 -15% above ideal difficult to feel. Feet not always visible. can be a pleasurable bonding experience for both body weight you and your guinea pig. Ribs, hips and spine cannot be felt or can with mild Obese pressure. No body shape can be distinguished. -

Know Dieting: Risks and Reasons to Stop

k"#w & ieting, -isks and -easons to 2top Dieting: Any attempts in the name of weight loss, “healthy eating” or body sculpting to deny your body of the essential, well-balanced nutrients and calories it needs to function to its fullest capacity. The Dieting Mindset: When dissatisfaction with your natural body shape or size leads to a decision to actively change your physical body weight or shape. Dieting has become a national pastime, especially for women. ∗ Americans spend more than $40 billion dollars a year on dieting and diet-related products. That’s roughly equivalent to the amount the U.S. Federal Government spends on education each year. ∗ It is estimated that 40-50% of American women are trying to lose weight at any point in time. ∗ One recent study revealed that 91% of women on a college campus had dieted. 22% dieted “often” or “always.” (Kurth et al., 1995). ∗ Researchers estimate that 40-60% of high school girls are on diets (Sardula et al., 1993; Rosen & Gross, 1987). ∗ Another study found that 46% of 9-11 year olds are sometimes or very often on diets (Gustafson-Larson & Terry, 1992). ∗ And, another researcher discovered that 42% of 1st-3rd grade girls surveyed reported wanting to be thinner (Collins, 1991). The Big Deal About Dieting: What You Should Know ∗ Dieting rarely works. 95% of all dieters regain their lost weight and more within 1 to 5 years. ∗ Dieting can be dangerous: ! “Yo-yo” dieting (repetitive cycles of gaining, losing, & regaining weight) has been shown to have negative health effects, including increased risk of heart disease, long-lasting negative impacts on metabolism, etc. -

(12) United States Patent (10) Patent No.: US 9,729,765 B2 Balakrishnan Et Al

USOO9729765B2 (12) United States Patent (10) Patent No.: US 9,729,765 B2 Balakrishnan et al. (45) Date of Patent: Aug. 8, 2017 (54) MOBILE VIRTUAL CINEMATOGRAPHY A63F 13/70 (2014.09); G06T 15/20 (2013.01); SYSTEM H04N 5/44504 (2013.01); A63F2300/1093 (71) Applicant: Drexel University, Philadelphia, PA (2013.01) (58) Field of Classification Search (US) None (72) Inventors: Girish Balakrishnan, Santa Monica, See application file for complete search history. CA (US); Paul Diefenbach, Collingswood, NJ (US) (56) References Cited (73) Assignee: Drexel University, Philadelphia, PA (US) PUBLICATIONS (*) Notice: Subject to any disclaimer, the term of this Lino, C. et al. (2011) The Director's Lens: An Intelligent Assistant patent is extended or adjusted under 35 for Virtual Cinematography. ACM Multimedia, ACM 978-1-4503 U.S.C. 154(b) by 651 days. 0616-Apr. 11, 2011. Elson, D.K. and Riedl, M.O (2007) A Lightweight Intelligent (21) Appl. No.: 14/309,833 Virtual Cinematography System for Machinima Production. Asso ciation for the Advancement of Artificial Intelligence. Available (22) Filed: Jun. 19, 2014 from www.aaai.org. (Continued) (65) Prior Publication Data US 2014/O378222 A1 Dec. 25, 2014 Primary Examiner — Maurice L. McDowell, Jr. (74) Attorney, Agent, or Firm — Saul Ewing LLP: Related U.S. Application Data Kathryn Doyle; Brian R. Landry (60) Provisional application No. 61/836,829, filed on Jun. 19, 2013. (57) ABSTRACT A virtual cinematography system (SmartWCS) is disclosed, (51) Int. Cl. including a mobile tablet device, wherein the mobile tablet H04N 5/222 (2006.01) device includes a touch-sensor Screen, a first hand control, a A63F 3/65 (2014.01) second hand control, and a motion sensor. -

3D Optical Imaging As a New Tool for the Objective Evaluation of Body Shape Changes After Bariatric Surgery

Obesity Surgery https://doi.org/10.1007/s11695-020-04408-4 ORIGINAL CONTRIBUTIONS 3D Optical Imaging as a New Tool for the Objective Evaluation of Body Shape Changes After Bariatric Surgery Andreas Kroh1 & Florian Peters2 & Patrick H. Alizai 1 & Sophia Schmitz1 & Frank Hölzle2 & Ulf P. Neumann1,3 & Florian T. Ulmer1,3 & Ali Modabber2 # The Author(s) 2020 Abstract Introduction Bariatric surgery is the most effective treatment option for obesity. It results in massive weight loss and improvement of obesity-related diseases. At the same time, it leads to a drastic change in body shape. These body shape changes are mainly measured by two-dimensional measurement methods, such as hip and waist circumference. These measurement methods suffer from significant measurement errors and poor reproducibility. Here, we present a three- dimensional measurement tool of the torso that can provide an objective and reproducible source for the detection of body shape changes after bariatric surgery. Material and Methods In this study, 25 bariatric patients were scanned with Artec EVA®, an optical three-dimensional mobile scanner up to 1 week before and 6 months after surgery. Data were analyzed, and the volume of the torso, the abdominal circumference and distances between specific anatomical landmarks were calculated. The results of the processed three-dimensional measurements were compared with clinical data concerning weight loss and waist circumference. Results The volume of the torso decreased after bariatric surgery. Loss of volume correlated strongly with weight loss 6 months after the operation (r =0.6425,p = 0.0005). Weight loss and three-dimensional processed data correlated better (r =0.6121,p = 0.0011) than weight loss and waist circumference measured with a measuring tape (r =0.3148,p =0.1254). -

Weight Loss Behavior in Obese Patients Before Seeking Professional Treatment in Taiwan

Obesity Research & Clinical Practice (2009) 3, 35—43 ORIGINAL ARTICLE Weight loss behavior in obese patients before seeking professional treatment in Taiwan Tsan-Hon Liou a,b, Nicole Huang c, Chih-Hsing Wu d, Yiing-Jenq Chou c, Yiing-Mei Liou e, Pesus Chou c,∗ a Department of Physical Medicine and Rehabilitation, Taipei Medical University-Wan Fang Hospital, Taipei, Taiwan b Graduate Institute of Injury Prevention and Control, College of Public Health and Nutrition, Taipei Medical University, Taiwan c Community Medicine Research Center and Institute of Public Health, National Yang-Ming University, Taipei, Taiwan d Department of Family Medicine, National Cheng Kung University Hospital, Tainan, Taiwan e Institute of Community Health Nursing, National Yang-Ming University, Taipei, Taiwan Received 13 March 2008; received in revised form 22 September 2008; accepted 14 October 2008 KEYWORDS Summary Obesity; Objective: To assess weight loss strategies and behaviors in obese patients prior to Anti-obesity drug; seeking professional obesity treatment in Taiwan. Weight expectation; Design: A cross-sectional study was conducted between 1 July 2004 and 30 June Weight loss behavior 2005. Setting and subjects: Obese subjects (1060; 791 females; age, ≥18 years; median BMI, 29.5 kg/m2) seeking treatment in 18 Taiwan clinics specializing in obesity treat- ment were enrolled and completed a self-administered questionnaire. Results: Of the 1060 subjects, the prevalence of anti-obesity drug use was 50.8%; more females than males used anti-obesity drugs (53.6% vs. 42.4%). Approximately one-third of normal weight or overweight subjects with no concomitant obesity- related risk factors took anti-obesity drugs. -

Places to Ride in the Northwest 8Places to Ride in the Northwest

PlacesPlaces toto RideRide 88 Chris Gilbert inin thethe NorthwestNorthwest Photo Christian Pondella HOW December 2004 TO Launch from a Boat 12 Plus: Florida Hurricanes, USA $5.95 Oregon Snowkiting & Exploring Mauritius 0 7447004392 8 2 3 On a whole other level. Guillaume Chastagnol. Photo Bertrand Boone Contents December 2004 Features 38 Northern Exposure Brian Wheeler takes us to eight of his favorite places to ride in the Northwest. 48 Exposed A photo essay of the kiteboarding lifestyle. 46 The Legend of Jim Bones Adam Koch explores the life of veteran waterman Jim Bones. 58 Journey into the Indian Ocean: The Island of Mauritius Felix Pivec, Julian Sudrat and José Luengo travel to the island just off Africa. DepartmentsDepartments 14 Launch Ten of the world’s best riders get together in Cape Hatteras to build the world’s biggest kiteboarding rail. 34 Close-up Bertrand Fleury and Bri Chmel 70 Analyze This Up close and personal with some of the latestlatest gear.gear. 72 Academy 10 Skim Board Tips to Help you Rip Cover Shot Tweak McCore Chris Gilbert launches off the USS Lexington in Corpus Christie, Texas. 81 Tweak McCore Photo Christian Pondella Contents Shot California’s C-street local Corky Cullen stretching out a Japan air. Photo Jason Wolcott Photo Tracy Kraft Double Check My phone rang the other day and it was my best friend telling me the wind was cranking at our local spot. Running around like a chicken with its head cut off, I threw my 15-meter kite and gear into the truck and made the quick rush down to the beach through 30 minutes of traffic. -

A Cost Effective, Accurate Virtual Camera System for Games, Media Production and Interactive Visualisation Using Game Motion Controllers

EG UK Theory and Practice of Computer Graphics (2013) Silvester Czanner and Wen Tang (Editors) A Cost Effective, Accurate Virtual Camera System for Games, Media Production and Interactive Visualisation Using Game Motion Controllers Matthew Bett1, Erin Michno2 and Dr Keneth B. McAlpine1 1University of Abertay Dundee 2Quartic Llama ltd, Dundee Abstract Virtual cameras and virtual production techniques are an indispensable tool in blockbuster filmmaking but due to their integration into commercial motion-capture solutions, they are currently out-of-reach to low-budget and amateur users. We examine the potential of a low budget high-accuracy solution to create a simple motion capture system using controller hardware designed for video games. With this as a basis, a functional virtual camera system was developed which has proven usable and robust for commercial testing. Categories and Subject Descriptors (according to ACM CCS): I.3.6 [Computer Graphics]: Methodology and Techniques—Interaction Techniques I.3.7 [Information Interfaces and Presentations]: Three-Dimensional Graphics and Realism —Virtual reality 1. Introduction sult is a loss of truly ’organic’ camera shots being created with ease in the production process. In recent years, advances in computer graphics, motion cap- Virtual camera systems allow the user to apply conven- ture hardware and a need to give greater directorial control tional camera-craft within the CGI filmmaking process. A over the digital filmmaking process have given rise to the physical device similar to a conventional camera is used by world of virtual production as a tool in film and media pro- the camera operator with a monitor acting as the view-finder duction [AUT09].