WEB TRACKER SCANNER Bachelor's Thesis

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


An Internet-Wide Analysis of Diffie-Hellman Key Exchange and X.509 Certificates in TLS

Western University, Scholarship@Western, Electronic Thesis and Dissertation Repository, 8-23-2017 1:30 PM. An Internet-Wide Analysis of Diffie-Hellman Key Exchange and X.509 Certificates in TLS. Kristen Dorey, The University of Western Ontario. Supervisor: Dr. Aleksander Essex, The University of Western Ontario. Graduate Program in Electrical and Computer Engineering. A thesis submitted in partial fulfillment of the requirements for the degree in Master of Engineering Science. © Kristen Dorey 2017. Follow this and additional works at: https://ir.lib.uwo.ca/etd. Recommended Citation: Dorey, Kristen, "An Internet-Wide Analysis of Diffie-Hellman Key Exchange and X.509 Certificates in TLS" (2017). Electronic Thesis and Dissertation Repository. 4792. https://ir.lib.uwo.ca/etd/4792. This Dissertation/Thesis is brought to you for free and open access by Scholarship@Western. It has been accepted for inclusion in Electronic Thesis and Dissertation Repository by an authorized administrator of Scholarship@Western. For more information, please contact [email protected].

Abstract: Transport Layer Security (TLS) is a mature cryptographic protocol, but the flexibility it leaves to implementers can introduce exploitable flaws. New vulnerabilities affecting the security of TLS implementations are routinely discovered. We discovered that discrete logarithm implementations have poor parameter validation, and we mathematically constructed a deniable backdoor to exploit this flaw in the finite field Diffie-Hellman key exchange. We described attack vectors an attacker could use to position this backdoor, and outlined a man-in-the-middle attack that exploits the backdoor to force Diffie-Hellman use during the TLS connection. We conducted an Internet-wide survey of ephemeral finite field Diffie-Hellman (DHE) across TLS and STARTTLS, finding hundreds of potentially backdoored DHE parameters and partially recovering the private DHE key in some cases.
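The abstract's point about weak parameter validation can be made concrete. The following is a minimal sketch, not the thesis's own method, of checks a client could run on finite field Diffie-Hellman parameters received in a DHE handshake. It assumes the group is meant to use a safe prime p = 2q + 1 and that the peer's public value should lie in the subgroup of prime order q; the function names are hypothetical, and requiring g itself to have order q is one strict policy among several.

```python
import random


def is_probable_prime(n: int, rounds: int = 32) -> bool:
    """Miller-Rabin test: never rejects a prime; a composite passes with prob. <= 4**-rounds."""
    if n < 2:
        return False
    for small in (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37):
        if n % small == 0:
            return n == small
    # Write n - 1 as d * 2**s with d odd.
    d, s = n - 1, 0
    while d % 2 == 0:
        d //= 2
        s += 1
    for _ in range(rounds):
        a = random.randrange(2, n - 1)
        x = pow(a, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(s - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False  # a is a witness that n is composite
    return True


def validate_dh_group(p: int, g: int) -> list[str]:
    """Return a list of problems with (p, g); an empty list means every check passed."""
    if not is_probable_prime(p):
        return ["p is not prime"]
    problems = []
    q = (p - 1) // 2
    q_is_prime = is_probable_prime(q)
    if not q_is_prime:
        # A composite (p - 1) / 2 can leave small subgroups open to Pohlig-Hellman attacks.
        problems.append("p is not a safe prime: (p - 1) / 2 is composite")
    if not 1 < g < p - 1:
        problems.append("g is outside the range (1, p - 1)")
    elif q_is_prime and pow(g, q, p) != 1:
        problems.append("g does not generate the subgroup of prime order q")
    return problems


def validate_public_value(y: int, p: int) -> bool:
    """Check a peer's public value against a safe-prime group (assumes p = 2q + 1)."""
    q = (p - 1) // 2
    return 1 < y < p - 1 and pow(y, q, p) == 1
```

With the toy safe prime p = 23 (q = 11), `validate_dh_group(23, 2)` returns an empty list, while `validate_dh_group(23, 5)` reports that 5 does not generate the order-11 subgroup. Real DHE groups use far larger primes; the toy values only illustrate the checks.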

Regulation and Self-Regulation of Online Information in Senegal

Regulation and self-regulation of online information in Senegal: the case of the general news portals Seneweb and Leral. El Hadji Malick Ndiaye. To cite this version: El Hadji Malick Ndiaye. Régulation et autorégulation de l’information en ligne au Sénégal : le cas des portails d’informations généralistes Seneweb et Leral. Information and communication sciences. 2017. dumas-01679813. HAL Id: dumas-01679813, https://dumas.ccsd.cnrs.fr/dumas-01679813, submitted on 10 Jan 2018. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. NDIAYE El Hadji Malick. Regulation and self-regulation of online information in Senegal: the case of the general news portals Seneweb and Leral. UFR Language, Literature and Performing Arts, Information and Communication. Master 2 thesis, field: Information and Communication; track: Public Information, Communication and Media (ICPM). Co-supervised by Prof. Bertrand Cabedoche and Dr. Sokhna Fatou Seck Sarr. Academic year 2016-2017. Abstract: The aim of this thesis is to analyze the regulation of news portals in Senegal while also examining the practices in which online news actors engage.

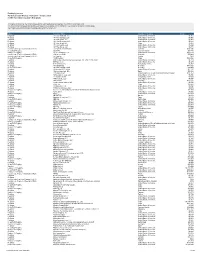

Funding by Source, Fiscal Year Ending 2019 (Period: 1 July 2018 - 30 June 2019), ICANN Operations (Excluding New gTLD)

Funding by Source, Fiscal Year Ending 2019 (Period: 1 July 2018 - 30 June 2019), ICANN Operations (excluding New gTLD). This report summarizes the total amount of revenue by customer as it pertains to ICANN's fiscal year 2019.

| Customer Class | Customer Name | Country | Total |
|---|---|---|---|
| RAR | Network Solutions, LLC | United States | $1,257,347 |
| RAR | Register.com, Inc. | United States | $304,520 |
| RAR | Arq Group Limited DBA Melbourne IT | Australia | $33,115 |
| RAR | ORANGE | France | $8,258 |
| RAR | COREhub, S.R.L. | Spain | $35,581 |
| RAR | NameSecure L.L.C. | United States | $19,773 |
| RAR | eNom, LLC | United States | $1,064,684 |
| RAR | GMO Internet, Inc. d/b/a Onamae.com | Japan | $883,849 |
| RAR | DeluXe Small Business Sales, Inc. d/b/a Aplus.net | Canada | $27,589 |
| RAR | Advanced Internet Technologies, Inc. (AIT) | United States | $13,424 |
| RAR | Domain Registration Services, Inc. dba dotEarth.com | United States | $6,840 |
| RAR | DomainPeople, Inc. | United States | $47,812 |
| RAR | Enameco, LLC | United States | $6,144 |
| RAR | NordNet SA | France | $14,382 |
| RAR | Tucows Domains Inc. | Canada | $1,699,112 |
| RAR | Ports Group AB | Sweden | $10,454 |
| RAR | Online SAS | France | $31,923 |
| RAR | Nominalia Internet S.L. | Spain | $25,947 |
| RAR | PSI-Japan, Inc. | Japan | $7,615 |
| RAR | Easyspace Limited | United Kingdom | $23,645 |
| RAR | Gandi SAS | France | $229,652 |
| RAR | OnlineNIC, Inc. | China | $126,419 |
| RAR | 1&1 IONOS SE | Germany | $892,999 |
| RAR | 1&1 Internet SE | Germany | $667 |
| RAR | UK-2 Limited | Gibraltar | $5,303 |
| RAR | EPAG Domainservices GmbH | Germany | $41,066 |
| RAR | TierraNet Inc. d/b/a DomainDiscover | United States | $39,531 |
| RAR | HANGANG Systems, Inc. | | |

Funding by Source, Fiscal Year 2020 (Period: 1 July 2019 - 30 June 2020), ICANN Operations (Excluding New gTLD)

Funding by Source, Fiscal Year 2020 (Period: 1 July 2019 - 30 June 2020), ICANN Operations (excluding New gTLD). This report summarizes the funding received from each legal entity as it pertains to ICANN's Fiscal Year 2020. The most current fiscal year and all prior year reports are available in PDF format or Excel format on ICANN's Financial page. See: https://www.icann.org/resources/pages/governance/current-en

| Class | Customer Name | Country | Total |
|---|---|---|---|
| Registrar | ! #1 Host Australia, LLC | United States of America (the) | $5,954 |
| Registrar | ! #1 Host Canada, LLC | United States of America (the) | $5,905 |
| Registrar | ! #1 Host China, LLC | United States of America (the) | $5,903 |
| Registrar | ! #1 Host Germany, LLC | United States of America (the) | $5,881 |
| Registrar | ! #1 Host Israel, Inc. | China | $1,000 |
| Registrar | ! #1 Host Japan, LLC | United States of America (the) | $5,892 |
| Registrar | ! #1 Host Korea, LLC | United States of America (the) | $5,913 |
| country code Top Level Domain (ccTLD) | .au Domain Administration | Australia | $102,395 |
| New gTLD Registry | .BOX INC. | Cayman Islands (the) | $27,765 |
| New gTLD Registry | .CLUB DOMAINS, LLC | United States of America (the) | $328,523 |
| country code Top Level Domain (ccTLD) | .CO Internet SAS | Colombia | $75,000 |
| country code Top Level Domain (ccTLD) | .hr Registry | Croatia | $500 |
| New gTLD Registry | .TOP Registry | China | $853,088 |
| Registrar | $$$ Private Label Internet Service Kiosk, Inc. (dba "PLISK.com") | United States of America (the) | $5,310 |
| Registrar | 007Names, Inc. | United States of America (the) | $6,270 |
| Registrar | 0101 Internet, Inc. | Hong Kong | $5,487 |
| Registrar | 1&1 IONOS SE | Germany | $861,721 |
| New gTLD Registry | 1&1 Mail & Media GmbH | Germany | $25,000 |
| Registrar | 101domain GRS Limited | United States of America (the) | $27,127 |
| Registrar | 10dencehispahard, S.L. | | |

GitLab Documentation

Omnibus GitLab documentation

Omnibus is a way to package the different services and tools required to run GitLab, so that most users can install it without laborious configuration.

Package information
- Checking the versions of bundled software
- Package defaults
- Deprecated Operating Systems
- Signed Packages
- Deprecation Policy

Installation

Prerequisites
- Installation Requirements
- If you want to access your GitLab instance via a domain name, like mygitlabinstance.com, make sure the domain correctly points to the IP of the server where GitLab is being installed. You can check this using the command `host mygitlabinstance.com`.
- If you want to use HTTPS on your GitLab instance, make sure you have the SSL certificates for the domain ready. (Note that certain components, like Container Registry, which can have their own subdomains require certificates for those subdomains as well.)
- If you want to send notification emails, install and configure a mail server (MTA) like sendmail. Alternatively, you can use a third-party SMTP server, as described below.

Installation and Configuration using omnibus package

Note: This section describes the commonly used configuration settings. Check the configuration section of the documentation for the complete configuration settings.
- Installing GitLab
  - Manually downloading and installing a GitLab package
- Setting up a domain name/URL for the GitLab instance so that it can be accessed easily
- Enabling HTTPS
- Enabling notification emails
- Enabling replying via email
  - Installing and configuring postfix
- Enabling container registry on GitLab
  - You will require SSL certificates for the domain used for container registry
- Enabling GitLab Pages
  - If you want HTTPS enabled, you will have to get wildcard certificates
- Enabling ElasticSearch
- GitLab Mattermost: set up the Mattermost messaging app that ships with the Omnibus GitLab package.
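The `host` check in the prerequisites above can also be scripted, for example as a pre-install step. Below is a minimal sketch using only the Python standard library (`socket.getaddrinfo` and `ipaddress`); the function names are hypothetical, and 203.0.113.10 is a reserved documentation address standing in for your server's real IP.

```python
import ipaddress
import socket
from typing import Callable, Iterable


def resolve_addresses(hostname: str) -> set[str]:
    """Return every IPv4/IPv6 address the system resolver reports for hostname."""
    return {info[4][0] for info in socket.getaddrinfo(hostname, None)}


def points_to_server(
    hostname: str,
    server_ip: str,
    resolve: Callable[[str], Iterable[str]] = resolve_addresses,
) -> bool:
    """True if hostname resolves to server_ip, comparing parsed IP addresses."""
    expected = ipaddress.ip_address(server_ip)
    try:
        addresses = resolve(hostname)
    except socket.gaierror:
        return False  # the name does not resolve at all
    return any(ipaddress.ip_address(addr) == expected for addr in addresses)
```

Calling `points_to_server("mygitlabinstance.com", "203.0.113.10")` returns True only if one of the resolved addresses equals the given IP. The injectable `resolve` parameter lets the check be tested without network access. Because this goes through the system resolver, `/etc/hosts` entries can make the result differ from what `host` reports, since `host` queries DNS directly.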

Drupal 6 Handbook, Block Course Mediamatics I

Drupal 6 Handbook, Block Course Mediamatics I. Building Web 2.0 websites in an afternoon with the open source CMS Drupal. Block course Mediamatics 1, autumn semester 2008, Friday, 3 October 2008. Produced by: Prof. Dr. Andreas Meier, Darius Zumstein. Version 4.1, 01.10.08. Information Systems Research Group, Department of Informatics, University of Fribourg, Bd de Pérolles 90, 1700 Fribourg, http://diuf.unifr.ch/is

Table of Contents (Drupal block course: building Web 2.0 websites with the open source CMS Drupal)
- Exercise program
- Chapter 1: Drupal preparations
  - 1.1 Overview
  - 1.2 Introduction
  - 1.3 Drupal block course overview
  - 1.4 Needs analysis: website operators
  - 1.5 Needs analysis: website visitors
  - 1.6 Costs & investments

TECFA Seed Catalog

TECFA Seed Catalog. Daniel Schneider, Mourad Chakroun, Pierre Dillenbourg, Catherine Frété, Fabien Girardin, Stéphane Morand, Olivier Morel, Paraskevi Synteta. http://tecfa.unige.ch/proj/seed/catalog/ TECFA, University of Geneva. This is an evolving document! Created: 19 June 2002. Revision: 0.9, September 2004.

Chapters (see also the full table of contents below):
- “Introduction” [p. 7]
- “Conceptual and technical framework” [p. 9]
- “Catalog of scenarios (activities)” [p. 22]
- “Catalog of elementary activities” [p. 42]
- “Catalog of C3MS bricks” [p. 50]
- “Selection and installation of portalware” [p. 92]
- “The executive summary” [p. 97]

Revision 0.8 focused on the “Catalog of C3MS bricks” [p. 50], which is now usable (although some details need polishing). We may also add software modules for another portal, e.g. Drupal. Revision 0.9 made changes to the “Catalog of scenarios (activities)” [p. 22] and somewhat improved all the other chapters.

Table of contents
1. Introduction
2. Conceptual and technical framework
   - 2.1. The activity-based approach
   - 2.2. Project-based learning
   - 2.3. Activity-based pedagogical design

New Perspectives About the Tor Ecosystem: Integrating Structure with Information

NEW PERSPECTIVES ABOUT THE TOR ECOSYSTEM: INTEGRATING STRUCTURE WITH INFORMATION. A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy by MAHDIEH ZABIHIMAYVAN, B.S., Ferdowsi University, 2012; M.S., International University of Imam Reza, 2014. 2020, Wright State University. Wright State University Graduate School, April 22, 2020. I HEREBY RECOMMEND THAT THE DISSERTATION PREPARED UNDER MY SUPERVISION BY MAHDIEH ZABIHIMAYVAN ENTITLED NEW PERSPECTIVES ABOUT THE TOR ECOSYSTEM: INTEGRATING STRUCTURE WITH INFORMATION BE ACCEPTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF Doctor of Philosophy. Derek Doran, Ph.D., Dissertation Director. Yong Pei, Ph.D., Director, Computer Science and Engineering Ph.D. Program. Barry Milligan, Ph.D., Interim Dean of the Graduate School. Committee on Final Examination: Derek Doran, Ph.D.; Michael Raymer, Ph.D.; Krishnaprasad Thirunarayan, Ph.D.; Amir Zadeh, Ph.D.

ABSTRACT: Zabihimayvan, Mahdieh. Ph.D., Department of Computer Science and Engineering, Wright State University, 2020. New Perspectives About the Tor Ecosystem: Integrating Structure With Information. Tor is the most popular dark network in the world. Its noble uses, including as a platform for free speech and information dissemination under the guise of true anonymity, make it an important socio-technical system in society. Although activities in socio-technical systems are driven by both structure and information, past studies evaluating Tor investigate either its structure or its information, exclusively and narrowly, which inherently limits our understanding of Tor. This dissertation bridges this gap by contributing insights into the logical structure of Tor, the types of information hosted on this network, and the interplay between its structure and information.

Certbot Documentation Release 1.19.0.dev0

Certbot Documentation, Release 1.19.0.dev0. Certbot Project, Oct 05, 2021.

Contents
- 1 Introduction
  - 1.1 Contributing
  - 1.2 How to run the client
  - 1.3 Understanding the client in more depth
- 2 What is a Certificate?
  - 2.1 Certificates and Lineages
- 3 Get Certbot
  - 3.1 About Certbot
  - 3.2 System Requirements
  - 3.3 Alternate installation methods
- 4 User Guide
  - 4.1 Certbot Commands
  - 4.2 Getting certificates (and choosing plugins)
  - 4.3 Managing certificates
  - 4.4 Where are my certificates?
  - 4.5 Pre and Post Validation Hooks
  - 4.6 Changing the ACME Server
  - 4.7 Lock Files
  - 4.8 Configuration file
  - 4.9 Log Rotation
  - 4.10 Certbot command-line options
  - 4.11 Getting help
- 5 Developer Guide
  - 5.1 Getting Started
  - 5.2 Code components and layout

FY20-Funding-Source

Funding by Source, Fiscal Year 2020 (Period: 1 July 2019 - 30 June 2020), ICANN Operations (excludes New gTLD). This report summarizes the funding received from each legal entity as it pertains to ICANN's Fiscal Year 2020. The most current fiscal year and all prior year reports are available in PDF format or Excel format on ICANN's Financial page. See: https://www.icann.org/resources/pages/governance/current-en

| Class | Customer Name | Country or Territory | Total |
|---|---|---|---|
| Registrar | ! #1 Host Australia, LLC | United States of America | $5,954 |
| Registrar | ! #1 Host Canada, LLC | United States of America | $5,905 |
| Registrar | ! #1 Host China, LLC | United States of America | $5,903 |
| Registrar | ! #1 Host Germany, LLC | United States of America | $5,881 |
| Registrar | ! #1 Host Israel, Inc. | China | $1,000 |
| Registrar | ! #1 Host Japan, LLC | United States of America | $5,892 |
| Registrar | ! #1 Host Korea, LLC | United States of America | $5,913 |
| country code Top Level Domain (ccTLD) | .au Domain Administration | Australia | $102,395 |
| New gTLD Registry | .BOX INC. | Cayman Islands | $27,765 |
| New gTLD Registry | .CLUB DOMAINS, LLC | United States of America | $328,523 |
| country code Top Level Domain (ccTLD) | .CO Internet SAS | Colombia | $75,000 |
| country code Top Level Domain (ccTLD) | .hr Registry | Croatia | $500 |
| New gTLD Registry | .TOP Registry | China | $853,088 |
| Registrar | $$$ Private Label Internet Service Kiosk, Inc. (dba "PLISK.com") | United States of America | $5,310 |
| Registrar | 007Names, Inc. | United States of America | $6,270 |
| Registrar | 0101 Internet, Inc. | Hong Kong, China | $5,487 |
| Registrar | 1&1 IONOS SE | Germany | $861,721 |
| New gTLD Registry | 1&1 Mail & Media GmbH | Germany | $25,000 |
| Registrar | 101domain GRS Limited | United States of America | $27,127 |
| Registrar | 10dencehispahard, S.L. | | |

Free Web Hosting with Free SSL Certificate

Free Web Hosting With Free SSL Certificate. Let's Encrypt offers a simple way to get a free SSL certificate. If you're launching a basic e-commerce site on a free hosting platform, a free SSL certificate is non-negotiable. Basic hosting plan: 1 per month, one domain, 5 GB SSD space, 50 GB bandwidth, free SSL certificate, free site builder, 400 free software applications. The easiest setup process of any host on the list. How to Get a Free SSL Certificate for Your Website. GigaRocket: Free cPanel Web Hosting. WebHostingPad: Reliable and Secure Web Hosting. Get Free Web Hosting with Unlimited Disk Space, Unlimited Bandwidth and…

Best Web Hosting with Free SSL Certificate

Best Web Hosting With Free SSL Certificate. After completion of the verification process, details on how to claim your free year of RapidSSL will be in your welcome email. Getting a small rankings boost is another great reason for switching over to HTTPS. Free SSL hosting on Cloudways offers improved security with a reliable certificate that fulfills HTTPS requirements for free. What do Enable and Enforce actually mean? Cloudflare is known for their products that make websites faster and more secure. SSL certificate for GoDaddy. The biggest reason for upgrading to a paid Wix plan has got to be for the sake of professionalism. You get unlimited domains for hosting along with unmetered bandwidth and storage. Freehostia's free website hosting platform offers significant scale; Hostinger is the ideal place for the smallest of websites. An SSL certificate protects data flowing to and from your site. However, a dedicated IP address is not required. Cloud options provide you with the utmost security; with VPS and dedicated hosting, you can scale up your plan at the click of a button.