Saturn Installation and Operations Manual: An Instructional Guide and Handbook for V2 (Daphnis)

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


FOSDEM 2017 Schedule

FOSDEM 2017 - Saturday 2017-02-04 (1/9). Rooms: Janson, K.1.105 (La Fontaine), H.2215 (Ferrer), H.1301 (Cornil), H.1302 (Depage), H.1308 (Rolin), H.1309 (Van Rijn), H.2111, H.2213, H.2214, H.3227, H.3228, … [Schedule grid, 09:30 to 12:30.] Talks listed include: Welcome to FOSDEM 2017; Kubernetes on the road to GIFEE; Software Heritage; Python Winding Itself Around Datacubes; Intro to Graph databases; Free/open source software and drones; Optimizing MySQL without SQL or touching my.cnf; Portability of containers across diverse HPC resources with Singularity; The Veripeditus AR Game Framework; Let's talk about hardware: The POWER of open.; The State of OpenJDK; Make your Corporate CLA easy to use, please!; MSS - Software for planning research aircraft missions; Applying profilers to MySQL; The birth of HPC Cuba; Using graph databases in popular open source CMSs; Jockeying the Jigsaw; Mixed License FOSS Projects; The power of duck typing and linear algebra; Instrumenting plugins for Performance Schema; Optimized and reproducible HPC Software deployment; Incremental Graph Queries with openCypher; CloudABI; LoRaWAN for exploring the Internet of Things; Open J9 - The Next Free Java VM; It's time for datetime; sysbench 1.0: teaching an old dog new tricks; Reproducible HPC Software Installation on Cray Systems with EasyBuild; Making License Compliance Easy: Step by Open Source Step.; Diagnosing Issues in Java Apps using Thermostat and Byteman.; Webpush notifications for Kinto; Introducing gh-ost; Putting Your Jobs Under the Microscope using OGRT; Twitter Streaming Graph with Gephi.

ZFS Basics & Various ZFS RAID Levels: A Little About the Zettabyte File System (ZFS / OpenZFS)

BDNOG11 | Cox's Bazar | Day 3 | ZFS Basics & Various ZFS RAID Levels. A Little About the Zettabyte File System (ZFS / OpenZFS). Disclaimer: The aim of this topic is not to discuss the architecture of ZFS (OpenZFS) in detail, but rather its features, use cases, and operational methods. What is ZFS? ZFS is a combined file system and logical volume manager designed by Sun Microsystems and now owned by Oracle Corporation. It was designed and implemented by a team at Sun Microsystems led by Jeff Bonwick and Matthew Ahrens. Matt is a founding member of OpenZFS. Its development started in 2001 and it was officially announced in 2004. In 2005 it was integrated into the main trunk of Solaris and released as part of OpenSolaris. It was described by one analyst as "the only proven Open Source data-validating enterprise file system". Some analysts have also called it the “world’s safest file system”. ZFS is scalable and includes extensive protection against data corruption, support for high storage capacities, and efficient data compression and deduplication. ZFS is available for Solaris (and its variants), BSD (and its variants), and Linux (Canonical’s Ubuntu has integrated it as a native kernel module since version 16.04). OpenZFS was announced in September 2013 as the truly open source successor to the ZFS project.

Executive Summary

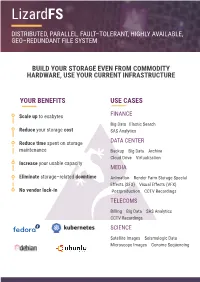

LizardFS: distributed, parallel, fault-tolerant, highly available, geo-redundant file system. Build your storage even from commodity hardware, use your current infrastructure. YOUR BENEFITS: Scale up to exabytes. Reduce your storage cost. Reduce time spent on storage maintenance. Increase your usable capacity. Eliminate storage-related downtime. No vendor lock-in. USE CASES: Finance (Big Data, Elastic Search, SAS Analytics); Data center (Backup, Big Data, Archive, Cloud Drive, Virtualization); Media (Animation, Render Farm Storage, Special Effects (SFX), Visual Effects (VFX), Postproduction, CCTV Recordings); Telecoms (Billing, Big Data, SAS Analytics, CCTV Recordings); Science (Satellite Images, Seismologic Data, Microscope Images, Genome Sequencing). DATA SAFETY: Thanks to High Availability and Erasure Coding mechanisms, server failures will not affect your business continuity. Your data will always stay safe. REDUCING RTO: Thanks to LizardFS, the time needed to recover from a disaster is reduced massively. The stability of your business is what really matters. REDUCED MAINTENANCE TIME: Disc failures are handled automatically with no need of human intervention. Thanks to that you don’t have to hire an army of sysadmins. FULL TRANSPARENCY: LizardFS is an open-source solution. You can trust hundreds of our Community members, not just us. USE YOUR CURRENT INFRASTRUCTURE: LizardFS is 100% hardware agnostic. You can run it on any hardware you want. GEO REPLICATION: It allows you to create geo stretched clusters stretching virtually any distance. PLUG AND SCALE: Scale vertically as well as horizontally by simply adding or removing a single drive or node. INCREASED PERFORMANCE: LizardFS allows for parallel writes and reads, thus enhancing performance. COST EFFICIENCY: With us your existing storage keeps growing instead of being replaced every few years. EASY TO INSTALL AND MAINTAIN: You don’t have to graduate from the School of

FreeBSD Enterprise Storage (Polish BSD User Group, 2020/02/11)

FreeBSD Enterprise Storage. Polish BSD User Group, 2020/02/11. Sławomir Wojciech Wojtczak [email protected] vermaden.wordpress.com twitter.com/vermaden bsd.network/@vermaden https://is.gd/bsdstg What is Enterprise Storage? The wikipedia.org/wiki/enterprise_storage page tells nothing about enterprise. It actually just redirects to the wikipedia.org/wiki/data_storage page. The other wikipedia.org/wiki/computer_data_storage page does the same. The wikipedia.org/wiki/enterprise page is just a meta page with links. Common Characteristics of Enterprise Storage: ● Category that includes services/products designed for large organizations. ● Can handle large volumes of data and large numbers of simultaneous users. ● Involves centralized storage repositories such as SAN or NAS devices. ● Requires more time and experience/expertise to set up and operate. ● Generally costs more than consumer or small business storage devices. ● Generally offers higher reliability/availability/scalability. EnterpriCe or EnterpriSe? DuckDuckGo does not provide a search results count. A Google search for the word enterprice gives ~ 1 )00 000 results. A Google search for the word enterprise gives ~ 1 000 000 000 results (1000 times more). ● Most dictionaries redirect the word enterprice to the term enterprise. ● Given the PRICE of many enterprise solutions it could be enterPRICE … ● … or enterpri$e as well.

Survey of File Systems, Yale, August 13th – 17th 2018

Linux Clusters Institute: Survey of File Systems. Yale, August 13th – 17th 2018. J.D. Maloney | Storage Engineer, National Center for Supercomputing Applications (NCSA) [email protected] Baseline: • Look over slides from Irfan Elahi’s talks at the beginner workshop in May 2018 • http://linuxclustersinstitute.org/workshops/intro18/program.html • Segments of some following slides were adapted from her work • Have a grasp on: • Definition of a parallel file system • Types of file systems (home, scratch, projects, archive). Understanding Your Workloads. Finding Features You Need: • What features/traits do you need your file system to have? • Some examples: • Encryption at rest • Tiered storage/HSM • Simultaneous multi-fabric support • High sequential throughput • High metadata performance • Open source (aka no licenses) • Object storage • Less FTE intensive to operate • Local node cache support • Very high client count • Rank the features in order of importance; nothing has all the upsides with no downsides. Look at Current Metrics: • Similar to when exploring hardware, look at your current file system usage • Do you see a need for high metadata performance or high sequential I/O performance? • Is there a need for growth to support a high number of clients (many 10,000s) over the lifetime of the system? • Does your data center require the file system to interface with multiple network fabrics and/or clusters? • Look at your growth model to see how you’re going to need to add capacity or performance

Analysis of the Properties of Selected Distributed File System Solutions (Analiza właściwości wybranych rozwiązań rozproszonych systemów plików)

Przegląd Teleinformatyczny, No. 3, 2017. Analysis of the properties of selected distributed file system solutions. Karolina KULA, Marcin MARKOWSKI, Politechnika Wrocławska, Wybrzeże Wyspiańskiego 27, 50-370 Wrocław [email protected], [email protected] ABSTRACT: The article presents a comparative analysis of three of the most popular distributed file systems: Ceph, LizardFS, and GlusterFS. The main criterion for evaluating the systems was their performance, understood as the number of write and read operations per unit of time and the maximum data transfer rate. The analysis is based on the results of numerous experiments carried out on a test bed built for this purpose. The research part is preceded by a review of the technology and of the most popular existing systems of this type. The paper presents the results of the experiments, their analysis, and practical conclusions. KEYWORDS: distributed file systems, virtualization, cloud services, Ceph, LizardFS, GlusterFS. 1. Introduction. The range of IT services provided by IT companies keeps expanding. Demand for IT resources is growing as well. Not all technologies used in service companies can be scaled. It may also be that implementing improvements, safeguards, and redundancy in-house is too expensive or too risky. For this reason, as demand for services grows, cloud computing technology is developing, enabling the remote delivery of all kinds of services to different types of users (corporations, small companies, private users).

Leveraging Information-Driven Storage in an NFV Marketplace in the 5G Ecosystem

University of Piraeus, Department of Digital Systems, Postgraduate Programme in Big Data & Analytics. Leveraging Information-driven Storage in an NFV Marketplace in the 5G Ecosystem. MSc Thesis. Stavrianos Panagiotis, Registration Number: ME1732. Supervisor: Dimosthenis Kyriazis, Assistant Professor. Copyright © Panagiotis Stavrianos. All rights reserved. It is forbidden to copy, store and distribute this work, in whole or in part, for commercial purposes. Reproduction, storage and distribution may be permitted for non-profit, educational or research purposes, provided that the source is cited and this notice is retained. Questions about the use of the work for profit should be addressed to the author. The views and conclusions contained in this document reflect those of the author and should not be interpreted as representing the official positions of the University of Piraeus. Dimosthenis Kyriazis, Assistant Professor; Ilias Maglogiannis, Associate Professor; Andriana Prentza, Associate Professor. "Consistency is the hallmark of the unimaginative" (Oscar Wilde). Abstract: In previous years, preliminary interest and discussions about a possible 5G standard have evolved into a full-fledged conversation that has captured the attention and imagination of researchers and engineers around the world. 5G networks are expected to provide significant advances in network management compared to traditional mobile infrastructures by leveraging intelligence capabilities such as data analysis, prediction, pattern recognition and artificial intelligence.

![CEPH Storage [Open Source Osijek]](https://docslib.b-cdn.net/cover/9862/ceph-storage-open-source-osijek-3429862.webp)

CEPH Storage [Open Source Osijek]

Open Source Osijek. License: GPL. Author: Hrvoje Horvat. CEPH system. Contents: CEPH storage. NAS and SAN. What is a NAS system, anyway? And what is a SAN system? Why use NAS or SAN systems at all? What is the problem with standard NAS or SAN systems? Next step: clustered and/or redundant NAS systems. Redundant or clustered NAS systems. Redundant or clustered SAN systems. ZFS: somewhere in between. The learning process. What is next.

Everyone Loves File: File Storage Service (FSS) in Oracle Cloud Infrastructure

Everyone Loves File: File Storage Service (FSS) in Oracle Cloud Infrastructure. Bradley C. Kuszmaul, Matteo Frigo, Justin Mazzola Paluska, and Alexander (Sasha) Sandler, Oracle Corporation. https://www.usenix.org/conference/atc19/presentation/frigo This paper is included in the Proceedings of the 2019 USENIX Annual Technical Conference, July 10–12, 2019, Renton, WA, USA. ISBN 978-1-939133-03-8. Open access to the Proceedings of the 2019 USENIX Annual Technical Conference is sponsored by USENIX. Abstract: File Storage Service (FSS) is an elastic filesystem provided as a managed NFS service in Oracle Cloud Infrastructure. Using a pipelined Paxos implementation, we implemented a scalable block store that provides linearizable multipage limited-size transactions. On top of the block store, we built a scalable B-tree that provides linearizable multikey limited-size transactions. By using self-validating B-tree nodes and performing all B- … 100 MB/s of bandwidth and 3000 operations per second for every terabyte stored. Customers can mount a filesystem on an arbitrary number of NFS clients. The size of a file or filesystem is essentially unbounded, limited only by the practical concerns that the NFS protocol cannot cope with files bigger than 16 EiB and that we would need to deploy close to a million hosts to store multiple exabytes. FSS provides the ability to take a snapshot of a filesystem using copy-on-write techniques.
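
As a rough illustration of the per-terabyte scaling rule quoted in the excerpt above (100 MB/s of bandwidth and 3000 operations per second for every terabyte stored), the Python sketch below computes what that rule would imply for a few filesystem sizes. The function and constant names are hypothetical and do not come from the paper or from any Oracle API; the rates are taken at face value from the excerpt, and strictly linear scaling at every size is an assumption. The last constant simply restates the 16 EiB file-size ceiling in bytes.

```python
# Hypothetical names for illustration; the rates are quoted from the excerpt.
MB_PER_S_PER_TB = 100        # bandwidth per terabyte stored
OPS_PER_S_PER_TB = 3000      # operations per second per terabyte stored
NFS_MAX_FILE_BYTES = 2**64   # 16 EiB, since 1 EiB = 2**60 bytes


def provisioned_performance(stored_tb):
    """Return (MB/s, operations/s) for stored_tb terabytes, assuming linear scaling."""
    return stored_tb * MB_PER_S_PER_TB, stored_tb * OPS_PER_S_PER_TB


if __name__ == "__main__":
    for tb in (1, 10, 250):
        bandwidth, ops = provisioned_performance(tb)
        print(f"{tb} TB stored -> {bandwidth} MB/s, {ops} ops/s")
    print(NFS_MAX_FILE_BYTES // 2**60, "EiB maximum file size")
```

Under these assumptions, a filesystem holding 10 TB would be entitled to 1000 MB/s and 30000 operations per second.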

LizardFS Documentation, Release latest

LizardFS Documentation, Release latest. Michal Bielicki, Jun 15, 2018. Contents: 1 ToDo-List. 2 Introduction to LizardFS: 2.1 Architecture; 2.2 Replication-Modes; 2.3 Possible application of LizardFS; 2.4 Hardware recommendation; 2.5 Additional Features. 3 LizardFS QuickStart on Debian: 3.1 Master server installation; 3.2 Shadow master installation; 3.3 Metalogger installation; 3.4 Chunk server installation; 3.5 Cgi server installation; 3.6 Command line administration tools. 4 LizardFS Administration Guide: 4.1 Installing LizardFS; 4.2 Basic Configuration; 4.3 Configuring Replication Modes; 4.4 Advanced configuration; 4.5 Configuring Optional Features; 4.6 Connecting Clients to your LizardFS installation; 4.7 Securing your LizardFS installation; 4.8 Optimizing your LizardFS setup

Lübeck University of Applied Sciences, Dean's Office - Examination Board

Department of Electrical Engineering and Computer Science, Dean's Office - Examination Board, Lübeck University of Applied Sciences. Master's thesis topic for Mr. Thomas Finnern: Analysis and Integration of Storage Clusters in Elastic Container Platforms (Analyse und Integration von Storage-Clustern in elastische Container Plattformen). Set by: Professor Dr. Kratzke. Issue date: 2 January 2017. Submission date: 3 July 2017. (Professor Dr. Andreas Hanemann), Chair of the Examination Board. Task description: Elastic container platforms can be deployed on machines from various cloud service providers (e.g. AWS, GCE, Azure) and allow workloads to be migrated between cloud providers. An implementation of this approach has already been partly realized in the Cloud TRANSIT research project through the development of a cluster deployer for Kubernetes on Amazon AWS and OpenStack. Currently, stateless applications can be deployed independently of the provider. With a complementary storage cluster, stateful applications are now to be supported as well. In this master's thesis, existing open source storage cluster solutions are to be analyzed, integrated, and evaluated with regard to migratability. In detail, the following subtasks are to be carried out and documented (master's thesis): automatic provisioning of storage cluster solutions (integration); evaluation of the migratability of storage clusters. Declaration on the thesis: I affirm that I wrote this thesis independently, without outside help. Only the cited sources were used in writing it. Passages taken verbatim or in substance from other works are marked as such. I agree to my thesis being published, in particular to the thesis being made available to third parties for inspection or to copies of the thesis being made for distribution to third parties.
