CEPH Storage [Open Source Osijek]

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Open Source Solutions for Building Your Own Storage Area Network and Network Attached Storage

Open Source Solutions for Building Your Own Storage Area Network and Network Attached Storage Author, Balakrishnan Subramanian A Data Science Foundation White Paper May 2020 --------------------------------------------------- Data Science Foundation Data Science Foundation, Atlantic Business Centre, Atlantic Street, Altrincham, WA14 5NQ Tel: 0161 926 3641 Email: [email protected] Web: www.datascience.foundation Registered in England and Wales 4th June 2015, Registered Number 9624670 www.datascience.foundation Copyright 2016 - 2017 Data Science Foundation 1. INTRODUCTION Generally, Storage solutions can be grouped into following four categories: SoHo NAS systems, Cloud-based/object solutions, Microsoft Storage Server solutions, Enterprise NAS (Networked Attached Storage) and Storage Area Network (SAN) solutions. Enterprise NAS and SAN solutions are generally closed systems offered by traditional vendors like EMC and NetApp with a very large price tag, so many businesses are looking at Open Source solutions to meet their needs. This is a collection of links and brief descriptions of Open Source storage solutions currently available. Open Source of course means it’s free to use and modify, however some projects have do commercially supported versions as well for enterprise customers who require it. 1. Factors for choosing Storage Solutions When you are in the need of Storage solutions for managing your own infrastructure in your private Data Center, there are many offerings but selecting the right one depends upon your requirement. Factors involved in selecting a Storage type Budget Type of Data that you want to store Scaling concerns and Usage pattern In this article, we will be discussing two different methods (i.e. NAS and SAN), these two methods define the Structure of the storage, it is important to choose the right one based upon your use case and type of data. -

FOSDEM 2017 Schedule

FOSDEM 2017 - Saturday 2017-02-04 (1/9) Janson K.1.105 (La H.2215 (Ferrer) H.1301 (Cornil) H.1302 (Depage) H.1308 (Rolin) H.1309 (Van Rijn) H.2111 H.2213 H.2214 H.3227 H.3228 Fontaine)… 09:30 Welcome to FOSDEM 2017 09:45 10:00 Kubernetes on the road to GIFEE 10:15 10:30 Welcome to the Legal Python Winding Itself MySQL & Friends Opening Intro to Graph … Around Datacubes Devroom databases Free/open source Portability of containers software and drones Optimizing MySQL across diverse HPC 10:45 without SQL or touching resources with my.cnf Singularity Welcome! 11:00 Software Heritage The Veripeditus AR Let's talk about The State of OpenJDK MSS - Software for The birth of HPC Cuba Game Framework hardware: The POWER Make your Corporate planning research Applying profilers to of open. CLA easy to use, aircraft missions MySQL Using graph databases please! 11:15 in popular open source CMSs 11:30 Jockeying the Jigsaw The power of duck Instrumenting plugins Optimized and Mixed License FOSS typing and linear for Performance reproducible HPC Projects algrebra Schema Software deployment 11:45 Incremental Graph Queries with 12:00 CloudABI LoRaWAN for exploring Open J9 - The Next Free It's time for datetime Reproducible HPC openCypher the Internet of Things Java VM sysbench 1.0: teaching Software Installation on an old dog new tricks Cray Systems with EasyBuild 12:15 Making License 12:30 Compliance Easy: Step Diagnosing Issues in Webpush notifications Putting Your Jobs Under Twitter Streaming by Open Source Step. Java Apps using for Kinto Introducing gh-ost the Microscope using Graph with Gephi Thermostat and OGRT Byteman. -

Globalfs: a Strongly Consistent Multi-Site File System

GlobalFS: A Strongly Consistent Multi-Site File System Leandro Pacheco Raluca Halalai Valerio Schiavoni University of Lugano University of Neuchatelˆ University of Neuchatelˆ Fernando Pedone Etienne Riviere` Pascal Felber University of Lugano University of Neuchatelˆ University of Neuchatelˆ Abstract consistency, availability, and tolerance to partitions. Our goal is to ensure strongly consistent file system operations This paper introduces GlobalFS, a POSIX-compliant despite node failures, at the price of possibly reduced geographically distributed file system. GlobalFS builds availability in the event of a network partition. Weak on two fundamental building blocks, an atomic multicast consistency is suitable for domain-specific applications group communication abstraction and multiple instances of where programmers can anticipate and provide resolution a single-site data store. We define four execution modes and methods for conflicts, or work with last-writer-wins show how all file system operations can be implemented resolution methods. Our rationale is that for general-purpose with these modes while ensuring strong consistency and services such as a file system, strong consistency is more tolerating failures. We describe the GlobalFS prototype in appropriate as it is both more intuitive for the users and detail and report on an extensive performance assessment. does not require human intervention in case of conflicts. We have deployed GlobalFS across all EC2 regions and Strong consistency requires ordering commands across show that the system scales geographically, providing replicas, which needs coordination among nodes at performance comparable to other state-of-the-art distributed geographically distributed sites (i.e., regions). Designing file systems for local commands and allowing for strongly strongly consistent distributed systems that provide good consistent operations over the whole system. -

Big Data Storage Workload Characterization, Modeling and Synthetic Generation

BIG DATA STORAGE WORKLOAD CHARACTERIZATION, MODELING AND SYNTHETIC GENERATION BY CRISTINA LUCIA ABAD DISSERTATION Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Computer Science in the Graduate College of the University of Illinois at Urbana-Champaign, 2014 Urbana, Illinois Doctoral Committee: Professor Roy H. Campbell, Chair Professor Klara Nahrstedt Associate Professor Indranil Gupta Assistant Professor Yi Lu Dr. Ludmila Cherkasova, HP Labs Abstract A huge increase in data storage and processing requirements has lead to Big Data, for which next generation storage systems are being designed and implemented. As Big Data stresses the storage layer in new ways, a better understanding of these workloads and the availability of flexible workload generators are increas- ingly important to facilitate the proper design and performance tuning of storage subsystems like data replication, metadata management, and caching. Our hypothesis is that the autonomic modeling of Big Data storage system workloads through a combination of measurement, and statistical and machine learning techniques is feasible, novel, and useful. We consider the case of one common type of Big Data storage cluster: A cluster dedicated to supporting a mix of MapReduce jobs. We analyze 6-month traces from two large clusters at Yahoo and identify interesting properties of the workloads. We present a novel model for capturing popularity and short-term temporal correlations in object re- quest streams, and show how unsupervised statistical clustering can be used to enable autonomic type-aware workload generation that is suitable for emerging workloads. We extend this model to include other relevant properties of stor- age systems (file creation and deletion, pre-existing namespaces and hierarchical namespaces) and use the extended model to implement MimesisBench, a realistic namespace metadata benchmark for next-generation storage systems. -

A Decentralized Cloud Storage Network Framework

Storj: A Decentralized Cloud Storage Network Framework Storj Labs, Inc. October 30, 2018 v3.0 https://github.com/storj/whitepaper 2 Copyright © 2018 Storj Labs, Inc. and Subsidiaries This work is licensed under a Creative Commons Attribution-ShareAlike 3.0 license (CC BY-SA 3.0). All product names, logos, and brands used or cited in this document are property of their respective own- ers. All company, product, and service names used herein are for identification purposes only. Use of these names, logos, and brands does not imply endorsement. Contents 0.1 Abstract 6 0.2 Contributors 6 1 Introduction ...................................................7 2 Storj design constraints .......................................9 2.1 Security and privacy 9 2.2 Decentralization 9 2.3 Marketplace and economics 10 2.4 Amazon S3 compatibility 12 2.5 Durability, device failure, and churn 12 2.6 Latency 13 2.7 Bandwidth 14 2.8 Object size 15 2.9 Byzantine fault tolerance 15 2.10 Coordination avoidance 16 3 Framework ................................................... 18 3.1 Framework overview 18 3.2 Storage nodes 19 3.3 Peer-to-peer communication and discovery 19 3.4 Redundancy 19 3.5 Metadata 23 3.6 Encryption 24 3.7 Audits and reputation 25 3.8 Data repair 25 3.9 Payments 26 4 4 Concrete implementation .................................... 27 4.1 Definitions 27 4.2 Peer classes 30 4.3 Storage node 31 4.4 Node identity 32 4.5 Peer-to-peer communication 33 4.6 Node discovery 33 4.7 Redundancy 35 4.8 Structured file storage 36 4.9 Metadata 39 4.10 Satellite 41 4.11 Encryption 42 4.12 Authorization 43 4.13 Audits 44 4.14 Data repair 45 4.15 Storage node reputation 47 4.16 Payments 49 4.17 Bandwidth allocation 50 4.18 Satellite reputation 53 4.19 Garbage collection 53 4.20 Uplink 54 4.21 Quality control and branding 55 5 Walkthroughs ............................................... -

Oracle® Linux 7 Managing File Systems

Oracle® Linux 7 Managing File Systems F32760-07 August 2021 Oracle Legal Notices Copyright © 2020, 2021, Oracle and/or its affiliates. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs) and Oracle computer documentation or other Oracle data delivered to or accessed by U.S. Government end users are "commercial computer software" or "commercial computer software documentation" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, the use, reproduction, duplication, release, display, disclosure, modification, preparation of derivative works, and/or adaptation of i) Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs), ii) Oracle computer documentation and/or iii) other Oracle data, is subject to the rights and limitations specified in the license contained in the applicable contract. -

Parallel File Systems for HPC Introduction to Lustre

Parallel File Systems for HPC Introduction to Lustre Piero Calucci Scuola Internazionale Superiore di Studi Avanzati Trieste November 2008 Advanced School in High Performance and Grid Computing Outline 1 The Need for Shared Storage 2 The Lustre File System 3 Other Parallel File Systems Parallel File Systems for HPC Cluster & Storage Piero Calucci Shared Storage Lustre Other Parallel File Systems A typical cluster setup with a Master node, several computing nodes and shared storage. Nodes have little or no local storage. Parallel File Systems for HPC Cluster & Storage Piero Calucci The Old-Style Solution Shared Storage Lustre Other Parallel File Systems • a single storage server quickly becomes a bottleneck • if the cluster grows in time (quite typical for initially small installations) storage requirements also grow, sometimes at a higher rate • adding space (disk) is usually easy • adding speed (both bandwidth and IOpS) is hard and usually involves expensive upgrade of existing hardware • e.g. you start with an NFS box with a certain amount of disk space, memory and processor power, then add disks to the same box Parallel File Systems for HPC Cluster & Storage Piero Calucci The Old-Style Solution /2 Shared Storage Lustre • e.g. you start with an NFS box with a certain amount of Other Parallel disk space, memory and processor power File Systems • adding space is just a matter of plugging in some more disks, ot ar worst adding a new controller with an external port to connect external disks • but unless you planned for -

Ubuntu Server Guide Basic Installation Preparing to Install

Ubuntu Server Guide Welcome to the Ubuntu Server Guide! This site includes information on using Ubuntu Server for the latest LTS release, Ubuntu 20.04 LTS (Focal Fossa). For an offline version as well as versions for previous releases see below. Improving the Documentation If you find any errors or have suggestions for improvements to pages, please use the link at thebottomof each topic titled: “Help improve this document in the forum.” This link will take you to the Server Discourse forum for the specific page you are viewing. There you can share your comments or let us know aboutbugs with any page. PDFs and Previous Releases Below are links to the previous Ubuntu Server release server guides as well as an offline copy of the current version of this site: Ubuntu 20.04 LTS (Focal Fossa): PDF Ubuntu 18.04 LTS (Bionic Beaver): Web and PDF Ubuntu 16.04 LTS (Xenial Xerus): Web and PDF Support There are a couple of different ways that the Ubuntu Server edition is supported: commercial support and community support. The main commercial support (and development funding) is available from Canonical, Ltd. They supply reasonably- priced support contracts on a per desktop or per-server basis. For more information see the Ubuntu Advantage page. Community support is also provided by dedicated individuals and companies that wish to make Ubuntu the best distribution possible. Support is provided through multiple mailing lists, IRC channels, forums, blogs, wikis, etc. The large amount of information available can be overwhelming, but a good search engine query can usually provide an answer to your questions. -

Comparative Analysis of Distributed and Parallel File Systems' Internal Techniques

Comparative Analysis of Distributed and Parallel File Systems’ Internal Techniques Viacheslav Dubeyko Content 1 TERMINOLOGY AND ABBREVIATIONS ................................................................................ 4 2 INTRODUCTION......................................................................................................................... 5 3 COMPARATIVE ANALYSIS METHODOLOGY ....................................................................... 5 4 FILE SYSTEM FEATURES CLASSIFICATION ........................................................................ 5 4.1 Distributed File Systems ............................................................................................................................ 6 4.1.1 HDFS ..................................................................................................................................................... 6 4.1.2 GFS (Google File System) ....................................................................................................................... 7 4.1.3 InterMezzo ............................................................................................................................................ 9 4.1.4 CodA .................................................................................................................................................... 10 4.1.5 Ceph.................................................................................................................................................... 12 4.1.6 DDFS .................................................................................................................................................. -

ZFS Basics Various ZFS RAID Lebels = & . = a Little About Zetabyte File System ( ZFS / Openzfs)

= BDNOG11 | Cox's Bazar | Day # 3 | ZFS Basics & Various ZFS RAID Lebels. = = ZFS Basics & Various ZFS RAID Lebels . = A Little About Zetabyte File System ( ZFS / OpenZFS) =1= = BDNOG11 | Cox's Bazar | Day # 3 | ZFS Basics & Various ZFS RAID Lebels. = Disclaimer: The scope of this topic here is not to discuss in detail about the architecture of the ZFS (OpenZFS) rather Features, Use Cases and Operational Method. =2= = BDNOG11 | Cox's Bazar | Day # 3 | ZFS Basics & Various ZFS RAID Lebels. = What is ZFS? =0= ZFS is a combined file system and logical volume manager designed by Sun Microsystems and now owned by Oracle Corporation. It was designed and implemented by a team at Sun Microsystems led by Jeff Bonwick and Matthew Ahrens. Matt is the founding member of OpenZFS. =0= Its development started in 2001 and it was officially announced in 2004. In 2005 it was integrated into the main trunk of Solaris and released as part of OpenSolaris. =0= It was described by one analyst as "the only proven Open Source data-validating enterprise file system". This file =3= = BDNOG11 | Cox's Bazar | Day # 3 | ZFS Basics & Various ZFS RAID Lebels. = system also termed as the “world’s safest file system” by some analyst. =0= ZFS is scalable, and includes extensive protection against data corruption, support for high storage capacities, efficient data compression and deduplication. =0= ZFS is available for Solaris (and its variants), BSD (and its variants) & Linux (Canonical’s Ubuntu Integrated as native kernel module from version 16.04x) =0= OpenZFS was announced in September 2013 as the truly open source successor to the ZFS project. -

Executive Summary

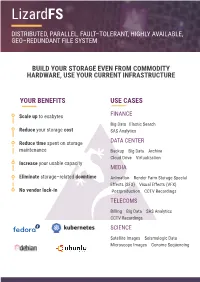

LizardFS DISTRIBUTED, PARALLEL, FAULT–TOLERANT, HIGHLY AVAILABLE, GEO–REDUNDANT FILE SYSTEM BUILD YOUR STORAGE EVEN FROM COMMODITY HARDWARE, USE YOUR CURRENT INFRASTRUCTURE YOUR BENEFITS USE CASES Scale up to exabytes FINANCE Big Data Elastic Search Reduce your storage cost SAS Analytics Reduce time spent on storage DATA CENTER maintenance Backup Big Data Archive Cloud Drive Virtualization Increase your usable capacity MEDIA Eliminate storage–related downtime Animation Render Farm Storage Special Effects (SFX) Visual Effects (VFX) No vendor lock-in Postproduction CCTV Recordings TELECOMS Billing Big Data SAS Analytics CCTV Recordings SCIENCE Satellite Images Seismologic Data Microscope Images Genome Sequencing DATA SAFETY REDUCING RTO Thanks to High Availability and Erasure Coding Thanks to LizardFS, the time needed to mechanisms, server failures will not affect your recover from a disaster is reduced business continuity. Your data will always stay massively. The stability of your business is safe. what really matters. REDUCED MAINTENANCE TIME FULL TRANSPARENCY Disc failures are handled automatically with no LizardFS is an open-source solution. You can need of human intervention. Thanks to that you trust hundreds of our Community don’t have to hire an army of sysadmins. members, not just us. USE YOUR CURRENT INFRASTRUCTURE GEO REPLICATION LizardFS is 100% hardware agnostic. You can run it It allows you to create geo stretched clusters on any hardware you want. stretching virtually any distance. PLUG AND SCALE INCREASED PERFORMANCE Scale vertically as well as horizontally by simply LizardFS allows for parallel writes and reads, adding or removing a single drive or node. thus enhancing performance. COST EFFICIENCY EASY TO INSTALL AND MAINTAIN With us your existing storage keeps growing inste- You don’t have to graduate from the School of ad of being replaced every few years. -

The Quantcast File System

The Quantcast File System Michael Ovsiannikov Silvius Rus Damian Reeves Quantcast Quantcast Google movsiannikov@quantcast [email protected] [email protected] Paul Sutter Sriram Rao Jim Kelly Quantcast Microsoft Quantcast [email protected] [email protected] [email protected] ABSTRACT chine writing it, another on the same rack, and a third on a The Quantcast File System (QFS) is an efficient alterna- distant rack. Thus HDFS is network efficient but not par- tive to the Hadoop Distributed File System (HDFS). QFS is ticularly storage efficient, since to store a petabyte of data, written in C++, is plugin compatible with Hadoop MapRe- it uses three petabytes of raw storage. At today’s cost of duce, and offers several efficiency improvements relative to $40,000 per PB that means $120,000 in disk alone, plus ad- HDFS: 50% disk space savings through erasure coding in- ditional costs for servers, racks, switches, power, cooling, stead of replication, a resulting doubling of write through- and so on. Over three years, operational costs bring the put, a faster name node, support for faster sorting and log- cost close to $1 million. For reference, Amazon currently ging through a concurrent append feature, a native com- charges $2.3 million to store 1 PB for three years. mand line client much faster than hadoop fs, and global Hardware evolution since then has opened new optimiza- feedback-directed I/O device management. As QFS works tion possibilities. 10 Gbps networks are now commonplace, out of the box with Hadoop, migrating data from HDFS and cluster racks can be much chattier.