LRD of Fractional Brownian Motion and Application in Data Network

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Stationary Processes and Their Statistical Properties

Stationary Processes and Their Statistical Properties. Brian Borchers, March 29, 2001. 1 Stationary processes. A discrete time stochastic process is a sequence of random variables $Z_1, Z_2, \ldots$. In practice we will typically analyze a single realization $z_1, z_2, \ldots, z_n$ of the stochastic process and attempt to estimate the statistical properties of the stochastic process from the realization. We will also consider the problem of predicting $z_{n+1}$ from the previous elements of the sequence. We will begin by focusing on the very important class of stationary stochastic processes. A stochastic process is strictly stationary if its statistical properties are unaffected by shifting the stochastic process in time. In particular, this means that if we take a subsequence $Z_{k+1}, \ldots, Z_{k+m}$, then the joint distribution of the $m$ random variables will be the same no matter what $k$ is. Stationarity requires that the mean of the stochastic process be a constant, $E[Z_k] = \mu$, and that the variance is constant, $\mathrm{Var}[Z_k] = \sigma_Z^2$. Also, stationarity requires that the covariance of two elements separated by a distance $m$ is constant. That is, $\mathrm{Cov}(Z_k, Z_{k+m})$ is constant. This covariance is called the autocovariance at lag $m$, and we will use the notation $\gamma_m$. Since $\mathrm{Cov}(Z_k, Z_{k+m}) = \mathrm{Cov}(Z_{k+m}, Z_k)$, we need only find $\gamma_m$ for $m \ge 0$. The correlation of $Z_k$ and $Z_{k+m}$ is the autocorrelation at lag $m$. We will use the notation $\rho_m$ for the autocorrelation. It is easy to show that $\rho_k = \gamma_k / \gamma_0$. 2 The autocovariance and autocorrelation matrices. The covariance matrix for the random variables $Z_1, \ldots, Z_n$ is called an autocovariance matrix.
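The excerpt above defines the autocovariance at lag m and the autocorrelation as its ratio to the lag-0 value, but it does not show an estimator. A minimal sketch of how these could be estimated from one realization follows, assuming the common sample estimator that divides by n. The function names are illustrative and do not come from the notes.

```python
import numpy as np


def sample_autocovariance(z, max_lag):
    """Estimate gamma_0 .. gamma_max_lag from a single realization z."""
    z = np.asarray(z, dtype=float)
    n = z.size
    d = z - z.mean()  # deviations from the sample mean
    # Assumption: divide by n rather than n - m, a common convention.
    return np.array([np.dot(d[: n - m], d[m:]) / n for m in range(max_lag + 1)])


def sample_autocorrelation(z, max_lag):
    """Estimate rho_m = gamma_m / gamma_0 for m = 0 .. max_lag."""
    gamma = sample_autocovariance(z, max_lag)
    return gamma / gamma[0]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    realization = rng.standard_normal(500)  # illustrative data only
    print(sample_autocorrelation(realization, max_lag=5))
```

By construction the lag-0 autocorrelation is exactly 1, which is a quick sanity check on any implementation.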

Stochastic Process - Introduction

Stochastic Process - Introduction
• Stochastic processes are processes that proceed randomly in time.
• Rather than consider fixed random variables X, Y, etc., or even sequences of i.i.d. random variables, we consider sequences $X_0, X_1, X_2, \ldots$, where $X_t$ represents some random quantity at time t.
• In general, the value $X_t$ might depend on the quantity $X_{t-1}$ at time t-1, or even the value $X_s$ for other times s < t.
• Example: simple random walk.
Stochastic Process - Definition
• A stochastic process is a family of time-indexed random variables $X_t$, where t belongs to an index set. Formal notation: $\{X_t : t \in I\}$, where I is an index set that is a subset of $\mathbb{R}$.
• Examples of index sets: 1) $I = (-\infty, \infty)$ or $I = [0, \infty)$. In this case $X_t$ is a continuous time stochastic process. 2) $I = \{0, \pm 1, \pm 2, \ldots\}$ or $I = \{0, 1, 2, \ldots\}$. In this case $X_t$ is a discrete time stochastic process.
• We use uppercase letters, $\{X_t\}$, to describe the process. A time series $\{x_t\}$ is a realization or sample function from a certain process.
• We use information from a time series to estimate parameters and properties of the process $\{X_t\}$.
Probability Distribution of a Process
• For any stochastic process with index set I, its probability distribution function is uniquely determined by its finite dimensional distributions.
• The k-dimensional distribution function of a process is defined by $F_{X_{t_1}, \ldots, X_{t_k}}(x_1, \ldots, x_k) = P(X_{t_1} \le x_1, \ldots, X_{t_k} \le x_k)$ for any $t_1, \ldots, t_k \in I$ and any real numbers $x_1, \ldots, x_k$.
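The slides name the simple random walk as an example of a process in which the value at time t depends on the value at time t-1. A minimal sketch of one realization is given below, assuming steps of +1 or -1 and a start at 0. The function name and the `p_up` parameter are illustrative, not taken from the slides.

```python
import numpy as np


def simple_random_walk(n_steps, p_up=0.5, seed=None):
    """Return one realization x_0, ..., x_n of a simple random walk with x_0 = 0."""
    rng = np.random.default_rng(seed)
    # Each step is +1 with probability p_up and -1 otherwise.
    steps = rng.choice([1, -1], size=n_steps, p=[p_up, 1.0 - p_up])
    # X_t = X_{t-1} + step_t, so the path is the running sum of the steps.
    return np.concatenate(([0], np.cumsum(steps)))


if __name__ == "__main__":
    print(simple_random_walk(10, seed=1))
```

Each call with a different seed produces a different time series, that is, a different realization of the same discrete time process.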

Autocovariance Function Estimation Via Penalized Regression

Autocovariance Function Estimation via Penalized Regression. Lina Liao, Cheolwoo Park, Department of Statistics, University of Georgia, Athens, GA 30602, USA. Jan Hannig, Department of Statistics and Operations Research, University of North Carolina, Chapel Hill, NC 27599, USA. Kee-Hoon Kang, Department of Statistics, Hankuk University of Foreign Studies, Yongin, 449-791, Korea. Abstract: The work revisits the autocovariance function estimation, a fundamental problem in statistical inference for time series. We convert the function estimation problem into constrained penalized regression with a generalized penalty that provides us with flexible and accurate estimation, and study the asymptotic properties of the proposed estimator. In case of a nonzero mean time series, we apply a penalized regression technique to a differenced time series, which does not require a separate detrending procedure. In penalized regression, selection of tuning parameters is critical and we propose four different data-driven criteria to determine them. A simulation study shows effectiveness of the tuning parameter selection and that the proposed approach is superior to three existing methods. We also briefly discuss the extension of the proposed approach to interval-valued time series. Some key words: Autocovariance function; Differenced time series; Regularization; Time series; Tuning parameter selection. 1 Introduction. Let $\{Y_t;\ t \in T\}$ be a stochastic process such that $\mathrm{Var}(Y_t) < \infty$ for all $t \in T$. The autocovariance function of $\{Y_t\}$ is given as $\gamma(s, t) = \mathrm{Cov}(Y_s, Y_t)$ for all $s, t \in T$. In this work, we consider a regularly sampled time series $\{(i, Y_i);\ i = 1, \ldots, n\}$. Its model can be written as $Y_i = g(i) + \epsilon_i$, $i = 1, \ldots, n$, (1.1) where $g$ is a smooth deterministic function and the error is assumed to be a weakly stationary process with $E(\epsilon_i) = 0$, $\mathrm{Var}(\epsilon_i) = \sigma^2$ and $\mathrm{Cov}(\epsilon_i, \epsilon_j) = \gamma(|i - j|)$ for all $i, j = 1, \ldots, n$. The estimation of the autocovariance function $\gamma$ (or autocorrelation function) is crucial to determine the degree of serial correlation in a time series.
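The abstract says that working with a differenced series avoids a separate detrending step. The following worked equations sketch why, assuming first-order differencing and a trend $g$ smooth enough that $g(i+1) - g(i)$ is negligible. The excerpt does not show the paper's actual differencing scheme, so this is an illustration rather than the authors' derivation. The symbol $D_i$ is introduced here for the differenced series.

```latex
\begin{align*}
D_i &= Y_{i+1} - Y_i
     = \bigl[g(i+1) - g(i)\bigr] + (\epsilon_{i+1} - \epsilon_i)
     \approx \epsilon_{i+1} - \epsilon_i, \\
\mathrm{Var}(D_i) &\approx 2\gamma(0) - 2\gamma(1), \\
\mathrm{Cov}(D_i, D_{i+k}) &\approx 2\gamma(k) - \gamma(k-1) - \gamma(k+1),
     \qquad k \ge 1 .
\end{align*}
```

Under these assumptions the second-order structure of the differenced series depends only on $\gamma$, not on $g$, which is what makes it usable for estimating the autocovariance function.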

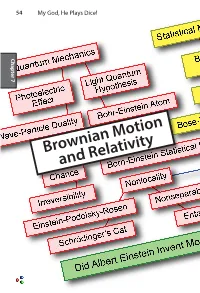

Brownian Motion and Relativity

My God, He Plays Dice! Chapter 7: Brownian Motion and Relativity. In this chapter we describe two of Einstein's greatest works that have little or nothing to do with his amazing and deeply puzzling theories about quantum mechanics. The first, Brownian motion, provided the first quantitative proof of the existence of atoms and molecules. The second, special relativity in his miracle year of 1905 and general relativity eleven years later, combined the ideas of space and time into a unified space-time with a non-Euclidean curvature that goes beyond Newton's theory of gravitation. Einstein's relativity theory explained the precession of the orbit of Mercury and predicted the bending of light as it passes the sun, confirmed by Arthur Stanley Eddington in 1919. He also predicted that galaxies can act as gravitational lenses, focusing light from objects far beyond, as was confirmed in 1979. He also predicted gravitational waves, only detected in 2016, one century after Einstein wrote down the equations that explain them. What are we to make of this man who could see things that others could not? Our thesis is that if we look very closely at the things he said, especially his doubts expressed privately to friends, today's mysteries of quantum mechanics may be lessened. As great as Einstein's theories of Brownian motion and relativity are, they were accepted quickly because measurements were soon made that confirmed their predictions. Moreover, contemporaries of Einstein were working on these problems. Marian Smoluchowski worked out the equation for the rate of diffusion of large particles in a liquid the year before Einstein.

An Overview of Micro- and Nanoemulsions as Vehicles for Essential Oils: Formulation, Preparation and Stability

Nanomaterials. Review. An Overview of Micro- and Nanoemulsions as Vehicles for Essential Oils: Formulation, Preparation and Stability. Lucia Pavoni, Diego Romano Perinelli, Giulia Bonacucina, Marco Cespi * and Giovanni Filippo Palmieri. School of Pharmacy, University of Camerino, 62032 Camerino, Italy. * Corresponding author. Received: 23 December 2019; Accepted: 10 January 2020; Published: 12 January 2020. Abstract: The interest around essential oils is constantly increasing thanks to their biological properties exploitable in several fields, from pharmaceuticals to food and agriculture. However, their widespread use and marketing are still restricted due to their poor physico-chemical properties; i.e., high volatility, thermal decomposition, low water solubility, and stability issues. At the moment, the most suitable approach to overcome such limitations is based on the development of proper formulation strategies. One of the approaches suggested to achieve this goal is the so-called encapsulation process through the preparation of aqueous nano-dispersions. Among them, micro- and nanoemulsions are the most studied thanks to the ease of formulation, handling and to their manufacturing costs. In this direction, this review intends to offer an overview of the formulation, preparation and stability parameters of micro- and nanoemulsions. Specifically, recent literature has been examined in order to define the most common practices adopted (materials and fabrication methods), highlighting their suitability and effectiveness. Finally, relevant points related to formulations, such as optimization, characterization, stability and safety, not deeply studied or clarified yet, were discussed.

Long Memory and Self-Similar Processes

Annales de la Faculté des Sciences de Toulouse, Mathématiques. Gennady Samorodnitsky, Long memory and self-similar processes, Tome XV, no 1 (2006), p. 107-123. <http://afst.cedram.org/item?id=AFST_2006_6_15_1_107_0> © Annales de la faculté des sciences de Toulouse Mathématiques, 2006, all rights reserved. Access to the articles of the journal « Annales de la faculté des sciences de Toulouse, Mathématiques » (http://afst.cedram.org/) implies agreement with the general conditions of use (http://afst.cedram.org/legal/). Any reproduction of this article, in whole or in part, in any form and for any use other than the strictly personal use of the person copying it, is a criminal offence. Any copy or printout of this file must contain this copyright notice. Article put online by cedram, the Centre de diffusion des revues académiques de mathématiques, http://www.cedram.org/. ABSTRACT. This paper is a survey of both classical and new results and ideas on long memory, scaling and self-similarity, both in the light-tailed and heavy-tailed cases. 1. Introduction. The notion of long memory (or long range dependence) has intrigued many at least since B.

Lewis Fry Richardson: Scientist, Visionary and Pacifist

Lett Mat Int, DOI 10.1007/s40329-014-0063-z. Lewis Fry Richardson: scientist, visionary and pacifist. Angelo Vulpiani. © Centro P.RI.ST.EM, Università Commerciale Luigi Bocconi 2014. Abstract: The aim of this paper is to present the main aspects of the life of Lewis Fry Richardson and his most important scientific contributions. Of particular importance are the seminal concepts of turbulent diffusion, turbulent cascade and self-similar processes, which led to a profound transformation of the way weather forecasts, turbulent flows and, more generally, complex systems are viewed. Keywords: Lewis Fry Richardson · Weather forecasting · Turbulent diffusion · Turbulent cascade · Numerical methods · Fractals · Self-similarity. Lewis Fry Richardson (1881–1953), while undeservedly little known, had a fundamental (often posthumous) role in twentieth-century science. The last of seven children in a thriving English Quaker family, in 1898 Richardson enrolled in Durham College of Science, and two years later was awarded a grant to study at King's College in Cambridge, where he received a diverse education: he read physics, mathematics, chemistry, meteorology, botany, and psychology. At the beginning of his career he was quite uncertain about which road to follow, but he later resolved to work in several areas, just like the great German scientist Helmholtz, who was a physician and then a physicist, but following a different order. In his words, he decided "to spend the first half of my life under the strict discipline of physics, and afterwards to apply that training to researches on living things". In addition to contributing important results to meteo-

Covariances of ARMA Models

Statistics 910, #9. Covariances of ARMA Processes.
Overview
1. Review ARMA models: causality and invertibility
2. AR covariance functions
3. MA and ARMA covariance functions
4. Partial autocorrelation function
5. Discussion
Review of ARMA processes
ARMA process: A stationary solution $\{X_t\}$ (or, if its mean is not zero, $\{X_t - \mu\}$) of the linear difference equation $X_t - \phi_1 X_{t-1} - \cdots - \phi_p X_{t-p} = w_t + \theta_1 w_{t-1} + \cdots + \theta_q w_{t-q}$, that is, $\phi(B) X_t = \theta(B) w_t$ (1), where $w_t$ denotes white noise, $w_t \sim WN(0, \sigma^2)$. Definition 3.5 adds
• the identifiability condition that the polynomials $\phi(z)$ and $\theta(z)$ have no zeros in common, and
• the normalization condition that $\phi(0) = \theta(0) = 1$.
Causal process: A stationary process $\{X_t\}$ is said to be causal if there exists a summable sequence $\{\psi_j\}$ (some require square summable ($\ell^2$), others want more and require absolute summability ($\ell^1$)) such that $\{X_t\}$ has the one-sided moving average representation $X_t = \sum_{j=0}^{\infty} \psi_j w_{t-j} = \psi(B) w_t$. (2) Proposition 3.1 states that a stationary ARMA process $\{X_t\}$ is causal if and only if (iff) the zeros of the autoregressive polynomial $\phi(z)$ lie outside the unit circle (i.e., $\phi(z) \ne 0$ for $|z| \le 1$). Since $\phi(0) = 1$, $\phi(z) > 0$ for $|z| \le 1$. (The unit circle in the complex plane consists of those $z \in \mathbb{C}$ for which $|z| = 1$; the closed unit disc includes the interior of the unit circle as well as the circle; the open unit disc consists of $|z| < 1$.) If the zeros of $\phi(z)$ lie outside the unit circle, then we can invert each of the factors $(1 - B/z_j)$ that make up $\phi(B) = \prod_{j=1}^{p} (1 - B/z_j)$ one at a time (as when back-substituting in the derivation of the AR(1) representation).
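The causality condition in Proposition 3.1 and the moving average weights in (2) can be checked numerically. The sketch below assumes the coefficient convention of equation (1) and obtains the weights by matching coefficients in $\phi(B)\psi(B) = \theta(B)$. The function names are illustrative and not part of the course notes.

```python
import numpy as np


def is_causal(phi):
    """Check whether all zeros of phi(z) = 1 - phi_1 z - ... - phi_p z^p lie outside the unit circle."""
    phi = np.asarray(phi, dtype=float)
    # np.roots expects coefficients ordered from the highest power down to the constant.
    coeffs = np.concatenate((-phi[::-1], [1.0]))
    zeros = np.roots(coeffs)
    return bool(np.all(np.abs(zeros) > 1.0))


def psi_weights(phi, theta, n_weights):
    """First n_weights (>= 1) coefficients psi_0, psi_1, ... of psi(B) = theta(B) / phi(B)."""
    psi = np.zeros(n_weights)
    psi[0] = 1.0  # follows from phi(0) = theta(0) = 1
    for j in range(1, n_weights):
        # Coefficient matching: psi_j = theta_j + sum_{k=1}^{min(j, p)} phi_k * psi_{j-k}
        acc = theta[j - 1] if j <= len(theta) else 0.0
        for k in range(1, min(j, len(phi)) + 1):
            acc += phi[k - 1] * psi[j - k]
        psi[j] = acc
    return psi


if __name__ == "__main__":
    print(is_causal([0.5]), is_causal([1.5]))
    print(psi_weights([0.5], [0.4], 5))
```

For an AR(1) process with coefficient 0.5 the single zero of $\phi(z)$ is at z = 2, so the process is causal and the weights decay geometrically as $0.5^j$.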

Covariance Functions

C. E. Rasmussen & C. K. I. Williams, Gaussian Processes for Machine Learning, the MIT Press, 2006, ISBN 026218253X. © 2006 Massachusetts Institute of Technology. www.GaussianProcess.org/gpml Chapter 4: Covariance Functions. We have seen that a covariance function is the crucial ingredient in a Gaussian process predictor, as it encodes our assumptions about the function which we wish to learn. From a slightly different viewpoint it is clear that in supervised learning the notion of similarity between data points is crucial; it is a basic similarity assumption that points with inputs $x$ which are close are likely to have similar target values $y$, and thus training points that are near to a test point should be informative about the prediction at that point. Under the Gaussian process view it is the covariance function that defines nearness or similarity. An arbitrary function of input pairs $x$ and $x'$ will not, in general, be a valid covariance function. The purpose of this chapter is to give examples of some commonly-used covariance functions and to examine their properties. Section 4.1 defines a number of basic terms relating to covariance functions. Section 4.2 gives examples of stationary, dot-product, and other non-stationary covariance functions, and also gives some ways to make new ones from old. Section 4.3 introduces the important topic of eigenfunction analysis of covariance functions, and states Mercer's theorem which allows us to express the covariance function (under certain conditions) in terms of its eigenfunctions and eigenvalues. The covariance functions given in section 4.2 are valid when the input domain $\mathcal{X}$ is a subset of $\mathbb{R}^D$.
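The remark that an arbitrary function of input pairs is not, in general, a valid covariance function can be illustrated numerically. A valid covariance function must give a positive semidefinite Gram matrix on every finite set of inputs. The sketch below compares the squared exponential function, chosen here as an assumed example since this excerpt names no specific function, with the plain squared distance, which fails the check. All function names are illustrative.

```python
import numpy as np


def squared_exponential(x, x_prime, length_scale=1.0):
    """Squared exponential covariance, used here as an assumed example of a valid choice."""
    return np.exp(-0.5 * ((x - x_prime) / length_scale) ** 2)


def squared_distance(x, x_prime):
    """An arbitrary symmetric function of input pairs that is not a valid covariance."""
    return (x - x_prime) ** 2


def gram_matrix(kernel, points):
    """Evaluate the kernel on all pairs of one-dimensional input points."""
    points = np.asarray(points, dtype=float)
    return kernel(points[:, None], points[None, :])


def min_eigenvalue(matrix):
    """Smallest eigenvalue of a symmetric matrix; negative means not positive semidefinite."""
    return float(np.linalg.eigvalsh(matrix).min())


if __name__ == "__main__":
    inputs = np.linspace(0.0, 1.0, 5)
    print(min_eigenvalue(gram_matrix(squared_exponential, inputs)))
    print(min_eigenvalue(gram_matrix(squared_distance, inputs)))
```

Passing this check on one set of inputs is only a necessary condition. A single negative eigenvalue, as for the squared distance, is enough to rule a function out.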

Lecture 1: Stationary Time Series∗

Lecture 1: Stationary Time Series. 1 Introduction. If a random variable X is indexed to time, usually denoted by t, the observations $\{X_t, t \in T\}$ are called a time series, where T is a time index set (for example, $T = \mathbb{Z}$, the integer set). Time series data are very common in empirical economic studies. Figure 1 plots some frequently used variables. The upper left figure plots the quarterly GDP from 1947 to 2001; the upper right figure plots the residuals after linearly detrending the logarithm of GDP; the lower left figure plots the monthly S&P 500 index data from 1990 to 2001; and the lower right figure plots the log difference of the monthly S&P. As you can see, these four series display quite different patterns over time. Investigating and modeling these different patterns is an important part of this course. In this course, you will find that many of the techniques (estimation methods, inference procedures, etc.) you have learned in your general econometrics course are still applicable in time series analysis. However, there is something special about time series data compared with cross-sectional data. For example, when working with cross-sectional data, it usually makes sense to assume that the observations are independent of each other; time series data, however, are very likely to display some degree of dependence over time. More importantly, for time series data, we can observe only one history of the realizations of the variable. For example, suppose you obtain a series of US weekly stock index data for the last 50 years.
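Two of the transformations mentioned for Figure 1, linear detrending of the logarithm and the log difference, can be sketched as follows. The lecture does not give code or data, so the function names and the synthetic series are assumptions for illustration only. The trend is fitted here by ordinary least squares.

```python
import numpy as np


def linear_detrend_log(series):
    """Residuals of log(series) after removing a least-squares linear time trend."""
    log_y = np.log(np.asarray(series, dtype=float))
    t = np.arange(log_y.size)
    slope, intercept = np.polyfit(t, log_y, deg=1)
    return log_y - (intercept + slope * t)


def log_difference(series):
    """log(x_t) - log(x_{t-1}), an approximate period-to-period growth rate."""
    return np.diff(np.log(np.asarray(series, dtype=float)))


if __name__ == "__main__":
    # Synthetic series with a constant growth rate of 2% per period (not real GDP data).
    t = np.arange(40)
    synthetic = 100.0 * np.exp(0.02 * t)
    print(log_difference(synthetic)[:3])
    print(np.abs(linear_detrend_log(synthetic)).max())
```

On a series that grows at an exactly constant rate, the log differences are constant and the detrended residuals vanish. Real series depart from both, and those departures are what the figure is meant to show.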

Lecture 5: Gaussian Processes & Stationary Processes

Miranda Holmes-Cerfon, Applied Stochastic Analysis, Spring 2019. Lecture 5: Gaussian processes & Stationary processes
Readings
Recommended:
• Pavliotis (2014), sections 1.1, 1.2
• Grimmett and Stirzaker (2001), 8.2, 8.6
• Grimmett and Stirzaker (2001), 9.1, 9.3, 9.5, 9.6 (more advanced than what we will cover in class)
Optional:
• Grimmett and Stirzaker (2001), 4.9 (review of multivariate Gaussian random variables)
• Grimmett and Stirzaker (2001), 9.4
• Chorin and Hald (2009), Chapter 6; a gentle introduction to the spectral decomposition for continuous processes, that gives insight into the link to the spectral decomposition for discrete processes.
• Yaglom (1962), Ch. 1, 2; a nice short book with many details about stationary random functions; one of the original manuscripts on the topic.
• Lindgren (2013) is an in-depth but accessible book, with more details and a more modern style than Yaglom (1962).
We want to be able to describe more stochastic processes, which are not necessarily Markov processes. In this lecture we will look at two classes of stochastic processes that are tractable to use as models and to simulate: Gaussian processes, and stationary processes.
5.1 Setup
Here are some ideas we will need for what follows.
5.1.1 Finite dimensional distributions
Definition. The finite-dimensional distributions (fdds) of a stochastic process $X_t$ are the set of measures $P_{t_1, t_2, \ldots, t_k}$ given by $P_{t_1, t_2, \ldots, t_k}(F_1 \times F_2 \times \cdots \times F_k) = P(X_{t_1} \in F_1, X_{t_2} \in F_2, \ldots, X_{t_k} \in F_k)$, where $(t_1, \ldots, t_k)$ is any point in $T^k$, $k$ is any integer, and $F_i$ are measurable events in $\mathbb{R}$.
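For a zero-mean Gaussian process, each finite-dimensional distribution is a multivariate normal determined by a covariance matrix, which is what makes such processes easy to simulate on a finite grid of times. A minimal sketch follows, assuming a stationary exponential covariance as an illustrative choice that is not taken from the lecture, and using a Cholesky factor to colour white noise. Function and parameter names are hypothetical.

```python
import numpy as np


def exponential_covariance(times, variance=1.0, corr_time=1.0):
    """Covariance matrix C[i, j] = variance * exp(-|t_i - t_j| / corr_time) (assumed model)."""
    times = np.asarray(times, dtype=float)
    lags = np.abs(times[:, None] - times[None, :])
    return variance * np.exp(-lags / corr_time)


def sample_gaussian_process(cov, n_samples, seed=None):
    """Draw zero-mean Gaussian vectors with covariance cov; returns shape (n_samples, k)."""
    rng = np.random.default_rng(seed)
    k = cov.shape[0]
    # A tiny diagonal jitter guards against round-off in the factorization.
    chol = np.linalg.cholesky(cov + 1e-12 * np.eye(k))
    white = rng.standard_normal((k, n_samples))
    # If w ~ N(0, I), then chol @ w ~ N(0, chol @ chol.T), which is approximately N(0, cov).
    return (chol @ white).T


if __name__ == "__main__":
    grid = np.linspace(0.0, 5.0, 20)
    cov = exponential_covariance(grid)
    paths = sample_gaussian_process(cov, n_samples=3, seed=0)
    print(paths.shape)
```

Because the assumed covariance depends only on the lag between two times, the matrix on an evenly spaced grid is constant along its diagonals, which reflects the stationarity of the process.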

Analytic and Geometric Background of Recurrence and Non-Explosion of the Brownian Motion on Riemannian Manifolds

BULLETIN (New Series) OF THE AMERICAN MATHEMATICAL SOCIETY, Volume 36, Number 2, Pages 135–249, S 0273-0979(99)00776-4. Article electronically published on February 19, 1999. ANALYTIC AND GEOMETRIC BACKGROUND OF RECURRENCE AND NON-EXPLOSION OF THE BROWNIAN MOTION ON RIEMANNIAN MANIFOLDS. ALEXANDER GRIGOR'YAN. Abstract. We provide an overview of such properties of the Brownian motion on complete non-compact Riemannian manifolds as recurrence and non-explosion. It is shown that both properties have various analytic characterizations, in terms of the heat kernel, Green function, Liouville properties, etc. On the other hand, we consider a number of geometric conditions such as the volume growth, isoperimetric inequalities, curvature bounds, etc., which are related to recurrence and non-explosion.
Contents
1. Introduction
Acknowledgments
Notation
2. Heat semigroup on Riemannian manifolds
2.1. Laplace operator of the Riemannian metric
2.2. Heat kernel and Brownian motion on manifolds
2.3. Manifolds with boundary
3. Model manifolds
3.1. Polar coordinates
3.2. Spherically symmetric manifolds
4. Some potential theory
4.1. Harmonic functions
4.2. Green function
4.3. Capacity
4.4. Massive sets
4.5. Hitting probabilities
4.6. Exterior of a compact
5. Equivalent definitions of recurrence
6. Equivalent definitions of stochastic completeness
7. Parabolicity and volume growth
7.1. Upper bounds of capacity
7.2. Sufficient conditions for parabolicity
8. Transience and isoperimetric inequalities
9. Non-explosion and volume growth
Received by the editors October 1, 1997, and, in revised form, September 2, 1998.