ChrEn: Cherokee-English Machine Translation for Endangered Language Revitalization

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

1Cljqpgni 843713.Pdf

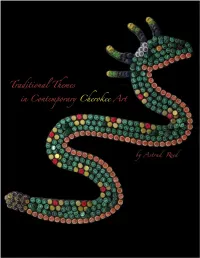

© 2013 University of Oklahoma School of Art. All rights reserved. Published 2013. First Edition. Published in America on acid free paper. University of Oklahoma School of Art, Fred Jones Center, 540 Parrington Oval, Suite 122, Norman, OK 73019-3021. http://www.ou.edu/finearts/art_arthistory.html

Cover: Ganiyegi Equoni-Ehi (Danger in the River), America Meredith. Pages iv-v: Silent Screaming, Roy Boney, Jr. Page vi: Top to bottom, Whirlwind; Claflin Sun-Circle; Thunder, America Meredith. Page viii: Ayvdaqualosgv Adasegogisdi (Thunder’s Victory), America Meredith.

Traditional Themes in Contemporary Cherokee Art

Contents: Foreword, MARY JO WATSON; Introduction, HEATHER AHTONE; Chapter 1, CHEROKEE COSMOLOGY, HISTORY, AND CULTURE; Chapter 2, TRANSFORMATION OF TRADITIONAL CRAFTS AND UTILITARIAN ITEMS INTO ART; Chapter 3, CONTEMPORARY CHEROKEE ART THEMES, METHODS, AND ARTISTS; Catalogue of the Exhibition; Notes; Acknowledgements and Contributors; Bibliography

Foreword: "What About Indian Art?" An Interview with Dr. Mary Jo Watson, Director, School of Art and Art History / Regents Professor of Art History. KGOU Radio Interview by Brant Morrell • April 17, 2013

Twenty years ago, a degree in Native American Art and Art History was non-existent. Even today, only a few universities offer Native Art programs, but at the University of Oklahoma Mary Jo Watson is responsible for launching a groundbreaking art program with an emphasis on the indigenous perspective. You expect a director of an art program at a major university to have pieces in their office, but entering Watson’s workspace feels like stepping into a Native art museum. -

No Meeting This Month. Instead, the Club Will Host a Fiction Master Class

ATLANTA WRITERS CLUB eQuill, July 2008 (founded in 1914)

We are a social and educational club where local writers meet to discuss the craft and business of writing. We also sponsor contests for our members and host expert speakers from the worlds of writing, publishing, and entertainment.

Agenda for July 19, 2008: No meeting this month. Instead, the club will host a Fiction Master Class with Brian Jay Corrigan for those who register, at the Holiday Inn-Select on Chamblee-Dunwoody Rd. See the full announcement and instructions for registration elsewhere in this issue.

In this issue: Passion for Words by Marty Aftewicz, President; Photos; Scratch; Accolades; Charles Frazier at Gwinnett Center; Master Fiction Class; Picnic details -

Mountain Monsters Season 6 Episode 1 Free Download

Are We Ever Going To See a Mountain Monsters Season 6? Mountain Monsters is a show that has aired for five seasons on Destination America, one of the channels that’s frequently featured on premium packages for satellite and cable television. Typically, the network deals with things that are paranormal and in some cases, they venture into the world of cryptozoology. Mountain Monsters is a show that kind of combines both of these things into a single series, and people that have seen it either love it or hate it. There really is no middle ground with this show, to say the least. The show features the AIMS team and typically, you see them going out into the backwoods of the Appalachian Mountains looking for bigfoot or other storied cryptids that people have reported seeing. -

Cherokee-English Machine Translation for Endangered Language Revitalization

ChrEn: Cherokee-English Machine Translation for Endangered Language Revitalization

Shiyue Zhang, Benjamin Frey, Mohit Bansal. UNC Chapel Hill. {shiyue, mbansal}@cs.unc.edu; [email protected]

Abstract: Cherokee is a highly endangered Native American language spoken by the Cherokee people. The Cherokee culture is deeply embedded in its language. However, there are approximately only 2,000 fluent first language Cherokee speakers remaining in the world, and the number is declining every year. To help save this endangered language, we introduce ChrEn, a Cherokee-English parallel dataset, to facilitate machine translation research between Cherokee and English. Compared to some popular machine translation language pairs, ChrEn is extremely low-resource, only containing 14k sentence pairs in total. We split our parallel data in ways that facilitate both in-domain and out-of-domain evaluation.

| | |
|---|---|
| Src. | ᎥᏝ ᎡᎶᎯ ᎠᏁᎯ ᏱᎩ, ᎾᏍᎩᏯ ᎠᏴ ᎡᎶᎯ ᎨᎢ ᏂᎨᏒᎾ ᏥᎩ. |
| Ref. | They are not of the world, even as I am not of the world. |
| SMT | It was not the things upon the earth, even as I am not of the world. |
| NMT | I am not the world, even as I am not of the world. |

Table 1: An example from the development set of ChrEn. NMT denotes our RNN-NMT model.

people: the Eastern Band of Cherokee Indians (EBCI), the United Keetoowah Band of Cherokee Indians (UKB), and the Cherokee Nation (CN). The Cherokee language, the language spoken by the Cherokee people, contributed to the survival of the Cherokee people and was historically the basic medium of transmission of arts, literature, tradi- -

To Download Information Packet

INFORMATION PACKET

General Information • Important Dates in Cherokee History • The Eastern Band of Cherokee Indians Tribal Government • Cherokee NC Fact Sheet • Eastern Cherokee Government Since 1870 • The Cherokee Clans • Cherokee Language • The Horse/Indian Names for States • Genealogy Info • Recommended Book List

Frequently Asked Questions: Short Research Papers with References • Cherokee Bows and Arrows • Cherokee Clothing • Cherokee Education • Cherokee Marriage Ceremonies • Cherokee Villages and Dwellings in the 1700s • Thanksgiving and Christmas for the Cherokee • Tobacco, Pipes, and the Cherokee

Activities • Museum Word Seek • Butterbean Game • Trail of Tears Map

Articles • “Let’s Put the Indians Back into American History” William Anderson

Museum of the Cherokee Indian

IMPORTANT DATES IN CHEROKEE HISTORY

Recently, Native American artifacts and hearths have been dated to 17,000 B.C. at the Meadowcroft site in Pennsylvania and at Cactus Hill in Virginia. Hearths in caves have been dated to 23,000 B.C. at sites on the coast of Venezuela. Native people say they have always been here. The Cherokee people say that the first man and first woman, Kanati and Selu, lived at Shining Rock, near present-day Waynesville, N.C. The old people also say that the first Cherokee village was Kituwah, located around the Kituwah Mound, which was purchased in 1997 by the Eastern Band of Cherokee Indians to become once again part of tribal lands.

10,000 BC-8,000 BC Paleo-Indian Period: People were present in North Carolina throughout this period, making seasonal rounds for hunting and gathering. Continuous occupation from 12,000 BC has been documented at Williams Island near Chattanooga, Tennessee and at some Cherokee town sites in North Carolina, including Kituhwa and Ravensford. -

Bbq Wings, •Hot Dogs, •Chicken Sausages, •Veggie Burgers, •Salads

ATLANTA WRITERS CLUB eQuill, June 2008 (founded in 1914)

We are a social and educational club where local writers meet to discuss the craft and business of writing. We also sponsor contests for our members and host expert speakers from the worlds of writing, publishing, and entertainment.

Agenda for Next Meeting, June 21, 2008: Plenty of food: bbq wings, hot dogs, chicken sausages, veggie burgers, salads, corn on the cob, desserts.

In this issue: Passion for Words by Marty Aftewicz, President; Photos; Scratch; Accolades; Charles Frazier at Gwinnett Center; Master Fiction Class; Picnic details; Terry Kay bio by G. Weinstein, Pro. Chair & 1st VP; Chattahoochee Valley -

Faren Sanders Crews

“Discovery of the New World” unleashed centuries of disease and violence that decimated American Indian populations. But it was settler colonialism, the hunger for land that fueled America’s expansion, that increasingly drove American Indians from their homelands. For Indians living in the Southeast, the Indian Removal Act of 1830 was its ultimate expression, resulting in the forced removal of an estimated 65,000 to “Indian Territory” west of the Mississippi (today’s Oklahoma). En route, as many as ten thousand people are estimated to have perished on what is now remembered as the Trail of Tears.

Removal, however, was far from complete. Estimates of Indians who remained range from as few as 4,000 to as many as 14,000, a discrepancy, scholars explain, due to who government officials counted. (Indians’ reluctance to come forward was certainly understandable.)

How did they manage to stay? By fleeing to, or already living on, inaccessible or “undesirable” land. Relying on existing state or federal treaties (usually resulting in further loss of territory). Assimilating through intermarriage and acculturation. And armed resistance.

WE NEVER LEFT celebrates contemporary artists descended from these American Indians who, against all odds, remained in the Southeast as tribes who continue to live in Alabama, Florida, Louisiana, Mississippi, North Carolina, South Carolina, and Virginia.

Their highly diverse artwork reflects engagement with tradition-inspired techniques, cutting-edge technology, and pop culture, and addresses a variety of issues: cultural preservation, language revitalization, personal identity and expression, American history, community pride, and threats to homeland and the natural environment.

THIS EXHIBITION WAS ORGANIZED BY THE MUSEUM OF ARTS AND SCIENCES, DAYTONA BEACH, FLORIDA, AND CURATED BY WALTER L. -

Heritage Resource Inventory of the Brnha 3

CHAPTER THREE: HERITAGE RESOURCE INVENTORY OF THE BRNHA

INTRODUCTION

The Blue Ridge National Heritage Area is an extraordinary place. It is significant primarily because of the quality and uniqueness of its natural assets, native and early American cultural history, musical traditions, world renowned artistry and hand craftsmanship, and rich agricultural way of life. Indeed, the BRNHA’s authorizing legislation recognized these five central characteristics as contributing notably to the region’s unique personality. These resources contain intrinsic value and serve as both the backdrop and focus of numerous recreational pursuits. While this rich heritage defines the area’s past, this management plan intends to ensure that it remains alive and well, contributing to a sustained economic vitality for the region.

This chapter attempts to comprehensively document to a degree that is practical, given time and budgetary constraints, some of the more notable heritage resources that reflect the five heritage themes for which the BRNHA is responsible for managing. It serves as a baseline from which additional inventory efforts can build. It is intended to serve as a reference over time in BRNHA’s internal planning and decision making. It should remind BRNHA of what specifically is significant about this Heritage Area, particularly within the context of its authorizing legislation. It can help inform the establishment of priorities and annual operational plans. This inventory should also prove useful for potential implementation partners as they formulate proposals to help preserve, develop, and interpret these resources. Finally, this list has been used in the analysis of potential environmental impacts from various possible management alternatives explored under the environmental assessment process. -

Wanderer COMING HOME to a NEW PLACE H H H H H H H H

wanderer: A ROAD LESS TRAVELED

COMING HOME TO A NEW PLACE: WHITESIDE MOUNTAIN, NC

Written and Photographed by Jennifer Kornegay. Photograph by emranashraf.

IT WAS A BRAND NEW SPOT TO ME, BUT MY CONNECTION, THOUGH INSTANT, FELT ANCIENT, LIKE THE MOUNTAIN ITSELF.

Once shrouded in the cover of primeval forest and barely touched by man, Whiteside Mountain in western North Carolina is today more comparable to the Disney World of hiking. The panoramic views of the Blue Ridge range’s sharper peaks transitioning to the soft, rounded hills of South Carolina’s Piedmont visible from its 4,900-foot-high summit draw throngs of people each summer and up to 100,000 over the course of any year. I am frequently part of the congestion. Anytime I’m in the area, I make the trek too. And yet, even when crowds surround me on the two-mile-loop trail, I can feel the [...]

[...] part of the Cherokee Nation, and it holds a dark spot in that culture’s lore. According to one Cherokee story, Whiteside was home to Spearfinger, a female monster who preyed on children. In the mid-1800s, when the Cherokee were forcibly removed from these lands with so many others, it was rumored that several families took refuge on the mountain, eluding detection for decades. Somewhere on its slopes is a cave used as a hideout by Confederate deserters. The mysteries and secrets tucked into the mountain’s multi-layered past are numerous. But I think it also contains deeply personal histories for people who, like me, have fallen under its spell. -

Progressive Traditions: Cherokee Cultural Studies

PROGRESSIVE TRADITIONS: CHEROKEE CULTURAL STUDIES A Dissertation Presented to the Faculty of the Graduate School of Cornell University In Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy by Joshua Bourne Nelson February 2010 © 2010 Joshua Bourne Nelson PROGRESSIVE TRADITIONS: CHEROKEE CULTURAL STUDIES Joshua Bourne Nelson, Ph. D. Cornell University 2010 My dissertation intervenes in prevalent debates between nationalists and cosmopolitans that have dominated American Indian literary theory and displaced alternative questions about the empowering potential of local identities. Both positions too often categorize American Indian literature with a reductive dichotomy that opposes traditionalism against assimilation, resulting in the seizing of mechanisms that coordinate change and in the unwarranted archival exclusion of many authors—such as those I discuss—who speak to historical problems with present effects. Seeing past this dialectic requires a fresh look at what precisely is gathered under “assimilation” and “traditionalism”—a reassessment I initiate by arguing that Cherokee representations of dynamic, agentive identities proceed from traditional, adaptive strategies for addressing cultural and historical dilemmas. My project is organized into two sections of two chapters, the first of which introduce principled practices from several scholarly perspectives then applied to primary texts in the subsequent chapters. In the first, I examine an array of traditional religious dispositions and the critical theories -

Fall 2019 Newsletter & Calendar of Events

FALL 2019 NEWSLETTER & CALENDAR OF EVENTS

MORE THAN A CENTURY OF ART, PG 2; NEW ACQUISITIONS, PG 14; CALENDAR OF EVENTS, PG 19

FROM THE DIRECTOR: History, Art, and Culture

The Tennessee State Museum is often considered a history museum, but a look inside of our collection shows emphasis in art and culture as well. It has long collected and exhibited art together with artifacts, focusing on both individual pieces significant to the history of the state, and artists that have called the state home while pushing the form forward. Decorative arts can also often tell us as much about the periods in which they were created as material culture. In this issue, we focus on Tennessee art and artists during a time of rapid growth in both art influence and style, after the turn of the 19th century. Senior curator of art and architecture, Jim Hoobler, is your guide through notable paintings, sculpture and furniture from the Museum’s collection, much of it on display now. Elsewhere in the magazine, we highlight important new acquisitions that further inform our understanding of Tennessee history, like a sign denoting segregation on Nashville streetcars during the early 1900s. In our collection highlight, we delve deeper into state prehistory, by examining remarkably preserved Native American artifacts from the Woodland period. This season also marks one year since we opened our doors at our new location. Our By-The-Numbers section takes a fun look at how much we’ve grown, and who we’ve served, both in the building and throughout the state. -

Full Schedule

2019 EVENTS & STORIES SCHEDULE The icons below denote how scary each story is: Everyone Spooky Very Scary EVERY 15 MINUTES Night at the 12:30 pm Bell Witch from 10:15 am–2:30 pm Print Shop Bell Witch (Change and Challenge Gallery) Spearfinger (Forging a Nation Gallery) (Change and (Temporary Gallery 3) Challenge Gallery) 11:30 am–12:30 pm Night at the Mary at Storytellers Night at Print Shop the Orpheum the Print Shop (Forging a Nation Chip Bailey and Gallery) (Change and Jennifer Alldridge (Forging a Nation Gallery) Challenge Gallery) (Digital Learning Center) 12:30–1:00 pm 2:00 pm Frontier 11:30 am Nashville Night at the Fault Line Bell Witch Puppet Truck Print Shop (Forging a Nation (Forging a Nation (Change and (Digital Learning Center) Gallery) Gallery) Challenge Gallery) 1:00 pm 10:30 am Night at the Bell Witch Bell Witch (Change and Bell Witch Print Shop (Change and (Change and Challenge Gallery) (Forging a Nation Challenge Gallery) Challenge Gallery) Gallery) 2:30 pm Night at Night at the Costume Bell Witch the Print Shop Print Shop Parade (Change and (Forging a Nation Gallery) Challenge Gallery) (Forging a Nation (Grand Hall) Gallery) 1:00–2:00 pm Night at 12:00 pm Storytellers Nashville the Print Shop Bell Witch Chip Bailey and Puppet Truck (Forging a Nation (Change and Jennifer Alldridge Gallery) (Digital Learning Center) Challenge Gallery) (Digital Learning Center) 11:00 am Night at the 2:00-2:30 pm 1:30 pm Bell Witch Print Shop Nashville (Change and (Forging a Nation Costume Parade Puppet Truck Challenge Gallery) Gallery) (Grand Hall) (Digital Learning Center)

Night at the Print Shop: Learn about how two friendly ghosts helped save a print shop on the Tennessee frontier.

Spearfinger: Come hear the Cherokee story of