“What Is an 'Artificial Intelligence Arms Race' Anyway?”


Recommended publications

Pr-Dvd-Holdings-As-Of-September-18

CALL # LOCATION TITLE AUTHOR BINGE BOX COMEDIES prmnd Comedies binge box (includes Airplane! --Ferris Bueller's Day Off --The First Wives Club --Happy Gilmore) [videorecording] / Princeton Public Library. BINGE BOX CONCERTS AND MUSICIANS prmnd Concerts and musicians binge box (includes Brad Paisley: Life Amplified Live Tour, Live from WV --Close to You: Remembering the Carpenters --John Sebastian Presents Folk Rewind: My Music --Roy Orbison and Friends: Black and White Night) [videorecording] / Princeton Public Library. BINGE BOX MUSICALS prmnd Musicals binge box (includes Mamma Mia! --Moulin Rouge --Rodgers and Hammerstein's Cinderella [DVD] --West Side Story) [videorecording] / Princeton Public Library. BINGE BOX ROMANTIC COMEDIES prmnd Romantic comedies binge box (includes Hitch --P.S. I Love You --The Wedding Date --While You Were Sleeping) [videorecording] / Princeton Public Library. DVD 001.942 ALI DISC 1-3 prmdv Aliens, abductions & extraordinary sightings [videorecording]. DVD 001.942 BES prmdv Best of ancient aliens [videorecording] / A&E Television Networks History executive producer, Kevin Burns. DVD 004.09 CRE prmdv The creation of the computer [videorecording] / executive producer, Bob Jaffe written and produced by Donald Sellers created by Bruce Nash History channel executive producers, Charlie Maday, Gerald W. Abrams Jaffe Productions Hearst Entertainment Television in association with the History Channel. DVD 133.3 UNE DISC 1-2 prmdv The unexplained [videorecording] / produced by Towers Productions, Inc. for A&E Network executive producer, Michael Cascio. DVD 158.2 WEL prmdv We'll meet again [videorecording] / producers, Simon Harries [and three others] director, Ashok Prasad [and five others]. DVD 158.2 WEL prmdv We'll meet again. Season 2 [videorecording] / director, Luc Tremoulet producer, Page Shepherd. -

2013 Movie Catalog © Warner Bros

Swank Motion Pictures, Inc. 2013 Movie Catalog. 1-800-876-5577 | www.swank.com. © Warner Bros. © 2013 Disney © 2013 Disney/Pixar © TriStar Pictures © NBC Universal © Columbia Pictures Industries, Inc. © Summit Entertainment © New Line Cinema TM & © Marvel & Subs. Promote your movie event! Ask about FREE promotional materials to help make your next movie event a success! Swank has rights to the largest collection of movies from the top Hollywood & independent studios. Whether it’s blockbuster movies, action and suspense, comedies or classic films, Swank has them all! TABLE OF CONTENTS: New Releases 1-34; Coming Soon 35; All Time Favorites 36-39; Event Calendar 40; Classics 41-42; Date Night 43-44; Environmental Films 45; Faith-Based -

Complicated Views: Mainstream Cinema's Representation of Non

University of Southampton Research Repository Copyright © and Moral Rights for this thesis and, where applicable, any accompanying data are retained by the author and/or other copyright owners. A copy can be downloaded for personal non-commercial research or study, without prior permission or charge. This thesis and the accompanying data cannot be reproduced or quoted extensively from without first obtaining permission in writing from the copyright holder/s. The content of the thesis and accompanying research data (where applicable) must not be changed in any way or sold commercially in any format or medium without the formal permission of the copyright holder/s. When referring to this thesis and any accompanying data, full bibliographic details must be given, e.g. Thesis: Author (Year of Submission) "Full thesis title", University of Southampton, name of the University Faculty or School or Department, PhD Thesis, pagination. Data: Author (Year) Title. URI [dataset] University of Southampton Faculty of Arts and Humanities Film Studies Complicated Views: Mainstream Cinema’s Representation of Non-Cinematic Audio/Visual Technologies after Television. DOI: by Eliot W. Blades Thesis for the degree of Doctor of Philosophy May 2020 University of Southampton Abstract Faculty of Arts and Humanities Department of Film Studies Thesis for the degree of Doctor of Philosophy Complicated Views: Mainstream Cinema’s Representation of Non-Cinematic Audio/Visual Technologies after Television. by Eliot W. Blades This thesis examines a number of mainstream fiction feature films which incorporate imagery from non-cinematic moving image technologies. The period examined ranges from the era of the widespread success of television (i.e. -

Master Class with Douglas Trumbull: Selected Filmography 1 the Higher Learning Staff Curate Digital Resource Packages to Complem

Master Class with Douglas Trumbull: Selected Filmography The Higher Learning staff curate digital resource packages to complement and offer further context to the topics and themes discussed during the various Higher Learning events held at TIFF Bell Lightbox. These filmographies, bibliographies, and additional resources include works directly related to guest speakers’ work and careers, and provide additional inspirations and topics to consider; these materials are meant to serve as a jumping-off point for further research. Please refer to the event video to see how topics and themes relate to the Higher Learning event. * mentioned or discussed during the master class ^ special effects by Douglas Trumbull Early Special Effects Films (Pre-1968) The Execution of Mary, Queen of Scots. Dir. Alfred Clark, 1895, U.S.A. 1 min. Production Co.: Edison Manufacturing Company. The Vanishing Lady (Escamotage d’une dame au théâtre Robert Houdin). Dir. Georges Méliès, 1896, France. 1 min. Production Co.: Théâtre Robert-Houdin. A Railway Collision. Dir. Walter R. Booth, 1900, U.K. 1 min. Production Co.: Robert W. Paul. A Trip to the Moon (Le voyage dans la lune). Dir. Georges Méliès, 1902, France. 14 mins. Production Co.: Star-Film. A Trip to Mars. Dir. Ashley Miller, 1910, U.S.A. 5 mins. Production Co.: Edison Manufacturing Company. The Conquest of the Pole (À la conquête du pôle). Dir. Georges Méliès, 1912, France. 33 mins. Production Co.: Star-Film. *Intolerance: Love’s Struggle Throughout the Ages. Dir. D.W. Griffith, 1916, U.S.A. 197 mins. Production Co.: Triangle Film Corporation / Wark Producing. The Ten Commandments. -

Humanity and Heroism in Blade Runner 2049 Ridley

Through Galatea’s Eyes: Humanity and Heroism in Blade Runner 2049 Ridley Scott’s 1982 Blade Runner depicts a futuristic world, where sophisticated androids called replicants, following a replicant rebellion, are being tracked down and “retired” by officers known as “blade runners.” The film raises questions about what it means to be human and memories as social conditioning. In the sequel, Blade Runner 2049, director Denis Villeneuve continues to probe similar questions, including what gives humans inalienable worth and at what point post-humans participate in this worth. 2049 follows the story of a blade runner named K, who is among the model of replicants designed to be more obedient. Various clues cause K to suspect he may have been born naturally as opposed to manufactured. Themes from the Pinocchio story are interwoven in K’s quest to discover if he is, in fact, a “real boy.” At its core Pinocchio is a Pygmalion story from the creature’s point of view. It is apt then that most of 2049 revolves around replicants, with humans as the supporting characters. By reading K through multiple mythological figures, including Pygmalion, Telemachus, and Oedipus, this paper will examine three hallmarks of humanity suggested by Villeneuve’s film: natural birth, memory, and self-sacrifice. These three qualities mark the stages of a human life but particularly a mythological hero’s life. Ultimately, only one of these can truly be said to apply to K, but it will be sufficient for rendering him a “real boy” nonetheless. Although Pinocchio stories are told from the creatures’ point of view, they are still based on what human authors project onto the creature. -

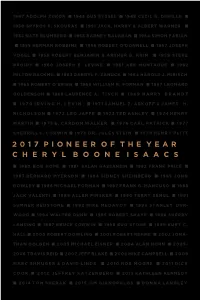

2017 Pioneer of the Year C H E R Y L B O O N E I S a A

1947 ADOLPH ZUKOR; 1948 GUS EYSSEL; 1949 CECIL B. DEMILLE; 1950 SPYROS P. SKOURAS; 1951 JACK, HARRY & ALBERT WARNER; 1952 NATE BLUMBERG; 1953 BARNEY BALABAN; 1954 SIMON FABIAN; 1955 HERMAN ROBBINS; 1956 ROBERT O’DONNELL; 1957 JOSEPH VOGEL; 1958 ROBERT BENJAMIN & ARTHUR B. KRIM; 1959 STEVE BROIDY; 1960 JOSEPH E. LEVINE; 1961 ABE MONTAGUE; 1962 MILTON RACKMIL; 1963 DARRYL F. ZANUCK; 1964 HAROLD J. MIRISCH; 1965 ROBERT O’BRIEN; 1966 WILLIAM R. FORMAN; 1967 LEONARD GOLDENSON; 1968 LAURENCE A. TISCH; 1969 HARRY BRANDT; 1970 IRVING H. LEVIN; 1971 SAMUEL Z. ARKOFF & JAMES H. NICHOLSON; 1972 LEO JAFFE; 1973 TED ASHLEY; 1974 HENRY MARTIN; 1975 E. CARDON WALKER; 1976 CARL PATRICK; 1977 SHERRILL C. CORWIN; 1978 DR. JULES STEIN; 1979 HENRY PLITT; 1980 BOB HOPE; 1981 SALAH HASSANEIN; 1982 FRANK PRICE; 1983 BERNARD MYERSON; 1984 SIDNEY SHEINBERG; 1985 JOHN ROWLEY; 1986 MICHAEL FORMAN; 1987 FRANK G. MANCUSO; 1988 JACK VALENTI; 1989 ALLEN PINSKER; 1990 TERRY SEMEL; 1991 SUMNER REDSTONE; 1992 MIKE MEDAVOY; 1993 STANLEY DURWOOD; 1994 WALTER DUNN; 1995 ROBERT SHAYE; 1996 SHERRY LANSING; 1997 BRUCE CORWIN; 1998 BUD STONE; 1999 KURT C. HALL; 2000 ROBERT DOWLING; 2001 ROBERT REHME; 2002 JONATHAN DOLGEN; 2003 MICHAEL EISNER; 2004 ALAN HORN; 2005–2006 TRAVIS REID; 2007 JEFF BLAKE; 2008 MIKE CAMPBELL; 2009 MARC SHMUGER & DAVID LINDE; 2010 ROB MOORE; 2011 DICK COOK; 2012 JEFFREY KATZENBERG; 2013 KATHLEEN KENNEDY; 2014 TOM SHERAK; 2015 JIM GIANOPULOS; DONNA LANGLEY. 2017 PIONEER OF THE YEAR CHERYL BOONE ISAACS. Welcome. On behalf of the Board of Directors of the Will Rogers Motion Picture Pioneers Foundation, thank you for attending the 2017 Pioneer of the Year Dinner. -

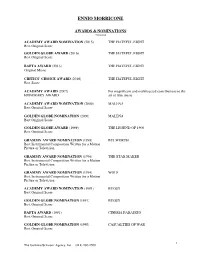

Ennio Morricone

ENNIO MORRICONE AWARDS & NOMINATIONS *selected BAFTA NOMINATION (2016) THE HATEFUL EIGHT Original Music GOLDEN GLOBE NOMINATION (2016) THE HATEFUL EIGHT Best Original Score CRITICS’ CHOICE AWARD NOMINATION THE HATEFUL EIGHT (2016) Best Score ACADEMY AWARD (2007) For magnificent and multifaceted contributions to the HONORARY AWARD art of film music ACADEMY AWARD NOMINATION (2000) MALENA Best Original Score GOLDEN GLOBE NOMINATION (2000) MALENA Best Original Score GOLDEN GLOBE AWARD (1999) THE LEGEND OF 1900 Best Original Score GRAMMY AWARD NOMINATION (1998) BULWORTH Best Instrumental Composition Written for a Motion Picture or Television GRAMMY AWARD NOMINATION (1996) THE STAR MAKER Best Instrumental Composition Written for a Motion Picture or Television GRAMMY AWARD NOMINATION (1994) WOLF Best Instrumental Composition Written for a Motion Picture or Television ACADEMY AWARD NOMINATION (1991) BUGSY Best Original Score GOLDEN GLOBE NOMINATION (1991) BUGSY Best Original Score BAFTA AWARD (1991) CINEMA PARADISO Best Original Score GOLDEN GLOBE NOMINATION (1990) CASUALTIES OF WAR Best Original Score 1 The Gorfaine/Schwartz Agency, Inc. (818) 260-8500 ENNIO MORRICONE ACADEMY AWARD NOMINATION (1987) THE UNTOUCHABLES Best Original Score BAFTA AWARD (1988) THE UNTOUCHABLES Best Original Score GOLDEN GLOBE NOMINATION (1988) THE UNTOUCHABLES Best Original Score BAFTA AWARD (1987) THE MISSION Best Original Score ACADEMY AWARD NOMINATION (1986) THE MISSION Best Original Score GOLDEN GLOBE AWARD (1986) THE MISSION Best Original Score BAFTA AWARD (1985) ONCE UPON A TIME IN AMERICA Best Original Score GOLDEN GLOBE NOMINATION (1985) ONCE UPON A TIME IN AMERICA Best Original Score BAFTA AWARD (1980) DAYS OF HEAVEN Anthony Asquith Award for Film Music ACADEMY AWARD NOMINATION (1979) DAYS OF HEAVEN Best Original Score MOTION PICTURES THE HATEFUL EIGHT Richard N. -

Ennio Morricone

ENNIO MORRICONE AWARDS & NOMINATIONS *selected ACADEMY AWARD NOMINATION (2015) THE HATEFUL EIGHT Best Original Score GOLDEN GLOBE AWARD (2016) THE HATEFUL EIGHT Best Original Score BAFTA AWARD (2016) THE HATEFUL EIGHT Original Music CRITICS’ CHOICE AWARD (2016) THE HATEFUL EIGHT Best Score ACADEMY AWARD (2007) For magnificent and multifaceted contributions to the HONORARY AWARD art of film music ACADEMY AWARD NOMINATION (2000) MALENA Best Original Score GOLDEN GLOBE NOMINATION (2000) MALENA Best Original Score GOLDEN GLOBE AWARD (1999) THE LEGEND OF 1900 Best Original Score GRAMMY AWARD NOMINATION (1998) BULWORTH Best Instrumental Composition Written for a Motion Picture or Television GRAMMY AWARD NOMINATION (1996) THE STAR MAKER Best Instrumental Composition Written for a Motion Picture or Television GRAMMY AWARD NOMINATION (1994) WOLF Best Instrumental Composition Written for a Motion Picture or Television ACADEMY AWARD NOMINATION (1991) BUGSY Best Original Score GOLDEN GLOBE NOMINATION (1991) BUGSY Best Original Score BAFTA AWARD (1991) CINEMA PARADISO Best Original Score GOLDEN GLOBE NOMINATION (1990) CASUALTIES OF WAR Best Original Score 1 The Gorfaine/Schwartz Agency, Inc. (818) 260-8500 ENNIO MORRICONE ACADEMY AWARD NOMINATION (1987) THE UNTOUCHABLES Best Original Score BAFTA AWARD (1988) THE UNTOUCHABLES Best Original Score GOLDEN GLOBE NOMINATION (1988) THE UNTOUCHABLES Best Original Score BAFTA AWARD (1987) THE MISSION Best Original Score ACADEMY AWARD NOMINATION (1986) THE MISSION Best Original Score GOLDEN GLOBE AWARD (1986) THE MISSION Best Original Score BAFTA AWARD (1985) ONCE UPON A TIME IN AMERICA Best Original Score GOLDEN GLOBE NOMINATION (1985) ONCE UPON A TIME IN AMERICA Best Original Score BAFTA AWARD (1980) DAYS OF HEAVEN Anthony Asquith Award for Film Music ACADEMY AWARD NOMINATION (1979) DAYS OF HEAVEN Best Original Score MOTION PICTURES THE HATEFUL EIGHT Richard N. -

Digital Art/Paint System Survey

Digital Art/Paint System Survey. ROBERT RIVLIN. The most fundamental question to be addressed in this survey is, What is a digital art/paint system? Secondly, the survey provides a basis for comparison among the different systems, designed to show where they are similar, where they vary, and prompt you to directly contact those manufacturers whose systems you wish to evaluate seriously. It is clear, now that the dust from last year's NAB show is beginning to settle, that we have seen, in the course of just the past year alone, the birth of a brand new type of image creation product-the digital art/paint system. Its predecessors pre-date it by several years, including the NEC Action Track/Digital Strobe Action unit that uses extensive digital processing and a digital framestore to generate often abstract images out of existing video [...] three-dimensional letters, or complex computerized "mapping" designs, then output the results a frame at a time, usually onto film, taking up to six minutes to assemble each frame of a complex sequence. The systems outlined here are designed to perform "down and dirty" artwork-artwork which is, in the words of Peter Black of Xiphias, "short turnaround/short burst." In typical newsroom applications, the images will be created within minutes, then displayed, usually compressed into a box wipe, for seconds. Though some of the systems possess the ability to perform "limited animation"-by having a finished picture build up from scratch, for instance, or by having colors flash on and off simulating motion, these systems are not designed to produce animation effects. -

Film Locations in San Francisco

Film Locations in San Francisco Title Release Year Locations A Jitney Elopement 1915 20th and Folsom Streets A Jitney Elopement 1915 Golden Gate Park Greed 1924 Cliff House (1090 Point Lobos Avenue) Greed 1924 Bush and Sutter Streets Greed 1924 Hayes Street at Laguna The Jazz Singer 1927 Coffee Dan's (O'Farrell Street at Powell) Barbary Coast 1935 After the Thin Man 1936 Coit Tower San Francisco 1936 The Barbary Coast San Francisco 1936 City Hall Page 1 of 588 10/02/2021 Film Locations in San Francisco Fun Facts Production Company The Essanay Film Manufacturing Company During San Francisco's Gold Rush era, the The Essanay Film Manufacturing Company Park was part of an area designated as the "Great Sand Waste". In 1887, the Cliff House was severely Metro-Goldwyn-Mayer (MGM) damaged when the schooner Parallel, abandoned and loaded with dynamite, ran aground on the rocks below. Metro-Goldwyn-Mayer (MGM) Metro-Goldwyn-Mayer (MGM) Warner Bros. Pictures The Samuel Goldwyn Company The Tower was funded by a gift bequeathed Metro-Goldwyn Mayer by Lillie Hitchcock Coit, a socialite who reportedly liked to chase fires. Though the tower resembles a firehose nozzle, it was not designed this way. The Barbary Coast was a red-light district Metro-Goldwyn Mayer that was largely destroyed in the 1906 earthquake. Though some of the establishments were rebuilt after the earthquake, an anti-vice campaign put the establishments out of business. The dome of SF's City Hall is almost a foot Metro-Goldwyn Mayer Page 2 of 588 10/02/2021 Film Locations in San Francisco Distributor Director Writer General Film Company Charles Chaplin Charles Chaplin General Film Company Charles Chaplin Charles Chaplin Metro-Goldwyn-Mayer (MGM) Eric von Stroheim Eric von Stroheim Metro-Goldwyn-Mayer (MGM) Eric von Stroheim Eric von Stroheim Metro-Goldwyn-Mayer (MGM) Eric von Stroheim Eric von Stroheim Warner Bros. -

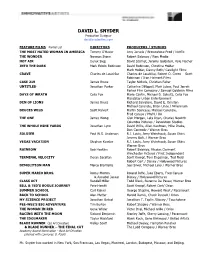

DAVID L. SNYDER Production Designer Davidlsnyderfilms.Com

DAVID L. SNYDER Production Designer davidlsnyderfilms.com FEATURE FILMS Partial List DIRECTORS PRODUCERS / STUDIOS THE MOST HATED WOMAN IN AMERICA Tommy O’Haver Amy Jarvela / Brownstone Prod / Netflix THE WONDER Norman Stone Robert Sidaway / Parc Media HOT AIR Derek Sieg David Szamet, Jeremy Goldstein, Kyle Fischer INTO THE DARK Mark Edwin Robinson David Robinson, Christine Holder Mark Holder, Danny Roth / Castlight Films CRAVE Charles de Lauzirika Charles de Lauzirika, Robert O. Green Scott Robinson / Iron Helment Films CASE 219 James Bruce Taylor Nichols, Christian Faber UNTITLED Jonathan Parker Catherine DiNaPoli, Matt Luber, Paul Jarrett Parker Film Company / Samuel Goldwyn Films DAYS OF WRATH Celia Fox Marie Cantin, Michael D. Schultz, Celia Fox Mandalay Urban Entertainment DEN OF LIONS James Bruce Richard Salvatore, David E. Ornston Michael Cerenzie, Brian Linse / Millennium DEUCES WILD Scott Kalvert Martin Scorsese, Michael Cerenzie, Fred Caruso / MGM / UA THE ONE James Wong Glen Morgan, Lata Ryan, Charles Newirth Columbia Pictures / Revolution Studios THE WHOLE NINE YARDS Jonathan Lynn David Willis, Allan Kaufman, Mike Drake, Don Carmody / Warner Bros SOLDIER Paul W.S. Anderson R.J. Louis, Jerry Weintraub, Susan Ekins Jeremy Bolt / Warner Bros VEGAS VACATION Stephen Kessler R.J. Louis, Jerry Weintraub, Susan Ekins Warner Bros RAINBOW Bob Hoskins Robert Sidaway, Nicolas Clermont Winchester Pictures / First Independent TERMINAL VELOCITY Deran Sarafian Scott Kroopf, Tom Engelman, Ted Field Robert Cort / Disney / Hollywood Pictures DEMOLITION MAN Marco Brambilla Joel Silver, Michael Levy / Warner Bros SUPER MARIO BROS. Rocky Morton Roland Joffe, Jake Eberts, Fred Caruso & Annabel Jankel Disney / Hollywood Pictures CLASS ACT Randall Miller Todd Black, Suzanne De Passe / Warner Bros BILL & TED’S BOGUS JOURNEY Pete Hewitt Scott Kroopf, Robert Cort / Orion SUMMER SCHOOL Carl Reiner George Shapiro, Howard West / Paramount BACK TO SCHOOL Alan Metter Harold Ramis, Chuck Russell / Orion MY SCIENCE PROJECT Jonathan Betuel Jonathan T. -

Bad Lawyers in the Movies

Nova Law Review, Volume 24, Issue 2 (2000), Article 3. Bad Lawyers in the Movies. Michael Asimow. Copyright © 2000 by the authors. Nova Law Review is produced by The Berkeley Electronic Press (bepress). https://nsuworks.nova.edu/nlr
TABLE OF CONTENTS
I. INTRODUCTION 533
II. THE POPULAR PERCEPTION OF LAWYERS 536
III. DOES POPULAR LEGAL CULTURE FOLLOW OR LEAD PUBLIC OPINION ABOUT LAWYERS? 549
A. Popular Culture as a Follower of Public Opinion 549
B. Popular Culture as a Leader of Public Opinion-The Relevant Interpretive Community 550
C. The Cultivation Effect 553
D. Lawyer Portrayals on Television as Compared to Movies 556
IV. PORTRAYALS OF LAWYERS IN THE MOVIES 561
A. Methodological Issues 562
B. Movie lawyers: 1929-1969 569
C. Movie lawyers: the 1970s 576
D. Movie lawyers: 1980s and 1990s 576
1. Lawyers as Bad People 578
2. Lawyers as Bad Professionals