Operating Systems

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

NTFS • Windows Reinstallation – Bypass ACL • Administrators Privilege – Bypass Ownership

Windows Encrypting File System (www.winitor.com, 01 March 2010)
Motivation • Laptops are deeply integrated into enterprises… • Stolen/lost computers loaded with confidential/business data • Data privacy issues • Offline access – Bypass NTFS • Windows reinstallation – Bypass ACL • Administrators privilege – Bypass Ownership
Mechanism • Principle • A random, unique, symmetric key encrypts the data • An asymmetric key encrypts the symmetric key used to encrypt the data • Combination of two algorithms • Use their strengths • Minimize their weaknesses • Results • Increased performance • Increased security [Figure: Asymmetric, Symmetric, Data]
Characteristics • Convenient • Applying encryption is just a matter of assigning a file attribute • Transparent • Integrated into the operating system • Transparent to (valid) users/applications [Figure: Application, Win32, Crypto Engine, NTFS, EFS, encrypted data] • Flexible • Supported at different scopes • File, Directory, Drive (Vista?) • Files can be shared between any number of users • Files can be stored anywhere • local, remote, WebDAV • Files can be offline • Secure • Encryption and decryption occur in kernel mode • Keys are never paged • Usage of standardized cryptography services
Availability • At the GUI, the availability -
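The two-key principle in the excerpt above (a random symmetric key encrypts the data, and an asymmetric key encrypts that symmetric key) can be sketched in a few lines. This is a toy illustration only, not how EFS is implemented: the textbook-sized RSA numbers, the SHA-256 based XOR keystream, and every function name here are assumptions chosen so the sketch runs with the Python standard library alone. None of it is secure.

```python
import hashlib
import secrets

# Toy RSA key pair built from tiny textbook primes (assumption, NOT secure).
P, Q = 61, 53
N = P * Q      # public modulus, 3233
E = 17         # public exponent
D = 2753       # private exponent: (E * D) % ((P - 1) * (Q - 1)) == 1


def keystream(key: bytes, length: int) -> bytes:
    """Derive `length` pseudo-random bytes from the symmetric key."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def symmetric_crypt(key: bytes, data: bytes) -> bytes:
    """XOR the data with the keystream; the same call encrypts and decrypts."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))


def wrap_key(key: bytes) -> list[int]:
    """Encrypt the symmetric key byte by byte with the public key."""
    return [pow(b, E, N) for b in key]


def unwrap_key(wrapped: list[int]) -> bytes:
    """Recover the symmetric key with the private key."""
    return bytes(pow(c, D, N) for c in wrapped)


def encrypt_file(plaintext: bytes) -> tuple[list[int], bytes]:
    """Return (wrapped symmetric key, ciphertext) for one file."""
    file_key = secrets.token_bytes(16)  # random, unique per file
    return wrap_key(file_key), symmetric_crypt(file_key, plaintext)


def decrypt_file(wrapped: list[int], ciphertext: bytes) -> bytes:
    """Unwrap the symmetric key, then decrypt the data with it."""
    return symmetric_crypt(unwrap_key(wrapped), ciphertext)
```

The fast symmetric step handles the bulk data, while the slow asymmetric step only touches the 16-byte key, which is the performance and security trade-off the excerpt describes.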

CSE 220: Systems Programming Input and Output

CSE 220: Systems Programming, Input and Output. Ethan Blanton, Department of Computer Science and Engineering, University at Buffalo. © 2020 Ethan Blanton / CSE 220: Systems Programming
Outline: Introduction, Unix I/O, Standard I/O, Buffering, Summary, References
I/O Kernel Services: We have seen some text I/O using the C Standard Library: printf(), fgetc(), … However, all I/O is built on kernel system calls. In this lecture, we’ll look at those services vs. standard I/O.
Everything is a File: These services are particularly important on Unix systems. On Unix, “everything is a file”. Many devices and services are accessed by opening device nodes. Device nodes behave like (but are not) files. Examples: /dev/null: Always readable, contains no data. Always writable, discards anything written to it. /dev/urandom: Always readable, reads a cryptographically secure stream of random data.
File Descriptors: All access to files is through file descriptors. A file descriptor is a small integer representing an open file in a particular process. There are three “standard” file descriptors: 0: standard input, 1: standard output, 2: standard error …sound familiar? (stdin, stdout, stderr)
System Call Failures: Kernel I/O (and most other) system calls return -1 on failure. When this happens, the global variable errno is set to a reason. Include errno.h to define errno in your code. -
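The file descriptor and errno behaviour described in the excerpt above can be tried out from Python, whose `os` module exposes thin wrappers over the same kernel calls. This is a minimal sketch assuming a Unix-like system with /dev/null; the helper names are hypothetical. Note one difference from C: instead of returning -1, Python raises `OSError`, and the errno value is carried in its `errno` attribute.

```python
import errno
import os


def write_to_dev_null(data: bytes) -> int:
    """Open /dev/null by file descriptor and write to it.

    Returns the number of bytes the kernel accepted (the data is discarded).
    """
    fd = os.open("/dev/null", os.O_WRONLY)  # fd is a small integer
    try:
        return os.write(fd, data)
    finally:
        os.close(fd)


def errno_for_open(path: str) -> int:
    """Try to open `path` read-only and return the errno value, or 0 on success."""
    try:
        fd = os.open(path, os.O_RDONLY)
    except OSError as exc:
        # Python surfaces the failed system call as an exception
        # carrying the errno value the kernel reported.
        return exc.errno
    os.close(fd)
    return 0
```

For example, `errno_for_open` on a path that does not exist returns `errno.ENOENT`, which `os.strerror` turns into a readable message.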

Active @ UNDELETE Users Guide | TOC | 2

Active@ UNDELETE Users Guide: Contents
Legal Statement
Active@ UNDELETE Overview
Getting Started with Active@ UNDELETE
Active@ UNDELETE Views And Windows
Recovery Explorer View
Logical Drive Scan Result View
Physical Device Scan View
Search Results View
Application Log
Welcome View
Using Active@ UNDELETE Overview
Recover deleted Files and Folders
Scan a Volume (Logical Drive) for deleted files -

File Manager Manual

FileManager Operations Guide for Unisys MCP Systems, Release 9.069W, November 2017
Copyright: This document is protected by Federal Copyright Law. It may not be reproduced, transcribed, copied, or duplicated by any means to or from any media, magnetic or otherwise without the express written permission of DYNAMIC SOLUTIONS INTERNATIONAL, INC. It is believed that the information contained in this manual is accurate and reliable, and much care has been taken in its preparation. However, no responsibility, financial or otherwise, can be accepted for any consequence arising out of the use of this material. THERE ARE NO WARRANTIES WHICH EXTEND BEYOND THE PROGRAM SPECIFICATION.
Correspondence regarding this document should be addressed to: Dynamic Solutions International, Inc., Product Development Group, 373 Inverness Parkway Suite 110, Englewood, Colorado 80112, (800)641-5215 or (303)754-2000. Technical Support Hot-Line (800)332-9020. E-Mail: [email protected]
Contents: OVERVIEW • FILEMANAGER CONSIDERATIONS • FileManager File Tracking • File Recovery -

Introduction to Unix

Introduction to Unix. Rob Funk <[email protected]>, University Technology Services, Workstation Support, http://wks.uts.ohio-state.edu/
Course Objectives • basic background in Unix structure • knowledge of getting started • directory navigation and control • file maintenance and display commands • shells • Unix features • text processing
Course Objectives: Useful commands • working with files • system resources • printing • vi editor
In the Introduction to UNIX document (3) • shell programming • Unix command summary tables • short Unix bibliography (also see web site). We will not, however, be covering these topics in the lecture. Numbers on slides indicate page number in book.
History of Unix (7–8) • 1960s: Multics project (MIT, GE, AT&T) • 1970s: AT&T Bell Labs • 1970s/80s: UC Berkeley • 1980s: DOS imitated many Unix ideas; commercial Unix fragmentation; GNU Project • 1990s: Linux • now: Unix is widespread and available from many sources, both free and commercial
Unix Systems (7–8) • SunOS/Solaris: Sun Microsystems • Digital Unix (Tru64): Digital/Compaq • HP-UX: Hewlett Packard • Irix: SGI • UNICOS: Cray • NetBSD, FreeBSD: UC Berkeley / the Net • Linux: Linus Torvalds / the Net
Unix Philosophy • Multiuser / Multitasking • Toolbox approach • Flexibility / Freedom • Conciseness • Everything is a file • File system has places, processes have life • Designed by programmers for programmers -

Partitioner Och Filsystem 2

Partitions and File Systems 2: File systems, FAT, Unix-like, NTFS
What is a file system? • Computers need a method for storing and retrieving data… • Reference model for file systems (Carrier) – File system category • Layout and size information – Content category • Clusters and blocks (data units) – Metadata category • Time information, size, access control • Addresses of allocated data units – File name category • Usually tied to the metadata – Application category • Quotas • Journals • The most modern file systems closely resemble relational databases
Windows • NTFS (New Technology File System) – 6 versions exist; the newest are v3.0 (Windows 2000) and v3.1 (XP, 2003, Vista, 2008, 7), also called 5.0, 5.1, 5.2, 6.0 and 6.1 (after the OS version) – Support for Unicode, security, etc.; much more complex than FAT! – http://en.wikipedia.org/wiki/Ntfs • FAT 12/16/32, VFAT (long file names in Win95) – Still in use but not efficient for larger storage capacities (the cluster size) – Slower access than NTFS • Windows Future Storage (WinFS): cancelled project; according to rumors it was an SQL database layered on top of an NTFS file system – Read more at: http://www.ntfs.com/ – And: http://en.wikipedia.org/wiki/WinFS
FAT12, 16 and 32 • FAT12, found on floppy disks – Limited storage capacity – Designed for MS-DOS 1.0 • FAT16 was designed for larger disks – Older OSes used it • MS-DOS 3.0, Win95 OSR1, NT 3.5 and NT 4.0 – Max disk size 2 GB • FAT32 arrived when disks larger than 2 GB appeared – Some older and all new OSes can use FAT32 • Windows 98/Me/2000/XP/2003/Vista/7 and 2008 • Limitations of FAT32 – Largest formattable volume is 32 GB (larger volumes can still be used, < 16 TiB) – Limited features for compression, encryption, security and speed compared with NTFS • http://en.wikipedia.org/wiki/FAT_file_system
exFAT • exFAT (Extended File Allocation Table, a.k.a. -

Storage Devices, Basic File System Design

CS162 Operating Systems and Systems Programming, Lecture 17: Data Storage to File Systems. October 29, 2019. Prof. David Culler. http://cs162.eecs.Berkeley.edu Read: A&D Ch 12, 13.1-3.2
Recall: OS Storage abstractions. Key Unix I/O Design Concepts • Uniformity – everything is a file – file operations, device I/O, and interprocess communication through open, read/write, close – Allows simple composition of programs • find | grep | wc … • Open before use – Provides opportunity for access control and arbitration – Sets up the underlying machinery, i.e., data structures • Byte-oriented – Even if blocks are transferred, addressing is in bytes • Kernel buffered reads – Streaming and block devices look the same; read blocks, yielding the processor to other tasks • Kernel buffered writes – Completion of out-going transfer decoupled from the application, allowing it to continue • Explicit close
What’s below the surface? [Figure: I/O layers. Application / Service; High Level I/O (streams); Low Level I/O (handles); Syscall (registers); File System (descriptors); I/O Driver (Commands and Data Transfers); Disks, Flash, Controllers, DMA. Annotations: file descriptor number, an int; File Descriptors, a struct with all the info about the files.]
The file system abstraction • File – Named collection of data in a file system – POSIX File data: sequence of bytes • Could be text, binary, serialized objects, … – File Metadata: information about the file • Size, Modification Time, Owner, Security info • Basis for access control • Directory – “Folder” containing files & Directories – Hierarchical -

Your Performance Task Summary Explanation

Lab Report: 11.2.5 Manage Files
Your Performance: Your Score: 0 of 3 (0%). Pass Status: Not Passed. Elapsed Time: 6 seconds. Required Score: 100%.
Task Summary: Actions you were required to perform: • Compress the D:\Graphics folder – Set the Compressed attribute – Apply the changes to all folders and files • Hide the D:\Finances folder • Set Read-only on files – Set read-only on 2017report.xlsx – Set read-only on 2018report.xlsx – Do not set read-only for the 2019report.xlsx file
Explanation: In this lab, your task is to complete the following: Compress the D:\Graphics folder and all of its contents. Hide the D:\Finances folder. Make the following files Read-only: D:\Finances\2017report.xlsx, D:\Finances\2018report.xlsx.
Complete this lab as follows:
1. Compress a folder as follows: a. From the taskbar, open File Explorer. b. Maximize the window for easier viewing. c. In the left pane, expand This PC. d. Select Data (D:). e. Right-click Graphics and select Properties. f. On the General tab, select Advanced. g. Select Compress contents to save disk space. h. Click OK. i. Click OK. j. Make sure Apply changes to this folder, subfolders and files is selected. k. Click OK.
2. Hide a folder as follows: a. Right-click Finances and select Properties. b. Select Hidden. c. Click OK.
3. Set files to Read-only as follows: a. Double-click Finances to view its contents. b. Right-click 2017report.xlsx and select Properties. c. Select Read-only. d. Click OK. e. -

Z/OS Distributed File Service Zseries File System Implementation Z/OS V1R13

z/OS Distributed File Service zSeries File System Implementation z/OS V1R13. Defining and installing a zSeries file system. Performing backup and recovery, sysplex sharing. Migrating from HFS to zFS. Paul Rogers, Robert Hering. ibm.com/redbooks
International Technical Support Organization. October 2012. SG24-6580-05. Note: Before using this information and the product it supports, read the information in “Notices”. Sixth Edition (October 2012). This edition applies to version 1 release 13 modification 0 of IBM z/OS (product number 5694-A01) and to all subsequent releases and modifications until otherwise indicated in new editions. © Copyright International Business Machines Corporation 2010, 2012. All rights reserved. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.
Contents
Notices
Trademarks
Preface
The team who wrote this book
Now you can become a published author, too!
Comments welcome
Stay connected to IBM Redbooks
Chapter 1. zFS file systems
1.1 zSeries File System introduction
1.2 Application programming interfaces
1.3 zFS physical file system
1.4 zFS colony address space
1.5 zFS supports z/OS UNIX ACLs
1.6 zFS file system aggregates
1.6.1 Compatibility mode aggregates
1.6.2 Multifile system aggregates
1.7 Metadata cache
1.8 zFS file system clones
1.8.1 Backup file system
1.9 zFS log files -

Introduction to PC Operating Systems

Introduction to PC Operating Systems. Operating System Concepts, 8th Edition. Written by: Abraham Silberschatz, Peter Baer Galvin and Greg Gagne. John Wiley & Sons, Inc. ISBN: 978-0-470-12872-5. Chapter 2: Operating-System Structure
The design of a new operating system is a major task. It is important that the goals of the system be well defined before the design begins. These goals form the basis for choices among various algorithms and strategies.
What can be focused on in designing an operating system • Services that the system provides • The interface that it makes available to users and programmers • Its components and their interconnections
Operating-System Services: The operating system provides: • An environment for the execution of programs • Certain services to programs and to the users of those programs. Note: services can differ from one operating system to another.
[Figure: A view of operating system services. Layers: user and other system programs; user interfaces (GUI, batch, command line); system calls; services (program execution, I/O operations, file systems, communication, resource allocation, accounting, error detection, protection and security); operating system; hardware.]
Almost all operating systems have a user interface. Types of user interfaces: 1. Command Line Interface (CLI) – the user enters text commands 2. Batch Interface – commands, and the directives that control those commands, are placed in files, and the files are executed 3. Graphical User Interface – works in a window system, using a pointing device to direct I/O, menus and icons, and a keyboard to enter text.
Services: Program Execution – the system must be able to load a program into memory and run that program. -

Linux Kernel and Driver Development Training Slides

Linux Kernel and Driver Development Training. © Copyright 2004-2021, Bootlin. Creative Commons BY-SA 3.0 license. Latest update: October 9, 2021. Document updates and sources: https://bootlin.com/doc/training/linux-kernel Corrections, suggestions, contributions and translations are welcome! Send them to [email protected] Bootlin: embedded Linux and kernel engineering - Kernel, drivers and embedded Linux - Development, consulting, training and support - https://bootlin.com
Rights to copy: © Copyright 2004-2021, Bootlin. License: Creative Commons Attribution - Share Alike 3.0 https://creativecommons.org/licenses/by-sa/3.0/legalcode You are free: • to copy, distribute, display, and perform the work • to make derivative works • to make commercial use of the work. Under the following conditions: • Attribution. You must give the original author credit. • Share Alike. If you alter, transform, or build upon this work, you may distribute the resulting work only under a license identical to this one. • For any reuse or distribution, you must make clear to others the license terms of this work. • Any of these conditions can be waived if you get permission from the copyright holder. Your fair use and other rights are in no way affected by the above. Document sources: https://github.com/bootlin/training-materials/
Hyperlinks in the document: There are many hyperlinks in the document • Regular hyperlinks: https://kernel.org/ • Kernel documentation links: dev-tools/kasan • Links to kernel source files and directories: drivers/input/ include/linux/fb.h • Links to the declarations, definitions and instances of kernel symbols (functions, types, data, structures): platform_get_irq() GFP_KERNEL struct file_operations
Company at a glance • Engineering company created in 2004, named ”Free Electrons” until Feb. -

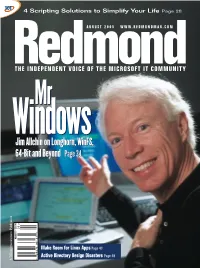

Jim Allchin on Longhorn, Winfs, 64-Bit and Beyond Page 34 Jim

AUGUST 2005 • WWW.REDMONDMAG.COM • $5.95
Mr Windows: Jim Allchin on Longhorn, WinFS, 64-Bit and Beyond (Page 34) • 4 Scripting Solutions to Simplify Your Life (Page 28) • Make Room for Linux Apps (Page 43) • Active Directory Design Disasters (Page 49)
Exchange Server stores & PSTs driving you crazy? Only $399 for 50 mailboxes; $1499 for unlimited mailboxes! Archive all mail to SQL and save 80% storage space! Email archiving solution for internal and external email. Download your FREE trial from www.gfi.com/rma
Get your FREE trial version of GFI MailArchiver for Exchange today! GFI MailArchiver for Exchange is an easy-to-use email archiving solution that enables you to archive all internal and external mail into a single SQL database. Now you can provide users with easy, centralized access to past email via a web-based search interface and easily fulfill regulatory requirements (such as the Sarbanes-Oxley Act). GFI MailArchiver leverages the journaling feature of Exchange Server 2000/2003, providing unparalleled scalability and reliability at a competitive cost.
GFI MailArchiver for Exchange features • Provide end-users with a single web-based location in which to search all their past email • Increase Exchange performance and ease backup and restoration • End PST hell by storing email in SQL format • Significantly reduce storage requirements for email by up to 80% • Comply with Sarbanes-Oxley, SEC and other regulations.