NASA Modeling, Simulation, Information Technology & Processing

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The IRM the Iron That Binds: the Unexpectedly Strong Magnetism Of

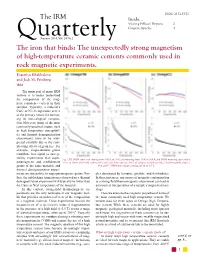

ISSN: 2152-1972. The IRM Quarterly, Summer 2014, Vol. 24 No. 2. Inside: Visiting Fellows' Reports, Current Articles. The iron that binds: The unexpectedly strong magnetism of high-temperature ceramic cements commonly used in rock magnetic experiments. Evgeniya Khakhalova and Josh M. Feinberg, IRM. The main goal of many IRM visitors is to better understand the composition of the magnetic remanence carriers in their samples. Typically, a material’s Curie or Néel temperature serves as the primary means for estimating its mineralogical composition. However, many of the most commonly used techniques, such as high temperature susceptibility and thermal demagnetization experiments, have to be interpreted carefully due to the complicating effects of grain size. For example, single-domain grains contribute less signal to susceptibility experiments than superparamagnetic and multidomain grains of the same material, and thermal demagnetization experiments are insensitive to superparamagnetic grains. Further, the unblocking temperatures observed in a thermal demagnetization experiment will typically be lower than the Curie or Néel temperature of the material. In this context, strong-field thermomagnetic ex[...] [...]ples dominated by hematite, goethite, and ferrihydrite). In these instances, any source of magnetic contamination in a strong-field thermomagnetic experiment can lead to an incorrect interpretation of a sample’s magnetic mineralogy. Fig 1. RT SIRM curves on cooling from 300 K to 10 K and warming from 10 K to 300 K and SIRM warming curves from 10 K to 300 K after field-cooling (FC) and zero-field-cooling (ZFC) of samples (a) Omega700_3, (b) Omega600, and (c) OmegaCC. SIRM was imparted using a field of 2.5 T.

Amplify Science Earth's Changing Climate

Lawrence Hall of Science has new instructional materials that address the Next Generation Science Standards! Check out these Middle School Units… As just one example, compare Middle School units from three different Hall programs. See for yourself how each program goes about addressing the Middle School NGSS Standards related to Human Impacts and Climate Change, and choose the approach that best meets the needs of your school district. MS NGSS Performance Expectations: Human Impacts and Climate Change • MS-ESS3-2. Analyze and interpret data on natural hazards to forecast future catastrophic events and inform the development of technologies to mitigate their effects. • MS-ESS3-3. Apply scientific principles to design a method for monitoring and minimizing a human impact on the environment. • MS-ESS3-4. Construct an argument supported by evidence for how increases in human population and per-capita consumption of natural resources impact Earth’s systems. • MS-ESS3-5. Ask questions to clarify evidence of the factors that have caused the rise in global temperatures over the past century. Sample Units from Three Different Hall Programs • Amplify Science (Earth’s Changing Climate: Vanishing Ice; Earth’s Changing Climate Engineering Internship) • FOSS (Weather and Water) • Ocean Sciences Sequence (The Ocean-Atmosphere Connection and Climate Change) ©The Regents of the University of California. Description of two Middle School units from Amplify Science: Earth’s Changing Climate: Vanishing Ice and Earth’s Changing Climate Engineering Internship. Grade 6-8 units, requiring at least 19 and 10 45-minute class sessions respectively (two of 27 Middle School Amplify Science units). The Problem: Why is the ice on Earth’s surface melting?
Students’ Role: In the role of student climatologists, students investigate what is causing ice on Earth’s surface to melt in order to help the fictional World Climate Institute educate the public about the processes involved.

Planets of the Solar System

Chapter 27: Planets of the Solar System. Chapter Outline: 1 ● Formation of the Solar System: The Nebular Hypothesis; Formation of the Planets; Formation of Solid Earth; Formation of Earth’s Atmosphere; Formation of Earth’s Oceans. 2 ● Models of the Solar System: Early Models; Kepler’s Laws; Newton’s Explanation of Kepler’s Laws. 3 ● The Inner Planets: Mercury; Venus; Earth; Mars. 4 ● The Outer Planets: Gas Giants; Jupiter; Saturn; Uranus; Neptune; Objects Beyond Neptune; Exoplanets. Why It Matters: Understanding the formation and the characteristics of our solar system and its planets can help scientists plan missions to study planets and solar systems around other stars in the universe. Inquiry Lab: Planetary Distances (20 min). Turn to Appendix E and find the table entitled “Solar System Data.” Use the data from the “semimajor axis” row of planetary distances to devise an appropriate scale to model the distances between planets. Then find an indoor or outdoor space that will accommodate the farthest distance. Mark some index cards with the name of each planet, use a measuring tape to measure the distances according to your scale, and place each index card at its correct location. Question to Get You Started: How would the distance of a planet from the sun affect the time it takes for the planet to complete one orbit? These reading tools will help you learn the material in this chapter.
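The Inquiry Lab in the excerpt above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the semimajor-axis values are approximate figures in astronomical units standing in for the textbook's Appendix E table (which is not reproduced here), and the function names and the 30-meter space are hypothetical. The period estimate uses Kepler's third law in its Sun-centered form, T² = a³ with T in years and a in AU, which addresses the "Question to Get You Started".

```python
# Approximate semimajor axes in astronomical units (AU). These are assumed
# values standing in for the "Solar System Data" table in Appendix E.
SEMIMAJOR_AXIS_AU = {
    "Mercury": 0.387, "Venus": 0.723, "Earth": 1.000, "Mars": 1.524,
    "Jupiter": 5.203, "Saturn": 9.537, "Uranus": 19.19, "Neptune": 30.07,
}


def scaled_positions(available_length_m):
    """Return each planet's distance from the 'sun' marker, in meters,
    scaled so the farthest planet sits at the end of the available space."""
    farthest = max(SEMIMAJOR_AXIS_AU.values())
    scale = available_length_m / farthest  # meters per AU
    return {name: a * scale for name, a in SEMIMAJOR_AXIS_AU.items()}


def orbital_period_years(a_au):
    """Kepler's third law for bodies orbiting the Sun:
    T^2 = a^3, with T in years and a in AU, so T = a ** 1.5."""
    return a_au ** 1.5


if __name__ == "__main__":
    positions = scaled_positions(30.0)  # hypothetical 30 m hallway
    for name, a in SEMIMAJOR_AXIS_AU.items():
        print(f"{name:8s} {positions[name]:6.2f} m  "
              f"{orbital_period_years(a):7.2f} yr")
```

With a scale of this kind the inner planets crowd within the first couple of meters, and the computed periods grow faster than the distances, so a planet farther from the sun takes disproportionately longer to complete one orbit.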

A Retrospective Snapshot of the Planning Processes in MER Operations After 5 Years

A Retrospective Snapshot of the Planning Processes in MER Operations After 5 Years. Anthony Barrett, Deborah Bass, Sharon Laubach, and Andrew Mishkin, Jet Propulsion Laboratory, California Institute of Technology, 4800 Oak Grove Drive, M/S 301-260, {firstname.lastname}@jpl.nasa.gov. Abstract: Over the past five years on Mars, the Mars Exploration Rovers have traveled over 20 kilometers to climb tall hills and descend into craters. Over that period the operations process has continued to evolve as deep introspection by the MER uplink team suggests streamlining improvements and lessons learned adds complexity to handle new problems. As such, the operations process and its supporting tools automate enough of the drudgery to circumvent staff burnout issues while being nimble and safe enough to let an operations staff respond to the problems that Mars throws up on a daily basis. This paper describes the currently used integrated set of planning processes that support rover operations on a daily basis after five years of evolution. Introduction: On January 3 & 24, 2004 Spirit and Opportunity, the twin Mars Exploration Rovers (MER), landed on opposite sides of Mars at Gusev Crater and Meridiani Planum respectively. Each rover was originally expected to last 90 Sols (Martian days) due to dust accumulation on the solar [...] Figure 1. Mars Exploration Rover. For communications, each rover has a high gain antenna for receiving instructions from Earth, and a low gain antenna for transmitting data to the Odyssey or Mars Reconnaissance orbiters with subsequent relay to Earth. Given that it takes between 6 and 44 minutes for a signal to travel to and from the rovers, simple joystick operations are not feasible.
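The 6 to 44 minute figure quoted above is consistent with a simple round-trip light-time estimate. The sketch below is not from the paper: the function name is hypothetical, and the closest and farthest Earth-Mars separations (about 0.37 AU and 2.67 AU) are rough assumed values, since the actual distance varies from one opposition and conjunction to the next.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # exact by definition
AU_KM = 149_597_870.7              # astronomical unit in km (IAU definition)

# Assumed, approximate extremes of the Earth-Mars distance in AU.
CLOSEST_AU = 0.37
FARTHEST_AU = 2.67


def round_trip_minutes(distance_au):
    """Time for a radio signal to travel to the rover and back, in minutes,
    ignoring relay hops and processing delays."""
    one_way_seconds = distance_au * AU_KM / SPEED_OF_LIGHT_KM_S
    return 2.0 * one_way_seconds / 60.0


if __name__ == "__main__":
    print(f"closest:  {round_trip_minutes(CLOSEST_AU):.1f} min")
    print(f"farthest: {round_trip_minutes(FARTHEST_AU):.1f} min")
```

Even at the short end of that range, a driver would wait minutes to see the result of each command, which is why the rovers are operated through uplinked plans rather than direct control.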

2020 Crew Health & Performance EVA Roadmap

NASA/TP-20205007604. Crew Health and Performance Extravehicular Activity Roadmap: 2020. Andrew F. J. Abercromby1, Omar Bekdash4, J. Scott Cupples1, Jocelyn T. Dunn4, E. Lichar Dillon2, Alejandro Garbino3, Yaritza Hernandez4, Alexandros D. Kanelakos1, Christine Kovich5, Emily Matula6, Matthew J. Miller7, James Montalvo6, Jason Norcross4, Cameron W. Pittman7, Sudhakar Rajulu1, Richard A. Rhodes1, Linh Vu8. 1NASA Johnson Space Center, Houston, Texas; 2University of Texas Medical Branch, Galveston, Texas; 3GeoControl Systems Inc., Houston, Texas; 4KBR, Houston, Texas; 5The Aerospace Corporation, Houston, Texas; 6Stinger Ghaffarian Technologies (SGT) Inc., Houston, Texas; 7Jacobs Technology, Inc., Houston, Texas; 8MEI Technologies, Inc., Houston, Texas. National Aeronautics and Space Administration, Johnson Space Center, Houston, Texas 77058. October 2020. The NASA STI Program Office ... in Profile. Since its founding, NASA has been dedicated to the advancement of aeronautics and space science. The NASA scientific and technical information (STI) program plays a key part in helping NASA maintain this important role. The NASA STI program operates under the auspices of the Agency Chief Information Officer. It collects, organizes, provides for archiving, and disseminates NASA’s STI. The NASA STI program provides access to the NTRS Registered and its public interface, the NASA Technical Report Server, thus providing one of the largest collections of aeronautical and space science STI in the world.
Results are published in both non-NASA channels and by NASA in the NASA STI Report [...] • CONFERENCE PUBLICATION. Collected papers from scientific and technical conferences, symposia, seminars, or other meetings sponsored or co-sponsored by NASA. • SPECIAL PUBLICATION. Scientific, technical, or historical information from NASA programs, projects, and missions, often concerned with subjects having substantial public interest. • TECHNICAL TRANSLATION. English-language translations of foreign scientific and technical material pertinent to NASA’s mission.

Earth Science SCIH 041 055 Credits: 0.5 Units / 5 Hours / NCAA

UNIVERSITY OF NEBRASKA HIGH SCHOOL. Earth Science SCIH 041 055. Credits: 0.5 units / 5 hours / NCAA. Course Description: Have you ever wondered where marble comes from, how deep the ocean is, or why it rains more in areas near the Equator than in other places? In this course students will study a variety of topics designed to give them a better understanding of the planet on which we live. They will study the composition of Earth including minerals and different rock types, weathering and erosion processes, mass movements, and surface and groundwater. They will also explore Earth's atmosphere and oceans, including storms, climate and ocean movements, plate tectonics, volcanism, earthquakes, mountain building, and geologic time. This course concludes with an in-depth look at the connections between our Earth's vast resources and the human population's dependence and impact on them. Graded Assessments: 5 Unit Evaluations; 2 Projects; 2 Proctored Progress Tests; 5 Teacher Connect Activities. Course Objectives: When you have completed the materials in this course, you should be able to: 1. Describe how Earth materials move through geochemical cycles (carbon, nitrogen, oxygen) resulting in chemical and physical changes in matter. 2. Understand the relationships among Earth’s structure, systems, and processes. 3. Describe how heat convection in the mantle propels the plates comprising Earth’s surface across the face of the globe (plate tectonics). 4. Evaluate the impact of human activity and natural causes on Earth’s resources (groundwater, rivers, land, fossil fuels). 5. Describe the relationships among the sources of energy and their effects on Earth’s systems.

Of Curiosity in Gale Crater, and Other Landed Mars Missions

44th Lunar and Planetary Science Conference (2013) 2534.pdf. LOCALIZATION AND ‘CONTEXTUALIZATION’ OF CURIOSITY IN GALE CRATER, AND OTHER LANDED MARS MISSIONS. T. J. Parker1, M. C. Malin2, F. J. Calef1, R. G. Deen1, H. E. Gengl1, M. P. Golombek1, J. R. Hall1, O. Pariser1, M. Powell1, R. S. Sletten3, and the MSL Science Team. 1Jet Propulsion Laboratory, California Inst of Technology ([email protected]), 2Malin Space Science Systems, San Diego, CA ([email protected]), 3University of Washington, Seattle. Introduction: Localization is a process by which tactical updates are made to a mobile lander’s position on a planetary surface, and is used to aid in traverse and science investigation planning and very high-resolution map compilation. “Contextualization” is hereby defined as placement of localization information into a local, regional, and global context, by accurately localizing a landed vehicle, then placing the data acquired by that lander into context with orbiter data so that its geologic context can be better characterized and understood. Curiosity Landing Site Localization: The Curiosity landing was the first Mars mission to benefit from the selection of a science-driven descent camera (both MER rovers employed engineering descent imagers). Initial data downlinked after the landing focused on rover health and Entry-Descent-Landing (EDL) performance. Front and rear Hazcam images were also downloaded, along with a number of MARDI thumbnail images. The Hazcam images were used primarily to determine the rover’s orientation by triangulation to the horizon. Fig 1: Portion of mosaic of MARDI EDL images. MARDI imaged the landing site and science target regions in color. When is localization done? After each drive for which Navcam stereo data has been acquired post-drive and terrain meshes [...]

New Research Opportunities in the Earth Sciences

New Research Opportunities in the Earth Sciences. A national strategy to sustain basic research and training across all areas of the Earth sciences would help inform the response to many of the major challenges that will face the planet in coming years. Issues including fossil fuel and water resources, earthquake and tsunami hazards, and profound environmental changes due to shifts in the climate system could all be informed by new research in the Earth sciences. The National Science Foundation’s Division of Earth Sciences, as the only federal agency that maintains significant funding of both exploratory and problem-driven research in the Earth sciences, is central to these efforts, and coordinated research priorities are needed to fully capitalize on the contributions that the Earth sciences can make. With Earth’s population expected to reach 7 billion by the end of 2011, and about 9.2 billion by 2050, demand for resources such as food, fuel, and water is increasing rapidly. At the same time, humans are changing the landscape and the temperature of the atmosphere is increasing. Expanding basic research in the Earth sciences, the study of Earth’s solid surface, crust, mantle and core, and the interactions between Earth and the atmosphere, hydrosphere, [...] planet. However, it’s important to note that this collaborative work builds on basic research in specific disciplines. Core Earth science research carried out by individual investigators, or small groups of scientists, remains the most creative and effective way to enhance the knowledge base upon which integrative efforts can build. Key Research Opportunities: Conceptual advances in basic Earth science theory and technological improve[...] Figure 1. Combining LiDAR data with geological observations helps determine how erosional processes respond to rock uplift at Dragon’s Back pressure ridge along the San Andreas Fault.

Chapter 11: Atmosphere

The Atmosphere and the Oceans. Off the Na Pali coast in Hawaii, clouds stretch toward the horizon. In this unit, you’ll learn how the atmosphere and the oceans interact to produce clouds and crashing waves. You’ll come away from your studies with a deeper understanding of the common characteristics shared by Earth’s oceans and its atmosphere. Unit Contents: 11 Atmosphere; 12 Meteorology; 13 The Nature of Storms; 14 Climate; 15 Physical Oceanography; 16 The Marine Environment. Go to the National Geographic Expedition on page 880 to learn more about topics that are connected to this unit. Kauai, Hawaii. Chapter 11: Atmosphere. What You’ll Learn • The composition, structure, and properties that make up Earth’s atmosphere. • How solar energy, which fuels weather and climate, is distributed throughout the atmosphere. • How water continually moves between Earth’s surface and the atmosphere in the water cycle. Why It’s Important: Understanding Earth’s atmosphere and its interactions with solar energy is the key to understanding weather and climate, which control so many different aspects of our lives. To find out more about the atmosphere, visit the Earth Science Web Site at earthgeu.com. Discovery Lab: Dew Formation. Dew forms when moist air near the ground cools and the water vapor in the air changes into water droplets. In this activity, you will model the formation of dew. 1. [...] 4. Repeat the experiment outside. Record the temperature of the water and the air outside. Observe: In your science journal, describe what happened to the out[...]

VPLanet: The Virtual Planet Simulator

VPLanet: The Virtual Planet Simulator. Rory Barnes1,2, Rodrigo Luger2,3, Russell Deitrick2,4, Peter Driscoll2,5, David Fleming1,2, Hayden Smotherman1,2, Thomas R. Quinn1,2, Diego McDonald1,2, Caitlyn Wilhelm1,2, Benjamin Guyer1,2, Victoria S. Meadows1,2, Patrick Barth6, Rodolfo Garcia1,2, Shawn D. Domagal-Goldman2,7, John Armstrong2,8, Pramod Gupta1,2, and The NASA Virtual Planetary Laboratory. 1Astronomy Dept., U. of Washington, Box 351580, Seattle, WA 98195; 2NASA Virtual Planetary Laboratory; 3Center for Computational Astrophysics, 6th Floor, 162 5th Ave, New York, NY 10010; 4Astronomisches Institut, University of Bern, Sidlerstrasse 5, 3012 Bern, Switzerland; 5Department of Terrestrial Magnetism, Carnegie Institute for Science, 5241 Broad Branch Road, NW, Washington, DC 20015; 6Max Planck Institute for Astronomy, Heidelberg, Germany; 7NASA Goddard Space Flight Center, Mail Code 699, Greenbelt, MD, 20771; 8Department of Physics, Weber State University, 1415 Edvaldson Drive, Dept. 2508, Ogden, UT 84408-2508. Overview. VPLanet is software to simulate the evolution of an arbitrary planetary system for billions of years. Since planetary systems evolve due to a myriad of processes, VPLanet unites theories developed in Earth science, stellar astrophysics, planetary science, and galactic astronomy. VPLanet can simulate a generic planetary system, but is optimized for those with potentially habitable worlds. VPLanet is open source [...] • BINARY: Orbital evolution of a circumbinary planet from Leung & Lee (2013). • GalHabit: Evolution of wide binaries due to the galactic tide and passing stars (Heisler & Tremaine 1986; Rickman et al. 2008; Kaib et al. 2013). • SpiNBody: N-body integrator. • DistOrb: 2nd and 4th order secular models of orbital evolution (Murray & Dermott 1999).

Earth Science Standards of Learning for Virginia Public Schools – January 2010

Earth Science Standards of Learning for Virginia Public Schools – January 2010. Introduction: The Science Standards of Learning for Virginia Public Schools identify academic content for essential components of the science curriculum at different grade levels. Standards are identified for kindergarten through grade five, for middle school, and for a core set of high school courses: Earth Science, Biology, Chemistry, and Physics. Throughout a student’s science schooling from kindergarten through grade six, content strands, or topics, are included. The Standards of Learning in each strand progress in complexity as they are studied at various grade levels in grades K-6, and are represented indirectly throughout the high school courses. These strands are Scientific Investigation, Reasoning, and Logic; Force, Motion, and Energy; Matter; Life Processes; Living Systems; Interrelationships in Earth/Space Systems; Earth Patterns, Cycles, and Change; and Earth Resources. Five key components of the science standards that are critical to implementation and necessary for student success in achieving science literacy are 1) Goals; 2) K-12 Safety; 3) Instructional Technology; 4) Investigate and Understand; and 5) Application. It is imperative to science instruction that the local curriculum consider and address how these components are incorporated in the design of the kindergarten through high school science program. Goals: The purposes of scientific investigation and discovery are to satisfy humankind’s quest for knowledge and understanding and to preserve and enhance the quality of the human experience. Therefore, as a result of science instruction, students will be able to achieve the following objectives: 1. Develop and use an experimental design in scientific inquiry.

Origin and Evolution of Earth Research Questions for a Changing Planet

Origin and Evolution of Earth: Research Questions for a Changing Planet. Questions about the origins and nature of Earth have long preoccupied human thought and the scientific endeavor. Deciphering the planet’s history and processes could improve the ability to predict catastrophes like earthquakes and volcanoes, to manage Earth’s resources, and to anticipate changes in climate and geologic processes. This report captures, in a series of questions, the essential scientific challenges that constitute the frontier of Earth science at the start of the 21st century. Earth is an active place. Earthquakes rip along plate boundaries, volcanoes spew fountains of molten lava, and mountain ranges and seabed are constantly created and destroyed. Earth scientists have long been concerned with deciphering the history, and predicting the future, of this active planet. Over the past four decades, Earth scientists have made great strides in understanding Earth’s workings. Scientists have ever-improving tools to understand how Earth’s internal processes shape the planet’s surface, how life can be sustained over billions of years, and how geological, biological, atmospheric, and oceanic processes interact to produce climate, and climatic change. (Image credit: NASA/NDGC.) At the request of the U.S. Department of Energy, National Aeronautics and Space Administration, National Science Foundation, and U.S. Geological Survey, the National Research Council assembled a committee to propose and explore grand questions in Earth science. This report, which is the result of the committee’s deliberations and input solicited from the Earth science community, describes ten “big picture” Earth science issues being pursued today.