The Posthuman Management Matrix: Understanding the Organizational Impact of Radical Biotechnological Convergence

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications


Posthumanism, Subjectivity, Autobiography

Abstract: In the following essay I would like to go back and reconnect a few things that may have become disjointed in sketches of posthumanist theory. In particular, the points to revisit are: the poststructuralist critique of the subject, the postmodernist approach to autobiography and the notion of the posthuman itself. I will briefly return to the work of Haraway and Hayles, before setting out the relationship between the often proclaimed “death of the subject”, postmodern autobiography, and a few examples of what might be termed “posthuman auto-biographies”. Keywords: Posthumanism, Speciesism, Subjectivity, Autobiography, Technology, Animal Studies. Post-human-ism. As the posthuman gets a life, it will be fascinating to observe and engage adaptations of narrative lives routed through an imaginary of surfaces, networks, assemblages, prosthetics, and avatars. (Smith, 2011: 571) It’s probably fair to say that the official (auto)biography of posthumanism runs something like this: Traces of proto-posthumanist philosophy can be easily found in Nietzsche, Darwin, Marx, Freud and Heidegger and their attacks on various ideologemes of humanism. This critique was then taken further by the (in)famous antihumanism of the so-called “(French) poststructuralists” (Althusser, Lacan, Barthes, Kristeva, Foucault, Lyotard, Deleuze, Derrida…), who were translated, “homogenized”, received and institutionalized in the English-speaking world under the label “(French) theory” and added to the larger movement called “postmodernism”. At the same time, the impact of new digital or information technologies was being felt and theorized in increasingly “interdisciplinary” environments in the humanities and “(critical) science studies”. Two foundational texts are usually cited here, namely Donna Haraway’s “Cyborg Manifesto” (1985 [1991]) and N. -

Image Recognition by Spiking Neural Networks

TALLINN UNIVERSITY OF TECHNOLOGY, Faculty of Information Technology. Nikolai Jefimov, 143689IASM. IMAGE RECOGNITION BY SPIKING NEURAL NETWORKS. Master's thesis. Supervisor: Eduard Petlenkov, PhD. Tallinn 2017. Author’s declaration of originality: I hereby certify that I am the sole author of this thesis. All the used materials, references to the literature and the work of others have been referred to. This thesis has not been presented for examination anywhere else. Author: Nikolai Jefimov, 30.05.2017. Abstract: The aim of this thesis is to present options for implementing spiking neural networks for image recognition and to test learning algorithms in the MATLAB environment. The thesis provides examples of the use of common types of artificial neural networks for image recognition and classification. It also presents the areas in which spiking neural networks are used, as well as the principles of image recognition by this type of neural network. The result of this work is an implementation of the learning algorithm in the MATLAB environment, together with the ability to recognize handwritten digits from the MNIST database. In addition, the thesis shows how Latin letters with dimensions of 3x3 and 5x7 pixels can be recognized, and proposes a user guide for the master's course ISS0023 Intelligent Control System at TUT. This thesis is written in English and is 62 pages long, including 6 chapters, 61 figures and 4 tables. Annotation (translated from Estonian): Image recognition by spiking neural networks. The aim of this thesis is to introduce the possibilities for using spiking neural networks and to demonstrate a learning algorithm in the MATLAB environment. -

Posthuman Dimensions of Cyborg: a Study of Man-Machine Hybrid in the Contemporary Scenario

Annals of R.S.C.B., ISSN: 1583-6258, Vol. 25, Issue 4, 2021, Pages 9442-9444. Received 05 March 2021; Accepted 01 April 2021. Posthuman Dimensions of Cyborg: A Study of Man-Machine Hybrid in the Contemporary Scenario. Annliya Shaijan, Postgraduate in English, University of Calicut. ABSTRACT: Cyborg theory offers perspectives to analyze the cultural and social meanings of contemporary digital health technologies. Theorizing cyborg called for cultural interrogations. Critics challenged Haraway’s cyborg and the cyborg theory for not affecting political changes. Cyborg theory is an intriguing approach to analyze the range of arenas such as biotechnology, medical issues and health conditions that incorporate disability, menopause, female reproduction, Prozac, foetal surgery and stem cells. Cyborg is defined by Donna Haraway, in her “A Cyborg Manifesto” (1986), as “a creature in a post-gender world; it has no truck with bisexuality, pre-oedipal symbiosis, unalienated labour, or other seductions to organic wholeness through a final appropriation of all the powers of the parts into a higher unity.” A cyborg or a cybernetic organism is a hybrid of machine and organism. The cyborg theory de-stabilizes from gender politics, traditional notions of feminism, critical race theory, and queer theory and identity studies. The technologies associated with the cyborg theory are complex and diverse. It valorizes the “monstrous, hybrid, disabled, mutated or otherwise ‘imperfect’ or ‘unwhole’ body.” Scholars have found it useful and fruitful in the social and cultural analysis of health and medicine. Outline of the Total Work: The biomechanics introduces two hybrids of genetic body and cyborg body. The pioneers, Clynes and Kline, in the field to generate man-machine systems, drew ways to new health technologies and their vision of the kind of cyborg has turned to a reality. -

Sapient Circuits and Digitalized Flesh: The Organization as Locus of Technological Posthumanization

Sapient Circuits and Digitalized Flesh: The Organization as Locus of Technological Posthumanization. Second Edition. Copyright © 2018 Matthew E. Gladden. Published in the United States of America by Defragmenter Media, an imprint of Synthypnion Press LLC. Synthypnion Press LLC, Indianapolis, IN 46227, http://www.synthypnionpress.com. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior permission of the publisher, apart from those fair-use purposes permitted by law. Defragmenter Media, http://defragmenter.media. ISBN 978-1-944373-21-4 (print edition), ISBN 978-1-944373-22-1 (ebook). March 2018. The first edition of this book was published by Defragmenter Media in 2016. Contents: Preface; Introduction: The Posthumanized Organization as a Synergism of Human, Synthetic, and Hybrid Agents; Part One: A Typology of Posthumanism: A Framework for Differentiating Analytic, Synthetic, Theoretical, and Practical Posthumanisms; Part Two: Organizational Posthumanism; Part Three: The Posthuman Management Matrix: Understanding -

Posthumanism Research Network Two-Day Workshop March 30–31, 2019 SB107 Wilfrid Laurier University Waterloo, Ontario, Canada

‘Posthuman(ist) Alterities’ Posthumanism Research Network two-day workshop, March 30–31, 2019, SB107, Wilfrid Laurier University, Waterloo, Ontario, Canada. We acknowledge the support of the following for making the workshop possible: SSHRC; The Royal Ontario Museum; WLU Faculty of Arts; WLU Office of Research Services; WLU Department of Philosophy; WLU Department of English and Film Studies; WLU Department of Communication Studies. Saturday, March 30. 9:30 – 11:00 Opening Remarks – SB107 (The Schlegel building, WLU campus): Dr. Russell Kilbourn (English and Film Studies, Wilfrid Laurier University). The conference organizers would like to acknowledge that we are on the traditional territories of the Neutral, Anishnabe, and Haudenosaunee Peoples. Session 1: 1. Anna Mirzayan (University of Western): “Things Suck Everywhere: Extractive Capitalism and De-Posthumanism” 2. Christine Daigle (Brock University): “Je est un autre: Collective Material Agency” 3. Sean Braune (Brock University): “Being Always Arrives at its Destination”. 11:00 – 11:15 Coffee Break. 11:15 – 12:45 Session 2: 1. Carly Ciufo (McMaster University): “Of Remembrance and (Human) Rights? Museological Legacies of the Transatlantic Slave Trade in Liverpool” 2. Sascha Priewe (University of Toronto; ROM): “Towards a posthumanist museology” 3. Mitch Goldsmith (Brock University): “Queering our relations with animals: An exploration of multispecies sexuality beyond the laboratory”. 12:45 – 2:00 Lunch Break, Veritas Café (WLU campus). 2:00 – 3:30 Session 3: 1. Matthew Pascucci (Arizona State University): “We Have Never Been Capitalist” 2. Nandita Biswas-Mellamphy (Western University): “Humans ‘Out-of-the-Loop’: AI and the Future of Governance” 3. David Fancy (Brock University): “Vibratory Capital, or: The Future is Already Here”. 3:30 – 3:45 Coffee Break. 3:45 – 4:45 Session 4: 1. -

Climates of Mutation: Posthuman Orientations in Twenty-First Century

Climates of Mutation: Posthuman Orientations in Twenty-First Century Ecological Science Fiction. Clare Elisabeth Wall. A dissertation submitted to the Faculty of Graduate Studies in partial fulfillment of the requirements for the degree of Doctor of Philosophy. Graduate Program in English, York University, Toronto, Ontario. January 2021. © Clare Elisabeth Wall, 2021. Abstract: Climates of Mutation contributes to the growing body of works focused on climate fiction by exploring the entangled aspects of biopolitics, posthumanism, and eco-assemblage in twenty-first-century science fiction. By tracing out each of those themes, I examine how my contemporary focal texts present a posthuman politics that offers to orient the reader away from a position of anthropocentric privilege and nature-culture divisions towards an ecologically situated understanding of the environment as an assemblage. The thematic chapters of my thesis perform an analysis of Peter Watts’s Rifters Trilogy, Larissa Lai’s Salt Fish Girl, Paolo Bacigalupi’s The Windup Girl, and Margaret Atwood’s MaddAddam Trilogy. Doing so, it investigates how the assemblage relations between people, genetic technologies, and the environment are intersecting in these posthuman works and what new ways of being in the world they challenge readers to imagine. This approach also seeks to highlight how these works reflect a genre response to the increasing anxieties around biogenetics and climate change through a critical posthuman approach that alienates readers from traditional anthropocentric narrative meanings, thus creating a space for an embedded form of ecological and technoscientific awareness. My project makes a case for the benefits of approaching climate fiction through a posthuman perspective to facilitate an environmentally situated understanding. -

Table of Contents Welcome from the General Chair

Table of Contents: Welcome from the General Chair; Organizing Committee; Program Committee; IJCNN 2011 Reviewers; Conference Topics; INNS Officers and Board of Governors; INNS President’s Welcome; IEEE CIS Officers and ADCOM; IEEE CIS President’s Welcome; Cooperating Societies and Sponsors; Conference Information; Hotel Maps; Schedule-At-A-Glance; Schedule Grids; IJCNN 2012 Call for Papers; Program; Detailed Program; Author Index. The 2011 International Joint Conference on Neural Networks, Final Program, July 31 – August 5, 2011, Doubletree Hotel, San Jose, California, USA. Sponsored by: International Neural Network Society. The 2011 International Joint Conference on Neural Networks, IJCNN 2011 Conference Proceedings © 2011 IEEE. Personal use of this material is permitted. However, permission to reprint/republish this material for advertising or promotional purposes or for creating new collective works for resale or redistribution to servers or lists, or to reuse any copyrighted component of this work in other works must be obtained from the IEEE. For obtaining permission, write to IEEE Copyrights Manager, IEEE Operations Center, 445 Hoes Lane, PO Box 1331, Piscataway, NJ 08855-1331 USA. All rights reserved. Papers are printed as received from authors. All opinions expressed in the Proceedings are those of the authors and are not binding on The Institute of Electrical and Electronics Engineers, Inc. Additional -

Posthumanism – a Critical Analysis

Posthumanism – A Critical Analysis. Stefan Herbrechter. Contents: Preface; Towards a Critical Posthumanism; A Genealogy of Posthumanism; Our Posthuman Humanity and the Multiplicity of Its Forms; Posthumanism and Science Fiction; Interdisciplinarity and the Posthumanities; Posthumanism, Digitalization and New Media; Posthumanity – Subject and System; Afterword: The Other Side of Life; Bibliography; Index (to be provided). Preface: What is “man”? This age-old question is everywhere today being asked again and with increased urgency, given the current technological developments levering out “our” traditional humanist reflexes. What this development also shows, however, is that the current and intensified attack on the idea of a “human nature” is only the latest phase of a crisis which, in fact, has always existed at the centre of the humanist idea of the human. The present critical study thus produces a genealogy of the contemporary posthumanist scenario of the “end of man” and places it within the context of theoretical and philosophical developments and ways of thinking within modernity. Even though terms like “posthuman”, “posthumanist” or “posthumanism” have a surprisingly long history, they have only really started to receive attention in contemporary theory and philosophy in the last two decades, where they have produced an entirely new way of thinking and theorizing. Only in the last ten years or so has posthumanism established itself – mainly in the Anglo-American sphere – as an autonomous field of study with its own theoretical approach (especially within the so-called “theoretical humanities”). The first academic publications that deal systematically with the idea of the posthuman and posthumanism appeared at the end of the 1990s and the early 2000s (these are, in particular, works by N. -

A Typology of Posthumanism

Part One. Abstract. The term ‘posthumanism’ has been employed to describe a diverse array of phenomena ranging from academic disciplines and artistic movements to political advocacy campaigns and the development of commercial technologies. Such phenomena differ widely in their subject matter, purpose, and methodology, raising the question of whether it is possible to fashion a coherent definition of posthumanism that encompasses all phenomena thus labelled. In this text, we seek to bring greater clarity to this discussion by formulating a novel conceptual framework for classifying existing and potential forms of posthumanism. The framework asserts that a given form of posthumanism can be classified: 1) either as an analytic posthumanism that understands ‘posthumanity’ as a sociotechnological reality that already exists in the contemporary world or as a synthetic posthumanism that understands ‘posthumanity’ as a collection of hypothetical future entities whose development can be intentionally realized or prevented; and 2) either as a theoretical posthumanism that primarily seeks to develop new knowledge or as a practical posthumanism that seeks to bring about some social, political, economic, or technological change. By arranging these two characteristics as orthogonal axes, we obtain a matrix that categorizes a form of posthumanism into one of four quadrants or as a hybrid posthumanism spanning all quadrants. It is suggested that the five resulting types can be understood roughly as posthumanisms of critique, imagination, conversion, -

The Echo Maker Zachary Tavlin

Transatlantica. Revue d’études américaines. American Studies Journal. 2 | 2016. Ordinary Chronicles of the End of the World / Chroniques ordinaires de la fin du monde. Electronic version URL: http://journals.openedition.org/transatlantica/8278 DOI: 10.4000/transatlantica.8278 ISSN: 1765-2766. Publisher: AFEA. Electronic reference: Transatlantica, 2 | 2016, “Ordinary Chronicles of the End of the World” [Online], Online since 31 December 2016, connection on 29 April 2021. URL: http://journals.openedition.org/transatlantica/8278; DOI: https://doi.org/10.4000/transatlantica.8278 This text was automatically generated on 29 April 2021. Transatlantica – Revue d'études américaines is made available under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International license. TABLE OF CONTENTS. Chroniques ordinaires de la fin du monde: Introduction. Chronicles of the End of the World: The End is not the End (Arnaud Schmitt); The Ubiquity of Strange Frontiers: Minor Eschatology in Richard Powers’s The Echo Maker (Zachary Tavlin); Writing (at) the End: Thomas Pynchon’s Bleeding Edge (Brian Chappell); Apocalypse and Sensibility: The Role of Sympathy in Jeff Lemire’s Sweet Tooth (André Cabral de Almeida Cardoso); The Double Death of Humanity in Cormac McCarthy’s The Road (Stephen Joyce); « A single gray flake, like the last host of christendom » : The Road ou l’apocalypse selon St McCarthy (Yves Davo). Hors Thème: L’usure iconique. Circulation et valeur des images dans le cinéma américain contemporain (Mathias Kusnierz). Actualité de la recherche, Civilisation: Colloque « Corps en politique », Université Paris-Est Créteil, 7-9 septembre 2016 (Lyais Ben Youssef); Colloque « Conflit, Pouvoir et Représentations », Université du Maine, 18 et 19 novembre 2016 (Clément Grouzard); One-day symposium “Mapping the landscapes of abortion, birth control and power in the United States since the 1960s”. -

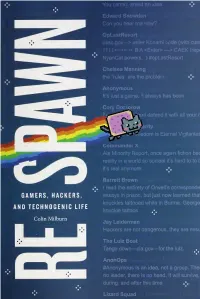

Respawn: Gamers, Hackers, and Technogenic Life

Digitized by the Internet Archive in 2019 with funding from Duke University Libraries. https://archive.org/details/respawngamershacOOmilb EXPERIMENTAL FUTURES: Technological lives, scientific arts, anthropological voices. A series edited by Michael M. J. Fischer and Joseph Dumit. RESPAWN: GAMERS, HACKERS, AND TECHNOGENIC LIFE. Colin Milburn. © 2018 DUKE UNIVERSITY PRESS. All rights reserved. Printed in the United States of America on acid-free paper. Designed by Courtney Leigh Baker and typeset in Minion Pro and Knockout by Westchester Publishing Services. This title is freely available in an open access edition thanks to the TOME initiative and the generous support of the University of California, Davis. Learn more at openmonographs.org. This book is published under the Creative Commons Attribution-NonCommercial-NoDerivs 3.0 United States (CC BY-NC-ND 3.0 US) License, available at https://creativecommons.org/licenses/by-nc-nd/3.0/us/. Cover art: Nyan Cat. © Christopher Torres. www.nyan.cat. Library of Congress Cataloging-in-Publication Data. Names: Milburn, Colin, [date] author. Title: Respawn : gamers, hackers, and technogenic life / Colin Milburn. Description: Durham: Duke University Press, 2018. | Series: Experimental futures : technological lives, scientific arts, anthropological voices | Includes bibliographical references and index. Identifiers: LCCN 2018019514 (print) | LCCN 2018026364 (ebook) | ISBN 9781478002789 (ebook) | ISBN 9781478001348 (hardcover : alk. paper) | ISBN 9781478002925 (pbk. : alk. paper). Subjects: LCSH: Video games. | Hackers. | Technology—Social aspects. Classification: LCC GV1469.34.S52 (ebook) | LCC GV1469.34.S52 M55 2018 (print) | DDC 794.8—dc23. LC record available at https://lccn.loc.gov/2018019514 CONTENTS: Introduction. All Your Base; 1 May the Lulz Be with You; 2 Obstinate Systems; 3 Still Inside; 4 Long Live Play; 5 We Are Heroes; 6 Green Machine; 7 Pwn; Conclusion. -

Posthumanist Education

Posthumanist Education. Stefan Herbrechter. Accepted manuscript PDF deposited in Coventry University’s Repository. Original citation: ‘Posthumanist Education’, in International Handbook of Philosophy of Education, ed. by Paul Smeyers, pub 2018 (ISBN 9783319727592). Publisher: Springer. Copyright © and Moral Rights are retained by the author(s) and/or other copyright owners. A copy can be downloaded for personal non-commercial research or study, without prior permission or charge. This item cannot be reproduced or quoted extensively from without first obtaining permission in writing from the copyright holder(s). The content must not be changed in any way or sold commercially in any format or medium without the formal permission of the copyright holders. Abstract: This contribution distinguishes between different varieties of posthumanism. It promotes a ‘critical posthumanism’ which engages with an emerging new world picture at the level of ethics, ecology, politics, technology and epistemology. On this basis, the contribution explores the question of a posthumanist and postanthropocentric education both from a philosophical, theoretical and practical, curricular point of view. It concludes by highlighting some of the implications that a posthumanist education might have. Keywords: Critical posthumanism, ecology, postanthropocentrism, animal studies, new literacies. 1. The posthumanisation of the education system. One might be very tempted to dismiss the subject of posthumanism merely as another Anglo-American theory fashion and to simply wait for this latest ‘postism’ to go the way of all previous ones. If there were no globalization with its tangible effects both at an economic as well as media and cultural level, this might indeed be possible or even a sensible thing to do.