ADIOS/Tutorial

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Hyperloop: Group-Based NIC-Offloading To

HyperLoop: Group-Based NIC-Offloading to Accelerate Replicated Transactions in Multi-Tenant Storage Systems. Daehyeok Kim1∗, Amirsaman Memaripour2∗, Anirudh Badam3, Yibo Zhu3, Hongqiang Harry Liu3†, Jitu Padhye3, Shachar Raindel3, Steven Swanson2, Vyas Sekar1, Srinivasan Seshan1 (1Carnegie Mellon University, 2UC San Diego, 3Microsoft). ABSTRACT: Storage systems in data centers are an important component of large-scale online services. They typically perform replicated transactional operations for high data availability and integrity. Today, however, such operations suffer from high tail latency even with recent kernel bypass and storage optimizations, and thus affect the predictability of end-to-end performance of these services. We observe that the root cause of the problem is the involvement of the CPU, a precious commodity in multi-tenant settings, in the critical path of replicated transactions. In this paper, we present HyperLoop, a new framework that removes CPU from the critical path of replicated transactions in storage systems by offloading them to commodity RDMA NICs, with non-volatile memory as the storage medium. To achieve this, we develop new and general NIC offloading primitives that can perform memory … CCS CONCEPTS: • Networks → Data center networks; • Information systems → Remote replication; • Computer systems organization → Cloud computing. KEYWORDS: Distributed storage systems; Replicated transactions; RDMA; NIC-offloading. ACM Reference Format: Daehyeok Kim, Amirsaman Memaripour, Anirudh Badam, Yibo Zhu, Hongqiang Harry Liu, Jitu Padhye, Shachar Raindel, Steven Swanson, Vyas Sekar, Srinivasan Seshan. 2018. HyperLoop: Group-Based NIC-Offloading to Accelerate Replicated Transactions in Multi-Tenant Storage Systems. In SIGCOMM ’18: ACM SIGCOMM 2018 Conference, August 20–25, 2018, Budapest, Hungary.

Implementing a Verified FTP Client and Server Jennifer Ramseyer

Implementing a Verified FTP Client and Server, by Jennifer Ramseyer, S.B., Massachusetts Institute of Technology (2015). Submitted to the Department of Electrical Engineering and Computer Science on May 20, 2016, in partial fulfillment of the requirements for the degree of Master of Engineering in Electrical Engineering and Computer Science at the Massachusetts Institute of Technology, June 2016. © Jennifer Ramseyer, MMXVI. All rights reserved. The author hereby grants to MIT permission to reproduce and to distribute publicly paper and electronic copies of this thesis document in whole or in part in any medium now known or hereafter created. Author: Department of Electrical Engineering and Computer Science, May 20, 2016. Certified by: Dr. Martin Rinard, Professor, Thesis Supervisor. Accepted by: Dr. Christopher J. Terman, Chairman, Masters of Engineering Thesis Committee. Abstract: I present my implementation of an FTP (File Transfer Protocol) system with GRASShopper. GRASShopper is a program verifier that ensures that programs are memory safe. I wrote an FTP client and server in SPL, the GRASShopper programming language. SPL integrates the program logic’s pre- and post-conditions, along with loop invariants, into the language, so that programs that compile in GRASShopper are proven correct. Because of that, my client and server are guaranteed to be secure and correct. I am supervised by Professor Martin Rinard and Dr. …

Experiences with the CubieTruck (Cubieboard 3)

Experiences with the CubieTruck (Cubieboard 3), by Dieter Drewanz (document version of 09.04.17). Document started: April 2016. Document last updated: April 2017. Summary: The text covers preparation, initial setup, installation of further useful packages, use of the packages/applications, and installation of drivers. Illustration 1: CubieTruck in Work. Table of contents: 1 Introduction; 1.1 Why this draft-style document was created; 1.2 How the choice fell on the Cubietruck; 1.3 About the command listings; 2 Initial setup; 2.1 Assembly; 2.2 The first start; 2.2.1 Starting Android from the internal flash storage (NAND); 2.2.2 Preparations for starting a Linux from the SD card

Logo Exchange Celebrates 10Th Birth

Fall 1991, Volume 10, Number 1. Logo Exchange Celebrates 10th Birth… In this issue: … of Decadence? …, Logo, and Arboreal Aging … Papert's Reflections. 1991-1992. International Society for Technology in Education. LOGO EXCHANGE: Journal of the ISTE Special Interest Group for Logo-Using Educators. Founding Editor: Tom Lough. Editor-In-Chief: Sharon Yoder. Associate Editor: Judy Kull. Assistant Editor: Ron Renchler. International Editor: Dennis Harper. Contributing Editors: Eadie Adamson, Gina Bull, Glen Bull, Doug Clements, Sandy Dawson, Dorothy Fitch, Judi Harris, Mark Homey. SIGLogo Board of Directors: Lora Friedman, President; Bev and Lee Cunningham, Secretary/Treasurer. Publisher: International Society for Technology in Education; Dave Moursund, Executive Director. ISTE BOARD OF DIRECTORS 1991-92. Executive Board Members: Bonnie Marks, President, Alameda County Office of Education (CA); Sally Sloan, President-Elect, Winona State University (MN); Gary Bitter, Past-President, Arizona State University; Barry Pitsch, Secretary/Treasurer, Heartland Area Education Agency (IA); Don Knezek, Educational Services Center, Region 20 (TX); Jenelle Leonard, Computer Literacy Training Laboratory (DC). Board Members: Ruthie Blankenbaker, Park Tudor School (IN); Cyndy Everest-Bouch, Christa McAuliffe Educator (HI); Sheila Cory, Chapel Hill-Carrboro City Schools (NC); Susan Friel, University of North Carolina (NC); Margaret Kelly, California State University-San Marcos, California Technology Project (CA); Marco Murray-Lasso, Sociedad Mexicana de Computacion en la C. …

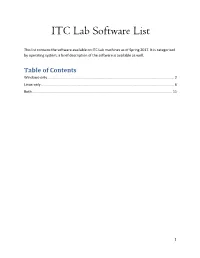

ITC Lab Software List

ITC Lab Software List. This list contains the software available on ITC Lab machines as of Spring 2017. It is categorized by operating system; a brief description of the software is available as well. Table of Contents: Windows-only; Linux-only; Both. Windows-only: 7-Zip: file archiver; ActivePerl: commercial version of the Perl scripting language; ActiveTCL: TCL distribution; Adobe Acrobat Reader: PDF reading and editing software; Adobe Creative Suite: graphic art, web, video, and document design programs (Animate, Audition, Bridge, Dreamweaver, Edge Animate, Fuse, Illustrator, InCopy, InDesign, Media Encoder, Muse, Photoshop, Prelude, Premiere, SpeedGrade); ANSYS: engineering simulation software; ArcGIS: mapping software; Arena: discrete event simulation; Autocad: CAD and drafting software; Avogadro: molecular visualization/editor; CDFplayer: software for Computable Document Format files; ChemCAD: chemical process simulator (ChemDraw); Chimera: molecular visualization; CMGL: oil/gas reservoir simulation software; Cygwin: approximate Linux behavior/functionality on Windows; deltaEC: simulation and design environment for thermoacoustic …

NLM Development Concepts, Tools, and Functions

NDK: NLM Development Concepts, Tools, and Functions. Novell Developer Kit, www.novell.com. NLM™ Development Concepts, Tools, and Functions, February 2008. Legal Notices: Novell, Inc. makes no representations or warranties with respect to the contents or use of this documentation, and specifically disclaims any express or implied warranties of merchantability or fitness for any particular purpose. Further, Novell, Inc. reserves the right to revise this publication and to make changes to its content, at any time, without obligation to notify any person or entity of such revisions or changes. Further, Novell, Inc. makes no representations or warranties with respect to any software, and specifically disclaims any express or implied warranties of merchantability or fitness for any particular purpose. Further, Novell, Inc. reserves the right to make changes to any and all parts of Novell software, at any time, without any obligation to notify any person or entity of such changes. Any products or technical information provided under this Agreement may be subject to U.S. export controls and the trade laws of other countries. You agree to comply with all export control regulations and to obtain any required licenses or classification to export, re-export, or import deliverables. You agree not to export or re-export to entities on the current U.S. export exclusion lists or to any embargoed or terrorist countries as specified in the U.S. export laws. You agree to not use deliverables for prohibited nuclear, missile, or chemical biological weaponry end uses.

The CybOX™ Language Defined Objects Specification Version 1.0 (Draft)

The CybOX™ Language Defined Objects Specification Version 1.0(draft) Sean Barnum, Robert Martin, Bryan Worrell, Ivan Kirillov, Penny Chase, Desiree Beck, Stelios Melachrinoudis 4/13/2012 The Cyber Observable eXpression (CybOX) is a standardized language, being developed in collaboration with any and all interested parties, for the specification, capture, characterization and communication of events or stateful properties that are observable in the operational domain. A wide variety of high‐level cyber security use cases rely on such information including: event management/logging, malware characterization, intrusion detection, incident response/management, attack pattern characterization, etc. CybOX provides a common mechanism (structure and content) for addressing cyber observables across and among this full range of use cases improving consistency, efficiency, interoperability and overall situational awareness. To enable such an aggregate solution to be practical for any single use case, numerous flexibility mechanisms are designed into the language. In particular, almost everything is optional such that any single use case could leverage only the portions of CybOX that are relevant for it (from a single field to the entire language or anything in between) without being overwhelmed by the rest. This document defines the defined object types for the CybOX Language. Copyright © 2012, The MITRE Corporation. All rights reserved. Acknowledgements The authors Sean Barnum, Robert Martin, Bryan Worrell, Ivan Kirillov, Penny Chase, Desiree Beck and Stelios Melachrinoudis wish to thank the CybOX community for its assistance in contributing and reviewing this document. Trademark Information CybOX and the CybOX logo are trademarks of The MITRE Corporation. All other trademarks are the property of their respective owners. -

DOCUMENT RESUME ED 320 550 IR 014 466 AUTHOR Herrmann

DOCUMENT RESUME ED 320 550 IR 014 466 AUTHOR Herrmann, Thom, Ed. TITLE Show & Tell. Proceedings of the Ontario Universities' Conference (1st, Guelph, Canada, May 1987). INSTITUTION Guelph Univ. (Ontario). PUB DATE May 87 NOTE 217p.; For the proceedings of the 1988 conference, see IR 014 467. PUB TYPE Collected Works - Conference Proceedings (021) -- Reports - Descriptive (141) EDRS PRICE MF01/PC09 Plus Postage. DESCRIPTORS Authoring Aids (Programing); *Computer Assisted Instruction; *Computer Graphics; Computer Literacy; Databases; *Expert Systems; Foreign Countries; Higher Education; *Information Networks; *Information Technology; Interactive Video; Media Selection; *Teleconferencing IDENTIFIERS Canada; *Computer Mediated Communication; Ontario ABSTRACT Twenty-three conference papers focus on the use of information technology in Ontario's technical colleges and universities: "The Analytic Criticism Module--Authorial Structures & Design" (P. Beam); "Computing by Design" (R. D. Brown & J. D. Milliken); "Engineers and Computers" (P. S. Chisholm, M. Iwaniw, and G. Hayward); "Designing the CAL Screen: Problems and Processes" (R. Edmonds); "A Computer Aided Instruction in Dynamics" (H. Farazdaghi); "A Computer Assisted Curriculum Planning Program" (H. Farazdaghi); "RAPPI--Communications Networking in the Classroom" (L. Hazzan); "RAPPI--Open Door" (C. Hudel); "Computer Conferencing Systems: Educational Communications Facilitators" (C. S. Hunter); "Interactive Computer Art" (M. LeBlanc); "GEO-VITAL: Computer Enhanced Teaching in Geology" (I. P. Martini and S. Sadura); "Intentional Socialization and Computer-Mediated Communication" (E. K. McCreary & J. Ord); "Issues in the Development of Intelligent Tutoring Systems" (M. McLeish & K. Langton); "Which Objectives, Which Technologies? A Decision-Making Process" (C. Nash); "Library Instructional Module" (G. Pal, L. Rourke, and H. Salmon); "Computer-Assisted Language Learning (CALL) at the University of Guelph" (D. …

RSTS/E Release Notes

RSTS/E Release Notes. Order No. AA-S246G-TC. June 1985. These Release Notes describe new features of the RSTS/E system and explain the differences between this version and previous versions of RSTS/E. System managers and system maintainers should read this document prior to system installation. OPERATING SYSTEM AND VERSION: RSTS/E V9.0. SOFTWARE VERSION: RSTS/E V9.0. Digital Equipment Corporation, Maynard, Massachusetts. The information in this document is subject to change without notice and should not be construed as a commitment by Digital Equipment Corporation. Digital Equipment Corporation assumes no responsibility for any errors that may appear in this document. The software described in this document is furnished under a license and may be used or copied only in accordance with the terms of such license. No responsibility is assumed for the use or reliability of software on equipment that is not supplied by DIGITAL or its affiliated companies. Copyright © 1982, 1985 by Digital Equipment Corporation. All rights reserved. The postage-paid READER'S COMMENTS form on the last page of this document requests your critical evaluation to assist us in preparing future documentation. The following are trademarks of Digital Equipment Corporation: DEC, DECmail, DECmate, DECnet, DECtape, DECUS, DECwriter, DIBOL, FMS-11, LA, MASSBUS, PDP, P/OS, Professional, Q-BUS, Rainbow, ReGIS, RSTS, RSX, RT, UNIBUS, VAX, VMS, VT, Work Processor. CONTENTS: Preface; 1 New Features of RSTS/E V9.0; 1.1 New Device Support; 1.1.1 The Virtual Disk (DV0:) …

GINO User Guide Version 6.0

GINO user guide version 6.0. BRADLY ASSOCIATES LTD, Manhattan House, 140 High Street, Crowthorne, Berkshire RG45 7AY, England. Tel: +44 (1344) 779381. Fax: +44 (1344) 773168. www.gino-graphics.com. Information in this manual is subject to change without notice. While Bradly Associates Ltd. makes every endeavour to ensure the accuracy of this document, it does not accept liability for any errors or omissions, or for any consequences arising from the use of the program or documentation. GINO user guide version 6.0 © Copyright Bradly Associates Ltd. 2003. All rights reserved. All trademarks where used are acknowledged.

2 Du Module D’Autres Fonctionnalités Sont Ima- En Si Bon Chemin

GNU/Linux Magazine France is published by Les Éditions Diamond, B.P. 20142 – 67603 Sélestat Cedex. Tel.: 03 67 10 00 20 – Fax: 03 67 10 00 21. Sites: www.gnulinuxmag.com – boutique.ed-diamond.com. Publication director: Arnaud Metzler. Head of editorial: Denis Bodor. Editor-in-chief: Tristan Colombo. Graphic design: Kathrin Scali & Jérémy Gall. Advertising manager: Valérie Fréchard, Tel.: 03 67 10 00 27. Subscription service: Tel.: 03 67 10 00 20. Printing: pva, Druck und Medien-Dienstleistungen GmbH, Landau, Germany. Distribution in France (for press retailers only): MLP Réassort: Plate-forme de Saint-Barthélemy-d’Anjou. Tel. … EDITORIAL: Life sometimes has fascinating experiences in store for us. I recently wanted to order supplies from a site where I am a regular customer. Without being an American multinational, this site is not that of a small neighbourhood craftsman either. The company operates internationally and can deliver to many countries around the world. So, wanting to fill my basket, I log on to the site and notice that it has been updated: much clearer, with more polished ergonomics... A good point, then, apart from the attempt at a mobile version, whose ergonomics are non-existent. I start browsing the catalogue and, in order to save the beginning of my order, I try to log in to my account... Impossible! Perhaps I mistyped my password? Another try... Same result! After five attempts, I request a reset …

Simple Html Text Editor Free

Simple html text editor free. This free HTML WYSIWYG editor program allows you to edit your source code online without downloading any application. Guaranteed the best visual webpage Online HTML Editor · HTML · Articles. Those who love text editors swear they are advantageous for speed and Platform: OSX or later, Windows 7 & 8, Linux; Price: Free. Dubbed "a hackable text editor for the 21st Century", it's designed to be simple to use out of the If coding on the go is your thing, this is a great option for iOS devices. Download TinyMCE for free, the most advanced WYSIWYG HTML editor. Just because TinyMCE is the most powerful rich text editor doesn't mean it is With 40+ powerful plugins available to developers, extending TinyMCE is as simple as. Brackets is a lightweight, yet powerful, modern text editor. We blend visual tools into the Make changes to CSS and HTML and you'll instantly see those changes on screen. Also see where your CSS Simple W3C Validator. Check Out New. The 9 Best Free HTML Editors for Web Developers (Windows Edition) selection of themes, for example, that would not only accommodate your taste, but also No other HTML editor is quite as triumphant as Sublime Text 2. Free online HTML editor for you to generate your own HTML code for your website. Full WYSIWYG HTML editor, so you can see your results as you edit. Mozilla SeaMonkey has a basic HTML editing capability that's actually not too bad for a beginner doing some simple editing. Sublime Text is a sophisticated text editor for code, markup and prose.