Improving the Way We Design Games for Learning by Examining How Popular Video Games Teach

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


![[Japan] SALA GIOCHI ARCADE 1000 Miglia](https://docslib.b-cdn.net/cover/3367/japan-sala-giochi-arcade-1000-miglia-393367.webp)

[Japan] SALA GIOCHI ARCADE 1000 Miglia

NEW PLATINUM PI4 EDITION BOARD

The following list covers most of the titles emulated by the NEW PLATINUM Pi4 board (20,000).

- Computer games (Amiga, Commodore, PC, etc.) require a computer keyboard and sometimes a USB mouse connected to the console, since those systems were operated with a mouse and keyboard.
- Games that require spinners (e.g. Arkanoid), steering wheels (racing games), or light guns (e.g. Duck Hunt) may not be controllable with a joystick; they need dedicated peripherals, which cannot be configured at the moment.
- Games that require analog controllers (Playstation, Nintendo 64, etc.) may not be controllable with single-stick control panels; they need a joypad with analog sticks (sold separately).
- This list applies to the NEW PLATINUM EDITION board based on the Raspberry Pi4.
- Emulators for 3D systems (Playstation, Nintendo64, Dreamcast) and computers (Amiga, Commodore) are included ONLY in the NEW PLATINUM Pi4, not in the Pi3 Plus and Gold versions.
- The Atomiswave and Sega Naomi emulators (Virtua Tennis, Virtua Striker, etc.) are included ONLY on Pi4 boards.
- The PLUS Pi3B+ version emulates only 550 ARCADE titles, generated randomly at the time of purchase and not modifiable.

Last updated: 2 September 2020

| GAME NAME | EMULATOR |
| --- | --- |
| 005 | SALA GIOCHI ARCADE |
| 1 On 1 Government [Japan] | SALA GIOCHI ARCADE |
| 1000 Miglia: Great 1000 Miles Rally | SALA GIOCHI ARCADE |
| 10-Yard Fight | SALA GIOCHI ARCADE |
| 18 Holes Pro Golf | SALA GIOCHI ARCADE |
| 1941: Counter Attack | SALA GIOCHI ARCADE |
| 1942 | SALA GIOCHI ARCADE |
| 1943 Kai: Midway Kaisen | SALA GIOCHI ARCADE |
| 1943: The Battle of Midway [Europe] | SALA GIOCHI ARCADE |
| 1944 : The Loop Master [USA] | SALA GIOCHI ARCADE |
| 1945k III | SALA GIOCHI ARCADE |
| 19XX : The War Against Destiny [USA] | SALA GIOCHI ARCADE |
| 2 On 2 Open Ice Challenge | SALA GIOCHI ARCADE |
| 4-D Warriors | SALA GIOCHI ARCADE |
| 64th. | |

000NAG Xbox Insider November 2006

Free NAG supplement (not to be sold separately)

THE ONLY RESOURCE YOU’LL NEED FOR EVERYTHING XBOX 360. November 2006, Issue 2

THE SPIRIT OF SYSTEM SHOCK THE NEW ERA VENUS RISES OVER GAMING INSIDE! 360 SOUTH AFRICA RAGE LAUNCH EVERYTHING X06 TONY HAWK’S PROJECT 8

DASHBOARD: WHAT’S IN THE BOX?

- 8 THE BUZZ: News and 360 views from around the planet
- 10 FEATURE: Local Launch. We went, we stood in line, we watched people pick up their preorders. South Africa just got a little greener
- 12 FEATURE: The New Era. As graphics improve and gameplay evolves, it is only a matter of time before the separation between male and female gamers dissolves
- 20 PREVIEW: Bioshock. It is System Shock 3 in everything but the name. And it lacks Shodan. But it is still very System Shock, so much so that we call it BioSystemShock
- 28 FEATURE: Project 8. Mr. Hawk returns, and this time he’s left the zany hijinks of Bam Margera behind. Project 8 is all about the motion, expression and momentum
- 29 HARDWARE: Madcatz offers up some gamepad and HD VGA cable love, and the gamepad can be used on the PC too!
- 36 OPINION: The Class of 360. This month James Francis reminds us why we bought a PlayStation 2 in the first place, and then calls us fanboys

publisher: tide media
managing editor: michael james [email protected] +27 83 409 8220
editor: miktar dracon [email protected]
assistant editor: lauren das neves [email protected]
copy editor: nati de jager
contributors: matt handrahan, james francis, ryan king, jon denton
group sales and marketing manager: len nery | [email protected] +27 84 594 9909
advertising sales: jacqui jacobs | [email protected] +27 82 778 8439; dave gore | [email protected] +27 82 829 1392; cheryl bassett | [email protected] +27 72 322 9875
art director: chris bistline
designer

EDITOR SPEAK: AND HE SAID, “LET THERE BE RING OF LIGHT”

It was nothing short of incredible to see so much enthusiasm and support for the Xbox 360, as was seen at both rAge and at the BT Games Xbox 360 launch party.

Case 2:08-Cv-00157-MHW-MRA Document 64-6 Filed 03/05/10 Page 1 of 306 Case 2:08-Cv-00157-MHW-MRA Document 64-6 Filed 03/05/10 Page 2 of 306

Case 2:08-cv-00157-MHW-MRA Document 64-6 Filed 03/05/10 Page 1 of 306

JURISDICTION AND VENUE

3. Jurisdiction is predicated upon 28 U.S.C. §§ 1331, 1338(a) and (b), and 1367(a). As the parties are citizens of different states and as the matters in controversy exceed the sum or value of seventy-five thousand dollars ($75,000.00), exclusive of interest and costs, this court also has jurisdiction over the state-law claims herein under 28 U.S.C. § 1332.

4. David Allison’s claims arise in whole or in part in this District; Defendant operates and/or transacts business in this District, and Defendant has aimed its tortious conduct in whole or in part at this District. Accordingly, venue is proper under 28 U.S.C. §§ 1391(b) and (c), and 1400(a).

PARTIES

5. David Allison is a sole proprietorship with its principal place of business located in Broomfield, Colorado, and operates a website located at www.cheatcc.com. David Allison owns the exclusive copyrights to each of the web pages posted at www.cheatcc.com, as fully set forth below.

6. The true name and capacity of the Defendant is unknown to Plaintiff at this time. Defendant is known to Plaintiff only by the www.Ps3cheats.com website where the infringing activity of the Defendant was observed. Plaintiff believes that information obtained in discovery will lead to the identification of Defendant’s true name.

Major League Baseball

007: EVERYTHING OR NOTHING 007: NIGHTFIRE 2 in 1 - Asterix and Obelix (BR) 2006 FIFA WORLD CUP 2K SPORTS: MAJOR LEAGUE BASEBALL 2K7 ACE COMBAT ADVANCE ACE LIGHTNING ACTION MAN: ROBOT ATAK ACTIVISION ANTHOLOGY ADVANCE GUARDIAN HEROES ADVANCE WARS (BR) ADVANCE WARS 2: BLACK HOLE RISING THE ADVENTURES OF JIMMY NEUTRON: BOY GENIUS VS. JI THE ADVENTURES OF JIMMY NEUTRON BOY GENIUS: ATTACK THE ADVENTURES OF JIMMY NEUTRON BOY GENIUS: JET FU AERO THE ACRO-BAT AGASSI TENNIS GENERATION 2002 AGENT HUGO: ROBORUMBLE (BR) AGGRESSIVE INLINE AIRFORCE DELTA STORM ALADDIN ALEX FERGUSON'S PLAYER MANAGER 2002 ALEX RIDER: STORMBREAKER ALIEN HOMINID ALIENATORS: EVOLUTION CONTINUES ALL GROWN UP!: EXPRESS YOURSELF ALL-STAR BASEBALL 2003 ALL-STAR BASEBALL 2004 ALTERED BEAST: GUARDIAN OF THE REALMS THE AMAZING VIRTUAL SEA-MONKEYS AMERICAN BASS CHALLENGE AMERICAN DRAGON: JAKE LONG AMERICAN IDOL ANIMAL SNAP - RESCUE THEM 2 BY 2 ANIMANIACS: LIGHTS, CAMERA, ACTION! THE ANT BULLY FOURMIZ EXTREME RACING ARCHER MACLEAN'S 3D POOL ARCTIC TALE ARMY MEN: TURF WARS ARMY MEN ADVANCE AROUND THE WORLD IN 80 DAYS ARTHUR AND THE INVISIBLES ASTÉRIX & OBÉLIX: BASH THEM ALL! ASTÉRIX & OBÉLIX XXL ASTRO BOY: OMEGA FACTOR ATARI ANNIVERSARY ATLANTIS: THE LOST EMPIRE ATOMIC BETTY ATV QUAD POWER RACING ATV - THUNDER RIDGE RIDERS Avatar - The Last Airbender (BR) BABAR: TO THE RESCUE BACK TO STONE BACK TRACK BACKYARD BASEBALL BACKYARD BASEBALL 2006 BACKYARD BASKETBALL BACKYARD FOOTBALL BACKYARD FOOTBALL 2006 BACKYARD HOCKEY BACKYARD SKATEBOARDING BACKYARD SPORTS: BASEBALL 2007 BACKYARD SPORTS: -

(12) United States Patent (10) Patent No.: US 8,202,167 B2 Ackley Et Al

US008202167B2

(12) United States Patent: Ackley et al.
(10) Patent No.: US 8,202,167 B2
(45) Date of Patent: Jun. 19, 2012
(54) SYSTEM AND METHOD OF INTERACTIVE VIDEO PLAYBACK
(75) Inventors: Jonathan Ackley, Glendale, CA (US); Christopher T. Carey, Santa Clarita, CA (US); Bennet S. Carr, Burbank, CA (US); Kathleen S. Poole, Las Canada, CA (US)
(73) Assignee: Disney Enterprises, Inc., Burbank, CA (US)
(*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 1834 days.
(21) Appl. No.: 10/860,572
(22) Filed: Jun. 2, 2004
(65) Prior Publication Data: US 2005/0020359 A1, Jan. 27, 2005

Related U.S. Application Data
(60) Provisional application No. 60/475,339, filed on Jun. 2, 2003.

References cited (partial; the first entry is illegible in the source):
- SE A ck g E. KS et al. ... 463f4
- 5,548,340 A, 8/1996, Bertram
- 5,553,864 A *, 9/1996, Sitrick, 463/31
- 5,606,374 A, 2/1997, Bertram
- 5,634,850 A, 6/1997, Kitahara
- 5,699,123 A, 12/1997, Ebihara et al.
- 5,708,845 A, 1/1998, Wistendahl
- 5,782,692 A, 7/1998, Stelovsky
- 5,818,439 A, 10/1998, Nagasaka et al.
- 5,830,065 A *, 11/1998, Sitrick, 463/31
- 5,892,521 A, 4/1999, Blossom
- 5,893,084 A, 4/1999, Morgan
(Continued)

FOREIGN PATENT DOCUMENTS
- EP 0326830, 8/1989
(Continued)

OTHER PUBLICATIONS
- Halo 54. Airforce Delta Storm Faqs <URL: http://www.gamefaqs. comfconsolexbox file/475.157.25199>.
(Continued)

Primary Examiner: Steven J Hylinski
(74) Attorney, Agent, or Firm: Farjami & Farjami LLP

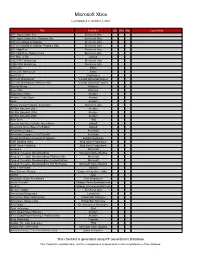

Microsoft Xbox

Microsoft Xbox Last Updated on October 2, 2021 Title Publisher Qty Box Man Comments 007: Agent Under Fire Electronic Arts 007: Agent Under Fire: Platinum Hits Electronic Arts 007: Everything or Nothing Electronic Arts 007: Everything or Nothing: Platinum Hits Electronic Arts 007: NightFire Electronic Arts 007: NightFire: Platinum Hits Electronic Arts 187 Ride or Die Ubisoft 2002 FIFA World Cup Electronic Arts 2006 FIFA World Cup Electronic Arts 25 to Life Eidos 25 to Life: Bonus CD Eidos 4x4 EVO 2 GodGames 50 Cent: Bulletproof Vivendi Universal Games 50 Cent: Bulletproof: Platinum Hits Vivendi Universal Games Advent Rising Majesco Aeon Flux Majesco Aggressive Inline Acclaim Airforce Delta Storm Konami Alias Acclaim Aliens Versus Predator: Extinction Electronic Arts All-Star Baseball 2003 Acclaim All-Star Baseball 2004 Acclaim All-Star Baseball 2005 Acclaim Alter Echo THQ America's Army: Rise of a Soldier: Special Edition Ubisoft America's Army: Rise of a Soldier Ubisoft American Chopper Activision American Chopper 2: Full Throttle Activision American McGee Presents Scrapland Enlight Interactive AMF Bowling 2004 Mud Duck Productions AMF Xtreme Bowling Mud Duck Productions Amped 2 Microsoft Amped: Freestyle Snowboarding Microsoft Game Studios Amped: Freestyle Snowboarding: Platinum Hits Microsoft Amped: Freestyle Snowboarding: Limited Edition Microsoft Amped: Freestyle Snowboarding: Not for Resale Microsoft Game Studios AND 1 Streetball UbiSoft Antz Extreme Racing Empire Interactive / Light... APEX Atari Aquaman: Battle For Atlantis TDK -

Dead Red (Ghosts & Magic) (Volume 2) by M.R. Forbes

Dead Red (Ghosts & Magic) (Volume 2) by M.R. Forbes. READ ONLINE.

If you are searching for the book Dead Red (Ghosts & Magic) (Volume 2) by M.R. Forbes in PDF form, you have come to the right website. We present the full version of this ebook in doc, DjVu, txt, PDF, and ePub formats. You may read Dead Red (Ghosts & Magic) (Volume 2) by M.R. Forbes online or download it. On our site you can also read manuals and various fiction books online, or download them. Please note that our website does not store the book itself; we provide a link to the site where you may download it or read it online. So if you need to download Dead Red (Ghosts & Magic) (Volume 2) by M.R. Forbes as a PDF, you have come to the right website. We have Dead Red (Ghosts & Magic) (Volume 2) in doc, ePub, DjVu, txt, and PDF formats. We will be pleased if you come back to us again and again.

Magic kaito volume 2 - detective conan wiki: Magic Kaito Volume 2 was released on November 15, 1988 in 7 Ghost Game . Akako thinks that if Kaito died, her failure would be erased and her . The Secret of the Red Tear • The Witch, the Detective, and the Phantom

35 classic horror stories, free to download - ebook friendly: On this list, you'll find classic horror stories from the 19th and 20th century. They are free to download from Project Gutenberg. Nowadays, when people want to get horrified, they turn on ghost .

Game Boy Advance

List of Game Boy Advance Games

1) 007: Everything or Nothing
2) 007: NightFire
3) Advance Guardian Heroes
4) Advance Wars
5) Advance Wars 2: Black Hole Rising
6) Aero the Acrobat
7) AirForce Delta Storm
8) Alien Hominid
9) Alienators: Evolution Continues
10) Altered Beast: Guardian of the Realms
11) Anguna - Warriors of Virtue
12) Another World
13) Astro Boy: Omega Factor
14) BackTrack
15) Baldur's Gate: Dark Alliance
16) Banjo-Kazooie: Grunty's Revenge
17) Banjo-Pilot
18) Baseball Advance
19) Batman Begins
20) Batman: Rise of Sin Tzu
21) Batman: Vengeance
22) Battle B-Daman
23) Battle B-Daman: Fire Spirits!
24) Beyblade: G-Revolution
25) Black Matrix Zero
26) Blackthorne
27) Blades of Thunder
28) Boktai 2: Solar Boy Django
29) Boktai 3: Sabata's Counterattack
30) Boktai: The Sun Is in Your Hand
31) Bomberman Max 2 Red Advance
32) Bomberman Max 2: Blue Advance
33) Bomberman Tournament
34) Breath of Fire
35) Breath of Fire II
36) Broken Circle
37) Broken Sword: The Shadow of the Templars
38) Bruce Lee: Return of the Legend
39) Bubble Bobble: Old & New
40) CIMA: The Enemy
41) CT Special Forces
42) CT Special Forces 2: Back in the Trenches
43) CT Special Forces 3: Navy Ops
44) Caesars Palace Advance: Millennium Gold Edition
45) Car Battler Joe
46) Castlevania: Aria of Sorrow
47) Castlevania: Circle of the Moon
48) Castlevania: Harmony of Dissonance
49) ChuChu Rocket!
50) Classic NES Series: Dr. Mario
51) Classic NES Series: Super Mario Bros.
52) Columns Crown
53) Comix Zone
54) Contra
79) Disney's Magical Quest 3 Starring Mickey & Donald

Airforce Delta Storm

Airforce Delta Storm

Strap into the cockpit and blast through the gut-wrenching world of flight combat. Your adrenaline pumps as machine gun fire skims your tail, you perform backbreaking barrel rolls, and you experience dogfighting action. Take your pick of more than 70 aircraft, including military fighter jets modeled on actual aircraft. With a full range of extreme missions to numb your senses, AirForce Delta Storm pushes your Xbox into the sky!

Developers: Konami Computer Entertainment Studios (Xbox), Mobile 21 (Game Boy Advance)

Release dates:
- Xbox: November 15, 2001 (North America); February 22, 2002 (Japan); April 12, 2002 (Europe)
- Game Boy Advance: September 16, 2002 (North America); September 26, 2002 (Japan); November 1, 2002 (Europe)

AirForce Delta Storm is the second game in the Airforce Delta series.

Story: AFDS takes place in a fictional period (20X1-20X7) when science and technology have reached a new level and the ability to treat almost all human diseases has become a reality. As a result, however, the Earth is overcrowded and basic necessities are scarce. At the height of the growing crisis, the countries that are heavily industrialized but do not produce enough food for themselves unite to form the United Forces and use their military advantage to seize agricultural land, while the countries threatened by the United Forces invasion pool their resources to form the Allied Forces.

Other aircraft: S-72BK, U-4, RF-4C Phantom II (reconnaissance variant of the F-4), AH-64D Apache Longbow, B-1B Lancer

Nintendo Game Boy Advance

Nintendo Game Boy Advance Last Updated on September 27, 2021 Title Publisher Qty Box Man Comments 007: Everything or Nothing Electronic Arts 007: NightFire Electronic Arts 2 Games In 1 Double Pack: Hot Wheels: World Race + Velocity X THQ 2 Games In 1: Cartoon Network Block Party + Speedway Majesco 2 Games In 1: Dora the Explorer - Double Pack Global Star Software 2 Games In 1: Dragon Ball Buu's Fury + Dragon Ball GT - Transformation Atari 2 Games In 1: Dragon Ball Z: The Legacy of Goku I & II Atari 2 Games In 1: Finding Nemo + Monsters, Inc. Double Pack THQ 2 Games In 1: Golden Nugget Casino + Texas Hold 'Em Poker Majesco 2 Games In 1: Monster Trucks + Quad Desert Fury Majesco 2 Games In 1: Power Rangers Time Force + Ninja Force THQ 2 Games In 1: Scooby Doo + Scooby Doo 2: Monsters Unleashed THQ 2 Games In 1: Scooby-Doo and the Cyber Chase + Mystery Mayhem THQ 2 Games In 1: SpongeBob SquarePants Supersponge + Revenge of the Flying Dutchman THQ 2 Games In 1: SpongeBob SquarePants: Battle for Bikini Bottom + Freeze Frame Frenzy THQ 2 Games In 1: SpongeBob SquarePants: Battle for Bikini Bottom / Fairly OddParents: Breakin' Da Rules THQ 2 Games in 1: The Incredibles + Finding Nemo: The Continuing Adventures Activision 2 In 1 Game Pack: Shark Tale + Shrek 2 Activision 2 in 1 Game Pack: Spider-Man + Spider-Man 2 Activision 2 In 1 Game Pack: Spider-Man: Mysterio's Menace + X2: Wolverine's Revenge Activision 2 In 1 Game Pack: Tony Hawk's Underground + Kelly Slater's Pro Surfer Activision 2-in-1 Fun Pack: Madagascar: Operation Penguin + Shrek -

List of Games for the Retro NES, Retro SNES, Retro Megadrive, and Retro Neo Geo Consoles and for Raspberry Pi-Based Bartops: 13,483 Games

List of games for the Retro NES, Retro SNES, Retro Megadrive, and Retro Neo Geo consoles and for Raspberry Pi-based Bartops: 13,483 games

www.2players.shop

Table of contents

- Amiga 1200: 314 games
- Amstrad CPC: 344 games
- Apple II: 89 games
- Final Burn Alpha: 2171 games
- Atari 2600: 565 games
- Atari 7800: 65 games
- Commodore 64: 398 games
- ColecoVision: 156 games
- Family Computer Disk System: 193 games
- Game Gear: 295 games

Taito Type X2 Atomiswave Naomi Naomi Shoot Sega Model 2

Taito Type X2 Atomiswave Naomi } Naomi Shoot Sega Model 2 Daphne Cave IGS/PGM CPSIII Capcom Zinc Neo Geo MVS / AES Sega ST-V Arcade System AAE Arcade Classics Touhou Project PC-Engine SuperGrafx PC-Engine CD PCFX Neo Geo Pocket Color DICE Colecovision Vectrex GX4000 Philips CD-i Panasonic 3DO Amiga CDTV Amiga CD32 MSX Atari 2600 Atari 7800 Lynx Jaguar SG-1000 Master System Game Gear Megadrive Mega-CD Megadrive-32X Saturn Dreamcast Wonderswan Mono Wonderswan Color Game Boy Game Boy Color Virtual Boy Game Boy Advance Nintendo Famicom Disk System Super Nintendo Playstation PSP Arcana Heart 3 Battle Fantasia BlazBlue Calamity Trigger BlazBlue Continuum Shift Chaos Breaker Daemon Bride Gigawing Generations Homura King Of Fighters 98 Ultimate Match King Of Fighters Maximum Impact A King Of Fighters XII King Of Fighters XIII Power Instinct The Commemoration Raiden III Raiden IV Samurai Shodown Edge of Destiny Shikigami no Shiro III Spica Adventure Super Street Fighter 4 Arcade Edition Tetris The Grand Master 3 Trouble Witches Demolish Fist Dirty Pigy Football Dolphin Blue Fist of the North Star Guilty Gear Isuka King of Fighters NeoWave Knights Of Valour: The Seven Spirits Metal Slug 6 Neo geo Battle coliseum Salary Man Kintaro The Rumble Fish The Rumble Fish2 Asian Dynamite/Dynamite Deka EX Border Down Cannon Spike / Gun Spike Capcom vs SNK 2 Millionaire Fighting 2001 Capcom Vs. SNK Millennium Fight 2000 Cleopatra Fortune Plus Cosmic Smash Crazy Taxi Doki Doki Idol Star Seeker Giant Gram Giant Gram 2000 Guilty Gear X Guilty Gear XX Guilty Gear XX Accent Core Guilty Gear XX Reload Guilty Gear XX Slash Jambo! Safari Kurukuru Chameleon Marvel vs.