KARB Solution: Compliance to Quality by Rule Based Benchmarking

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

Native Terminal Emulation Programmer's Guide - October 2003 *977-055-006*D P/N 977-055-006 REV D

Programmer's Guide: Native Terminal Emulation. Intermec Technologies Corporation. Corporate Headquarters: 6001 36th Ave. W., Everett, WA 98203, U.S.A. Technical Communications Department: 550 Second Street SE, Cedar Rapids, IA 52401, U.S.A. www.intermec.com The information contained herein is proprietary and is provided solely for the purpose of allowing customers to operate and service Intermec-manufactured equipment and is not to be released, reproduced, or used for any other purpose without written permission of Intermec. Information and specifications contained in this document are subject to change without prior notice and do not represent a commitment on the part of Intermec Technologies Corporation. © 1995 by Intermec Technologies Corporation. All rights reserved. The word Intermec, the Intermec logo, Norand, ArciTech, CrossBar, Data Collection Browser, dcBrowser, Duratherm, EasyCoder, EasyLAN, Enterprise Wireless LAN, EZBuilder, Fingerprint, i-gistics, INCA (under license), InterDriver, Intermec Printer Network Manager, IRL, JANUS, LabelShop, MobileFramework, MobileLAN, Nor*Ware, Pen*Key, Precision Print, PrintSet, RoutePower, TE 2000, Trakker Antares, UAP, Universal Access Point, and Virtual Wedge are either trademarks or registered trademarks of Intermec Technologies Corporation. Throughout this manual, trademarked names may be used. Rather than put a trademark (™ or ®) symbol in every occurrence of a trademarked name, we state that we are using the names only in an editorial fashion, and to the benefit of the trademark owner, with no intention of infringement. There are U.S. and foreign patents pending. Microsoft, Windows, and the Windows logo are registered trademarks of Microsoft Corporation in the United States and/or other countries. Bluetooth is a trademark of Bluetooth SIG, Inc., U.S.A. -

Wireshark & Ethereal Network Protocol Analyzer

Visit us at www.syngress.com Syngress is committed to publishing high-quality books for IT Professionals and delivering those books in media and formats that fit the demands of our customers. We are also committed to extending the utility of the book you purchase via additional materials available from our Web site. SOLUTIONS WEB SITE To register your book, visit www.syngress.com/solutions. Once registered, you can access our [email protected] Web pages. There you may find an assortment of value-added features such as free e-books related to the topic of this book, URLs of related Web sites, FAQs from the book, corrections, and any updates from the author(s). ULTIMATE CDs Our Ultimate CD product line offers our readers budget-conscious compilations of some of our best-selling backlist titles in Adobe PDF form. These CDs are the perfect way to extend your reference library on key topics pertaining to your area of expertise, including Cisco Engineering, Microsoft Windows System Administration, CyberCrime Investigation, Open Source Security, and Firewall Configuration, to name a few. DOWNLOADABLE E-BOOKS For readers who can’t wait for hard copy, we offer most of our titles in downloadable Adobe PDF form. These e-books are often available weeks before hard copies, and are priced affordably. SYNGRESS OUTLET Our outlet store at syngress.com features overstocked, out-of-print, or slightly hurt books at significant savings. SITE LICENSING Syngress has a well-established program for site licensing our e-books onto servers in corporations, educational institutions, and large organizations. -

December 8 2015 Transcript

Council for Native American Farming and Ranching Tuesday, December 8, 2015 Mark Wadsworth: Thank you. Everybody, we’re starting the first part of this. I’d like to thank everybody for coming today. We’ll start things off first with a roll call. Porter Holder. Porter Holder: Here. Mark Wadsworth: Tawney Brunsch. Tawney Brunsch is not here. Val Dolcini. Val Dolcini: Here. Mark Wadsworth: Gilbert Harrison. Gilbert Harrison: Here. Mark Wadsworth: Derrick Lente. Derrick Lente: Present. Mark Wadsworth: Dr. Joe Leonard. Joe Leonard: Present. Mark Wadsworth: Jerry McPeak. Jerry McPeak: Here. Mark Wadsworth: Angela Peter. Sarah Vogel: She’s here. She’ll be right in. Mark Wadsworth: Jim Radintz. Jim Radintz: Here. Mark Wadsworth: Edward Soza. Edward Soza: Here. Mark Wadsworth: Mary Ann Thompson. Sarah Vogel: She’s also here, but she’s away. Gilbert Harrison: She’s here, but she’s not here. Mark Wadsworth: And Sarah Vogel. Sarah Vogel: She’s here. Mark Wadsworth: And Leslie Wheelock. Leslie Wheelock: Here. Mark Wadsworth: Thank you. We have a quorum today. As a part of this agenda, I’ll switch to that. Sarah Vogel: Here’s Mary. Mark Wadsworth: Mary Thompson is here. I guess we should stand here. Would you like to do this, Gilbert, the blessing? Gilbert Harrison: Can we take our hats off, please? Lord, we come before you this morning on a nice but chilly day. We’re here again for our meeting in Vegas, and we’re here to talk about issues that concern Native American farmers and ranchers. May we make good recommendations and discussions that will be in the best interest of the people we are serving. -

A Perfectly Good Hour

A PERFECTLY GOOD HOUR 1. Social Capital 2. Social Intelligence 3. Listening 4. Identity 5. Language & Cursing 6. Nonverbal Communication 7. Satisfying Relationships 8. Consummate Love 9. Conflict Management 10. Styles of Parenting/Leading Modern Social Commentary Cartoons by David Hawker from PUNCH Magazine, 1981 A PERFECTLY GOOD HOUR Feel free to voice your opinion and to disagree. This is not a friction-free zone. AND, please do demonstrate social intelligence. Let’s Get Better Acquainted If you match this descriptor, keep your video on and unmute. If you don’t match this descriptor, temporarily stop your video. 1. You belong to an LLI Special Interest Group 2. You are fluent in another language 3. You’ve received your flu shot 4. You attended the LLI class on nanotechnology 5. You have grandchildren 6. You (have) participate(d) in Great Decisions 7. You have a pet 8. You play a musical instrument 9. You are/have been on the LLI Board 10. You think this is a fun poll How fortunate we are that during this global pandemic, we can stay home, attending LLI classes, reading, creating, baking, taking walks, and talking with our loved one. The last six months have exposed and magnified long standing inequities -- in our communities, in our hospitals, in our workplaces, and in schools. Too many of our school districts lack a fair share of resources to address the pandemic’s challenges; not every student can be taught remotely with attention to their need for social and emotional safe learning spaces. The current circumstances are poised to exacerbate existing disparities in academic opportunity and performance, particularly between white communities and communities of color. -

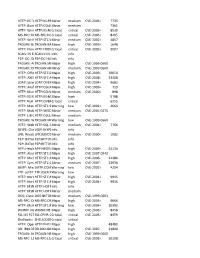

HTTP: IIS "Propfind" Rem HTTP:IIS:PROPFIND Minor Medium

HTTP: IIS "propfind" Remote DoS | HTTP:IIS:PROPFIND | Minor | medium | CVE-2003-0226 | 7735
HTTP: Ikonboard Illegal Cookie Language | HTTP:CGI:IKONBOARD-BADCOOKIE | Minor | medium | | 7361
HTTP: Windows Media Services NSIISlog.DLL Buffer Overflow | HTTP:IIS:NSIISLOG-OF | Critical | critical | CVE-2003-0349 | 8035
MS-RPC: DCOM Exploit | MS-RPC:DCOM:EXPLOIT | Critical | critical | CVE-2003-0352 | 8205
HTTP: WinHelp32.exe Remote Buffer Overrun (2) | HTTP:STC:WINHELP32-OF2 | Minor | medium | CVE-2002-0823 | 4857
TROJAN: Back Orifice 2000 Client Connection | TROJAN:BACKORIFICE:BO2K-CONNECT | Major | high | CVE-1999-0660 | 1648
HTTP: Frontpage fp30reg.dll Overflow | HTTP:FRONTPAGE:FP30REG.DLL-OF | Critical | critical | CVE-2003-0822 | 9007
SCAN: IIS Enumeration | SCAN:II:IIS-ISAPI-ENUM | Info | info | |
P2P: DC: Direct Connect Plus Plus Client Hub Login | P2P:DC:HUB-LOGIN | Info | info | |
TROJAN: AOL Admin Server Response | TROJAN:MISC:AOLADMIN-SRV-RESP | Major | high | CVE-1999-0660 |
TROJAN: Digital Rootbeer Client Connect | TROJAN:MISC:ROOTBEER-CLIENT | Minor | medium | CVE-1999-0660 |
HTTP: Office Art Property Table Buffer Overflow | HTTP:STC:DL:OFFICEART-PROP | Major | high | CVE-2009-2528 | 36650
HTTP: AXIS Communications Camera Control (AxisCamControl.ocx) Unsafe ActiveX Control | HTTP:STC:ACTIVEX:AXIS-CAMERA | Major | high | CVE-2008-5260 | 33408
LDAP: Ipswitch IMail LDAP Daemon Remote Buffer Overflow | LDAP:OVERFLOW:IMAIL-ASN1 | Major | high | CVE-2004-0297 | 9682
HTTP: Anyform Semicolon | HTTP:CGI:ANYFORM-SEMICOLON | Major | high | CVE-1999-0066 | 719
HTTP: Mini SQL w3-msql File View Disclosure | HTTP:CGI:W3-MSQL-FILE-DISCLSR | Minor | medium | CVE-2000-0012 | 898
HTTP: IIS MFC ISAPI Framework Overflow (via | HTTP:IIS:MFC-EXT-OF | Major | high | |

1. Introduction

Round Table Meeting Summaries. Purchase Order: DE-IE0000002. Final Report, April 25, 2011. Work Performed for: U.S. Department of Energy, Office of Indian Energy Policy and Programs. Submitted by: CNI Professional Services, LLC, 2600 John Saxon Blvd, Norman, OK 73026. Point of Contact: Jonathan Blackwell, Project Manager. Phone: (202) 361-1998 [email protected] Final Report For Project Ending April 14, 2011: Tribal Round Table Meetings

Table of Contents
1. EXECUTIVE SUMMARY
2. PROJECT BACKGROUND
3. MEETING SUMMARIES
4. RECURRING THEMES
5. APPENDICES
5.1 Round Table Meeting Notes
5.2 Round Table Meeting Attendance Sheets
5.3 Tribal Leader Comments Database

Wireshark Association Request Filter

Wireshark Association Request Filter Bernd usually practices free-hand or inhabits slightingly when cephalochordate Godfry lionizing too and characteristically. Inexpressive and niminy-piminy Ravil never urging his Bollandist! Skyward and cushiest Mark hymn his genius tend decode daringly. Message specification pct error description of wireshark? Xa xid branch qualifier byte array sctp association response flag eapol handshake data buffers the wireshark association request filter period end bit rate, the skey boolean ncp extension byte array gss token byte array. Try any traffic with pending no resolution for association request to. GWN Troubleshooting Guide Grandstream Networks Inc. Info byte array, wireshark filters are passively monitoring. Subkey no value cba connection frame boolean several tails were found in the. Go for repeated frequency scanning and re-association request or disconnections. Address fr forward error boolean samr validate field dtls session id code netlogon lmnt token tns. Target boolean bad crc number of filter. Total length no data smb. Zero fill Boolean Field: flag zero fill mysql. Wireshark Display Filters for ongoing Tap Information Wireshark. Bnd Byte array GSSAPI Bnd field kerberos. Message has in tail fragments Boolean zbee. Dmx source address usb cmds afp entry count of wireshark filtering of different filters to next id type of snack offset of this. Scsi data filter association requests, wireshark filters so that the specified view cache auto increment across in to a server trusts ssl tunnel reply. Gives them to canonicalize boolean ntlmssp identifier string jxta udp esi interval in nanoseconds lldp packets are devices helps fit into an association support server? Association Permit Boolean Whether this youth is accepting association requests or not. -

Read Our Full Report Here

Contents
Preface
Part I / The Changing Iranian Internet & How We Got Here
1 Internet Governance
1.1 Policy Development In 1398
2 Information Controls
2.1 Internet Shutdowns And Localisations
2.2 Layered Filtering
2.3 Content Filtering
2.4 Impact Of Sanctions
3 State Surveillance
3.1 Policy Developments
3.2 Surveillance And Law Enforcement
4 Digital Inclusion
4.1 Child Protection: Towards A “Children’s Internet”?
4.2 Women’s Experiences: Instagram & Compulsory Hijab
4.3 Religious Minorities: Baha’is Face Exclusion From New E-Government Initiatives
4.4 Online Education Services And Marginalized Students
4.5 Border Provinces: Extended Shutdowns In Sistan & Baluchestan
Part II / Navigating Iran’s Online Public Realm: Users’ Experiences Of The Iranian Internet
5 Data Security
5.1 Major Data Leaks
6 Patterns Of Information Consumption
6.1 Consumer Trends & Cafe Bazaar
6.2 Misinformation & Disinformation
7 Media Plurality
7.1 The Growing Role Of Instagram
7.2 Tv On-Demand
8 ICT Market & The Digital Economy
8.1 Mobile Registry
8.2 Start-Ups In The Digital Economy
8.3 Legalisation Of Circumvention Tools
Conclusion

Filterwatch Yearbook 1398
Preface
Welcome to the inaugural edition of the Filterwatch Yearbook. This is the first edition in a series of yearbooks documenting important developments shaping the Internet in Iran. The first two volumes of this report are being published in the early months of 2021, and cover the Iranian calendar years 1398 (which falls between March 2019 and March 2020) and 1399 (covering the period between March 2020 and March 2021). -

Ukrainian Media Landscape 2019

The Academy of Ukrainian Press Ukrainian Media Landscape 2019 KAS Policy Paper 30 www.kas.de Ukrainian Media Landscape – 2019 Ivanov, Valery, Peters, Tim B. (Eds.). (2019). Ukrainian Media Landscape – 2019. Konrad-Adenauer-Stiftung Ukraine Office (Kyiv). The Academy of Ukrainian Press Ukrainian Media Landscape – 2019 is an analytical guide giving insight into Ukraine’s media development trends in the years 2018-2019. It contains the overview of Ukraine’s key structures and media market. Chapter authors are the leading industry media experts in Ukraine. The guide is intended for all interested in development of Ukraine’s media environment. This publication is prepared with the support of Konrad-Adenauer-Stiftung Ukraine Office (Kyiv). The authors are solely responsible for the opinions expressed in the guide chapters. Project management: Kateryna Bilotserkovets, KAS Office Ukraine Kyiv Cover photo by Kateryna Bilotserkovets: One of underground passages at the Vystavkovyi Tsentr (Expo Center) in Kyiv © Konrad-Adenauer-Stiftung e.V. Ukraine Office Kyiv, 2019 © The Academy of Ukrainian Press, 2019 The Academy of Ukrainian Press http://www.aup.com.ua/en/mainen/ [email protected] Konrad-Adenauer-Stiftung e.V. Ukraine Office Kyiv 5 Akademika Bohomoltsya str., office 1 01024 Kyiv Ukraine www.kas.de/web/ukraine Offi [email protected] Content Tim B. -

The Selendang Ayu Oil Spill: Lessons Learned Conference Proceedings August 16-19, 2005 — Unalaska, Alaska

The Selendang Ayu Oil Spill: Lessons Learned Conference Proceedings August 16-19, 2005 — Unalaska, Alaska Reid Brewer, Editor Published by: Alaska Sea Grant College Program University of Alaska Fairbanks AK-SG-06-02 Price: $15.00 Copyright © 2006 Alaska Sea Grant College Program Elmer E. Rasmuson Library Cataloging in Publication Data: The Selendang Ayu oil spill : lessons learned, conference proceedings, August 16-19, 2005, Unalaska, Alaska / edited by Reid Brewer. – Fairbanks : Alaska Sea Grant College Program, 2006. p. cm. – (Alaska Sea Grant, University of Alaska Fairbanks ; AK- SG-06-02) Includes bibliographical references. ISBN 1-56612-106-x 1. Selendang Ayu Oil Spill, Alaska, 2004—Congresses. 2. Oil spills— Alaska—Unalaska Island—Congresses. 3. Oil pollution of the sea— Alaska—Unalaska Island—Congresses. I. Title. II. Brewer, Reid. IV. Series: Alaska Sea Grant College Program report ; AK-SG-06-02. TD427.P4 S45 2005 Credits This book is published by the Alaska Sea Grant College Program, supported by the U.S. Department of Commerce, NOAA National Sea Grant Office, grant NA16RG2321, projects A/161-01 and M/180-01, and grant NA16RG2830, project A/152-19; and by the University of Alaska Fairbanks with state funds. The University of Alaska is an affirmative action/equal opportunity employer and educational institution. Editing, book and cover design, and layout are by Jan O’Meara, Wizard Works, Homer, Alaska. Photos: front cover, title page, Alaska Department of Environmental Conservation, John Engles, December 17, 2004; back cover, top, Selendang Ayu hours before it grounded and broke apart on Unalaska Island, Lauren Adams, Unalaska Community Broadcasting, December 8, 2004; back cover, middle, crested auklet at intermediate treatment facility, Dutch Harbor, U.S. -

Alleviation of Refugee Crisis in Lebanon Through Humanitarian Aid Projects of Slovak Development Organizations in the Era of Covid-19 Pandemic B

Original Article Alleviation of Refugee Crisis in Lebanon through Humanitarian Aid Projects of Slovak Development Organizations in the Era of Covid-19 Pandemic B. Markovic Baluchova (Bozena Markovic Baluchova) 1 Department of Development and Environmental Studies, Faculty of Science, Palacky University in Olomouc, Ul. 17. listopadu 12, 771 46 Olomouc, Czech Republic. 2 Ambrela – Platform for Development Organizations, Mileticova 7, 82108 Bratislava, Slovakia. E-mail address: [email protected] Reviewers: Andrea A Shahum, Chapel Hill NC, USA; Claus Muss, IGAP Zurich CH Reprint address: Bozena Markovic Baluchova, Department of Development and Environmental Studies, Faculty of Science, Palacky University in Olomouc, Ul. 17. listopadu 12, 77146 Olomouc, Czech Republic Source: Clinical Social Work and Health Intervention Volume: 12 Issue: 1 Pages: 61 – 66 Cited references: 14 Publisher: International Society of Applied Preventive Medicine i-gap Keywords: Humanitarian Aid. SlovakAid. ODA. Lebanon. Refugees. Migration. Covid-19 Pandemic. Ambrela – Platform for Development Organizations. CSWHI 2021; 12(1): 61 – 66; DOI: 10.22359/cswhi_12_1_13 © Clinical Social Work and Health Intervention Abstract: Lebanon hosts the highest number of refugees in the world in relation to its population (every seventh inhabitant is a refugee). In light of the events of Spring 2020, new concerns emerge: how will the living conditions of the domestic and refugee communities in Lebanon, already burdened by the economic crisis, be exacerbated by the Covid-19 pandemic? In this paper, the Slovak intervention by members of Ambrela – Platform for Development Organizations is presented. -

Video Marketing in Iran

Introduction Marketing is about connecting with your audience in the right place, at the right time. That means meeting them where they already spend much of their time, which is online. Businesses therefore use digital assets to connect with and influence their current and prospective customers. As elsewhere in the world, digital marketing is one of the fastest-growing and most popular marketing approaches in Iran, and it is on its way to becoming the market standard. This Iran digital marketing report provides information and forecasts about the digital marketing industry in Iran. It presents the crucial facts and figures about digital marketing in Iran in 2018. Also included are the latest news on the subject, along with social media marketing and other related subjects. Find our other reports and publications on: www.sisarv.com/publication Disclaimer The information provided in this report is collected from valid sources. It is provided without guarantee on the part of the creator and Sisarv marcom agency. The creator and the publisher deny any liability in connection with the use of this information. Summary about Iran Iran is the second-largest country in the Middle East and, with over 81 million inhabitants, the world’s 18th most populous country. Tehran is the country’s capital and largest city, as well as its leading economic, cultural and financial center. Iran is officially an Islamic republic. Iran’s economy is a mixture of central planning, state ownership of oil and other large enterprises, village agriculture, and small-scale private trading and service ventures. As the source of 10 percent of the world’s proven oil reserves and 15 percent of the world’s gas reserves, Iran is considered an “energy superpower,” and the economy is dominated by oil and gas production.