Computational Models of Human Intelligence

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


A Framework for Representing Knowledge, Marvin Minsky, MIT-AI Laboratory Memo 306, June 1974

A Framework for Representing Knowledge. Marvin Minsky. MIT-AI Laboratory Memo 306, June 1974. Reprinted in The Psychology of Computer Vision, P. Winston (Ed.), McGraw-Hill, 1975. Shorter versions in J. Haugeland (Ed.), Mind Design, MIT Press, 1981, and in Cognitive Science, Allan Collins and Edward E. Smith (Eds.), Morgan-Kaufmann, 1992, ISBN 55860-013-2. FRAMES It seems to me that the ingredients of most theories both in Artificial Intelligence and in Psychology have been on the whole too minute, local, and unstructured to account–either practically or phenomenologically–for the effectiveness of common-sense thought. The "chunks" of reasoning, language, memory, and "perception" ought to be larger and more structured; their factual and procedural contents must be more intimately connected in order to explain the apparent power and speed of mental activities. Similar feelings seem to be emerging in several centers working on theories of intelligence. They take one form in the proposal of Papert and myself (1972) to sub-structure knowledge into "micro-worlds"; another form in the "Problem-spaces" of Newell and Simon (1972); and yet another in new, large structures that theorists like Schank (1974), Abelson (1974), and Norman (1972) assign to linguistic objects. I see all these as moving away from the traditional attempts both by behavioristic psychologists and by logic-oriented students of Artificial Intelligence in trying to represent knowledge as collections of separate, simple fragments. I try here to bring together several of these issues by pretending to have a unified, coherent theory. The paper raises more questions than it answers, and I have tried to note the theory's deficiencies.

Chapter 1 of Artificial Intelligence: a Modern Approach

1 INTRODUCTION In which we try to explain why we consider artificial intelligence to be a subject most worthy of study, and in which we try to decide what exactly it is, this being a good thing to decide before embarking. We call ourselves Homo sapiens–man the wise–because our intelligence is so important to us. For thousands of years, we have tried to understand how we think; that is, how a mere handful of matter can perceive, understand, predict, and manipulate a world far larger and more complicated than itself. The field of artificial intelligence, or AI, goes further still: it attempts not just to understand but also to build intelligent entities. AI is one of the newest fields in science and engineering. Work started in earnest soon after World War II, and the name itself was coined in 1956. Along with molecular biology, AI is regularly cited as the “field I would most like to be in” by scientists in other disciplines. A student in physics might reasonably feel that all the good ideas have already been taken by Galileo, Newton, Einstein, and the rest. AI, on the other hand, still has openings for several full-time Einsteins and Edisons. AI currently encompasses a huge variety of subfields, ranging from the general (learning and perception) to the specific, such as playing chess, proving mathematical theorems, writing poetry, driving a car on a crowded street, and diagnosing diseases. AI is relevant to any intellectual task; it is truly a universal field. 1.1 WHAT IS AI? We have claimed that AI is exciting, but we have not said what it is.

Artificial Intelligence: The Next Twenty-Five Years

AI Magazine Volume 26 Number 4 (2006)(2005) (© AAAI) 25th Anniversary Issue. Artificial Intelligence: The Next Twenty-Five Years. Edited by Matthew Stone and Haym Hirsh. ■ Through this collection of programmatic statements from key figures in the field, we chart the progress of AI and survey current and future directions for AI research and the AI community. We know–with a title like that, you’re expecting something awful. You’re on the lookout for fanciful prognostications about technology: Someday computers will fit in a suitcase and have a whole megabyte of memory. And you’re wary of lurid Hollywood visions of “the day the robots come”: A spiderlike machine pins you to the wall and targets a point four inches behind your eyes with long and disturbing spikes. Your last memory before it uploads you is of it asking, with some alien but unmistakable existen- ... that celebrates the exciting new work that AI researchers are currently engaged in. At the same time, we hope to help to articulate, to readers thinking of taking up AI research, the rewards that practitioners can expect to find in our mature discipline. And even if you’re a stranger to AI, we think you’ll enjoy this inside peek at the ways AI researchers talk about what they really do. We asked our contributors to comment on goals that drive current research in AI; on ways our current questions grow out of our past results; on interactions that tie us together as a field; and on principles that can help to represent our profession to society at large. We have excerpted here from the responses we received and edited them together to bring out some of the most distinctive themes.

How to Do Research at the MIT AI Lab

Massachusetts Institute of Technology Artificial Intelligence Laboratory. AI Working Paper 316, October, 1988. How to do Research At the MIT AI Lab, by a whole bunch of current, former, and honorary MIT AI Lab graduate students. David Chapman, Editor. Version 1.3, September, 1988. Abstract This document presumptuously purports to explain how to do research. We give heuristics that may be useful in picking up the specific skills needed for research (reading, writing, programming) and for understanding and enjoying the process itself (methodology, topic and advisor selection, and emotional factors). Copyright © 1987, 1988 by the authors. A. I. Laboratory Working Papers are produced for internal circulation, and may contain information that is, for example, too preliminary or too detailed for formal publication. It is not intended that they should be considered papers to which reference can be made in the literature. Contents: 1 Introduction; 2 Reading AI; 3 Getting connected; 4 Learning other fields; 5 Notebooks; 6 Writing; 7 Talks; 8 Programming; 9 Advisors; 10 The thesis; 11 Research methodology; 12 Emotional factors. 1 Introduction What is this? There’s no guaranteed recipe for success at research. This document collects a lot of informal rules-of-thumb advice that may help. Who’s it for? This document is written for new graduate students at the MIT AI Laboratory. However, it may be useful to many others doing research in AI at other institutions. People even in other fields have found parts of it useful.

If It Works, It's Not AI: A Commercial Look at Artificial Intelligence Startups, by Eve M. Phillips

If It Works, It's Not AI: A Commercial Look at Artificial Intelligence Startups by Eve M. Phillips. Submitted to the Department of Electrical Engineering and Computer Science in Partial Fulfillment of the Requirements for the Degrees of Bachelor of Science in Computer Science and Engineering and Master of Engineering in Electrical Engineering and Computer Science at the Massachusetts Institute of Technology, May 7, 1999. © Copyright 1999 Eve M. Phillips. All rights reserved. The author hereby grants to M.I.T. permission to reproduce and distribute publicly paper and electronic copies of this thesis and to grant others the right to do so. Author: Department of Electrical Engineering and Computer Science, May 7, 1999. Certified by: Patrick Winston, Thesis Supervisor. Accepted by: Arthur C. Smith, Chairman, Department Committee on Graduate Theses. ABSTRACT The goal of this thesis is to learn from the successes and failure of a select group of artificial intelligence (AI) firms in bringing their products to market and creating lasting businesses. I have chosen to focus on AI firms from the 1980s in both the hardware and software industries because the flurry of activity during this time makes it particularly interesting. The firms I am spotlighting include the LISP machine makers Symbolics and Lisp Machines Inc.; AI languages firms, such as Gold Hill; and expert systems software companies including Teknowledge, IntelliCorp, and Applied Expert Systems.

Enabling Imagination Through Story Alignment

Enabling Imagination through Story Alignment by Matthew Paul Fay, B.S. Chemistry, Purdue University, 2009. Submitted to the Department of Electrical Engineering and Computer Science in Partial Fulfillment of the Requirements for the Degree of Master of Science in Electrical Engineering and Computer Science at the MASSACHUSETTS INSTITUTE OF TECHNOLOGY, February, 2012. © 2012 Massachusetts Institute of Technology. All rights reserved. Signature of Author: Department of Electrical Engineering and Computer Science, January 26, 2012. Certified by: Patrick H. Winston, Ford Professor of Electrical Engineering and Computer Science, Thesis Supervisor. Accepted by: Leslie A. Kolodziejski, Professor of Electrical Engineering and Computer Science, Chair, EECS Committee on Graduate Students. Submitted to the Department of Electrical Engineering and Computer Science on January 20, 2011 in Partial Fulfillment of the Requirements for the Degree of Master of Science in Electrical Engineering and Computer Science. ABSTRACT Stories are an essential piece of human intelligence. They exist in countless forms and varieties seamlessly integrated into every facet of our lives. Stories fuel human understanding and our explanations of the world. Narrative acts as a Swiss army knife, simultaneously facilitating the transfer of knowledge, culture and beliefs while also powering our high level mental faculties. If we are to develop artificial intelligence with the cognitive capacities of humans, our systems must not only be able to understand stories but also to incorporate them into the thought process as humans do. In order to work towards the goal of computational story understanding, I developed a novel story comparison method.

Working Paper 61: Qualitative Knowledge, Causal Reasoning, and the Localization of Failures

WORKING PAPER 61. QUALITATIVE KNOWLEDGE, CAUSAL REASONING, AND THE LOCALIZATION OF FAILURES by ALLEN L. BROWN. Massachusetts Institute of Technology Artificial Intelligence Laboratory, March, 1974. Abstract A research program is proposed, the goal of which is a computer system that embodies the knowledge and methodology of a competent radio repairman. Work reported herein was conducted at the Artificial Intelligence Laboratory, a Massachusetts Institute of Technology research program supported in part by the Advanced Research Projects Agency of the Department of Defense and monitored by the Office of Naval Research under Contract Number N00014-70-A-0362-0005. Working Papers are informal papers intended for internal use. Qualitative Knowledge, Causal Reasoning, and the Localization of Failures: A Proposal for Research. Whither Debugging? The recent theses of Fahlman <Fahlman>, Goldstein <Goldstein>, and Sussman <Sussman> document programs which exhibit formidable problem-solving competences in particular micro-worlds. In the last instance, the problem-solving system begins as a rank novice and proceeds, with suitable "homework," to improve its skill. In these problem-solving systems, a number of distinct, but by no means independent, components are identifiable. Among these are planning, model-building, the formulation and testing of hypotheses, and debugging. It is debugging, as a problem-solving faculty, that will be the focus of attention of our research. Goldstein and Sussman, in automatic programming, and Papert <Papert>, in education, have more than adequately argued the importance of a bug as a Powerful Idea, and debugging as an important intellectual process. Despite these important insights into the role of debugging in various intellectual endeavors, we are left, nonetheless, with inadequate models of the debugging process and a less than complete characterization of any category of bugs.

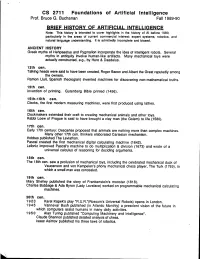

CS 2711 Foundations of Artificial Intelligence, Prof. Bruce G. Buchanan

CS 2711 Foundations of Artificial Intelligence, Prof. Bruce G. Buchanan, Fall 1989-90. BRIEF HISTORY OF ARTIFICIAL INTELLIGENCE Note: This history is intended to cover highlights in the history of AI before 1980, particularly in the areas of current commercial interest: expert systems, robotics, and natural language understanding. It is admittedly incomplete and biased. ANCIENT HISTORY Greek myths of Hephaestus and Pygmalion incorporate the idea of intelligent robots. Several myths in antiquity involve human-like artifacts. Many mechanical toys were actually constructed, e.g., by Hero & Daedalus. 13th cen. Talking heads were said to have been created, Roger Bacon and Albert the Great reputedly among the owners. Ramon Llull (Spanish theologian) invented machines for discovering non-mathematical truths. 15th cen. Invention of printing. Gutenberg Bible printed (1456). 15th-16th cen. Clocks, the first modern measuring machines, were first produced using lathes. 16th cen. Clockmakers extended their craft to creating mechanical animals and other toys. Rabbi Loew of Prague is said to have brought a clay man (the Golem) to life (1580). 17th cen. Early 17th century: Descartes proposed that animals are nothing more than complex machines. Many other 17th cen. thinkers elaborated Cartesian mechanism. Hobbes published The Leviathan. Pascal created the first mechanical digital calculating machine (1642). Leibniz improved Pascal's machine to do multiplication & division (1673) and wrote of a universal calculus of reasoning for deciding arguments. 18th cen. The 18th cen. saw a profusion of mechanical toys, including the celebrated mechanical duck of Vaucanson and von Kempelen's phony mechanical chess player, The Turk (1769), in which a small man was concealed.

AAAI Officials

AI Magazine Volume 26 Number 4 (2006)(2005) (© AAAI) 25th Anniversary Issue. AAAI Officials and Staff, 1980–2005. Presidents: Allen Newell (1979–80), Edward Feigenbaum (1980–81), Marvin Minsky (1981–82), Nils Nilsson (1982–83), John McCarthy (1983–84), Woodrow Bledsoe (1984–85), Patrick Winston (1985–87), Raj Reddy (1987–89), Daniel Bobrow (1989–91), Patrick Hayes (1991–93), Barbara Grosz (1993–95), Randall Davis (1995–97), David Waltz (1997–1999), Bruce Buchanan (1999–2001), Tom M. Mitchell (2001–03), Ron Brachman (2003–05), Alan Mackworth (2005–07), Eric Horvitz (2007–09). Secretary-Treasurers: Donald Walker (1979–83), Richard Fikes (1983–1986), Bruce Buchanan (1987–93). Term Expired in 1986: Eugene Charniak, Randall Davis, Stanley Rosenschein, Mark Stefik. Term Expired in 1987: Ronald Brachman, John McDermott, Charles Rich, Edward Shortliffe. Term Expired in 1988: John Seely Brown, Ryszard Michalski, Tom Mitchell, Fernando Pereira. Term Expired in 1989: Lynn Conway, Barbara Grosz, Douglas Lenat, William Woods. Term Expired in 1996: Thomas Dean, Robert Engelmore, Peter Friedland, Ramesh Patil. Term Expired in 1997: Tim Finin, Martha E. Pollack, Katia Sycara, Daniel Weld. Term Expired in 1998: Kenneth Ford, Richard Korf, Steven Minton, Lynn Andrea Stein. Term Expired in 1999: Jon Doyle, Leslie Pack Kaelbling. Term Expired in 2005: Carla Gomes, Michael Littman, Maja Mataric, Yoav Shoham. Term Expires in 2006: Steve Chien, Yolanda Gil, Haym Hirsh, Andrew Moore. Term Expires in 2007: Oren Etzioni, Lise Getoor, Karen Myers, Illah Nourbakhsh. Term Expires in 2008: Maria Gini, Kevin Knight, Peter Stone, Sebastian Thrun. Listed without a term heading in this excerpt: Peter Hart, Raj Reddy; Johan de Kleer, Benjamin Kuipers, Paul Rosenbloom, Beverly Woolf. Standing Committees. Finance Committee Chairs: Lester Earnest, 1980; Raj Reddy, 1984–87; Bruce G. Buchanan, 1988–92; Norman R. Nielsen, 1993–2002; Ted Senator, 2003–. Membership Committee Chairs: Bruce G.

Director of the Artificial Intelligence Laboratory in the Massachusetts Institute of Technology Professor Patrick Winston

Director of the Artificial Intelligence Laboratory in the Massachusetts Institute of Technology, Professor Patrick Winston. Dear Patrick: It was really bad news: the greatest scientist and outstanding person Marvin Minsky has left us. We are very sorry indeed. We know how highly he was regarded in the West. But we would like to stress that his creative books and ideas were very influential in Russia as well. His books and papers were very famous among Russian experts and students. I am proud to mention that I have translated his books about frames and about neural perceptrons. These books were published by our best publishing house, called МИР (WORLD). Actually his book on frame theory was even translated into Russian twice, as it was very popular. I think it would be impossible to find a PhD in Russia where Marvin Minsky was not referenced as the great master of Artificial Intelligence. Now that he is no longer with us, I vividly remember my visit to his home after the IJCAI conference in Boston. In my memory I keep his big room full of various machines, many pianos with the scores opened and the really huge adjustable wrench on his wall. Especially I would like to mention a TV film that I once had. This film showed that Marvin Minsky and John McCarthy flew to Europe to personally support Donald Michie in his difficult debate with his opponents, who tried to stop the penetration of AI into Europe. I showed this film at a big gathering of Russian Academicians and it was applauded. Maybe one of the latest scientific result by Prof.

Learning to Collaborate: Robots Building Together, by Kathleen Sofia Hajash

LEARNING TO COLLABORATE: ROBOTS BUILDING TOGETHER by Kathleen Sofia Hajash, B.Arch - California Polytechnic State University, San Luis Obispo (2013). Submitted to the Department of Architecture and the Department of Electrical Engineering and Computer Science in partial fulfillment of the requirements for the degrees of Master of Science in Architecture Studies and Master of Science in Electrical Engineering and Computer Science at the Massachusetts Institute of Technology, June 2018. © 2018 Kathleen S. Hajash. All rights reserved. The author hereby grants MIT permission to reproduce and to distribute publicly paper and electronic copies of this thesis document in whole or in part in any medium now known or hereafter created. Signature of Author: Kathleen Hajash, Department of Architecture, Department of Electrical Engineering and Computer Science, May 23, 2018. Certified by: Skylar Tibbits, Assistant Professor of Design Research, Thesis Advisor. Certified by: Patrick Winston, Ford Professor of Artificial Intelligence and Computer Science, Thesis Advisor. Accepted by: Sheila Kennedy, Professor of Architecture, Chair of the Department Committee on Graduate Students. Accepted by: Leslie A. Kolodziejski, Professor of Electrical Engineering and

Latanya Sweeney. That's AI?: a History and Critique of the Field. Carnegie Mellon Technical Report

That's AI?: A History and Critique of the Field. Latanya Sweeney. July 2003. CMU-CS-03-1063. School of Computer Science, Carnegie Mellon University, Pittsburgh, PA 15213-3890. Keywords: History of AI, philosophy of AI, human intelligence, machine intelligence, Turing Test, reasoning. Abstract What many AI researchers do when they say they are doing AI contradicts what some AI researchers say is AI. Surveys of leading AI textbooks demonstrate a lack of a generally accepted historical record. These surveys also show AI researchers as primarily concerned with prescribing ideal mathematical behaviors into computers, accounting for 987 of 996 (or 99%) of the AI references surveyed. The most common expectation of AI concerns constructing machines that behave like humans, yet only 27 of 996 (or 2%) of the AI references surveyed were directly consistent with this description. Both approaches have shortcomings: prescribing superior behavior into machines fails to scale to multiple tasks easily, while on the other hand, modeling human behaviors in machines can give results that are not always correct or fast. The discrepancy between the kind of work conducted in AI and the kind of work expected from AI cripples the ability to measure progress in the field. This research was supported in part by the Laboratory for International Data Privacy in the School of Computer Science at Carnegie Mellon University. 1. Introduction More than 50 years have passed since the origin of artificial intelligence (AI). Recently, Warner Brothers and DreamWorks released Steven Spielberg's film, "A.I.", thereby advancing the state of science fiction from "E.T." [43], which is a story about an extraterrestrial who comes to earth and befriends a boy, to "A.I." [44], which is a story about a human-like robot that believes he is a boy.