Digital Video and HDTV Algorithms and Interface

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Elementary Number Theory and Methods of Proof

CHAPTER 4: ELEMENTARY NUMBER THEORY AND METHODS OF PROOF. SECTION 4.4: Direct Proof and Counterexample IV: Division into Cases and the Quotient-Remainder Theorem. Copyright © Cengage Learning. All rights reserved. The quotient-remainder theorem says that when any integer n is divided by any positive integer d, the result is a quotient q and a nonnegative remainder r that is smaller than d. Example 1 – The Quotient-Remainder Theorem: For each of the following values of n and d, find integers q and r such that n = dq + r and 0 ≤ r < d. a. n = 54, d = 4 b. n = –54, d = 4 c. n = 54, d = 70 Solution: a. 54 = 4 · 13 + 2; hence q = 13 and r = 2. b. –54 = 4 · (–14) + 2; hence q = –14 and r = 2. c. 54 = 70 · 0 + 54; hence q = 0 and r = 54. div and mod: A number of computer languages have built-in functions that enable you to compute many values of q and r for the quotient-remainder theorem. These functions are called div and mod in Pascal, are called / and % in C and C++, are called / and % in Java, and are called / (or \) and mod in .NET. The functions give the values that satisfy the quotient-remainder theorem when a nonnegative integer n is divided by a positive integer d and the result is assigned to an integer variable. However, they do not give the values that satisfy the quotient-remainder theorem when a negative integer n is divided by a positive integer d. For instance, to compute n div d for a nonnegative integer n and a positive integer d, you just divide n by d and ignore the part of the answer to the right of the decimal point.
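The difference between the theorem's q and r and what truncating integer division returns can be checked with a short Python sketch. This is an illustration only and is not part of the excerpt: the function names are hypothetical, and `truncated_div_mod` merely imitates the round-toward-zero behaviour that the excerpt attributes to the built-in operators of languages such as C, C++ and Java.

```python
def quotient_remainder(n: int, d: int) -> tuple[int, int]:
    """Return (q, r) with n == d*q + r and 0 <= r < d, for a positive d."""
    if d <= 0:
        raise ValueError("d must be a positive integer")
    # Python's divmod floors the quotient, so r is never negative when d > 0.
    return divmod(n, d)


def truncated_div_mod(n: int, d: int) -> tuple[int, int]:
    """Imitate truncating division: the quotient is rounded toward zero."""
    q = abs(n) // abs(d)
    if (n < 0) != (d < 0):
        q = -q
    r = n - d * q  # takes the sign of n, so it can be negative
    return q, r


if __name__ == "__main__":
    for n, d in [(54, 4), (-54, 4), (54, 70)]:
        print(n, d, quotient_remainder(n, d), truncated_div_mod(n, d))
```

For nonnegative n the two functions agree. For n = –54 and d = 4 the truncating version yields a negative remainder, which does not satisfy 0 ≤ r < d.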

Designing a Cinema Auditorium

ROLV GJESTLAND: HOW TO DESIGN A CINEMA AUDITORIUM. Practical guidelines for architects, cinema owners and others involved in planning and building cinemas. HOW TO USE THIS BOOK: This book can be read from beginning to end, to learn more about the elements that need to be taken into consideration when designing a cinema auditorium. But it may also be used as a reference in the design process, as the different parts of the auditorium are planned. Just be aware that making a change in one element might affect others. Designing a cinema auditorium is quite easy if you follow the rules. However, if everyone followed all the rules, all auditoriums would look the same. Go beyond the rules, but always keep in mind that the main purpose of a cinema auditorium is to give the audience the best film experience. In addition, there are many other rooms in a cinema complex where you can use your creativity to create great experiences for patrons and make them want to come back. FEEDBACK: This is the first edition of the book, and I am sure someone will miss something, someone will disagree with something and something will be incomplete or difficult to understand. Please do not hesitate to share your opinions, so the next edition can be better and the next one after that… Please use the contact info below. ABOUT THE AUTHOR: Rolv Gjestland has a master's degree in metallurgy from the Norwegian University of Science and Technology. He is now an advisor in cinema concepts, design, logistics and technology for Film&Kino, the nonprofit trade organisation for Norwegian cinemas.

Dtavhal Programmers Interface Table of Contents

DTAvHAL Programmers Interface, Revision 0.1. Copyright (c) 2004-2012 Drastic Technologies Ltd. All Rights Reserved. www.drastic.tv. Table of Contents: DTAvHAL Programmers Interface; Introduction; Direct Link Usage (Windows 32 OS – using 32 bit; Windows 64 – using 32 bit; Windows 64 – using 64 bit; Mac OS-X; Linux 64); Functions (avOpen; avClose; avChannels)

Signal Operated Automatic Color Killer System

Sept. 29, 1959. L. DIETCH. 2,906,814. SIGNAL OPERATED AUTOMATIC COLOR KILLER SYSTEM. Filed April 28, 1955. 2 Sheets-Sheet 1. [Drawing sheets 1 and 2: figure text not recoverable.] United States Patent Office. 2,906,814. Patented Sept. 29, 1959. SIGNAL OPERATED AUTOMATIC COLOR KILLER SYSTEM. Leonard Dietch, Haddonfield, N.J., assignor to Radio Corporation of America, a corporation of Delaware. Application April 28, 1955, Serial No. 504,503. 4 Claims. (Cl. 178-5.4) The present invention relates to circuitry for automatically switching between two modes of operation and, [...] the phase of the sync bursts with the phase of the locally produced wave to derive a correction voltage for controlling the frequency and phase of the oscillator which produces the reference wave at the receiver. It is desirable that synchronization of the receiver's color reference oscillator have been effected before the chrominance channel is activated, in order to prevent the production of spurious color information pending such synchronization. It is, therefore, a primary object of the present invention to provide new and improved automatic color channel disabling means. Another object of the invention is the provision of automatic color channel disabling means, the operation of which is correlated with the action of the receiver color.

Square Vs Non-Square Pixels

Square vs non-square pixels. Adapted from: Flash + After Effects by Chris Jackson. Square versus non-square pixels can cause problems when exporting Flash for TV and video if you get it wrong. Here Chris Jackson explains how best to avoid these mistakes... Before you adjust the Stage width and height, you need to be aware of the pixel aspect ratio. This refers to the width and height of each pixel that makes up an image. Computer screens display square pixels. Every pixel has an aspect ratio of 1:1. Video uses non-square rectangular pixels, actually scan lines. To make matters even more complicated, the pixel aspect ratio is not consistent between video formats. NTSC video uses a non-square pixel that is taller than it is wide. It has a pixel aspect ratio of 1:0.906. PAL is just the opposite. Its pixels are wider than they are tall, with a pixel aspect ratio of 1:1.06. Figure 1: The pixel aspect ratio can produce undesirable image distortion if you do not compensate for the difference between square and non-square pixels. Flash only works in square pixels on your computer screen. As the Flash file migrates to video, the pixel aspect ratio changes from square to non-square. The end result will produce a slightly stretched image on your television screen. On NTSC, round objects will appear flattened. PAL stretches objects, making them appear skinny. The solution is to adjust the dimensions of the Flash Stage. A common Flash Stage size used for NTSC video is 720 x 540, which is slightly taller than its video size of 720 x 486 (D1).
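The 720 x 540 Stage size quoted above can be reproduced with a small Python sketch. It is an illustration under stated assumptions, not the author's procedure: it assumes the picture is shown on a 4:3 display (the excerpt does not state the display aspect ratio), and the function names are hypothetical.

```python
from fractions import Fraction


def square_pixel_stage(video_width: int,
                       display_aspect: Fraction = Fraction(4, 3)) -> tuple[int, int]:
    """Return a square-pixel (width, height) that fills the assumed display aspect."""
    # With square pixels, width / height must equal the display aspect ratio.
    height = Fraction(video_width) / display_aspect
    return video_width, round(height)


def vertical_scale(video_height: int, stage_height: int) -> Fraction:
    """Factor applied to the Stage height when it is rendered to the video raster."""
    return Fraction(video_height, stage_height)


if __name__ == "__main__":
    stage = square_pixel_stage(720)
    print(stage)                         # Stage size for a 720-pixel-wide raster
    print(vertical_scale(486, stage[1])) # squeeze from Stage height to 486 lines
```

Artwork drawn on the taller square-pixel Stage is squeezed vertically when it is scaled to the 486-line raster, which is meant to offset the distortion introduced by the non-square video pixels.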

13A Computer Video (1022-1143)

Section 13a: COMPUTER VIDEO (Video SourceBook). 12 Inch Design (1024-1027); Adobe (1028-1043); AJA (1044-1063); Apple (1064-1079); Avid (1080-1083); Automatic Duck (1084-1085); BlackMagic Design (1086-1099); Boris FX (1100-1109); Cineform (1110-1111); DigiEffects (1112-1113); Contour Design (1114); Grass Valley (1115-1119); Intel (1120); JL Cooper (1122-1123); Matrox (1124-1127); MOTU (1128-1129); Newtek (1130-1133); Red Giant (1134-1139); Sony (1140-1143); Sorenson (1144); Tiffen (1145). Obtaining information and ordering from B&H is quick and easy. When you call us, just punch in the corresponding Quick Dial number anytime during our welcome message. The Quick Dial code then directs you to the specific professional sales associates in our order department.

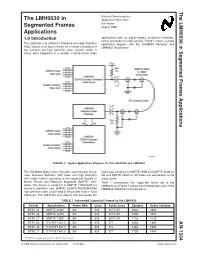

Application Note 1334 the LMH0030 in Segmented Frames Applications

The LMH0030 in Segmented Frames Applications. AN-1334. National Semiconductor Application Note 1334. Kai Peters. August 2006.

1.0 Introduction. The LMH0030 and LMH0031 Standard and High Definition Video chipset is an ideal solution for a variety of products in the standard and high definition video systems realm. It allows easy integration in a number of professional video applications such as digital routers, production switchers, format converters or video servers. Figure 1 shows a typical application diagram with the LMH0030 Serializer and LMH0031 Deserializer.

FIGURE 1. Typical Application Diagram for the LMH0030 and LMH0031

The LMH0030 Digital Video Serializer automatically recognizes Standard Definition (SD) video and High Definition (HD) video formats according to the respective Society of Motion Picture and Television Engineers (SMPTE) standards. The device is compliant to SMPTE 125M/267M for standard definition and SMPTE 260M/274M/295M/296M high definition video as provided to the parallel 10bit or 20bit interfaces. The LMH0030 auto-detects and processes the video data compliant to SMPTE 259M and SMPTE 344M for SD and SMPTE 292M for HD Video and serialization to the output ports. Table 1 summarizes the supported frame set of the LMH0030 and Figure 2 shows the simplified data path of the LMH0030 SD/HD Encoder/Serializer.

TABLE 1. Automated Supported Frames by the LMH0030

| Format | Specification | Frame Rate | Lines | Active Lines | Samples | Active Samples |
|---|---|---|---|---|---|---|
| SDTV, 54 | SMPTE 344M | 60I | 525 | 507/1487 | 3432 | 2880 |
| SDTV, 36 | SMPTE 267M | 60I | 525 | 507/1487 | 2288 | 1920 |
| SDTV, 27 | SMPTE 125M | 60I | 525 | 507/1487 | 1716 | 1440 |
| SDTV, 54 | ITU-R BT 601.5 | 50I | 625 | 577 | 3456 | 2880 |
| SDTV, 36 | ITU-R BT 601.5 | 50I | 625 | 577 | 2304 | 1920 |
| SDTV, 27 | ITU-R BT 601.5 | 50I | 625 | 577 | 1728 | 1440 |

PHYTER® is a registered trademark of National Semiconductor.

Tv Signal Processor for Multi System

To our customers, Old Company Name in Catalogs and Other Documents On April 1st, 2010, NEC Electronics Corporation merged with Renesas Technology Corporation, and Renesas Electronics Corporation took over all the business of both companies. Therefore, although the old company name remains in this document, it is a valid Renesas Electronics document. We appreciate your understanding. Renesas Electronics website: http://www.renesas.com April 1st, 2010 Renesas Electronics Corporation Issued by: Renesas Electronics Corporation (http://www.renesas.com) Send any inquiries to http://www.renesas.com/inquiry. Notice 1. All information included in this document is current as of the date this document is issued. Such information, however, is subject to change without any prior notice. Before purchasing or using any Renesas Electronics products listed herein, please confirm the latest product information with a Renesas Electronics sales office. Also, please pay regular and careful attention to additional and different information to be disclosed by Renesas Electronics such as that disclosed through our website. 2. Renesas Electronics does not assume any liability for infringement of patents, copyrights, or other intellectual property rights of third parties by or arising from the use of Renesas Electronics products or technical information described in this document. No license, express, implied or otherwise, is granted hereby under any patents, copyrights or other intellectual property rights of Renesas Electronics or others. 3. You should not alter, modify, copy, or otherwise misappropriate any Renesas Electronics product, whether in whole or in part. 4. Descriptions of circuits, software and other related information in this document are provided only to illustrate the operation of semiconductor products and application examples.

Introduction to Uncertainties (Prepared for Physics 15 and 17)

Introduction to Uncertainties (prepared for Physics 15 and 17). Average deviation. When you have repeated the same measurement several times, common sense suggests that your “best” result is the average value of the numbers. We still need to know how “good” this average value is. One measure is called the average deviation. The average deviation or “RMS deviation” of a data set is the average value of the absolute value of the differences between the individual data numbers and the average of the data set. For example, if the average is 23.5 cm/s and the average deviation is 0.7 cm/s, then the number can be expressed as (23.5 ± 0.7) cm/s. Rule 0. Numerical and fractional uncertainties. The uncertainty in a quantity can be expressed in numerical or fractional forms. Thus in the above example, ± 0.7 cm/s is a numerical uncertainty, but we could also express it as ± 2.98%, which is a fraction or %. (Remember, %’s are hundredths.) Rule 1. Addition and subtraction. If you are adding or subtracting two uncertain numbers, then the numerical uncertainty of the sum or difference is the sum of the numerical uncertainties of the two numbers. For example, if A = 3.4 ± 0.5 m and B = 6.3 ± 0.2 m, then A + B = 9.7 ± 0.7 m, and A − B = −2.9 ± 0.7 m. Notice that the numerical uncertainty is the same in these two cases, but the fractional uncertainty is very different. Rule 2. Multiplication and division. If you are multiplying or dividing two uncertain numbers, then the fractional uncertainty of the product or quotient is the sum of the fractional uncertainties of the two numbers.
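The average deviation and Rules 0 to 2 can be sketched in Python as follows. The function names are hypothetical and the code is only an illustration of the rules as the excerpt states them (uncertainties are simply added, not combined in quadrature).

```python
import math


def average_deviation(values):
    """Return (mean, average absolute deviation from the mean)."""
    mean = math.fsum(values) / len(values)
    deviation = math.fsum(abs(v - mean) for v in values) / len(values)
    return mean, deviation


def fractional(value, uncertainty):
    """Rule 0: convert a numerical uncertainty to a fractional one."""
    return uncertainty / abs(value)


def add_or_subtract(a, da, b, db, subtract=False):
    """Rule 1: numerical uncertainties add for both sums and differences."""
    value = a - b if subtract else a + b
    return value, da + db


def multiply(a, da, b, db):
    """Rule 2: fractional uncertainties add; return (product, numerical uncertainty)."""
    value = a * b
    frac = fractional(a, da) + fractional(b, db)
    return value, abs(value) * frac


if __name__ == "__main__":
    print(add_or_subtract(3.4, 0.5, 6.3, 0.2))        # A + B
    print(add_or_subtract(3.4, 0.5, 6.3, 0.2, True))  # A - B
    print(multiply(3.4, 0.5, 6.3, 0.2))               # A * B
    print(100 * fractional(23.5, 0.7))                # percent uncertainty
```

Division would follow the same pattern as `multiply`, with the quotient in place of the product.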

Unit 6: Multiply & Divide Fractions Key Words to Know

Unit 6: Multiply & Divide Fractions. Learning Targets: LT 1: Interpret a fraction as division of the numerator by the denominator (a/b = a ÷ b). LT 2: Solve word problems involving division of whole numbers leading to answers in the form of fractions or mixed numbers, e.g. by using visual fraction models or equations to represent the problem. LT 3: Apply and extend previous understanding of multiplication to multiply a fraction or whole number by a fraction. LT 4: Interpret the product (a/b) q ÷ b. LT 5: Use a visual fraction model. Conversation Starters: § How are fractions like division problems? (For example: if 9 people want to share a 50-lb sack of rice equally by weight, how many pounds of rice should each person get?) § 3 pizzas at 10 slices each need to be divided among 14 friends. How many pieces would each friend receive? § How can a model help us make sense of a problem? Key Words to Know: *Fraction *Numerator *Denominator *Mixed number *Improper fraction *Product *Equation *Division. Fractions as Division: Students will interpret a fraction as division of the numerator by the denominator. What does a fraction as division look like? Important Steps: - Fractions are another way to show division. - Fractions are equal size pieces of a whole. - The numerator becomes the dividend and the denominator becomes the divisor. How can I support this strategy at home? Practice: https://www.khanacademy.org/math/cc-fifth-grade-math/cc-5th-fractions-topic/tcc-5th-fractions-as-division/v/fractions-as-division Quotient as a Fraction: Students will solve real world problems by dividing whole numbers that have a quotient resulting in a fraction.
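The two conversation-starter problems can be worked with Python's `fractions` module. This is a small illustrative sketch, not part of the unit materials, and the function names are hypothetical.

```python
from fractions import Fraction


def share_equally(total: int, people: int) -> Fraction:
    """A fraction is a division: total / people, kept exact."""
    return Fraction(total, people)


def as_mixed_number(value: Fraction) -> tuple[int, Fraction]:
    """Split a nonnegative fraction into (whole part, proper fraction)."""
    whole, remainder = divmod(value.numerator, value.denominator)
    return whole, Fraction(remainder, value.denominator)


if __name__ == "__main__":
    rice = share_equally(50, 9)         # pounds of rice per person
    slices = share_equally(3 * 10, 14)  # pizza slices per friend
    print(rice, as_mixed_number(rice))
    print(slices, as_mixed_number(slices))
```

Each person gets 50/9 pounds of rice, which is the mixed number 5 5/9, and each friend gets 30/14 = 15/7 slices, or 2 1/7.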

The Electricimage™ Reference

Book Page 1 Friday, July 21, 2000 4:18 PM The ElectricImage™ Reference A guide to the ElectricImage™ Animation System Version 2.0 © 1989, 1990, 1991, 1992, 1993, 1994 Electric Image, Inc. All Rights Reserved No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, recording, or otherwise, without the prior written permission of Electric Image. The software described in this manual is furnished under license and may only be used or copied in accordance with the terms of such license. The information in this manual is furnished for informational use only, is subject to change without notice and should not be construed as a commitment by Electric Image. Electric Image assumes no responsibility or liability for any errors or inaccuracies that may appear in this book. Electric Image recommends that you observe the rights of the original artist or publisher of the images you scan or acquire in the use of texture maps, reflection maps, bump maps, backgrounds or any other usage. If you plan to use a previously published image, contact the artist or publisher for information on obtaining permission. ElectricImage, Pixel Perfect Rendering and Animation, Mr. Fractal, and Mr. Font are trademarks of Electric Image. Macintosh and Apple are registered trademarks of Apple Computer, Incorporated. Other brand names and product names are trademarks or registered trademarks of their respective companies. This document written and designed by Stephen Halperin at: Electric Image, Inc. 117 East Colorado Boulevard Suite 300 Pasadena, California 91105 Cover image by Jay Roth.

Understanding HD and 3G-SDI Video Poster

Understanding HD & 3G-SDI Video

EYE DIAGRAM: The eye diagram is constructed by overlaying portions of the sampled data stream until enough data transitions produce the familiar display. A unit interval (UI) is defined as the time between two adjacent signal transitions, which is the reciprocal of clock frequency. UI is 3.7 ns for digital component 525 / 625 (SMPTE 259M), 673.4 ps for digital high-definition (SMPTE 292) and 336.7 ps for 3G-SDI serial digital (SMPTE 424M) as shown in Table 1. A serial receiver determines if the signal is “high” or “low” in the center of each eye, and detects the serial data.

Amplitude is important because of its relation to noise, and because the receiver estimates the required high-frequency compensation (equalization) based on the remaining half-clock-frequency energy as the signal arrives. Incorrect amplitude at the sending end could result in an incorrect equalization applied at the receiving end, thus causing signal distortions. Overshoot of the rising and falling edge should not exceed 10% of the waveform [...]

DIGITAL SIGNAL TIMING, HORIZONTAL LINE TIMING: Digital video synchronization is provided by End of Active Video (EAV) and Start of Active Video (SAV) sequences which start with a unique three word pattern: 3FFh (all bits in the word set to 1), 000h (all 0’s), 000h (all 0’s), followed by a fourth “XYZ” word whose [...]

Y', R'-Y', B'-Y' commonly used for analog component video:

| Format | 1125/60/2:1, 750/60/1:1 | 525/59.94/2:1, 625/50/2:1, 1250/50/2:1 |
|---|---|---|
| Y' | 0.2126 R' + 0.7152 G' + 0.0722 B' | 0.299 R' + 0.587 G' + 0.114 B' |
| R'-Y' | 0.7874 R' - 0.7152 G' - 0.0722 B' | 0.701 R' - 0.587 G' - 0.114 B' |
| B'-Y' | -0.2126 R' - 0.7152 G' + 0.9278 B' | -0.299 R' - 0.587 G' + 0.886 B' |
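As a rough illustration of two points in this excerpt, the Python sketch below computes Y', R'-Y' and B'-Y' from the luma coefficients listed for each format group, and scans a list of words for the 3FFh, 000h, 000h pattern that opens an EAV or SAV sequence. The function and constant names are hypothetical, video words are assumed to be plain integers, and the fourth "XYZ" word is returned undecoded because the excerpt breaks off before describing it.

```python
# Luma coefficients for (R', G', B') as listed in the excerpt.
LUMA_HD = (0.2126, 0.7152, 0.0722)  # 1125/60/2:1 and 750/60/1:1
LUMA_SD = (0.299, 0.587, 0.114)     # 525/59.94/2:1, 625/50/2:1, 1250/50/2:1

# Three-word pattern that starts every EAV and SAV sequence.
TRS_PREAMBLE = (0x3FF, 0x000, 0x000)


def color_difference(r, g, b, coeffs):
    """Return (Y', R'-Y', B'-Y') for gamma-corrected R'G'B' values."""
    kr, kg, kb = coeffs
    y = kr * r + kg * g + kb * b
    return y, r - y, b - y


def find_timing_references(words):
    """Return (index, xyz_word) for each 3FFh 000h 000h pattern found."""
    hits = []
    # Stop three words early so the word after the pattern always exists.
    for i in range(len(words) - 3):
        if tuple(words[i:i + 3]) == TRS_PREAMBLE:
            hits.append((i, words[i + 3]))
    return hits


if __name__ == "__main__":
    print(color_difference(1.0, 0.0, 0.0, LUMA_HD))  # pure red, HD coefficients
    print(color_difference(1.0, 0.0, 0.0, LUMA_SD))  # pure red, SD coefficients
    stream = [0x200, 0x3FF, 0x000, 0x000, 0x274, 0x040]
    print(find_timing_references(stream))
```

For pure red, R'-Y' comes out as 1 minus the red luma coefficient, matching the 0.7874 and 0.701 factors in the table.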