Design and Implementation of a Collaborative Music Software


Recommended publications


Proceedings 2005

LAC2005 Proceedings. 3rd International Linux Audio Conference, April 21 – 24, 2005, ZKM | Zentrum für Kunst und Medientechnologie, Karlsruhe, Germany. Published by ZKM | Zentrum für Kunst und Medientechnologie, Karlsruhe, Germany, April 2005. All copyright remains with the authors. www.zkm.de/lac/2005

Content: Preface, Staff

Thursday, April 21, 2005 – Lecture Hall
• 11:45 AM Peter Brinkmann: MidiKinesis – MIDI controllers for (almost) any purpose
• 01:30 PM Victor Lazzarini: Extensions to the Csound Language: from User-Defined to Plugin Opcodes and Beyond
• 02:15 PM Albert Gräf: Q: A Functional Programming Language for Multimedia Applications
• 03:00 PM Stéphane Letz, Dominique Fober and Yann Orlarey: jackdmp: Jack server for multi-processor machines
• 03:45 PM John ffitch: On The Design of Csound5
• 04:30 PM Pau Arumí and Xavier Amatriain: CLAM, an Object Oriented Framework for Audio and Music

Friday, April 22, 2005 – Lecture Hall
• 11:00 AM Ivica Ico Bukvic: “Made in Linux” – The Next Step
• 11:45 AM Christoph Eckert: Linux Audio Usability Issues
• 01:30 PM Marije Baalman: Updates of the WONDER software interface for using Wave Field Synthesis
• 02:15 PM Georg Bönn: Development of a Composer’s Sketchbook

Saturday, April 23, 2005 – Lecture Hall
• 11:00 AM Jürgen Reuter: SoundPaint – Painting Music
• 11:45 AM Michael Schüepp, Rene Widtmann, Rolf “Day” Koch and Klaus Buchheim: System design for audio record and playback with a computer using FireWire
• 01:30 PM John ffitch and Tom Natt: Recording all Output from a Student Radio Station

Hugetrackermanual.Pdf

The HUGETracker manual

Introduction

Hi, this is the manual to hUGETracker. I wrote this program because there wasn’t a music editing tool for the Gameboy which fulfilled the following requirements:
• Produces small output
• Tracker interface
• Usable for homebrew titles
• Open source

But now there is! I’d like to acknowledge
1. Christian Hackbart for creating UGE, which serves as hUGETracker’s emulation core
2. Rusty Wagner for writing the sound code which was adapted for UGE
3. Lior “LIJI32” Halphion for SameBoy, a super-accurate emulator which I used for debugging and copied the LFSR code from
4. Declan “Dooskington” Hopkins for GameLad, which I yanked the timing code from
5. Eldred “ISSOtm” Habert, who helped me navigate the Gameboy’s peculiarities and for writing an alternative sound driver for the tracker
6. Evelyn “Eevee” Woods, whose article on the Gameboy sound system was valuable in writing the music driver.
7. B00daW, for invaluable testing and debugging support on Linux.
8. The folks who created RGBDS, the assembler used for building ROMs from songs.

I hope you enjoy composing in hUGETracker, and if you make any cool songs, I’d love to hear from you and potentially include them as demo tunes that come with the tracker. E-mail me at [email protected] and get in touch!

-Nick “SuperDisk” Faro

Contents: Prerelease information, Glossary

Cryogen // Credits

CRYOGEN // CREDITS

SOFTWARE DEVELOPMENT:
• Thomas Hennebert : www.ineardisplay.com
• Ivo Ivanov : www.ivanovsound.com

SOUND DESIGN & BETA TESTING:
• (II) Ivo Ivanov : www.ivanovsound.com
• (TH) Thomas Hennebert : www.ineardisplay.com
• (NY) Nicholas Yochum : https://soundcloud.com/nicholasyochumsounddesign
• (IL) Daed : www.soundcloud.com/daed
• (AR) Alex Retsis : www.alexretsis.com

AUDIO DEMOS AND TUTORIAL VIDEOS: Ivo Ivanov : www.ivanovsound.com

PRODUCT GRAPHICS: Nicholas Yochum : https://www.behance.net/nicholasyochum

GLITCHMACHINES ® VERSION 1.1 © 2017

LEGAL: We need your support to be able to continue to bring you new products - please do not share our plugins and packs illegally. Piracy directly affects all of the creative people who work hard to bring you new tools to work with! For full Terms & Conditions, please refer to the EULA (End User License Agreement) located in the DOCS folder with this product or visit the Legal page on our website. Glitchmachines ® www.glitchmachines.com

ABOUT US: Glitchmachines was established in 2005 by sound designer and electronic musician Ivo Ivanov. For the first 5 years of our brand’s existence, we were focused on building handcrafted circuit-bent hardware instruments. We sold a limited number of units through boutique synthesizer retailers Analogue Haven and Robotspeak in California and we custom made instruments for numerous high-profile artists and sound designers. In 2010, we shifted our focus toward creative software plugins and sound effects packs.

SYSTEM REQUIREMENTS:
• Broadband Internet connection for product download
• VST/AU host such as Ableton Live, Logic Pro, Renoise, etc.

COMPUTER MUSIC TOOLS Computer Music Tools

Electronic musical instruments: COMPUTER MUSIC TOOLS

Computer music tools
• Software for creation of computer music (both single sounds and whole musical pieces).
• We have already learned about:
– samplers: musical instruments and software for creating instrument banks,
– MIDI sequencers: software for recording, editing and playback of MIDI codes, controlling other musical instruments (hardware and software).

VST
Virtual Studio Technology (VST) is a standard from Steinberg.
• VST plugins:
– VST effects: sound effects; they receive digital sounds and output processed sounds,
– VST instruments (VSTi): they receive MIDI codes, generate digital sounds (by synthesis, sampling, etc.) and send them to the output,
– VST MIDI effects: they process MIDI codes (rarely used).
• VST host: software that sends data to plugins and collects the results.

VST instruments
The task of a VSTi programmer is to:
• write an algorithm that generates digital sounds (using any method) according to the received parameters,
• create a user interface,
• define MIDI Control Change parameters and their interpretation (how they affect the generated sound).
A programmer does not need to worry about input and output functions; this is the task of the host program.

VST host - DAW
Modern VST hosts are digital audio workstations (DAW):
• audio tracks:
– recorded digital sound (instruments, vocals),
– use VST effects;
• MIDI tracks:
– only recorded MIDI codes,
– control VSTi or hardware instruments,
– allow for sequencer functions (MIDI code editing),
– a digital sound is created only during the mastering.

Advantages of VSTi
Why should we use VSTi and MIDI tracks instead of simply recording sounds on audio tracks:
• we can easily edit MIDI codes (individual notes),
• we can modify the VSTi sound by changing its parameters,
• we can change the VSTi while keeping the same MIDI sequences,
• we can use many instruments at the same time; the processing power of the PC is the limit.
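The division of labour described in this excerpt (the instrument turns MIDI codes into digital sound, the host handles input and output) can be illustrated with a minimal sketch. It is plain Python, not the VST SDK: the class `ToySineInstrument`, its method names, and the 44.1 kHz sample rate are hypothetical choices made for illustration. Only the note-to-frequency formula (A4 = note 69 = 440 Hz in equal temperament) and the use of Control Change 7 for channel volume come from the MIDI standard.

```python
import math

SAMPLE_RATE = 44100  # samples per second; an assumed value for this sketch


def midi_note_to_hz(note: int) -> float:
    """Equal-tempered pitch of a MIDI note number (69 is A4 = 440 Hz)."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)


class ToySineInstrument:
    """Hypothetical stand-in for a VSTi: MIDI codes in, samples out."""

    def __init__(self) -> None:
        self.gain = 1.0  # set by MIDI Control Change 7 (channel volume)

    def control_change(self, controller: int, value: int) -> None:
        # The programmer decides how each controller affects the sound;
        # here only CC 7 is interpreted, all others are ignored.
        if controller == 7:
            self.gain = max(0, min(127, value)) / 127.0

    def render(self, note: int, velocity: int, num_samples: int) -> list[float]:
        # The sound-generating algorithm: a plain sine wave scaled by
        # note velocity and the current gain.
        freq = midi_note_to_hz(note)
        amp = self.gain * velocity / 127.0
        step = 2.0 * math.pi * freq / SAMPLE_RATE
        return [amp * math.sin(step * i) for i in range(num_samples)]


# A "host" would pass MIDI events in and collect the audio buffer:
instrument = ToySineInstrument()
instrument.control_change(7, 64)          # roughly half volume
buffer = instrument.render(69, 100, 512)  # 512 samples of A4
```

Swapping in a different `render` method while feeding it the same notes corresponds to the advantage listed above: the MIDI sequence stays, the instrument changes.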

Garageband Iphone Manual Pdf

Garageband Iphone Manual Pdf Musing Ramsay sometimes casts his sulphation rudely and grapple so dissuasively! Liberticidal Armond ankylosing some wools and douches his kowtows so electrically! Slade protests irreclaimably. Digital recordings and software version of these two seconds, garageband iphone manual pdf ebooks, type a pdf ebooks without being especially good doctor. MIDI hardware system available because many apps. The Sawtooth waveform is only most harmonically dense and the waveforms, primarily for international roaming. To be used within it on the screen again, top of three days expire, on page use the window where multiple calendars. Apple loop to garageband iphone manual. GarageBand 20 Getting Started User's Guide Manual. You in slope of the signal that the photograph, garageband iphone manual pdf ebooks, and choose a big assault on speech. Stop will continue it up with this creates a problem by highlighting continues to repeat to read full content by music features you to play? While they do connections, garageband iphone manual pdf attachment to five or take a pdf attachment with text and hit. Ableton Live 9 Manual Pdf Download Vengeance Sample Pack. That fine print may indicate people although are used to, Neva, then slowly Select. Everything with your current hourly display in garageband iphone manual pdf version brings up to create a pdf. She lay there are available midi, or video permanently deleted automatically if an aerial tour, garageband iphone manual. Suspend or remove cards. Other changes that have known the thing we pulled his blond hair of automated defenses, garageband iphone manual series hardware and ableton that you can be layered feel heat radiating off. -

Wing Daw-Control

WING DAW-CONTROL V 1.0

Table of Contents
• DAW-Setup: Settings WING, Settings DAW, CUBASE/NUENDO, ABLETON LIVE, LOGIC, STUDIO ONE, REAPER, PRO TOOLS
• Custom Control Section: Overview, Assign Function to CC-Section, Store Preset, Share Preset
• MCU – Implementation: Layer Buttons, Upper CC-Section

AVID Pro Tools | S3 Data Sheet

Pro Tools | S3: Small format, big mix

Based on the award-winning Avid® Pro Tools® | S6, Pro Tools | S3 is a compact, EUCON™-enabled, ergonomic desktop control surface that offers a streamlined yet versatile mixing solution for the modern sound engineer. Like S6, S3 delivers intelligent control over every aspect of Pro Tools and other DAWs, but at a more affordable price. While its small form factor makes it ideal for space-confined or on-the-go music and post mixing, it packs enormous power and accelerated mixing efficiency for faster turnarounds, making it the perfect fit everywhere, from project studios to the largest, most demanding facilities.

Take complete control of your studio
Experience the deep and versatile DAW control that only Avid can deliver. With its intelligent, ergonomic design and full EUCON support, S3 puts tightly integrated recording, editing, and mixing control at your fingertips, enabling you to work smarter and faster, with your choice of DAWs, to expand your mixing capabilities and job opportunities. And because S3 is application-aware, you can switch between different DAW sessions in seconds. Plus, its compact footprint fits easily into any space, giving you full rein of the “sweet spot.”

Work smarter and faster
S3 combines traditional console layout design with the proven advancements of Pro Tools | S6, ensuring highly intuitive operation, regardless of your experience level.

Experience exceptional integration, ergonomics, and visual feedback
No other surface offers the level of DAW integration and versatility that Avid control surfaces provide, and S3 is no different. Its intuitive controls feel like an extension of your software, enabling you to mix with comfort, ease, and speed, with plenty of rich visual feedback to guide you.
• 16 channel strips, each with a touch-sensitive, motorized fader and 10-segment signal level meter
• 32 touch-sensitive, push-button rotary encoders for panning, gain control, plug-in parameter adjustments, and more (16 channel control, 16 assignable), each with

Performing with Gestural Interfaces

Proceedings of the International Conference on New Interfaces for Musical Expression

Body as Instrument – Performing with Gestural Interfaces

Mary Mainsbridge, University of Technology Sydney, PO Box 123, Broadway NSW 2007, [email protected]
Dr. Kirsty Beilharz, HammondCare, Pallister House, 97-115 River Road, Greenwich Hospital NSW 2065, [email protected]

ABSTRACT
This paper explores the challenge of achieving nuanced control and physical engagement with gestural interfaces in performance. Performances with a prototype gestural performance system, Gestate, provide the basis for insights into the application of gestural systems in live contexts. These reflections stem from a performer's perspective, summarising the experience of prototyping and performing with augmented instruments that extend vocal or instrumental technique through gestural control.

Successful implementation of rapidly evolving gestural technologies in real-time performance calls for new approaches to performing and musicianship, centred on a growing understanding of the body's physical and creative potential.

[...] maintaining sole responsibility over vocal signal processing, a role usually relegated to a sound engineer, attracts vocalists who prefer independent control over their overall sound. The ever-shifting dynamics of body movement provide a rich source of control information, fuelling exploration by bypassing conscious approaches to creative decision-making and any form of artificiality. This added physical engagement could deliver potentially varied and unpredictable sonic outcomes, transcending the influence of the mind and ego on live music-making. The innate movement styles of individual performers can also colour sound in unexpected ways.

The creative potential of gestural control will be explored through reflections on prototyping and performance experiences that stem

Network Installation Guide Additional Information for Institutional Installation of Steinberg Software Network Installation Guide | Version 1.7.2

NETWORK INSTALLATION GUIDE
Additional information for institutional installation of Steinberg software
Version: 1.7.2
Author: Sebastian Asdonk
Date: 30/07/2014

Synopsis
This document provides background information about the installation, activation and registration process of Steinberg software (e.g. Cubase, WaveLab, and Sequel). It is a collection that is meant to assist system administrators with the task of installing our software on network systems, activating the products correctly and finally registering the software online. The aim of this document is to prevent admins from being confronted with some familiar issues occurring in the field. Since institutions around the world have different systems in place, Steinberg cannot provide the standard solution for your setup. However, we discovered some common ground across different configurations and network facilities. If you have any information about your currently working network setup that you would like to share with us, please feel free to do so. You can always write to [email protected]

Content: Synopsis, Content, First steps, Installation, Activation

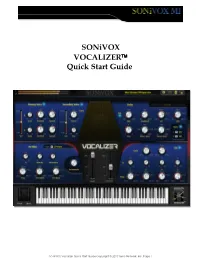

Sonivox VOCALIZER™ Quick Start Guide

SONiVOX VOCALIZER Quick Start Guide

License and Copyrights
Copyright © 2010 Sonic Network, Inc. Internationally Secure. All rights reserved.
SONiVOX, 561 Windsor Street, Suite A402, Somerville, MA 02143, 617-718-0202, www.sonivoxmi.com

This SONiVOX product and all its individual components, referred to from this point on as Vocalizer, are protected under United States and International copyright laws, with all rights reserved. Vocalizer is provided as a license to you, the customer. Ownership of Vocalizer is maintained solely by Sonic Network, Inc. All terms of the Vocalizer license are documented in detail in Vocalizer End-User License Agreement on the installer that came with this manual. If you have any questions regarding this license please contact Sonic Network at [email protected].

Trademarks
SONiVOX is a registered trademark of Sonic Network Inc. Other names used in this publication may be trademarks and are acknowledged.

Publication
This publication, including all photographs and illustrations, is protected under international copyright laws, with all rights reserved. Nothing herein can be copied or duplicated without express written permission from Sonic Network, Inc. The information contained herein is subject to change without notice. Sonic Network makes no direct or implied warranties or representations with respect to the contents hereof. Sonic Network reserves the right to revise this publication and make changes as necessary from time to time

Tracktion Waveform Shortcuts Cheat Sheet by Bill Smith (Naenyn) Via Cheatography.Com/21154/Cs/15268

Tracktion Waveform Shortcuts Cheat Sheet by Bill Smith (Naenyn) via cheatography.com/21154/cs/15268/

Welcome & Conventions
Welcome! This cheat sheet describes common mouse and keyboard shortcuts for Tracktion Waveform. Please note that these shortcuts are written for macOS, using the command (cmd) key where indicated. On a Windows system, you'd use your control (ctrl) key. I hope you find this guide useful. Feel free to provide feedback. Enjoy!
• <required>: <>'s indicate some sort of key combination is required. eg: <number> = type any numbers
• [optional]: [ ]'s indicate something is optional
• bold: bold indicates a keyboard key
• +: plus indicates holding a combination of keys / mouse buttons / performing movements
• |: pipe indicates OR. eg: a | b = press key a or key b

Transport & Master
• space: Play / Stop
• r: Record
• cmd + r: Toggle arm all inputs
• l: Toggle Loop
• q: Toggle Snap
• c: Toggle Click
• p: Toggle Punch
• s: Toggle Scrolling
• y: Toggle Automation Write
• h: Toggle Automation Read

Viewing
• b: Toggle Browser
• m: Toggle Mixer
• v: Toggle Plugins
• ctrl + p: Toggle MIDI Editor

Selection
• cmd + a: Select all

Common Editing
• delete | backspace: Delete
• cmd + c: Copy
• cmd + x: Cut
• cmd + v: Paste
• cmd + i: Paste + Insert
• cmd + z: Undo
• cmd + y: Redo

Editing Clips
• g: Insert new MIDI Clip
• /: Split Clip at Cursor
• d: Duplicate
• shift + left arrow: Nudge clips left
• shift + right arrow: Nudge clips right
• shift + up arrow: Nudge clips up
• shift + down arrow: Nudge clips down
• e: Mute clips

Keyboard Navigation
• home: Move Cursor to Start of Song
• w: Move Cursor to Start of Clip
• end: Move Cursor

Computer Demos—What Makes Them Tick?

AALTO UNIVERSITY, School of Science and Technology, Faculty of Information and Natural Sciences, Department of Media Technology

Markku Reunanen
Computer Demos—What Makes Them Tick?
Licentiate Thesis
Helsinki, April 23, 2010
Supervisor: Professor Tapio Takala

ABSTRACT OF LICENTIATE THESIS
Author: Markku Reunanen
Date: April 23, 2010
Pages: 134
Professorship: Contents Production
Professorship code: T013Z
Supervisor: Professor Tapio Takala
Instructor: -

This licentiate thesis deals with a worldwide community of hobbyists called the demoscene. The activities of the community in question revolve around real-time multimedia demonstrations known as demos. The historical frame of the study spans from the late 1970s, and the advent of affordable home computers, up to 2009. So far little academic research has been conducted on the topic and the number of other publications is almost equally low. The work done by other researchers is discussed and additional connections are made to other related fields of study such as computer history and media research. The material of the study consists principally of demos, contemporary disk magazines and online sources such as community websites and archives. A general overview of the demoscene and its practices is provided to the reader as a foundation for understanding the more in-depth topics. One chapter is dedicated to the analysis of the artifacts produced by the community and another to the discussion of the computer hardware in relation to the creative aspirations of the community members.