Individual Tree Crown Delineation for the Species Classification And

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Sample Design and Weighting Procedures for Bulgaria Crisis Monitoring Survey (CMS) and the Bulgarian Longitudinal Inclusive Society Survey (BLISS)

Sample Design and Weighting Procedures for Bulgaria Crisis Monitoring Survey (CMS) and the Bulgarian Longitudinal Inclusive Society Survey (BLISS) David J. Megill Statistical Consultant, World Bank May 2014 1. Background The Bulgaria Crisis Monitoring Survey (CMS) was a multi-topic panel survey conducted in February 2010, October 2010 and February 2011. A longitudinal analysis is used for tracking the impact of the economic crisis over time. The CMS collected information on various demographic and socioeconomic characteristics of the households and individual household members, including questions on labor market participation and earnings, access to and receipts from social protection programs, informal safety nets and remittances. The survey focused on how Bulgarian households are affected by and are coping with the economic crisis, and includes information on informal employment, reduced spending, postponement of investments, the sale of household assets, and the reliance on formal and informal credit. A national sample of 2,400 households was selected for the main CMS, based on a stratified multi-stage sample design. Given the special need to study the more vulnerable ethnic minority Roma population, an independent "booster sample" of 300 households was selected in settlements and neighborhoods identified as predominantly Roma. The Bulgarian Longitudinal Inclusive Society Survey (BLISS) was conducted in March and April 2013, as a continuation of the longitudinal CMS, with an additional module about the skills of the adult population in Bulgaria. The main purpose of BLISS is to analyze the major barriers to activation (such as skills gaps and mismatches, informational asymmetries, and/or disincentives inherent in the tax-benefit schemes) for different groups (such as women, older workers, and Roma) through an understanding of the labor markets’ behavior.

The Black Sea to the North Sea - 2018

The Black Sea To The North Sea - 2018 Our overall route is shown to the right, beginning with a few days in Bucharest (Flying Pig Adventure #39 already published), and ending in Amsterdam / the North Sea, where we also spent a few days (to be covered in an upcoming Flying Pig Adventures travelogue). All this was supposed to be on a single ship, but river water levels along the way required some changes. In the area of Budapest, the river levels were so low we had to abandon the ship and resort to buses, which over a few days took us to a place further upstream on the Danube where we could continue our journey on a different ship. The ship we transferred to was built a couple of years before the one we had been on before the Budapest abandonment. That ship had been on its way to the Black Sea going the opposite way, so all the passengers just switched ships. After meeting up with the rest of our travel companions at the Bucharest hotel we were all bused a couple of hours away to our ship on the Danube. From there we headed downriver towards the Black Sea and into the Danube Delta. The Danube splits up into several waterways. We took a southern branch, the Delta’s oldest, most winding arm, Braţul Sfântu Gheorghe. It is the least used by freighters and fishing boats, wider but shallower than the Sulina arm. Sfântu Gheorghe is a small village of brightly painted Lipovani and Ukrainian cottages that has subsisted on fishing since the fourteenth century.

Annex A, Competitiveness and Economic Growth

SIESTA: Spatial Indicators for a ‘Europe 2020 Strategy’ Territorial Analysis. Applied Research 2013/1/18. Draft Scientific Report | Version 10/08/2012. Annex A: Competitiveness and Economic Growth. ESPON 2013. This report presents the draft final results of an Applied Research Project conducted within the framework of the ESPON 2013 Programme, partly financed by the European Regional Development Fund. The partnership behind the ESPON Programme consists of the EU Commission and the Member States of the EU27, plus Iceland, Liechtenstein, Norway and Switzerland. Each partner is represented in the ESPON Monitoring Committee. This report does not necessarily reflect the opinion of the members of the Monitoring Committee. Information on the ESPON Programme and projects can be found on www.espon.eu. The web site provides the possibility to download and examine the most recent documents produced by finalised and ongoing ESPON projects. This basic report exists only in an electronic version. © ESPON & Universidade de Santiago de Compostela, 2012. Printing, reproduction or quotation is authorised provided the source is acknowledged and a copy is forwarded to the ESPON Coordination Unit in Luxembourg. List of authors: Lidia Mierzejewska, Adam Mickiewicz University, Poznan, Poland. Table of contents: 1. Introduction; 2. Comments on maps; 2.1. GDP per capita in PPS, 2009; 2.2. Gross Domestic Product per inhabitants in PPS of NUTS 3 regions, 2007-2009; 2.3. Growth measured as GDP variations, 2000-2008; 2.4. Growth measured as GDP per capita. Change 2007-2011; 2.5. Labour productivity at regional level, 2008

Republic of Bulgaria Ministry of Energy 1/73 Fifth

REPUBLIC OF BULGARIA MINISTRY OF ENERGY FIFTH NATIONAL REPORT ON BULGARIA’S PROGRESS IN THE PROMOTION AND USE OF ENERGY FROM RENEWABLE SOURCES Drafted in accordance with Article 22(1) of Directive 2009/28/EC on the promotion of the use of energy from renewable sources on the basis of the model for Member State progress reports set out in Directive 2009/28/EC. December 2019. TABLE OF CONTENTS: ABBREVIATIONS USED; UNITS OF MEASUREMENT; 1. Shares (sectoral and overall) and actual consumption of energy from renewable sources in the last 2 years (2017 and 2018) (Article 22(1) of Directive 2009/28/EC); 2. Measures taken in the last 2 years (2017 and 2018) and/or planned at national level to promote the growth of energy from renewable sources, taking into account the indicative trajectory for achieving the national RES targets as outlined in your National Renewable Energy Action Plan (Article 22(1)(a) of Directive 2009/28/EC); 2.a Please describe the support schemes and other measures currently in place that are applied to promote energy from renewable sources and report on any developments in the measures used with respect to those set out in your National Renewable Energy Action Plan (Article 22(1)(b) of Directive 2009/28/EC); 2.b Please describe the measures in ensuring the transmission and distribution of electricity produced from renewable energy sources and in improving the regulatory framework for bearing and sharing of costs related to grid connections and grid reinforcements (for accepting greater loads).

Late Ottoman Empire

A BRIEF HISTORY OF THE Late Ottoman Empire. M. Şükrü Hanioğlu. Copyright © 2008 by Princeton University Press. Published by Princeton University Press, 41 William Street, Princeton, New Jersey 08540. In the United Kingdom: Princeton University Press, 3 Market Place, Woodstock, Oxfordshire OX20 1SY. All Rights Reserved. ISBN 978-0-691-13452-9. British Library Cataloging-in-Publication Data is available. This book has been composed in Minion Typeface. Printed on acid-free paper. ∞ press.princeton.edu Printed in the United States of America. Mazi ve müstakbel ahvâline vakıf ve belki ezel ve ebed esrarını ârif olmağa insanda bir meyl-i tabiî olduğundan ale-l-umum nev‘-i beşerin bu fenne [tarih] ihtiyac-ı ma‘nevîsi derkârdır. Since man has a natural aptitude for comprehending past and future affairs, and perhaps also for unlocking the secrets of eternities past and future, humanity’s spiritual need for this science [history] is evident. —Ahmed Cevdet, Tarih-i Cevdet, 1 (Istanbul: Matbaa-i Osmaniye, 1309 [1891]), pp. 16–17. Contents: List of Figures; Acknowledgments; Note on Transliteration, Place Names, and Dates; Introduction; 1. The Ottoman Empire at the Turn of the Nineteenth Century; 2.

Shark) Dental Morphology During the Early Mesozoic Dynamik Av Selachian (Haj) Tandmorfologi Under Tidig Mesozoisk

Examensarbete vid Institutionen för geovetenskaper Degree Project at the Department of Earth Sciences ISSN 1650-6553 Nr 399 Dynamics of Selachian (Shark) Dental Morphology During the Early Mesozoic Dynamik av Selachian (haj) tandmorfologi under Tidig Mesozoisk Alexander Paxinos INSTITUTIONEN FÖR GEOVETENSKAPER DEPARTMENT OF EARTH SCIENCES Copyright © Alexander Paxinos Published at Department of Earth Sciences, Uppsala University (www.geo.uu.se), Uppsala, 2017 Abstract The ancestors of all modern day sharks and rays (Neoselachii) may have appeared during the Late Palaeozoic, but their major diversification happened sometime during the Early Mesozoic. Taxonomic evidence places the first neoselachian diversification in the Early Jurassic. Taxonomic diversity analyses, however, are often affected by incompleteness of the fossil record and sampling biases. On the other hand, the range of morphological variation (disparity) offers a different perspective for studying evolutionary patterns across time. Disparity analyses are much more resilient to sampling biases than diversity analyses, and disparity has the potential to provide a more ecologically-relevant context.

Deteriogenic Effect of Microbial Colonization of Prehistoric Paintings in Magoura Cave, Bulgaria

Annuaire de l’Université de Sofia “St. Kliment Ohridski”, Faculté de Biologie, 2015, volume 100, livre 4, pp. 253-259. First National Conference of Biotechnology, Sofia 2014. DETERIOGENIC EFFECT OF MICROBIAL COLONIZATION OF PREHISTORIC PAINTINGS IN MAGOURA CAVE, BULGARIA. MILENA MITOVA*, MICHAIL ILIEV, RALITSA ANGELOVA, VENETA GROUDEVA. Department of General and Industrial Microbiology, Faculty of Biology, Sofia University “St. Kliment Ohridski”, Sofia, Bulgaria. *Corresponding author: [email protected] Keywords: Biodeterioration effect, microbial colonization, paintings, Magoura cave. Abstract: The Magoura Cave is located in North-Western Bulgaria. The cave contains an impressive display of prehistoric paintings made with the guano of cave-dwelling bats. Many different types of microorganisms may grow on such substrates under favourable environmental conditions and have a biodeterioration effect on the paintings. The present study aims at a complete characterization of the microbial communities inhabiting the Magoura cave and at determining the most active biodeteriogens. The comparative analysis of the microbial colonization clearly demonstrates that each sample possesses a unique microbial population structure and specific ratios between different target groups. The monitoring studies reveal that a continuously stable microbial population with steady qualitative and quantitative composition is present in the cave. In some samples high levels of sulphate-reducing bacteria, denitrifying and ammonifying bacteria as well as silicate bacteria were found. Some of these bacteria are typical biodeteriogens and it is possible they take part in the destruction of the paintings. Based on the results obtained, special treatments must be developed for the restriction of the microbial colonization and the conservation of the unique rock paintings.

Fast Delivery

Delivery and Returns. We strive to offer an unbeatable service and deliver our products safely and cost-effectively. Our main focus is serving our customers’ needs with a combination of great design, quality products, value for money, respect for the environment and outstanding service. Please read our Delivery terms and details before you complete your order. If you have any questions, we advise that you contact us at 080019889 and speak with one of our customer service associates. Delivery rates: The delivery is performed by a third-party delivery service provider. All orders under 20 kg are shipped by courier with door-to-door delivery service. All orders over 20 kg are shipped by transport company with delivery to building address service. All orders are processed within 72 hours from the day following the day on which the order is confirmed and given to the courier or transport company for delivery. After the ordered goods are given to the courier or transport company for delivery, we will send you a tracking number, which will allow you to check their website for the latest status. The delivery price is not included in the price of the goods. The transport and delivery costs depend on the weight and volume of the ordered items, the delivery area and any additional services (delivery to apartment entrance, etc.). The following delivery pricelist is applied. All prices are in Bulgarian Lev (BGN) with VAT included. 1. Delivery of samples and small packages door-to-door up to 20 kg (table header: Kilograms, Zone 1 - Zone 11; first row: 0 - 1 kg). 3. Additional service - Delivery to apartment entrance. If you need our assistance in delivering the goods to your apartment, this is an additionally paid handling service, which you can request by

Investbulgaria Agency

InvestBulgaria Agency, January 2014, issue 1 (4), year 2. Monthly Electronic Publication. Economic News - Business, Investments, Trade; Analysis of Sector; Investment Opportunities of Municipality; Interview; Chambers of Commerce in Bulgaria; Macroeconomic Indicators; Upcoming Events. Employment policy in 2014 supports economic and social recovery and stabilization of labor market. Rositsa Yankova, Deputy Minister of Labor and Social Policy. Until June 2013 she held the post of executive secretary on labor market at the Confederation of Independent Trade Unions in Bulgaria. From 2009 until 2011 she served as the Employment Agency’s CEO. From 2003 until 2009 she held the post of deputy CEO of the Employment Agency, where she was mainly responsible for the implementation of labor market policies and for European funds. From 2002 until 2003 she was director of the "Pre-Accession Funds and International Relations" department in the Employment Agency. She graduated from the Geo Milev language school in Dobrich. She holds a master’s degree in law from Sofia University St. Kliment Ohridski and a master’s degree in marketing from Konstantin Preslavsky University of Shumen. She specialized in administrative management at the Administrative college of India. Employment policy in 2014 supports economic and social recovery and stabilization of the labor market. It will be realized via the National action plan on employment, which is a key tool for the transition between two periods of development: a period of crisis and contraction, and a period of revival and growth in employment. Its annual implementation will contribute to meeting the goals and commitments of the country in the implementation of the EU Strategy 2020, namely: - Achievement of 76% employment; - Decrease in the number of those living below the poverty threshold by 260,000.

Regional Disparities in Bulgaria Today: Economic, Social, and Demographic Challenges

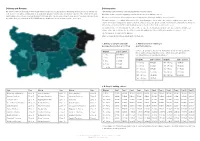

REGIONAL DISPARITIES IN BULGARIA TODAY: ECONOMIC, SOCIAL, AND DEMOGRAPHIC CHALLENGES Sylvia S. Zarkova, PhD Student1 D. A. Tsenov Academy of Economics – Svishtov, Department of Finance and Credit Abstract: To accelerate Bulgaria's economic development taking into account the specific characteristics of their regions is a serious challenge for the local governments in the country. The ongoing political and economic changes require a reassessment of the country's economic development. The aim of this study was to analyse the disparities among Bulgaria’s regions (defined in accordance with the Nomenclature of Territorial Units for Statistics (NUTS)) by assessing the degree of economic, social and demographic challenges they face and performing a multivariate comparative analysis with sets of statistically significant indicators. The analysis clearly outlines the boundaries of the regional disparities and the need to improve the country’s regional and cohesion policies. Key words: regional policy, differences, NUTS, taxonomic development measure. JEL: J11, O18, R11. * * * 1 E-mail: [email protected] The author is a member of the target group of doctoral students who participated in activities and training within the implementation of project BG05M2OP001-2.009-0026-C01 ‘Capacity development of students, PhD students, post-doctoral students and young scientists from the Dimitar A. Tsenov Academy of Economics - Svishtov for innovative scientific and practical Research in the field of economics, administration and management’ funded by the Operational Program ‘Science and Education for Smart Growth’ co-financed by the Structural and Investment Funds of the European Union. The paper won first place in the ‘Doctoral Students’ category of the national competition ‘Young Economist 2018’.

Illegal Caviar Trade in Bulgaria and Romania

WORKING TOGETHER TO INSPIRE SUSTAINABLE SOLUTIONS. REPORT EN 2013. ILLEGAL CAVIAR TRADE IN BULGARIA AND ROMANIA: RESULTS OF A MARKET SURVEY ON TRADE IN CAVIAR FROM STURGEONS (ACIPENSERIDAE). Cover photo: © WWF / Phyllis Rachler. Back cover photo: © Ralf Reinartz. Author: Jutta Jahrl, WWF Austria, Ottakringer Straße 114-116, 1160 Vienna, Austria, [email protected] Published by WWF Austria, Vienna, and TRAFFIC. © 2013 WWF Austria and TRAFFIC. All rights reserved. All material appearing in this publication is copyrighted and may be reproduced with permission. Any reproduction in full or in part of this publication must credit WWF Austria and TRAFFIC as the copyright owners. The designations of geographical entities in this publication, and the presentation of the material, do not imply the expression of any opinion whatsoever on the part of WWF, TRAFFIC or its supporting organizations concerning the legal status of any country, territory, or area, or its authorities, or concerning the delimitation of its frontiers or boundaries. Suggested citation: Jahrl J. (2013). Illegal caviar trade in Bulgaria and Romania - Results of a market survey on trade in caviar from sturgeons (Acipenseridae). WWF Austria & TRAFFIC, Vienna, Austria. TABLE OF CONTENTS: ACKNOWLEDGEMENTS; EXECUTIVE SUMMARY; SUMMARY RESULTS TABLE; 1. INTRODUCTION; 1.1 CONSERVATION STATUS OF DANUBE STURGEONS; 1.2 CITES REGULATIONS REGARDING TRADE IN CAVIAR; 1.3 CAVIAR LABELLING REGULATIONS; 1.4 THE CAVIAR TRADE FROM BULGARIA AND ROMANIA; 1.5 STURGEON FARMING AND CAVIAR PRODUCTION; 1.6 ILLEGAL FISHING AND TRADE IN STURGEONS AND CAVIAR IN/FROM ROMANIA AND BULGARIA; 2.

Dolj County Dolj Originally Meant

Dolj County. Dolj (originally meaning "lower Jiu", as opposed to Gorj, "upper Jiu") is a county of Romania on the border with Bulgaria, in Oltenia, with the capital city at Craiova. In 2011, it had a population of 660,544 and a population density of 89/km2 (230/sq mi). • Romanians – over 96% • Romani – 3% • Others – almost 1%. Geography: This county has a total area of 7,414 km2 (2,863 sq mi). The entire area is a plain with the Danube on the south forming a wide valley crossed by the Jiu River in the middle. Other small rivers flow through the county, each one forming a small valley. There are some lakes across the county and many ponds and channels in the Danube valley. 6% of the county's area is a desert. Neighbours: • Olt County to the east. • Mehedinți County to the west. • Gorj County and Vâlcea County to the north. • Bulgaria – Vidin Province to the southwest, Montana and Vratsa provinces to the south. Economy: Agriculture is the county's main industry. The county has land that is ideal for growing cereals, vegetables and wine grapes. Other industries are mainly located in the city of Craiova, the largest city in southwestern Romania. The county's main industries: • Automotive industry – Ford has a factory. • Heavy electrical and transport equipment – Electroputere Craiova is the largest factory plant in Romania. • Aeronautics; ITC • Chemicals processing; Foods and beverages • Textiles; Mechanical parts and components. There are two small ports on the shore of the Danube river – Bechet and Calafat. Tourism: Major tourist attractions: the city of Craiova; the city of Calafat; fishing on the Danube; the city of Băilești.