Improving Generalizability of Protein Sequence Models with Data Augmentations

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Structural and Functional Studies on Photoactive Proteins and Proteins Involved in Cell Differentiation

Technische Universität München, Fakultät für Organische Chemie und Biochemie. Max-Planck-Institut für Biochemie, Abteilung Strukturforschung, Biologische NMR-Arbeitsgruppe. Structural and Functional Studies on Photoactive Proteins and Proteins Involved in Cell Differentiation. Pawel Smialowski. Complete reprint of the dissertation approved by the Faculty of Chemistry of the Technische Universität München for the award of the academic degree of Doktor der Naturwissenschaften. Chair: Univ.-Prof. Dr. Dr. A. Bacher. Examiners of the dissertation: 1. apl. Prof. Dr. Dr. h.c. R. Huber; 2. Univ.-Prof. Dr. W. Hiller. The dissertation was submitted to the Technische Universität München on 10.02.2004 and accepted by the Faculty of Chemistry on 17.03.2004. Publications. Parts of this thesis have been or will be published in due course: Markus H. J. Seifert, Dorota Ksiazek, M. Kamran Azim, Pawel Smialowski, Nedilijko Budisa and Tad A. Holak. Slow Exchange in the Chromophore of a Green Fluorescent Protein Variant. J. Am. Chem. Soc. 2002, 124, 7932-7942. Markus H. J. Seifert, Julia Georgescu, Dorota Ksiazek, Pawel Smialowski, Till Rehm, Boris Steipe and Tad A. Holak. Backbone Dynamics of Green Fluorescent Protein and the Effect of Histidine 148 Substitution. Biochemistry 2003 Mar 11; 42(9): 2500-12. Pawel Smialowski, Mahavir Singh, Aleksandra Mikolajka, Narashimsha Nalabothula, Sudipta Majumdar, Tad A. Holak. The human HLH proteins MyoD and Id–2 do not interact directly with either pRb or CDK6. FEBS Letters (submitted) 2004. Abbreviations

Protein G Agarose

Protein G Agarose. Item No. 223-51-01, Size: 10 mL. INTRODUCTION: Protein G Agarose consists of recombinant protein G, which is produced in E. coli and, after purification, is covalently immobilized onto 4% cross-linked agarose beads. Protein G agarose is suitable for the isolation of IgG antibodies using column or immunoprecipitation methods. DNA sequencing of native protein G (from Streptococcal group G) has revealed two IgG-binding domains as well as sites for albumin and cell surface binding (1 - 6). Protein G has been designed to eliminate the albumin and cell surface binding domains to reduce nonspecific binding while maintaining efficient binding of the Fc region of IgGs. With the removal of these binding domains, Protein G can be used to separate albumin from crude human IgG samples (7). Covalently coupled Protein G Agarose has been widely used for the isolation of a wide variety of immunoglobulin molecules from several mammalian species. Protein G has greater affinity for many more mammalian IgGs than Protein A (Table 1). FORM/STORAGE: Protein G Agarose is supplied in a total volume of 15 mL consisting of 10 mL Protein G agarose suspended in 20% ethanol/PBS. Store at 2 - 8°C.

Table 1. Relative Affinity of Immobilized Protein G and Protein A for Various Antibody Species and Subclasses of polyclonal and monoclonal IgGs (8).

| Species/Subclass | Protein G | Protein A |
|---|---|---|
| MONOCLONAL | | |
| Human IgG1 | ++++ | ++++ |
| Human IgG2 | ++++ | ++++ |
| Human IgG3 | ++++ | --- |
| Human IgG4 | ++++ | ++++ |
| Mouse IgG1 | ++++ | + |
| Mouse IgG2a | ++++ | ++++ |
| Mouse IgG2b | +++ | +++ |
| Mouse IgG3 | +++ | ++ |
| Rat IgG1 | + | --- |
| Rat IgG2a | ++++ | --- |
| Rat IgG2b | ++ | --- |
| Rat IgG2c | ++ | + |
| POLYCLONAL | | |

Thermo Scientific Pierce Protein Purification Technical Handbook

Thermo Scientific Pierce Protein Purification Technical Handbook, Version 2. Table of Contents. Introduction for Protein Purification. Purification Accessories – Protein Extraction, Binding and Elution Buffers: Protease and Phosphatase Inhibitors for Protein Purification; Buffers for Protein Purification; Spin Cups and Columns; Disposable Plastic and Centrifuge Columns. Fusion Protein Purification: His-Tagged Protein Purification Resin; Cobalt Resin, Spin Columns and Chromatography Cartridges; High-quality purification of GST-fusion proteins; GST- and PolyHis-Tagged Pull-Down Assay Kits. Covalent Coupling of Affinity Ligands to Chromatography Supports: Covalent Immobilization of Ligands; Products for Immobilizing Ligands through Primary Amines; Products for Immobilizing Ligands through Sulfhydryl Groups; Products for Immobilizing Ligands through Carbonyl Groups; Products for Immobilizing Ligands through Carboxyl Groups. IP/Co-IP: Immunoprecipitation; Traditional Methods vs. … Antibody Purification: Overview; Immobilized Protein L, Protein A, Protein G and Protein A/G; IgG Binding and Elution Buffers for Protein A, G, A/G and L; Melon Gel Purification Products; Thiophilic Gel Antibody Purification; IgM and IgA Purification. Avidin:Biotin Binding: Biotin-binding Proteins; Immobilized Avidin Products; Immobilized Streptavidin Products; Immobilized NeutrAvidin Products; Immobilized Monomeric Avidin and Kit; Immobilized Iminobiotin and Biotin. FPLC Cartridges: Overview; His-tagged Protein FPLC Purification; GST-Tagged Protein FPLC Purification; Antibody FPLC Purification; Phosphoprotein FPLC Purification; Biotinylated Protein FPLC Purification; Protein Desalting. Affinity Supports.

Chapter 2. Materials and Methods

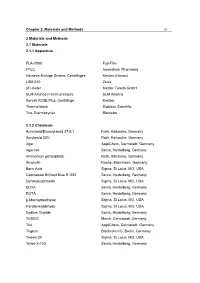

Chapter 2. Materials and Methods. 2.1 Materials. 2.1.1 Apparatus: FLA-3000 (Fuji-Film); FPLC (Amersham Pharmacia); Heraeus Biofuge Stratos, centrifuges (Kendro (Honau)); LSM 510 (Zeiss); pH-meter (Mettler Toledo GmbH); SLM Aminco French pressure (SLM Aminco); Sorvall RC5B Plus, centrifuge (Kendro); Thermo-block (Bioblock Scientific); Trio-Thermocycler (Biometra). 2.1.2 Chemicals: Acrylamide/Bisacrylamide 37.5:1 (Roth, Karlsruhe, Germany); Acrylamide 30% (Roth, Karlsruhe, Germany); Agar (AppliChem, Darmstadt, Germany); Agarose (Serva, Heidelberg, Germany); Ammonium persulphate (Roth, Karlsruhe, Germany); Ampicillin (Roche, Mannheim, Germany); Boric acid (Sigma, St Louis, MO, USA); Coomassie Brilliant Blue R G25 (Serva, Heidelberg, Germany); Dimethylsulphoxide (Sigma, St Louis, MO, USA); EDTA (Serva, Heidelberg, Germany); EGTA (Serva, Heidelberg, Germany); β-Mercaptoethanol (Sigma, St Louis, MO, USA); Paraformaldehyde (Sigma, St Louis, MO, USA); Sodium fluoride (Serva, Heidelberg, Germany); TEMED (Merck, Darmstadt, Germany); Tris (AppliChem, Darmstadt, Germany); Trypsin (Biochrom KG, Berlin, Germany); Tween-20 (Sigma, St Louis, MO, USA); Triton X-100 (Serva, Heidelberg, Germany). 2.1.3 Standards and kits: ECL Western Blotting Reagents (Amersham Pharmacia, Uppsala, Sweden); Coomassie Protein Assay kit (Pierce, Illinois, USA); Qiagen Plasmid Mini Kit (Qiagen, Hilden, The Netherlands); Qiagen Plasmid Midi Kit (Qiagen, Hilden, The Netherlands); QIAquick Gel Extraction Kit (Qiagen, Hilden, The Netherlands); QIAquick PCR Extraction Kit (Qiagen, Hilden, The Netherlands); QIAquick Nucleotide Extraction

A Tool to Detect Cis-Acting Elements Near Promoter Regions in Rice

Planta (2021) 253:40. https://doi.org/10.1007/s00425-021-03572-w. Original article. Rice protein-binding microarrays: a tool to detect cis-acting elements near promoter regions in rice. Joung Sug Kim, SongHwa Chae, Kyong Mi Jun, Gang-Seob Lee, Jong-Seong Jeon, Kyung Do Kim, Yeon-Ki Kim. Received: 27 October 2020 / Accepted: 8 January 2021 / Published online: 21 January 2021. © The Author(s) 2021. Main conclusion: The present study showed that a rice (Oryza sativa)-specific protein-binding microarray (RPBM) can be applied to analyze DNA-binding motifs with a TF where binding is evaluated in extended natural promoter regions. The analysis may facilitate identifying TFs and their downstream genes and constructing gene networks through cis-elements. Abstract: Transcription factors (TFs) regulate gene expression at the transcriptional level by binding a specific DNA sequence. Thus, predicting the DNA-binding motifs of TFs is one of the most important areas in the functional analysis of TFs in the postgenomic era. Although many methods have been developed to address this challenge, many TFs still have unknown DNA-binding motifs. In this study, we designed RPBM with 40-bp probes and 20-bp of overlap, yielding 49 probes spanning the 1-kb upstream region before the translation start site of each gene in the entire genome. To confirm the efficiency of RPBM technology, we selected two previously studied TFs, OsWOX13 and OsSMF1, and an uncharacterized TF, OsWRKY34. We identified the ATTGATTG and CCACGTCA DNA-binding sequences of OsWOX13 and OsSMF1, respectively. In total, 635 and 932 putative feature genes were identified for OsWOX13 and OsSMF1, respectively.

Analysis of the Biochemical and Cellular Activities of Substrate Binding by the Molecular Chaperone Hsp110/Sse1

The Texas Medical Center Library, DigitalCommons@TMC. The University of Texas MD Anderson Cancer Center UTHealth Graduate School of Biomedical Sciences Dissertations and Theses (Open Access), 5-2017. Analysis of the Biochemical and Cellular Activities of Substrate Binding by the Molecular Chaperone Hsp110/Sse1. Veronica M. Garcia. Available at: https://digitalcommons.library.tmc.edu/utgsbs_dissertations. Part of the Biochemistry Commons, Medicine and Health Sciences Commons, Microbiology Commons, Molecular Biology Commons, and the Molecular Genetics Commons. Recommended Citation: Garcia, Veronica M., "Analysis of the Biochemical and Cellular Activities of Substrate Binding by the Molecular Chaperone Hsp110/Sse1" (2017). The University of Texas MD Anderson Cancer Center UTHealth Graduate School of Biomedical Sciences Dissertations and Theses (Open Access). 771. https://digitalcommons.library.tmc.edu/utgsbs_dissertations/771. This Dissertation (PhD) is brought to you for free and open access by The University of Texas MD Anderson Cancer Center UTHealth Graduate School of Biomedical Sciences at DigitalCommons@TMC. It has been accepted for inclusion in The University of Texas MD Anderson Cancer Center UTHealth Graduate School of Biomedical Sciences Dissertations and Theses (Open Access) by an authorized administrator of DigitalCommons@TMC. Analysis of the Biochemical and Cellular Activities of Substrate Binding by the Molecular Chaperone Hsp110/Sse1. By Veronica Margarita Garcia, B.S. Approved: Supervisory Professor Kevin A. Morano, Ph.D.; Catherine Denicourt, Ph.D.; Theresa M. Koehler, Ph.D.; Michael C.

Sensitive Single Molecule Protein Quantification and Protein Complex

Proteomics 2011, 11, 4731–4735. DOI 10.1002/pmic.201100361. Technical brief. Sensitive single-molecule protein quantification and protein complex detection in a microarray format. Lee A. Tessler and Robi D. Mitra, Department of Genetics, Center for Genome Sciences and Systems Biology, Washington University, St. Louis, MO, USA. Received: June 30, 2011. Accepted: October 3, 2011. Single-molecule protein analysis provides sensitive protein quantitation with a digital read-out and is promising for studying biological systems and detecting biomarkers clinically. However, current single-molecule platforms rely on the quantification of one protein at a time. Conventional antibody microarrays are scalable to detect many proteins simultaneously, but they rely on less sensitive and less quantitative quantification by the ensemble averaging of fluorescent molecules. Here, we demonstrate a single-molecule protein assay in a microarray format enabled by an ultra-low background surface and single-molecule imaging. The digital read-out provides a highly sensitive, low femtomolar limit of detection and four orders of magnitude of dynamic range through the use of hybrid digital-analog quantification. From crude cell lysate, we measured levels of p53 and MDM2 in parallel, proving the concept of a digital antibody microarray for use in proteomic profiling. We also applied the single-molecule microarray to detect the p53–MDM2 protein complex in cell lysate. Our study is promising for development and application of single-molecule protein methods because it represents a technological bridge between single-plex and highly multiplex studies. Keywords: Antibody microarray / Sandwich immunoassay / Single-molecule detection / Technology / Total internal reflection fluorescence. Single-molecule protein detection has the potential to benefit systems biology and biomarker studies by providing … fluorescence (TIRF) imaging has provided a platform for single-molecule quantification on planar surfaces.

Beta-Barrel Scaffold of Fluorescent Proteins: Folding, Stability and Role in Chromophore Formation

Chapter Four. Beta-Barrel Scaffold of Fluorescent Proteins: Folding, Stability and Role in Chromophore Formation. Olesya V. Stepanenko*, Olga V. Stepanenko*, Irina M. Kuznetsova*, Vladislav V. Verkhusha**, Konstantin K. Turoverov*. *Institute of Cytology of Russian Academy of Sciences, St. Petersburg, Russia. **Department of Anatomy and Structural Biology and Gruss-Lipper Biophotonics Center, Albert Einstein College of Medicine, Bronx, NY, USA. Corresponding authors: Vladislav V. Verkhusha and Konstantin K. Turoverov. Contents: 1. Introduction. 2. Chromophore Formation and Transformations in Fluorescent Proteins (2.1. Chromophore Structures Found in Fluorescent Proteins; 2.2. Autocatalytic and Light-Induced Chromophore Formation and Transformations; 2.3. Interaction of Chromophore with Protein Matrix of β-Barrel). 3. Structure of Fluorescent Proteins and Their Unique Properties (3.1. Aequorea victoria GFP and its Genetically Engineered Variants; 3.2. Fluorescent Proteins from Other Organisms). 4. Pioneering Studies of Fluorescent Protein Stability (4.1. Fundamental Principles of Globular Protein Folding; 4.2. Comparative Studies of Green and Red Fluorescent Proteins). 5. Unfolding–Refolding of Fluorescent Proteins (5.1. Intermediate States on Pathway of Fluorescent Protein Unfolding; 5.2. Hysteresis in Unfolding and Refolding of Fluorescent Proteins; 5.3. Circular Permutation and Reassembly of Split-GFP; 5.4. Co-translational Folding of Fluorescent Proteins). 6. Concluding Remarks. Acknowledgments. References. Abstract: This review focuses on the current view of the interaction between the β-barrel scaffold of fluorescent proteins and their unique chromophore located in the internal helix.

Reader Biologische Chemie

Course Manual, Biological Chemistry (Cursushandleiding Biologische Chemie), October 2009. Contents: Preface; 0. Basic skills; 1. Spectrophotometry; 2. Enzyme kinetics; 3. Centrifugation; 4. Gel filtration chromatography; 5. Protein determination; 6. Gel electrophoresis; Safety regulations. Colophon: Version 20090917. Copyright © 2008 – 2009. Text: L. van Zon, with contributions from many staff members of the Structural Biology section of the Faculty of Earth and Life Sciences, Vrije Universiteit Amsterdam. Preface: About this practical course. Whichever direction you choose within the life sciences, sooner or later you will have to deal with laboratory work, or with results from laboratory work. This is obvious if you go on to do independent scientific research at a university or a company. But physicians, for example, also deal directly or indirectly with doctors' or hospital laboratories, and must at least be able to interpret the results of a biochemical analysis. The same holds for managers in the pharmaceutical industry, and for everyone else who deals, indirectly or directly, with the analysis of processes in the human body or other living systems. Even behind the modern online databases of genes and proteins lies a large amount of laboratory research. The fact that more and more standard analyses are being automated does not change the fact that they are still based on only a handful of proven methods and principles. In this practical course we will cover six of the more important methods used in practice for biochemical research. These methods can be roughly divided into analysis methods and separation methods. In biology, too, "to measure is to know", so analysis methods are used to take measurements on cells, molecules and biological systems.

A Comparison of Biuret, Lowry, and Bradford Methods for Measuring

MENDELNET 2015. A Comparison of Biuret, Lowry and Bradford Methods for Measuring the Egg's Proteins. Vrsanska Martina (Department of Chemistry and Biochemistry), Kumbar Vojtech (Department of Technology and Automobile Transport), Mendel University in Brno, Zemedelska 1, 613 00 Brno, Czech Republic. Abstract: Quantitation of the total protein content in a sample is a critical step in protein analysis. Molecular UV-Vis absorption spectroscopy is very efficient in quantitative analysis such as protein quantitation and has extensive applications in chemical and biochemical laboratories, medicine and the food industry. Traditional spectroscopic methods are cheap, easy to use and the most common way to quantitate protein concentrations. This study compares the Biuret, Lowry and Bradford methods for measuring hen albumen and egg yolk as protein samples. These methods are commonly used for the determination of proteins. The Biuret test uses Biuret reagent. The Lowry assay uses four reagents: reagent A, reagent B, reagent C and reagent D. The Bradford method uses Coomassie Brilliant Blue G-250 as its reagent. The absorbance was measured at a wavelength of 750 nm for the Lowry assay, 540 nm for the Biuret assay and 595 nm for the Bradford assay. The lowest protein content was found with the Biuret method: 0.706 mg·ml⁻¹ in albumen and 0.996 mg·ml⁻¹ in egg yolk. According to the Lowry assay, the protein content was 0.908 mg·ml⁻¹ in albumen and 1.003 mg·ml⁻¹ in egg yolk. The highest protein content was found with the Bradford method: 1.125 mg·ml⁻¹ in albumen and 1.369 mg·ml⁻¹ in egg yolk.

Methods of Protein Analysis and Variation in Protein Results

Methods of Protein Analysis and Variation in Protein Results. C. E. McDonald. Premiums on high-protein hard red spring wheat have created much interest in the protein test. The Kjeldahl method, a chemical procedure for nitrogen, is still the basic method used for protein analysis. The Kjeldahl method, the Udy dye binding method and the new infrared reflectance method for determining protein are described in this paper. In the analysis of wheat protein by the Kjeldahl method, the maximum amount of variance in results expected between different laboratories and within the same laboratory is larger than the increments of protein on which price premiums have been paid. The causes of protein variance and ways of reducing the variance are discussed. In the past year, the price of hard red spring wheat has been substantially influenced by the protein content. Price premiums have been paid on increased increments of 0.1 percentage points of protein. The price expected for wheat on the basis of protein as determined by a North Dakota laboratory sometimes is changed because the protein found later at Minneapolis or Duluth differs more than the protein increment required for premium. With barley, an unexpected discount may be applied against the seller because the protein is found to be over the discount protein level

Table I. Basis of Kjeldahl Protein Procedure. 1. Protein made of amino acid building blocks. 2. Amino acids made of carbon, hydrogen, oxygen, sulfur, nitrogen. 3. Protein calculated from measurement of nitrogen. 4. Per cent nitrogen × 5.7 = per cent crude protein of wheat. Per cent nitrogen × 6.25 = per cent protein of other grains.

Protein Blotting Handbook Tips and Tricks

Protein Blotting Handbook: Tips and Tricks. The life science business of Merck KGaA, Darmstadt, Germany operates as MilliporeSigma in the U.S. and Canada. About the Sixth Edition: With the publication of the sixth edition of the Protein Blotting Handbook, MilliporeSigma continues to keep researchers up to date on innovations in protein detection. We've added more comprehensive guidance on optimizing antibody concentrations and reducing background, along with expanded data and protocols for fluorescence detection. This handbook represents the collective experience of our application scientists, who are actively engaged in advancing the science of protein blotting and detection. It also includes many of the most common recommendations provided by our technical service specialists who are contacted by scientists worldwide for assistance. Better membranes, better blots: MilliporeSigma has been a leading supplier of transfer membranes for nearly four decades. E.M. Southern used these membranes to develop the first nucleic acid transfer from an agarose gel in 1975 (1). The first 0.45 µm PVDF substrate for Western blotting, Immobilon®-P membrane, was introduced in 1985, and the first 0.2 µm PVDF substrate for protein blotting and sequencing, Immobilon®-PSQ membrane, was introduced in 1988. In addition to Immobilon® membranes and reagents, MilliporeSigma provides a wide selection of other tools for protein research, including gentle protein extraction kits, rapid protein isolation with PureProteome™ magnetic beads, and fast, effective concentration with Amicon® Ultra centrifugal filters. Where to Get Additional Information: If you have questions or need assistance, please contact a MilliporeSigma technical service specialist, or pose your question online at SigmaAldrich.com/techservice. You'll also find answers to frequently asked questions (FAQs) concerning Western blotting and other related methods at SigmaAldrich.com/WesternHelp. 1.