WRL Research Report 94/2. ATOM: A System for Building Customized Program Analysis Tools

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


"Icons of Survival: Metahumanism as Planetary Defense." Nerd Ecology: Defending the Earth with Unpopular Culture

Lioi, Anthony. "Icons of Survival: Metahumanism as Planetary Defense." Nerd Ecology: Defending the Earth with Unpopular Culture. London: Bloomsbury Academic, 2016. 169–196. Environmental Cultures. Bloomsbury Collections. Web. 25 Sep. 2021. <http://dx.doi.org/10.5040/9781474219730.ch-007>. Downloaded from Bloomsbury Collections, www.bloomsburycollections.com, 25 September 2021, 20:32 UTC. Copyright © Anthony Lioi 2016. You may share this work for non-commercial purposes only, provided you give attribution to the copyright holder and the publisher, and provide a link to the Creative Commons licence.

6 Icons of Survival: Metahumanism as Planetary Defense

In which I argue that superhero comics, the most maligned of nerd genres, theorize the transformation of ethics and politics necessary to the project of planetary defense. The figure of the “metahuman,” the human with superpowers and purpose, embodies the transfigured nerd whose defects (intellect, swarm-behavior, abnormality, flux, and love of machines) become virtues of survival in the twenty-first century. The conflict among capitalism, fascism, and communism, which drove the Cold War and its immediate aftermath, also drove the Golden and Silver Ages of Comics. In the era of planetary emergency, these forces reconfigure themselves as different versions of world-destruction. The metahuman also signifies going “beyond” these economic and political systems into orders that preserve democracy without destroying the biosphere. Therefore, the styles of metahuman figuration represent an appeal to tradition and a technique of transformation. I call these strategies the iconic style and metamorphic style. The iconic style, more typical of DC Comics, makes the hero an icon of virtue, and metahuman powers manifest as visible signs: the “S” of Superman, the tiara and golden lasso of Wonder Woman. -

COMIC BOOKS AS AMERICAN PROPAGANDA DURING WORLD WAR II a Master's Thesis Presented to College of Arts & Sciences Departmen

COMIC BOOKS AS AMERICAN PROPAGANDA DURING WORLD WAR II. A Master’s Thesis Presented to College of Arts & Sciences, Department of Communications and Humanities, in Partial Fulfillment of the Requirements for the Master of Science Degree. SUNY Polytechnic Institute. By David Dellecese, May 2018. © 2018 David Dellecese.

Approval Page. SUNY Polytechnic Institute, Department of Communications and Humanities, Information Design and Technology MS Program. Approved and recommended for acceptance as a thesis in partial fulfillment of the requirements for the degree of Master of Science in Information Design + Technology. Kathryn Stam, Thesis Advisor; Ryan Lizardi, Second Reader; Russell Kahn, Instructor.

ABSTRACT. American comic books were a relatively new but quite popular form of media during the years of World War II. Amid a limited media landscape that otherwise consisted of radio, film, newspapers, and magazines, comics served as a useful tool in engaging readers of all ages to get behind the war effort. The aim of this research was to examine a sampling of messages put forth by comic book publishers before and after American involvement in World War II in the form of fictional comic book stories. In this research, it is found that comic book storytelling/messaging reflected a theme of American isolation prior to U.S. involvement in the war, but changed its tone to become a strong proponent for American involvement after the bombing of Pearl Harbor. This came in numerous forms, from vilification of America’s enemies in the stories of super heroics, the use of scrap, rubber, paper, or bond drives back on the homefront to provide resources on the frontlines, to a general sense of patriotism. -

Superheroes Trivia Quiz IV

SUPERHEROES TRIVIA QUIZ IV ( www.TriviaChamp.com )
1> Which superhero carries an indestructible shield? a. The Green Lantern b. Captain America c. Captain Flag d. The Red Tornado
2> Which character is often romantically paired with Batman? a. The Black Canary b. Miss America c. Catwoman d. Hawkgirl
3> Which superhero started out as a petty criminal? a. The Atom b. Spiderman c. The Blue Knight d. Plastic Man
4> Which superhero's alter ego is Raymond Palmer? a. The Atom b. Hawkman c. The Green Arrow d. The Tornado
5> Which superhero is associated with the phrase, "With great power there must also come great responsibility"? a. Spiderman b. Hell Boy c. Batman d. The Hulk
6> Which superhero is nicknamed the "Scarlet Speedster"? a. The Flash b. Speedball c. Stardust d. The Thing
7> Which superhero is dubbed the "Man without Fear"? a. Daredevil b. The Flash c. Wolverine d. Green Lantern
8> Which superhero is the medical doctor for the X-men? a. Storm b. Shadowcat c. Ice Man d. The Beast
9> Who is the father of Batgirl (Barbara Gordon)? a. The Mayor b. The Governor c. Batman's Butler d. The Chief of Police
10> Which superhero gains his power from a ring? a. The Green Lantern b. Storm c. Dazzler d. The Hulk
11> Which superhero can manipulate the weather? a. The Tornado b. The Atom c. Storm d. The Thing
12> Which island does Wonder Woman call home? a. Emerald Island b. Paradise Island c. Amazonia d. Eden Isle
13> Where does the Green Arrow operate? a. -

Archives - Search

BIDDING CLOSED! Over 150 Silver Age Comic Books by DC, Marvel, Gold Key, Dell, More! North (167 records)
Lima, OH - WEDNESDAY, November 25th, 2020. Begins closing at 6:30pm at 2 items per minute.

Item / Description / Price
500 / 1966 DC Batman #183 Aug. "Holy Emergency" / 10.00
501 / 1966 DC Batman #186 Nov. "The Jokers Original Robberies" / 13.00
502 / 1966 DC Batman #188 Dec. "The Ten Best Dressed Corpses in Gotham City" / 7.50
503 / 1966 DC Batman #190 Mar. "The Penguin and his Weapon-Umbrella Army against Batman and Robin" / 10.00
504 / 1967 DC Batman #192 June. "The Crystal Ball that Betrayed Batman" / 4.50
505 / 1967 DC Batman #195 Sept. "The Spark-Spangled See-Through Man" / 4.50
506 / 1967 DC Batman #197 Dec. "Catwoman sets her Claws for Batman" / 37.00
507 / 1967 DC Batman #193 Aug. 80pg Giant G37 "6 Suspense Filled Thrillers" / 8.00
508 / 1967 DC Batman #198 Feb. 80pg Giant G43 "Remember? This is the Moment that Changed My Life!" / 8.50
509 / 1967 Marvel Comics Group Fantastic Four #69 Dec. "By Ben Betrayed!" / 6.50
510 / 1967 Marvel Comics Group Fantastic Four #66 Dec. "What Lurks Behind the Beehive?" / 41.50
511 / 1967 Marvel Comics Group The Mighty Thor #143 Aug. "Balder the Brave!" / 6.50
512 / 1967 Marvel Comics Group The Mighty Thor #144 Sept. "This Battleground Earth!" / 5.50
513 / 1967 Marvel Comics Group The Mighty Thor #146 Nov. "...If the Thunder Be Gone!" / 5.50
514 / 1969 Marvel Comics Group The Mighty Thor #166 July. -

Xero Comics 3

XERO continues to appall an already reeling fandom at the behest of Pat & Dick Lupoff, 21J E 73rd Street, New York 21, New York. Do you want to be appalled? Copies are available for contributions, trades, or letters of comment. No sales, no subs. No, Virginia, the title was not changed. Mimeo by QWERTYUIOPress, as usual.

A few comments about lay ^eam's article which may or may not be helpful. I've had similar experiences with readers joining fan clubs. While at Penn State, I was president of the SF Society there, founded by James F. Cooper Jr, and continued by me after he gafiated. The first meeting held each year packed them in; the first meeting of all brought in 50 people, enough to get us our charter from the University. No subsequent meeting ever brought in more than half that, except when we held an auction. Of those people, I could count on maybe five people to show up regularly, meeting after meeting, just to sit and talk. If we got a program together, we could double or triple that. One of the most popular was the program when we invited a Naval ROTC captain to talk about atomic submarines and their place in future wars, using Frank Herbert's novel Dragon in the Sea (or Under Pressure or 21st Century Sub, depending upon where you read it) as a starting point. -

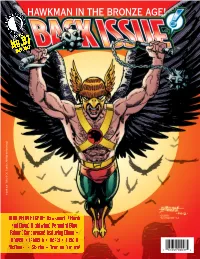

Hawkman in the Bronze Age!

HAWKMAN IN THE BRONZE AGE! July 2017 No.97 ™ $8.95 Hawkman TM & © DC Comics. All Rights Reserved. BIRD PEOPLE ISSUE: Hawkworld! Hawk and Dove! Nightwing! Penguin! Blue Falcon! Condorman! featuring Dixon • Howell • Isabella • Kesel • Liefeld McDaniel • Starlin • Truman & more! 1 82658 00097 4 Volume 1, Number 97 July 2017 EDITOR-IN-CHIEF Michael Eury PUBLISHER John Morrow Comics’ Bronze Age and Beyond! DESIGNER Rich Fowlks COVER ARTIST George Pérez (Commissioned illustration from the collection of Aric Shapiro.) COVER COLORIST Glenn Whitmore COVER DESIGNER Michael Kronenberg PROOFREADER Rob Smentek SPECIAL THANKS Alter Ego Karl Kesel Jim Amash Rob Liefeld Mike Baron Tom Lyle Alan Brennert Andy Mangels Marc Buxton Scott McDaniel John Byrne Dan Mishkin BACK SEAT DRIVER: Editorial by Michael Eury ............................2 Oswald Cobblepot Graham Nolan Greg Crosby Dennis O’Neil FLASHBACK: Hawkman in the Bronze Age ...............................3 DC Comics John Ostrander Joel Davidson George Pérez From guest-shots to a Shadow War, the Winged Wonder’s ’70s and ’80s appearances Teresa R. Davidson Todd Reis Chuck Dixon Bob Rozakis ONE-HIT WONDERS: DC Comics Presents #37: Hawkgirl’s First Solo Flight .......21 Justin Francoeur Brenda Rubin A gander at the Superman/Hawkgirl team-up by Jim Starlin and Roy Thomas (DCinthe80s.com) Bart Sears José Luís García-López Aric Shapiro Hawkman TM & © DC Comics. Joe Giella Steve Skeates PRO2PRO ROUNDTABLE: Exploring Hawkworld ...........................23 Mike Gold Anthony Snyder The post-Crisis version of Hawkman, with Timothy Truman, Mike Gold, John Ostrander, and Grand Comics Jim Starlin Graham Nolan Database Bryan D. Stroud Alan Grant Roy Thomas Robert Greenberger Steven Thompson BRING ON THE BAD GUYS: The Penguin, Gotham’s Gentleman of Crime .......31 Mike Grell Titans Tower Numerous creators survey the history of the Man of a Thousand Umbrellas Greg Guler (titanstower.com) Jack C. -

2News Summer 05 Catalog

Roy Thomas’ All-Star-Struck SEE? WE TOLD YOU $ Comics Fanzine THERE’D BE ANOTHER 8.95 ISSUE STARRING THE In the USA JUSTICEJUSTICE SOCIETYSOCIETY TM OFOF AMERICAAMERICA No.121 November 2013 JOHNNY THUNDER! THE ATOM! JUSTICE SOCIETY! JOHNWRITER/CO-CREATOR LENB. WENTWORTH! SANSONE! OF INKER/CO-CREATOR OF BERNARD SACHS! INKER OF 1948-1951 1 0 SPECIAL! Justice Society of America TM & © DC Comics. 1 82658 27763 5 Vol. 3, No. 121 / November 2013 Editor Roy Thomas Associate Editors Bill Schelly Jim Amash Design & Layout Christopher Day Consulting Editor John Morrow FCA Editor P.C. Hamerlinck Comic Crypt Editor Michael T. Gilbert Editorial Honor Roll Jerry G. Bails (founder) Ronn Foss, Biljo White Mike Friedrich Proofreaders Rob Smentek Contents William J. Dowlding Cover Artists Writer/Editorial: “…And All The Stars Looked Down”. 2 Shane Foley John B. Wentworth—All-American Thunderbolt . 3 (after Irwin Hasen) Cover Colorist Daughter Rebecca Wentworth tells Richard Arndt about the creator of “Johnny Thunder,” “Sargon the Sorcerer,” & “The Whip.” Tom Ziuko Special A/E Interlude: “The Will Of William Wilson” . 18 With Special Thanks to: Splitting The Atom—Three Ways! . 21 Heidi Amash Mark Lewis Richard J. Arndt Jim Ludwig Mrs. Emily Sokoloff & Maggie Sansone talk to Shaun Clancy about Leonard Sansone— Bob Bailey Ed Malsberg inker/co-creator of “The Atom”—and about co-creators Ben Flinton and Bill O’Connor. Rod Beck Doug Martin “The Life Of A Freelancer… Is Always Feast Or Famine” . 40 Judy Swayze Bruce Mason Blackman Mike Lynch Bernice Sachs-Smollet to Richard Arndt about her late husband, JSA/JLA artist Bernard Sachs. -

Jlunlimitedchecklist

WWW.HEROCLIXIN.COM
001 _____ SUPERMAN, 100 C
002 _____ GREEN LANTERN, 65 C
003 _____ THE FLASH, 30 C
004 _____ DR. FATE, 65 - 10 C
005a ____ BATMAN, 70 C
005b ____ BATMAN, 40 P
006 _____ CADMUS LABS SCIENTIST, 15 C

TEAM-UP CARDS (1 of 2): SUPERMAN
001.01 _____ TEAM UP: GREEN LANTERN
001.02 _____ TEAM UP: BATMAN
001.03 _____ TEAM UP: WONDER WOMAN
001.04 _____ TEAM UP: THE FLASH

TEAM-UP CARDS (2 of 2): GREEN ARROW
026.01 _____ TEAM UP: SUPERMAN
026.02 _____ TEAM UP: GREEN LANTERN
026.03 _____ TEAM UP: BATMAN
026.04 _____ TEAM UP: WONDER WOMAN

007 _____ S.T.A.R. -

Activity Kit

Activity Kit
Copyright (c) 2011 DC Comics. All related characters and elements are trademarks of and (c) DC Comics. (s11)

ACTIVITY KIT WORD SCRAMBLE
Help Batman solve a mystery by unscrambling the following words!

Nermusap __ __ __ __ __ __ __ __
Barrzio __ __ __ __ __ __ __
Matbna __ __ __ __ __ __
Eth Kojre __ __ __ __ __ __ __ __
Redwon Waomn __ __ __ __ __ __ __ __ __ __ __
Wactnamo __ __ __ __ __ __ __ __
Raincaib __ __ __ __ __ __ __ __
Neerg Nerltan __ __ __ __ __ __ __ __ __ __ __ __
Hheecat __ __ __ __ __ __ __
Ssrtonie __ __ __ __ __ __ __ __
Eht Shafl __ __ __ __ __ __ __ __
Slirev Naws __ __ __ __ __ __ __ __ __ __
Quamnaa __ __ __ __ __ __ __
Hte Ylaid Neplta __ __ __ __ __ __ __ __ __ __ __ __ __ __
Slio Anle __ __ __ __ __ __ __ __
Msliporote __ __ __ __ __ __ __ __ __ __
Nibor __ __ __ __ __
Maghto Ycti __ __ __ __ __ __ __ __ __ __
Ptknory __ __ __ __ __ __ __
Zamona __ __ __ __ __ __
Vacbtae __ __ __ __ __ __ __
Repow grin __ __ __ __ __ __ __ __ __
Elx Ultroh __ __ __ __ __ __ __ __ __ -

Kinetic Energy

Relative Motion

Red Car sees Blue Car travel at U. Blue Car throws Ball at V'. What is V, the speed of the Ball as seen by Red Car? (Newton's Principia, 1687.)

Galilean Relativity: velocities add, $V = U + V'$. With $U = 20$ m/s and $V' = 25$ m/s, $V = 45$ m/s.

The Galilean Transformations

Consider two reference frames S and S'. The coordinate axes in S are x, y, z and those in S' are x', y', z'. Reference frame S' moves with velocity v relative to S along the x-axis. Equivalently, S moves with velocity −v relative to S'. The Galilean transformations of position are $x' = x - vt$, $y' = y$, $z' = z$. The Galilean transformations of velocity are $u'_x = u_x - v$, $u'_y = u_y$, $u'_z = u_z$, where $u$ and $u'$ denote an object's velocity as measured in S and S'.

What if instead of a ball, it is a light wave? Do velocities add according to Galilean Relativity? Clocks slow down and rulers shrink in order to keep the speed of light the same for all observers! Time is Relative! Space is Relative! Only the SPEED OF LIGHT is Absolute!

James Clerk Maxwell, 1860s: Light is a wave. The medium is the Ether. $c = 1/\sqrt{\mu_0 \varepsilon_0} = 3.0 \times 10^8$ m/s.

Measure the Speed of the Ether Wind: Light should travel faster with the wind and slower against it during the year.

Michelson-Morley Experiment, 1887: The speed of light is independent of the motion and is always c. The speed of the Ether wind is zero.

Lorentz Contraction: The apparatus shrinks by a factor $\sqrt{1 - v^2/c^2}$.

On the Electrodynamics of Moving Bodies, 1905. Einstein's Principle of Relativity:
• Maxwell's equations are true in all inertial reference frames.
• Maxwell's equations predict that electromagnetic waves, including light, travel at speed $c = 3.00 \times 10^8$ m/s = 300,000 km/s = 300 m/µs. -
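The velocity-addition rule quoted in this excerpt follows from the Galilean position transformation in one differentiation step. The lines below are a sketch in standard textbook notation, assuming (as the excerpt states) that S' moves with velocity v along the x-axis of S and, additionally, that both frames share a common time t' = t. The symbols $u_x$ and $u'_x$ for the ball's velocity in each frame are introduced here for illustration.

```latex
\begin{align*}
x' &= x - vt, \qquad t' = t \\
u'_x &= \frac{dx'}{dt'} = \frac{dx}{dt} - v = u_x - v \\
u_x &= u'_x + v \\
V &= V' + U = 25\ \text{m/s} + 20\ \text{m/s} = 45\ \text{m/s}
\end{align*}
```

In the last line, Red Car's frame plays the role of S and Blue Car's frame the role of S', so that $v = U$, $u'_x = V'$ and $u_x = V$.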

File02.Introduction.Qxp Layout 1

Rebooting the Academy: Why Universities Need to Finally Start Taking Comic Books Seriously. Gian Pagnucci and Alex Romagnoli.

Has the age of the comic book dawned at last? Comic book superheroes dominate movie theatres across the land. The Walking Dead scares millions on television, while others tune in to Gotham. Clothing stores are filled with t-shirts bearing Superman’s “S” and the Bat symbol. And bookstores now hold large graphic novel sections, while comic book style novels like Captain Underpants (Pilkey, 1997) and The Diary of a Wimpy Kid (Kinney, 2007) are best sellers. Yet for all the excitement that comic books and comic book based ideas have generated in popular culture, there still remains skepticism about the scholarly value of studying comic books and graphic novels. If a person searches hard enough, it is possible to find a course about comic books here or there or a graphic novel on a few required course reading lists. A few departments have comics studies scholars laboring away in them, and there are more books and articles about comic books and graphic novels now being published by academic presses. Yet job ads asking for knowledge of the comics studies field are rare, and the most prestigious academic journals contain few articles that discuss comic books and graphic novels. This means comics studies scholars are forever having to defend the genre they find so compelling. Every comics studies scholar knows how frustrating it is to always have to face the eyebrow raising, snickering, questioning, and “Ohhh, you’re studying those things” comments from colleagues, administrators, and students. -

Justice Society America!

Roy Thomas’ Star-Bedecked $ Comics Fanzine JUST WHEN YOU THOUGHT 8.95 YOU KNEW EVERYTHING THERE In the USA WAS TO KNOW ABOUT THE No.109 May JUSTICE 2012 SOCIETY ofAMERICA!™ 5 0 5 3 6 7 7 2 8 5 Art © DC Comics; Justice Society of America TM & © 2012 DC Comics. 6 Plus: SPECTRE & HOUR-MAN 2 8 Co-Creator 1 BERNARD BAILY Vol. 3, No. 109 / April 2012 Editor Roy Thomas Associate Editors Bill Schelly Jim Amash Design & Layout Jon B. Cooke Consulting Editor John Morrow FCA Editor AST! P.C. Hamerlinck AT L Comic Crypt Editor ALL IN Michael T. Gilbert FOR Editorial Honor Roll COLOR $8.95! Jerry G. Bails (founder) Ronn Foss, Biljo White Mike Friedrich Proofreader Rob Smentek Cover Artist Contents George Pérez Writer/Editorial: An All-Star Cast—Of Mind . 2 Cover Colorist Bernard Baily: The Early Years . 3 Tom Ziuko With Special Thanks to: Ken Quattro examines the career of the artist who co-created The Spectre and Hour-Man. “Fairytales Can Come True…” . 17 Rob Allen Roger Hill The Roy Thomas/Michael Bair 1980s JSA retro-series that didn’t quite happen! Heidi Amash Allan Holtz Dave Armstrong Carmine Infantino What If All-Star Comics Had Sported A Variant Line-up? . 25 Amy Baily William B. Jones, Jr. Eugene Baily Jim Kealy Hurricane Heeran imagines different 1940s JSA memberships—and rivals! Jill Baily Kirk Kimball “Will” Power . 33 Regina Baily Paul Levitz Stephen Baily Mark Lewis Pages from that legendary “lost” Golden Age JSA epic—in color for the first time ever! Michael Bair Bob Lubbers “I Absolutely Love What I’m Doing!” .