Supplementary Logic Notes 1 Propositional Logic

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Classifying Material Implications Over Minimal Logic

Classifying Material Implications over Minimal Logic Hannes Diener and Maarten McKubre-Jordens March 28, 2018 Abstract The so-called paradoxes of material implication have motivated the development of many non- classical logics over the years [2–5, 11]. In this note, we investigate some of these paradoxes and classify them, over minimal logic. We provide proofs of equivalence and semantic models separating the paradoxes where appropriate. A number of equivalent groups arise, all of which collapse with unrestricted use of double negation elimination. Interestingly, the principle ex falso quodlibet, and several weaker principles, turn out to be distinguishable, giving perhaps supporting motivation for adopting minimal logic as the ambient logic for reasoning in the possible presence of inconsistency. Keywords: reverse mathematics; minimal logic; ex falso quodlibet; implication; paraconsistent logic; Peirce’s principle. 1 Introduction The project of constructive reverse mathematics [6] has given rise to a wide literature where various the- orems of mathematics and principles of logic have been classified over intuitionistic logic. What is less well-known is that the subtle difference that arises when the principle of explosion, ex falso quodlibet, is dropped from intuitionistic logic (thus giving (Johansson’s) minimal logic) enables the distinction of many more principles. The focus of the present paper are a range of principles known collectively (but not exhaustively) as the paradoxes of material implication; paradoxes because they illustrate that the usual interpretation of formal statements of the form “. → . .” as informal statements of the form “if. then. ” produces counter-intuitive results. Some of these principles were hinted at in [9]. Here we present a carefully worked-out chart, classifying a number of such principles over minimal logic. -

Lecture 1: Informal Logic

Lecture 1: Informal Logic 1 Sentential/Propositional Logic Definition 1.1 (Statement). A statement is anything we can say, write, or otherwise express that can be either true or false. Remark 1. Veracity of a statement doesn't depend on one's ability to verify it's truth or falsity. Example 1.2. The expression \Venkatesh is twenty years old" is a statement, because it is either true or false. We will be making following two assumptions when dealing with statements. Assumptions 1.3 (Bivalence). Every statement is either true or false. Assumptions 1.4. No statement is both true and false. One of the consequences of the bivalence assumption is that if a statement is not true, then it must be false. Hence, to prove that something is true, it would suffice to prove that it is not false. 1.1 Combination of Statements In this subsection, we would look at five basic ways of combining two statements P and Q to form new statements. We can form more complicated compound statements by using combinations of these basic operations. Definition 1.5 (Conjunction). We define conjunction of statements P and Q to be the statement that is true if both P and Q are true, and is false otherwise. Conjunction of of P and Q is denoted P ^ Q, and read \P and Q." Remark 2. Logical and is different from it's colloquial usages for therefore or for relations. 1 Definition 1.6 (Disjunction). We define disjunction of statements P and Q to be the statement that is true if either P is true or Q is true or both are true, and is false otherwise. -

Section 1.4: Predicate Logic

Section 1.4: Predicate Logic January 22, 2021 Abstract We now consider the logic associated with predicate wffs, including a new set of derivation rules for demonstrating validity (the analogue of tautology in the propositional calculus) – that is, for proving theorems! 1 Derivation rules • First of all, all the rules of propositional logic still hold. Whew! Propositional wffs are simply boring, variable-less predicate wffs. • Our author suggests the following “general plan of attack”: – strip off the quantifiers – work with the separate wffs – insert quantifiers as necessary Now, how may we legitimately do so? Consider the classic syllo- gism: a. (All) Humans are mortal. b. Socrates is human. Therefore Socrates is mortal. The way we reason is that the rule “Humans are mortal” applies to the specific example “Socrates”; hence this Socrates is mortal. We might write this as a. (∀x)(H(x) → M(x)) b. H(s) Therefore M(s). It seems so obvious! But how do we justify that in a proof se- quence? • New rules for predicate logic: in the following, you should un- derstand by the symbol x in P (x) an expression with free variable x, possibly containing other (quantified) variables: e.g. P (x) ≡ (∀y)(∃z)Q(x,y,z) (1) – Universal Instantiation: from (∀x)P (x) deduce P (t). Caveat: t must not already appear as a variable in the ex- pression for P (x): in the equation above, (1), it would not do to deduce P (y) or P (z), as those variables appear in the expression (in a quantified fashion) already. -

On Basic Probability Logic Inequalities †

mathematics Article On Basic Probability Logic Inequalities † Marija Boriˇci´cJoksimovi´c Faculty of Organizational Sciences, University of Belgrade, Jove Ili´ca154, 11000 Belgrade, Serbia; [email protected] † The conclusions given in this paper were partially presented at the European Summer Meetings of the Association for Symbolic Logic, Logic Colloquium 2012, held in Manchester on 12–18 July 2012. Abstract: We give some simple examples of applying some of the well-known elementary probability theory inequalities and properties in the field of logical argumentation. A probabilistic version of the hypothetical syllogism inference rule is as follows: if propositions A, B, C, A ! B, and B ! C have probabilities a, b, c, r, and s, respectively, then for probability p of A ! C, we have f (a, b, c, r, s) ≤ p ≤ g(a, b, c, r, s), for some functions f and g of given parameters. In this paper, after a short overview of known rules related to conjunction and disjunction, we proposed some probabilized forms of the hypothetical syllogism inference rule, with the best possible bounds for the probability of conclusion, covering simultaneously the probabilistic versions of both modus ponens and modus tollens rules, as already considered by Suppes, Hailperin, and Wagner. Keywords: inequality; probability logic; inference rule MSC: 03B48; 03B05; 60E15; 26D20; 60A05 1. Introduction The main part of probabilization of logical inference rules is defining the correspond- Citation: Boriˇci´cJoksimovi´c,M. On ing best possible bounds for probabilities of propositions. Some of them, connected with Basic Probability Logic Inequalities. conjunction and disjunction, can be obtained immediately from the well-known Boole’s Mathematics 2021, 9, 1409. -

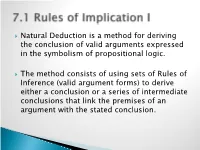

7.1 Rules of Implication I

Natural Deduction is a method for deriving the conclusion of valid arguments expressed in the symbolism of propositional logic. The method consists of using sets of Rules of Inference (valid argument forms) to derive either a conclusion or a series of intermediate conclusions that link the premises of an argument with the stated conclusion. The First Four Rules of Inference: ◦ Modus Ponens (MP): p q p q ◦ Modus Tollens (MT): p q ~q ~p ◦ Pure Hypothetical Syllogism (HS): p q q r p r ◦ Disjunctive Syllogism (DS): p v q ~p q Common strategies for constructing a proof involving the first four rules: ◦ Always begin by attempting to find the conclusion in the premises. If the conclusion is not present in its entirely in the premises, look at the main operator of the conclusion. This will provide a clue as to how the conclusion should be derived. ◦ If the conclusion contains a letter that appears in the consequent of a conditional statement in the premises, consider obtaining that letter via modus ponens. ◦ If the conclusion contains a negated letter and that letter appears in the antecedent of a conditional statement in the premises, consider obtaining the negated letter via modus tollens. ◦ If the conclusion is a conditional statement, consider obtaining it via pure hypothetical syllogism. ◦ If the conclusion contains a letter that appears in a disjunctive statement in the premises, consider obtaining that letter via disjunctive syllogism. Four Additional Rules of Inference: ◦ Constructive Dilemma (CD): (p q) • (r s) p v r q v s ◦ Simplification (Simp): p • q p ◦ Conjunction (Conj): p q p • q ◦ Addition (Add): p p v q Common Misapplications Common strategies involving the additional rules of inference: ◦ If the conclusion contains a letter that appears in a conjunctive statement in the premises, consider obtaining that letter via simplification. -

Propositional Logic (PDF)

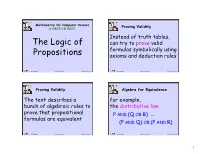

Mathematics for Computer Science Proving Validity 6.042J/18.062J Instead of truth tables, The Logic of can try to prove valid formulas symbolically using Propositions axioms and deduction rules Albert R Meyer February 14, 2014 propositional logic.1 Albert R Meyer February 14, 2014 propositional logic.2 Proving Validity Algebra for Equivalence The text describes a for example, bunch of algebraic rules to the distributive law prove that propositional P AND (Q OR R) ≡ formulas are equivalent (P AND Q) OR (P AND R) Albert R Meyer February 14, 2014 propositional logic.3 Albert R Meyer February 14, 2014 propositional logic.4 1 Algebra for Equivalence Algebra for Equivalence for example, The set of rules for ≡ in DeMorgan’s law the text are complete: ≡ NOT(P AND Q) ≡ if two formulas are , these rules can prove it. NOT(P) OR NOT(Q) Albert R Meyer February 14, 2014 propositional logic.5 Albert R Meyer February 14, 2014 propositional logic.6 A Proof System A Proof System Another approach is to Lukasiewicz’ proof system is a start with some valid particularly elegant example of this idea. formulas (axioms) and deduce more valid formulas using proof rules Albert R Meyer February 14, 2014 propositional logic.7 Albert R Meyer February 14, 2014 propositional logic.8 2 A Proof System Lukasiewicz’ Proof System Lukasiewicz’ proof system is a Axioms: particularly elegant example of 1) (¬P → P) → P this idea. It covers formulas 2) P → (¬P → Q) whose only logical operators are 3) (P → Q) → ((Q → R) → (P → R)) IMPLIES (→) and NOT. The only rule: modus ponens Albert R Meyer February 14, 2014 propositional logic.9 Albert R Meyer February 14, 2014 propositional logic.10 Lukasiewicz’ Proof System Lukasiewicz’ Proof System Prove formulas by starting with Prove formulas by starting with axioms and repeatedly applying axioms and repeatedly applying the inference rule. -

Chapter 9: Answers and Comments Step 1 Exercises 1. Simplification. 2. Absorption. 3. See Textbook. 4. Modus Tollens. 5. Modus P

Chapter 9: Answers and Comments Step 1 Exercises 1. Simplification. 2. Absorption. 3. See textbook. 4. Modus Tollens. 5. Modus Ponens. 6. Simplification. 7. X -- A very common student mistake; can't use Simplification unless the major con- nective of the premise is a conjunction. 8. Disjunctive Syllogism. 9. X -- Fallacy of Denying the Antecedent. 10. X 11. Constructive Dilemma. 12. See textbook. 13. Hypothetical Syllogism. 14. Hypothetical Syllogism. 15. Conjunction. 16. See textbook. 17. Addition. 18. Modus Ponens. 19. X -- Fallacy of Affirming the Consequent. 20. Disjunctive Syllogism. 21. X -- not HS, the (D v G) does not match (D v C). This is deliberate to make sure you don't just focus on generalities, and make sure the entire form fits. 22. Constructive Dilemma. 23. See textbook. 24. Simplification. 25. Modus Ponens. 26. Modus Tollens. 27. See textbook. 28. Disjunctive Syllogism. 29. Modus Ponens. 30. Disjunctive Syllogism. Step 2 Exercises #1 1 Z A 2. (Z v B) C / Z C 3. Z (1)Simp. 4. Z v B (3) Add. 5. C (2)(4)MP 6. Z C (3)(5) Conj. For line 4 it is easy to get locked into line 2 and strategy 1. But they do not work. #2 1. K (B v I) 2. K 3. ~B 4. I (~T N) 5. N T / ~N 6. B v I (1)(2) MP 7. I (6)(3) DS 8. ~T N (4)(7) MP 9. ~T (8) Simp. 10. ~N (5)(9) MT #3 See textbook. #4 1. H I 2. I J 3. -

Logic and Proof

CS2209A 2017 Applied Logic for Computer Science Lecture 11, 12 Logic and Proof Instructor: Yu Zhen Xie 1 Proofs • What is a theorem? – Lemma, claim, etc • What is a proof? – Where do we start? – Where do we stop? – What steps do we take? – How much detail is needed? 2 The truth 3 Theories and theorems • Theory: axioms + everything derived from them using rules of inference – Euclidean geometry, set theory, theory of reals, theory of integers, Boolean algebra… – In verification: theory of arrays. • Theorem: a true statement in a theory – Proved from axioms (usually, from already proven theorems) Pythagorean theorem • A statement can be a theorem in one theory and false in another! – Between any two numbers there is another number. • A theorem for real numbers. False for integers! 4 Axioms example: Euclid’s postulates I. Through 2 points a line segment can be drawn II. A line segment can be extended to a straight line indefinitely III. Given a line segment, a circle can be drawn with it as a radius and one endpoint as a centre IV. All right angles are congruent V. Parallel postulate 5 Some axioms for propositional logic • For any formulas A, B, C: – A ∨ ¬ – . – . – A • Also, like in arithmetic (with as +, as *) – , – Same holds for ∧. – Also, • And unlike arithmetic – ( ) 6 Counterexamples • To disprove a statement, enough to give a counterexample: a scenario where it is false – To disprove that • Take = ", = #, • Then is false, but B is true. – To disprove that if %& '( ) &, ( , then '( %& ) &, ( , • Set the domain of x and y to be {0,1} • Set P(0,0) and P(1,1) to true, and P(0,1), P(1,0) to false. -

CS 205 Sections 07 and 08 Supplementary Notes on Proof Matthew Stone March 1, 2004 [email protected]

CS 205 Sections 07 and 08 Supplementary Notes on Proof Matthew Stone March 1, 2004 [email protected] 1 Propositional Natural Deduction The proof systems that we have been studying in class are called natural deduction. This is because they permit the same lines of reasoning and the same form of argument that you see in ordinary mathematics. Students generally find it easier to represent their mathematical ideas in natural deduction than in other ways of doing proofs. In these systems the proof is a sequence of lines. Each line has a number, a formula, and a justification that explains why the formula can be introduced into the proof. The simplest kind of justification is that the formula is a premise, and the argument depends on it. Another common justification is modus ponens, which derives the consequent of a conditional in the proof whose antecedent is also part of the proof. Here is a simple proof with these two rules used together. Example 1 1P! QPremise 2Q! RPremise 3P Premise 4 Q Modus ponens 1,3 5 R Modus ponens 2,4 This proof assumes that P is true, that P ! Q,andthatQ ! R. It uses modus ponens to conclude that R must then be true. Some inference rules in natural deduction allow assumptions to be made for the purposes of argument. These inference rules create a subproof. A subproof begins with a new assumption. This assumption can be used just within this subproof. In addition, all the assumption made in outer proofs can be used in the subproof. -

Argument Forms and Fallacies

6.6 Common Argument Forms and Fallacies 1. Common Valid Argument Forms: In the previous section (6.4), we learned how to determine whether or not an argument is valid using truth tables. There are certain forms of valid and invalid argument that are extremely common. If we memorize some of these common argument forms, it will save us time because we will be able to immediately recognize whether or not certain arguments are valid or invalid without having to draw out a truth table. Let’s begin: 1. Disjunctive Syllogism: The following argument is valid: “The coin is either in my right hand or my left hand. It’s not in my right hand. So, it must be in my left hand.” Let “R”=”The coin is in my right hand” and let “L”=”The coin is in my left hand”. The argument in symbolic form is this: R ˅ L ~R __________________________________________________ L Any argument with the form just stated is valid. This form of argument is called a disjunctive syllogism. Basically, the argument gives you two options and says that, since one option is FALSE, the other option must be TRUE. 2. Pure Hypothetical Syllogism: The following argument is valid: “If you hit the ball in on this turn, you’ll get a hole in one; and if you get a hole in one you’ll win the game. So, if you hit the ball in on this turn, you’ll win the game.” Let “B”=”You hit the ball in on this turn”, “H”=”You get a hole in one”, and “W”=”you win the game”. -

Philosophy 109, Modern Logic Russell Marcus

Philosophy 240: Symbolic Logic Hamilton College Fall 2014 Russell Marcus Reference Sheeet for What Follows Names of Languages PL: Propositional Logic M: Monadic (First-Order) Predicate Logic F: Full (First-Order) Predicate Logic FF: Full (First-Order) Predicate Logic with functors S: Second-Order Predicate Logic Basic Truth Tables - á á @ â á w â á e â á / â 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 1 0 0 1 1 0 1 0 0 1 0 0 0 0 1 0 1 1 0 1 1 0 0 1 0 0 0 0 0 0 0 1 0 0 1 0 Rules of Inference Modus Ponens (MP) Conjunction (Conj) á e â á á / â â / á A â Modus Tollens (MT) Addition (Add) á e â á / á w â -â / -á Simplification (Simp) Disjunctive Syllogism (DS) á A â / á á w â -á / â Constructive Dilemma (CD) (á e â) Hypothetical Syllogism (HS) (ã e ä) á e â á w ã / â w ä â e ã / á e ã Philosophy 240: Symbolic Logic, Prof. Marcus; Reference Sheet for What Follows, page 2 Rules of Equivalence DeMorgan’s Laws (DM) Contraposition (Cont) -(á A â) W -á w -â á e â W -â e -á -(á w â) W -á A -â Material Implication (Impl) Association (Assoc) á e â W -á w â á w (â w ã) W (á w â) w ã á A (â A ã) W (á A â) A ã Material Equivalence (Equiv) á / â W (á e â) A (â e á) Distribution (Dist) á / â W (á A â) w (-á A -â) á A (â w ã) W (á A â) w (á A ã) á w (â A ã) W (á w â) A (á w ã) Exportation (Exp) á e (â e ã) W (á A â) e ã Commutativity (Com) á w â W â w á Tautology (Taut) á A â W â A á á W á A á á W á w á Double Negation (DN) á W --á Six Derived Rules for the Biconditional Rules of Inference Rules of Equivalence Biconditional Modus Ponens (BMP) Biconditional DeMorgan’s Law (BDM) á / â -(á / â) W -á / â á / â Biconditional Modus Tollens (BMT) Biconditional Commutativity (BCom) á / â á / â W â / á -á / -â Biconditional Hypothetical Syllogism (BHS) Biconditional Contraposition (BCont) á / â á / â W -á / -â â / ã / á / ã Philosophy 240: Symbolic Logic, Prof. -

Chapter 13: Formal Proofs and Quantifiers

Chapter 13: Formal Proofs and Quantifiers § 13.1 Universal quantifier rules Universal Elimination (∀ Elim) ∀x S(x) ❺ S(c) Here x stands for any variable, c stands for any individual constant, and S(c) stands for the result of replacing all free occurrences of x in S(x) with c. Example 1. ∀x ∃y (Adjoins(x, y) ∧ SameSize(y, x)) 2. ∃y (Adjoins(b, y) ∧ SameSize(y, b)) ∀ Elim: 1 General Conditional Proof (∀ Intro) ✾c P(c) Where c does not occur outside Q(c) the subproof where it is introduced. ❺ ∀x (P(x) → Q(x)) There is an important bit of new notation here— ✾c , the “boxed constant” at the beginning of the assumption line. This says, in effect, “let’s call it c.” To enter the boxed constant in Fitch, start a new subproof and click on the downward pointing triangle ❼. This will open a menu that lets you choose the constant you wish to use as a name for an arbitrary object. Your subproof will typically end with some sentence containing this constant. In giving the justification for the universal generalization, we cite the entire subproof (as we do in the case of → Intro). Notice that although c may not occur outside the subproof where it is introduced, it may occur again inside a subproof within the original subproof. Universal Introduction (∀ Intro) ✾c Where c does not occur outside the subproof where it is P(c) introduced. ❺ ∀x P(x) Remember, any time you set up a subproof for ∀ Intro, you must choose a “boxed constant” on the assumption line of the subproof, even if there is no sentence on the assumption line.