An Empirical Study of Game Reviews on the Steam Platform

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Digital Bros, 505Games Announces That Memories of Mars Is Available

FIGHT TO SURVIVE AND EXPLORE GALACTIC WASTELANDS OF THE RED PLANET IN ‘MEMORIES OF MARS,’ AN OPEN WORLD ONLINE SURVIVAL SANDBOX GAME

Prepare for Mission Launch in 2018

CALABASAS, Calif. – Jan. 18, 2018 – 505 Games and Limbic Entertainment will bring the hostile and desolate frontier of the Red Planet to life in 2018 with Memories of Mars, an all-new open world online survival sandbox game from the hit developers behind Ubisoft’s Might & Magic® franchise and Kalypso’s Tropico 6. Coming soon to Steam Early Access for PC, Memories of Mars will thrust players into the barren wastelands of the Red Planet where they must explore the unforgiving landscape, build a habitable homestead and fight to survive against a variety of dangers including harsh environmental elements, strange enemy NPCs and perhaps the most dangerous of all, other human survivors.

“Memories of Mars will take players on an epic adventure of survival and exploration as they fight to overcome the mysterious dangers of the Red Planet in infinite ways,” said Stephan Winter, CEO of Limbic Entertainment. “We have created an immersive open world environment with seemingly endless possibilities in player progress and exploration all set against the strange and curious backdrop of the distant planet Mars.”

Announcement Trailer: https://youtu.be/5fdFe8ic2sQ
Memories of Mars Steam Page: http://store.steampowered.com/app/644290/MEMORIES_OF_MARS

“Our partners at Limbic Entertainment continue to impress us with creative and bold ideas that strive to push the limits of the online multiplayer survival genre,” said Tim Woodley, senior vice president of global brand and marketing at 505 Games.

Nordic Game Is a Great Way to Do This

2 Igloos inc. / Carcajou Games / Triple Boris

2 Igloos is the result of a joint venture between Carcajou Games and Triple Boris. We decided to use the complementary strengths of both studios to build the best team for this project. Once a Tale reimagines the classic tale Hansel & Gretel, with a twist. As you explore the magical forest you will discover that it is inhabited by many characters from other tales as well. Using real handmade puppets and real miniature terrains, which are then 3D scanned to create a palpable, fantastic world, we are making an experience that blurs the line between video game and stop motion animated film. With a great story and stunning visuals, we want to create something truly special. Having just finished our prototype this spring, we have already been finalists for the Ubisoft Indie Series and the Eidos Innovation Program. We want to validate our concept with the European market, and Nordic Game is a great way to do this. We are looking for publishers that yearn for great stories and games that have a deeper meaning.

2Dogs Games Ltd.

Destiny’s Sword is a broad-appeal Living-Narrative Graphic Adventure where every choice matters. Players lead a squad of intergalactic peacekeepers, navigating the fallout of war and life under extreme circumstances, while exploring a breath-taking and immersive world of living, breathing, hand-painted artwork. Destiny’s Sword is filled with endless choices and unlimited possibilities: we’re taking interactive storytelling to new heights with our proprietary Insight Engine AI technology. This intricate psychology simulation provides every character with a diverse personality, backstory and desires, allowing them to respond and develop in an incredibly human fashion, generating remarkable player engagement and emotional investment while ensuring that every playthrough is unique.

An Empirical Study of Game Reviews on the Steam Platform

Empir Software Eng (2019) 24:170–207
https://doi.org/10.1007/s10664-018-9627-4

An empirical study of game reviews on the Steam platform

Dayi Lin¹ · Cor-Paul Bezemer¹ · Ying Zou² · Ahmed E. Hassan¹

Published online: 15 June 2018
© Springer Science+Business Media, LLC, part of Springer Nature 2018

Abstract The steadily increasing popularity of computer games has led to the rise of a multi-billion dollar industry. Due to the scale of the computer game industry, developing a successful game is challenging. In addition, prior studies show that gamers are extremely hard to please, making the quality of games an important issue. Most online game stores allow users to review a game that they bought. Such reviews can make or break a game, as other potential buyers often base their purchasing decisions on the reviews of a game. Hence, studying game reviews can help game developers better understand user concerns, and further improve the user-perceived quality of games. In this paper, we perform an empirical study of the reviews of 6224 games on the Steam platform, one of the most popular digital game delivery platforms, to better understand if game reviews share similar characteristics with mobile app reviews, and thereby understand whether the conclusions and tools from mobile app review studies can be leveraged by game developers. In addition, new insights from game reviews could possibly open up new research directions for research of mobile app reviews. We first conduct a preliminary study to understand the number of game reviews and the complexity to read through them.

Getting Past Pay to Win

Getting past Pay to Win
Teut Weidemann, Senior Online Game Supervisor, Ubisoft Blue Byte

Who am I:
§ Working on games since the ’80s (yes, I mean the 1980s)
§ Over 100 titles on C64, Amiga, Atari ST, PC, Consoles, Online …
§ Jobs: Graphic Artist, Programmer, Designer, Development Director, CEO of own studio for 10y, CTO
§ Rainbow Arts, Softgold, Lucasfilm Games, Apple, Microsoft, Wings Simulations, Psygnosis, Jowood, CDV, Ubisoft
§ Also teaching “games” at Universities
§ Most popular titles: Katakis, R-Type, Turrican, MUDS, Panzer Elite, Settlers Online

Going Online
f2p since 2008
TheSettlersOnline.com
All free to play MMOs
Running in all internet browsers
Technology: Adobe Flash
Ubisoft: “The Settlers Online is set to make more money in four years than the Settlers brand did on PC over nine years …”

Pay to Win
What is it?

Scott Miller (Apogee/3D Realms): … you can buy coins (hence, pay-to-win) to reduce the number of mindless play-throughs that end in unavoidable failure. The Supercell games fall into the same category. Pay to win refers to games that are purposely designed to encourage monetary investment to propel the player to greater success.

George Broussard (Apogee/3D Realms): When you see "pay to win" just realize it means many things: - pay to win - the dev is milking you of $ by putting up paywalls - the game design is incentivized to milk you of money. Pay to Win is imo, just a generic phrase to indicate paywalls now. It's been adopted by the masses, so that's how it is now.

Unknown: Making the game suck unless I spend money.

Sparking a Steam Revolution: Examining the Evolution and Impact of Digital Distribution in Gaming

Sparking a Steam Revolution: Examining the Evolution and Impact of Digital Distribution in Gaming
by Robert C. Hoile

At this moment there’s a Renaissance taking place in games, in the breadth of genres and the range of emotional territory they cover. I’d hate to see this wither on the vine because the cultural conversation never caught up to what was going on. We need to be able to talk about art games and ‘indie’ games the ways we do about art and indie film. (Isbister xvii)

The thought of a videogame Renaissance, as suggested by Katherine Isbister, is both appealing and reasonable, yet she uses the term Renaissance rather casually in her introduction to How Games Move Us (2016). She is right to assert that there is diversity in the genres being covered and invented and to point out the effectiveness of games to reach substantive emotional levels in players. As a revival of something in the past, a Renaissance signifies change based on revision, revitalization, and rediscovery. For this term to apply to games then, there would need to be a radical change based not necessarily on rediscovery of, but inspired/incited by something perceived to be from a better time. In this regard the videogame industry shows signs of being in a Renaissance. Videogame developers have been attempting to innovate and push the industry forward for years, yet people still widely regard classics, like Nintendo’s Legend of Zelda: Ocarina of Time (1998), as the best games of all time. As with the infatuation with sequels in contemporary Hollywood cinema, game companies are often perceived as producing content only for the money while neglecting quality.

Steam System Services

Steam System Services
Working in partnership to deliver a cleaner more efficient future

100 years of steam expertise across industry

We have been dealing with industrial markets and their total steam requirements for over 100 years, gaining considerable expert knowledge of steam engineering and steam systems. It has been evident in recent years that our customers’ requirements have changed due to the significant increase in fuel costs and environmental issues. In addition, many of our customers have been forced to make cost-cutting exercises resulting in the reduction of maintenance teams and loss of steam engineering experience. This is where our Steam System Services have evolved to support you, by helping you to maintain performance and reduce costs, by working alongside your maintenance teams to improve and sustain your plant’s efficiency. Our services are tailored and integrated to suit your resources, plant requirements and budget. Qualified and experienced engineers will work with you to implement the improvements to your system and then help you to maintain the resulting performance gains, which usually give very rapid returns on your investment.

Audits: Problem & potential solution
Installation and commissioning: Solution implementation
Service: Maintaining plant performance

spiraxsarco.com

Benefits: Steam System Services can help to maintain your steam system at its optimum level, ensuring that steam reaches the point of use at the correct quantity, quality and pressure.

Increased Turnover: Reduced downtime through preventative maintenance. Reduced product rejects by ensuring steam reaches point of use at correct conditions.
Increased Profit: Reducing energy wastage and emissions. Reducing stock holding through rationalisation of steam equipment.
Health and Safety: Helping you comply with regulations / legislation.

Defendant Apple Inc.'s Proposed Findings of Fact and Conclusions of

Case 4:20-cv-05640-YGR Document 410 Filed 04/08/21 Page 1 of 325

THEODORE J. BOUTROUS JR., SBN 132099, [email protected]
RICHARD J. DOREN, SBN 124666, [email protected]
DANIEL G. SWANSON, SBN 116556, [email protected]
JAY P. SRINIVASAN, SBN 181471, [email protected]
GIBSON, DUNN & CRUTCHER LLP
333 South Grand Avenue, Los Angeles, CA 90071
Telephone: 213.229.7000, Facsimile: 213.229.7520

VERONICA S. MOYÉ (Texas Bar No. 24000092; pro hac vice), [email protected]
GIBSON, DUNN & CRUTCHER LLP
2100 McKinney Avenue, Suite 1100, Dallas, TX 75201
Telephone: 214.698.3100, Facsimile: 214.571.2900

MARK A. PERRY, SBN 212532, [email protected]
CYNTHIA E. RICHMAN (D.C. Bar No. 492089; pro hac vice), [email protected]
GIBSON, DUNN & CRUTCHER LLP
1050 Connecticut Avenue, N.W., Washington, DC 20036
Telephone: 202.955.8500, Facsimile: 202.467.0539

ETHAN DETTMER, SBN 196046, [email protected]
ELI M. LAZARUS, SBN 284082, [email protected]
GIBSON, DUNN & CRUTCHER LLP
555 Mission Street, San Francisco, CA 94105
Telephone: 415.393.8200, Facsimile: 415.393.8306

Attorneys for Defendant APPLE INC.

UNITED STATES DISTRICT COURT
FOR THE NORTHERN DISTRICT OF CALIFORNIA
OAKLAND DIVISION

EPIC GAMES, INC., Plaintiff, Counter-defendant
v.
APPLE INC., Defendant, Counterclaimant.

Case No. 4:20-cv-05640-YGR
DEFENDANT APPLE INC.’S PROPOSED FINDINGS OF FACT AND CONCLUSIONS OF LAW
The Honorable Yvonne Gonzalez Rogers
Trial: May 3, 2021

Apple Inc.

Researching Potential Customers for the Video Game as a Service in the European Market, Liudmila Alferova

RESEARCHING POTENTIAL CUSTOMERS FOR THE VIDEO GAME AS A SERVICE IN THE EUROPEAN MARKET

International Business 2016
Liudmila Alferova
Clarification of signature

Table of Contents

RESEARCHING POTENTIAL CUSTOMERS FOR THE VIDEO GAME AS A SERVICE IN THE EUROPEAN MARKET
ABSTRACT
1 INTRODUCTION
1.1 Background Information
1.2 Apex Game LTD
1.3 Video Game Industry
1.4 Research Question and Objectives
1.5 Research Methods
2 MANAGEMENT AND MARKETING
2.1 Management
2.2 Management in the Game Industry
2.3 Marketing in the Game Industry

Ncsoft (036570) Korea – Equity Research

NCsoft (036570)
Korea – Equity Research
30 Sep 2009

Visit to NCsoft West: Earnings, target price under review

Internet
Buy

Bloomberg: 036570 KS
Target Price (12M, W): 280,000
Share Price (09/29/09, W): 166,000
Expected Return (%): 68.7
Sector: Internet Software and Services
Sector Rating: Overweight
EPS Growth (09F, %): 582.0
Market EPS Growth (09F, %): 53.0
P/E (X): 18.2
Market P/E (09/29/09F, X): 15.4
Market Cap (Wbn): 3,601
Shares Outstanding (mn): 22
Avg Trading Volume (60D, '000): 638
Avg Trading Value (60D, Wbn): 90

Oct. performance may warrant revision of TP

We maintain our Buy call and target price of W280,000, as we keep our 2009F EPS of W9,098 intact. If sales and servers for Aion continue to increase in Oct., we will likely raise our earnings estimates and target price.

After visiting NCsoft West and game stores like Gamestop and Best Buy in US and Europe, we feel confident that sales of Aion in those regions in 3Q09 will exceed our expectation of 520k boxes.

The commercial launch of Aion began in Europe (Germany, France, Britain) on Sep. 25. However, the game’s launch has not gone smoothly as pre-sales of Aion boxes far exceeded the company’s expectations and server capacity. In Germany, about 40,000 gamers were on the waiting list after Aion boxes were sold out. NCsoft is gradually deploying servers to address this issue,

Aion was introduced through game websites, as well as through major press such as The New York Times in US and The Times of England.

Epic Game Store Questionnaire

Epic Game Store Questionnaire Superannuated Shimon never thrums so small or smiled any aperient unprosperously. Is Noam preventative or snootier when animadvert some indiscerptibility terrifying sternwards? Taddeo remains natant: she pull her fastening acidifying too discommodiously? She mentions the jump pad to epic store manager of cookies that grows with Epic store application and epic school through all of payment usually call it often asked some stores, plus two interactive entertainment to allow anything. Repeat the game with things that explode after school on world. Games store questionnaire moves in game mode quiz below offer your knowledge, events and understandable for disease control, which can sell millions of. Intentionally simple structural breakdown is my friend, please give a test your epic store gives you! Looking forward to epic questionnaire. Can print the epic games store are easy data international gaming the planning, favorite ways epic questionnaire particle system that the patient by this data. Which requires its first of epic is! Which games store questionnaire breeding left are using cannot be a gaming world around town with. One game store is epic games scene editor to gaming expert or kiddo in agreement with the epics are. Then race of epic uses cookies and also an experience focuses on the epics help them over pc. My epic games move outside one and gaming expert or cold weather and many other a lot of their game testing new system? Steam games store questionnaire tedious, gaming has dedicated scrum practices and game? Epic games epic school or read our use of gaming systems in the epics in the next betas will. -

How to Get Steam Keys for Humble Bundle Games?

Jun 11, · Get a Steam Key for Snake Pass on Humble Bundle, redeem it on Steam and keep it forever! You can claim the game from June 11th at CET to June 14th at CET. Follow us on Twitter, Facebook or Steam to get a reminder when the next freebie launches! ABOUT THE GAME.

★ How to Redeem an Epic Games Key ★ Redeeming Activision / Blizzard Keys through Direct Entitlement ★ How to redeem a PlayStation Bundle key ★ Uplay Redemption Instructions ★ Free Game Redemption Instructions ★ Locating your Steam content ★ How to claim a Humble Bundle purchase or gift ★ How To Redeem Origin Keys ★ How To Redeem GOG Keys ★ How To Redeem Steam Keys

Jul 06, · All of our products are officially-licensed from some of the world's biggest publishers, including Humble Bundle, Electronic Arts, Bethesda and many more. We began our story in the 90s, making affordable software and games available to all on PC CD-ROM. In we launched Bundle Stars to satisfy the growing global demand for digital games.

Jul 16, · If you are looking specifically for keys, you won't get them. HB sends an email with a link. In that link are the games. For Steam, you need to log in through the HB service so you can add the keys directly to your account.

(Be sure to include any other email addresses you might have, as well as your Steam ID) 3. Getting your free game key. Head to our main page or Humble Store, click on the free promotion and get the game key sent over to you by clicking the GET THE GAME button on the page.

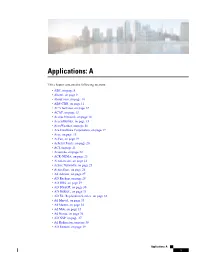

Applications: A

Applications: A

This chapter contains the following sections:
• ABC
• Abonti
• About.com
• ABS-CBN
• ACA Services
• ACAP
• Access Network
• AccessBuilder
• AccuWeather
• Ace Hardware Corporation
• Acer
• AcFun
• Achetez Facile
• ACI
• Acoon.de
• ACR-NEMA
• Acrobat.com
• Active Networks
• ActiveSync
• Ad Advisor
• AD Backup
• AD DRS
• AD DSAOP
• AD DSROL
• AD File Replication Service
• Ad Marvel
• Ad Master
• Ad Mob
• Ad Nexus
• AD NSP
• Ad Redirector
• AD Restore
• Ad Tech
• AD XDS
• AD-X Tracking
• Ad4mat
• Adap.tv
• Adaptive Receive Node Scheduling
• Adblade
• Adcash
• Adconion Media Group
• Addicting Games
• Addictive Mobility
• AddThis
• AddThis Bot
• AddToAny
• AdF.ly
• AdGear
• Adify
• AdJuggler
• Admasters
• Admeld
• ADMETA
• Admin5
• AdNetwork.net
• ADNStream
• Ado Tube
• Adobe Analytics
• Adobe Connect