The Quality of Statistical Reporting and Data Presentation in Predatory Dental Journals Was Lower Than in Non-Predatory Journals

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


REVIEW Bibliographic Analysis of Oral Precancer and Cancer Research Papers from Saudi Arabia

DOI: 10.31557/APJCP.2020.21.1.13. Bibliometric Analysis of Saudi Precancer/Cancer Papers. REVIEW. Editorial process: submission 10/01/2019, acceptance 12/16/2019. Bibliographic Analysis of Oral Precancer and Cancer Research Papers from Saudi Arabia. Shankargouda Patil, Sachin C Sarode*, Hosam Ali Baeshen, Shilpa Bhandi, A Thirumal Raj, Gargi S Sarode, Sadiq M Sait, Amol R Gadbail, Shailesh Gondivkar. Abstract. Objective: Oral cancer and precancers are a major public health challenge in developing countries. Researchers in Saudi Arabia have constantly been directing their efforts on oral cancer research and have their results published. Systematic analysis of such papers is the need of the hour as it will not only acknowledge the current status but will also help in framing future policies on oral cancer research in Saudi Arabia. Method: The search string “oral cancer” OR “Oral Squamous Cell Carcinoma” OR “oral premalignant lesion” OR “oral precancer” OR “Oral Potentially malignant disorder” AND AFFIL (Saudi AND Arabia) was used for retrieval of articles from the Scopus database. Various tools available in the Scopus database were used for analyzing the bibliometric parameters. Results: The search revealed a total of 663 publications based on the above query. Maximum affiliations were from King Saud University (163) followed by Jazan University (109) and then King Abdulaziz University (106). A large number of international collaborations were observed, the maximum with India (176) and the USA (127). The maximum number of articles were published in the Asia Pacific Journal of Cancer Prevention (34) followed by the Journal of Contemporary Dental Practice (33) and Journal of Oral Pathology and Medicine (19).

Machine Learning for Prognosis of Oral Cancer: What Are the Ethical Challenges?

Proceedings of the Conference on Technology Ethics 2020 - Tethics 2020. Machine learning for prognosis of oral cancer: What are the ethical challenges? Long paper. Rasheed Omobolaji Alabi (1) [0000-0001-7655-5924], Tero Vartiainen (2) [0000-0003-3843-8561], Mohammed Elmusrati (1) [0000-0001-9304-6590]. (1) Department of Industrial Digitalization, School of Technology and Innovations, University of Vaasa, Vaasa, Finland. (2) Department of Computer Science, School of Technology and Innovations, University of Vaasa, Vaasa, Finland. Abstract. Background: Machine learning models have shown high performance, particularly in the diagnosis and prognosis of oral cancer. However, in actual everyday clinical practice, the diagnosis and prognosis using these models remain limited. This is due to the fact that these models have raised several ethical and morally laden dilemmas. Purpose: This study aims to provide a systematic state-of-the-art review of the ethical and social implications of machine learning models in oral cancer management. Methods: We searched the OvidMedline, PubMed, Scopus, Web of Science and Institute of Electrical and Electronics Engineers databases for articles examining the ethical issues of machine learning or artificial intelligence in medicine, healthcare or care providers. The Preferred Reporting Items for Systematic Review and Meta-Analysis was used in the searching and screening processes. Findings: A total of 33 studies examined the ethical challenges of machine learning models or artificial intelligence in medicine, healthcare or diagnostic analytics. Some ethical concerns were data privacy and confidentiality, peer disagreement (contradictory diagnostic or prognostic opinion between the model and the clinician), patient’s liberty to decide the type of treatment to follow may be violated, patients–clinicians’ relationship may change and the need for ethical and legal frameworks.

ORAL ONCOLOGY a Journal Related to Head & Neck Oncology

ORAL ONCOLOGY: A Journal Related to Head & Neck Oncology. Author Information Pack. Table of contents: Description; Impact Factor; Abstracting and Indexing; Editorial Board; Guide for Authors. ISSN: 1368-8375. DESCRIPTION: Oral Oncology is an international interdisciplinary journal which publishes high quality original research, clinical trials and review articles, editorials, and commentaries relating to the etiopathogenesis, epidemiology, prevention, clinical features, diagnosis, treatment and management of patients with neoplasms in the head and neck. Oral Oncology is of interest to head and neck surgeons, radiation and medical oncologists, maxillofacial surgeons, oto-rhino-laryngologists, plastic surgeons, pathologists, scientists, oral medical specialists, special care dentists, dental care professionals, general dental practitioners, public health physicians, palliative care physicians, nurses, radiologists, radiographers, dieticians, occupational therapists, speech and language therapists, nutritionists, clinical and health psychologists and counselors, professionals in end of life care, as well as others interested in these fields. Basic, translational, or clinical Research or Review papers of high quality and that make a contribution to new knowledge are invited on the following aspects of neoplasms arising in the head and neck (including lip, tongue, oral cavity, oropharynx, salivary glands, sinuses, nose, nasopharynx, larynx, skull base, thyroid, and craniofacial region, and the related hard and

ARCHIVES of ORAL BIOLOGY a Multidisciplinary Journal of Oral & Craniofacial Sciences

ARCHIVES OF ORAL BIOLOGY: A Multidisciplinary Journal of Oral & Craniofacial Sciences. Author Information Pack, 28 Sep 2021, www.elsevier.com/locate/archoralbio. Table of contents: Description; Audience; Impact Factor; Abstracting and Indexing; Editorial Board; Guide for Authors. ISSN: 0003-9969. DESCRIPTION: Archives of Oral Biology is an international journal which aims to publish papers of the highest scientific quality in the oral and craniofacial sciences including: developmental biology; cell and molecular biology; molecular genetics; immunology; pathogenesis; microbiology; biology of dental caries and periodontal disease; forensic dentistry; neuroscience; salivary biology; mastication and swallowing; comparative anatomy; paleodontology. Archives of Oral Biology will also publish expert reviews and articles concerned with advancement in relevant methodologies. The journal will only consider clinical papers where they make a significant contribution to the understanding of a disease process. AUDIENCE: Oral biologists, physiologists, anatomists, pathologists. IMPACT FACTOR: 2020: 2.633 © Clarivate Analytics Journal Citation Reports 2021. ABSTRACTING AND INDEXING: Elsevier BIOBASE, Nutrition Research Newsletter, Nutrition Abstracts and Reviews Series, PharmacoEconomics and Outcomes News, Pig News and Information, Reactions Weekly, Review of Medical and Veterinary Entomology, Science Citation Index, SIIC Data Bases, Soils and Fertilizers, Sugar Industry Abstracts, Tropical Diseases Bulletin

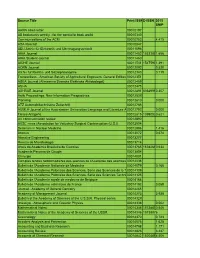

Journal List of Scopus.Xlsx

Source list including Conference Proceedings available in the scopus.com Source Browse list. Source titles exclude CSA; Medline-sourced journals are indicated in green.

| Source record id | Source Title | Print-ISSN |
|---|---|---|
| 16400154734 | A + U-Architecture and Urbanism | 03899160 |
| 5700161051 | A Contrario. Revue interdisciplinaire de sciences sociales | 16607880 |
| 19600162043 | A.M.A. American Journal of Diseases of Children | 00968994 |
| 19400157806 | A.M.A. archives of dermatology | 00965359 |
| 19600162081 | A.M.A. Archives of Dermatology and Syphilology | 00965979 |
| 19400157807 | A.M.A. archives of industrial health | 05673933 |
| 19600162082 | A.M.A. Archives of Industrial Hygiene and Occupational Medicine | 00966703 |
| 19400157808 | A.M.A. archives of internal medicine | 08882479 |
| 19400158171 | A.M.A. archives of neurology | 03758540 |
| 19400157809 | A.M.A. archives of neurology and psychiatry | 00966886 |
| 19400157810 | A.M.A. archives of ophthalmology | 00966339 |
| 19400157811 | A.M.A. archives of otolaryngology | 00966894 |
| 19400157812 | A.M.A. archives of pathology | 00966711 |
| 19400157813 | A.M.A. archives of surgery | 00966908 |
| 5800207606 | AAA, Arbeiten aus Anglistik und Amerikanistik | 01715410 |
| 28033 | AAC: Augmentative and Alternative Communication | 07434618 |
| 50013 | AACE International. Transactions of the Annual Meeting | 15287106 |
| 19300156808 | AACL Bioflux | 18448143 |
| 4700152443 | AACN Advanced Critical Care | 15597768 |
| 26408 | AACN clinical issues | 10790713 |
| 51879 | AACN clinical issues in critical care nursing | 10467467 |
| 26729 | AANA Journal | 00946354 |
| 66438 | AANNT journal / the American Association of Nephrology Nurses and Technicians | 07441479 |
| 5100155055 | AAO Journal | |
| 27096 | AAOHN | |

JCR Bibliometrics Report 2016

JCR Bibliometrics Report 2016. American College of Prosthodontists. Journal of Prosthodontics. The information and details provided in this report are proprietary and contain information provided in confidence by Wiley to the American College of Prosthodontists. It is understood that both parties shall treat the contents of this report in strict confidence in perpetuity. © 2017 Wiley.

Contents

Impact Factor Metrics and Calculations
    Journals Citation Ranking (JCR) metrics
    2016 Impact Factor calculations
    Journal of Prosthodontics Impact Factor Breakdown
    Comparison with Peer Journals
    Journal and peer journal Impact Factor history
Citation Trends
    Citation Distributions
    Document Types
    Geographical Analysis
    Top 20 Institutions by

Scimago Journal Rank Indicator: a Viable Alternative to Journal Impact Factor for Dental Journals

Volume 26, issue 2, pages 144-151 (2016). SCImago Journal Rank Indicator: A Viable Alternative to Journal Impact Factor for Dental Journals. Khalid Mahmood (corresponding author), Department of Information Management, University of the Punjab, Lahore, Pakistan. Khalid Almas, College of Dentistry, University of Dammam, Saudi Arabia. ABSTRACT. Objective. This paper investigated the possibility of SCImago Journal Rank (SJR) indicator as an alternative to the Journal Impact Factor (JIF) in the field of dentistry. Method. The SJR and JIF scores and ranking order of 88 dental journals were downloaded from the relevant websites. Pearson and Spearman correlation coefficients were calculated to test hypotheses for association between the two journal quality metrics. Result. A very strong positive correlation was found between the scores and ranking order based on the SJR and JIF of selected journals. Hence, academics and researchers in dentistry can use the SJR indicator as an alternative to JIF. INTRODUCTION. Assessment of research quality is an important activity in all academic and professional fields. Citation-based evaluation metrics have been the most common method in the last few decades. As the only provider of citation data, the Institute for Scientific Information (now Thomson Reuters) has a long-established monopoly of the market. The Journal Impact Factor (JIF), first conceived in 1955 by Eugene Garfield, was the main quantitative measure of quality for scientific journals. The JIFs, published annually in the Journal Citation Reports (JCR), are widely used for quality ranking of journals and extensively used by leading journals in their advertising (Elsaie & Kammer, 2009).

Source Title Print ISSNE-ISSN 2015 SNIP AARN News Letter

Source Title Print ISSNE-ISSN 2015 SNIP AARN news letter 00010197 AB bookman's weekly : for the specialist book world 00010340 Communications of the ACM 00010782 4.415 ADA forecast 00010847 AEU-Archiv für Elektronik und Uberträgungstechnik 00011096 AIAA Journal 00011452 15333851.656 AIAA Student Journal 00011460 AICHE Journal 00011541 15475901.391 AORN Journal 00012092 0.520 Archiv fur Rechts- und Sozialphilosophie 00012343 0.119 Transactions - American Society of Agricultural Engineers: General Edition 00012351 ASEA Journal (Allmaenna Svenska Elektriska Aktiebolaget) 00012459 ASHA 00012475 ASHRAE Journal 00012491 03649960.307 Aslib Proceedings: New Information Prespectives 0001253X Planning 00012610 0.000 ATZ Automobiltechnische Zeitschrift 00012785 AUMLA-Journal of the Australasian Universities Language and Literature A 00012793 0.000 Tissue Antigens 00012815 13990030.621 AV communication review 00012890 AVSC news (Association for Voluntary Surgical Contraception (U.S.)) 00012904 Seminars in Nuclear Medicine 00012998 1.416 Abacus 00013072 0.674 Abrasive Engineering 00013277 Revista de Microbiologia 00013714 Anais da Academia Brasileira de Ciencias 00013765 16782690.634 Academia Peruana de Cirugia 00013854 Chirurgie 00014001 Comptes rendus hebdomadaires des séances de l'Académie des sciences 00014036 Bulletin de l'Academie Nationale de Medecine 00014079 0.166 Bulletin de l'Academie Polonaise des Sciences. Serie des Sciences de la T 00014109 Bulletin de l'Academie Polonaise des Sciences. Serie des Sciences Techni 00014125 Bulletin de l'Academie royale de medecine de Belgique 00014168 Bulletin de l'Academie veterinaire de France 00014192 0.059 Journal - Academy of General Dentistry 00014265 Academy of Management Journal 00014273 3.938 Bulletin of the Academy of Sciences of the U.S.S.R. -

JOURNAL of ORAL BIOSCIENCES the Official Journal of the Japanese Association for Oral Biology

JOURNAL OF ORAL BIOSCIENCES: The official journal of the Japanese Association for Oral Biology. Author Information Pack. Table of contents: Description; Abstracting and Indexing; Editorial Board; Guide for Authors. ISSN: 1349-0079. DESCRIPTION: The Journal of Oral Biosciences (JOB) is the official journal of the Japanese Association for Oral Biology, and is published quarterly in addition to a supplementary issue for the Proceedings of the Annual Meeting of the Japanese Association for Oral Biology. The Journal is an interdisciplinary journal, devoted to the advancement and dissemination of fundamental knowledge concerning every aspect of oral biosciences including cariology research, craniofacial biology, dental materials, implant biology, geriatric oral biology, microbiology/immunology and infection control, mineralized tissue, neuroscience, oral oncology, periodontal research, pharmacology, pulp biology, salivary research, and other fields. A global journal, JOB welcomes submission from both society members and non-members from around the world. Article types accepted include original and review articles, short communications, technical notes and letters. Authors submitting to the journal may choose to publish their article open access. ABSTRACTING AND INDEXING: PubMed/Medline, Scopus, Emerging Sources Citation Index (ESCI). EDITORIAL BOARD: Editor-in-Chief: Norio Amizuka, Hokkaido University, Sapporo, Japan (Developmental Biology of Hard Tissue). Vice Editors-in-Chief: Kenji Mishima, Showa University Graduate School

The 100 Most Cited Articles in Dentistry

Clin Oral Invest, DOI 10.1007/s00784-013-1017-0. ORIGINAL ARTICLE. The 100 most cited articles in dentistry. Javier F. Feijoo & Jacobo Limeres & Marta Fernández-Varela & Isabel Ramos & Pedro Diz. Received: 17 October 2012 / Accepted: 4 June 2013. © Springer-Verlag Berlin Heidelberg 2013. Abstract. Objectives: To identify the 100 most cited articles published in dental journals. Materials and methods: A search was performed on the Institute for Scientific Information (ISI) Web of Science for the most cited articles in all the journals included in the Journal Citation Report (2010 edition) in the category of “Dentistry, Oral Surgery, and Medicine”. Each one of the 77 journals selected was analyzed using the Cited Reference Search tool of the ISI Web of Science database to identify the most cited articles up to June 2012. The following information was gathered from each article: names and number of authors, journal, year of publication, type of study, method- […] opinion (19 %). The most common area of study was periodontology (43 % of articles). Conclusions: To our knowledge, this is the first report of the top-cited articles in Dentistry. There is a predominance of clinical studies, particularly case series and narrative reviews/expert opinions, despite their low-evidence level. The focus of the articles has mainly been on periodontology and implantology, and the majority has been published in the highest impact factor dental journals. Clinical significance: The number of citations that an article receives does not necessarily reflect the quality of the research, but the present study gives some clues to the topics and authors contributing to major advances in Dentistry.

Leticia Citelli Conti

Leticia Citelli Conti. DISSERTATION. Interrelationship between apical periodontitis and atherosclerosis: metabolic, histological and lipid profile analysis. Araçatuba-SP, 2018. Dissertation presented to the Faculdade de Odontologia de Araçatuba, Universidade Estadual Paulista “Júlio de Mesquita Filho” (UNESP), as part of the requirements for the degree of Master in Dental Science, concentration area Endodontics. Advisor: Prof. Adj. Luciano Tavares Angelo Cintra. Co-advisor: Profª. Dra. Suely Regina Mogami Bomfim. Cataloging-in-Publication (CIP), Technical Directorate of Library and Documentation, FOA / UNESP: Conti, Leticia Citelli. C762i. Interrelationship between apical periodontitis and atherosclerosis: metabolic, histological and lipid profile analysis / Leticia Citelli Conti. Araçatuba, 2018. 123 leaves: ill.; tables. Dissertation (Master's), Universidade Estadual Paulista, Faculdade de Odontologia de Araçatuba. Advisor: Prof. Luciano Tavares Angelo Cintra. Co-advisor: Profa. Suely Regina Mogami Bomfim. 1. Periapical periodontitis 2. Atherosclerosis 3. Histology 4. Cholesterol I. Title. Black D24, CDD 617.67. Claudio Hideo Matsumoto, CRB-8/5550. Dedication: I dedicate this work to the most important people in my life, my parents: Isabel de Lurdes Citelli Conti and Marcio Forlan Conti. Thank you very much for building a beautiful love story together, for loving each other unconditionally to such an extent

Publications

PUBLICATIONS

1. BKM Reddy, V Lokesh, MS Vidyasagar, K Shenoy, KG Babu, et al. Nimotuzumab provides survival benefit to patients with inoperable advanced squamous cell carcinoma of the head and neck: A randomized, open-label, phase IIB, 5-year study in Indian patients. Oral Oncology 2014; 50:498-505. INDEXED IN EMBASE, ELSEVIER BIOBASE, SCIENCE CITATION INDEX, SCISEARCH, RESEARCH ALERT, CURRENT CONTENTS/CLINICAL MEDICINE, MEDLINE, CURRENT CLINICAL CANCER, CINAHL, GLOBAL HEALTH, SCOPUS. (ORIGINAL RESEARCH ARTICLE)

2. Shenoy VK, Shenoy KK, Rodrigues S, Shetty P. Management of oral health in patients irradiated for head and neck cancer-a review. Kathmandu University Medical Journal. 2007;5(1-17):117-120. INDEXED IN MEDLINE/PUBMED. (ORIGINAL RESEARCH ARTICLE)

3. Shobha Rodrigues, Vidya Kamalaksh Shenoy, Kamalaksh Shenoy. Prosthetic Rehabilitation of a patient after partial rhinectomy: a clinical report. J Prosthet Dent 2005; 93:125-128. INDEXED IN SCOPUS. (ORIGINAL RESEARCH ARTICLE)

4. Fluka Monte Carlo for basic dosimetric studies of dual energy medical linear accelerator. Siddharth, Shakir KK. Guided/Graded Motor Imagery for Cancer Pain: Exploring the Mind-Brain Inter-relationship. Senthil Paramashivam Kumar, Anup Kumar, Kamalaksh Shenoy, Mariella D’Souza, Vijaya K Kumar. Indian J Palliat Care 2013;19(2):125-126. INDEXED IN DOAJ, EMERGING SOURCES CITATION INDEX, INDEX COPERNICUS, INDIAN SCIENCE ABSTRACTS, PUBMED CENTRAL, SCIMAGO JOURNAL RANKING, SCOPUS. (ORIGINAL RESEARCH ARTICLE)

5. K Reshma, S Kamalaksh, YS Bindu, K Pramod, A Asfa, D Amritha, S Aswathi, R Chandrashekhar. Tulasi (Ocimum Sanctum) as radioprotector in head and neck cancer patients undergoing radiation therapy. Biomedicine 2012;32(1):39–44. INDEXED IN MEDLINE, SCISEARCH, BIOSIS/BIOLOGICAL ABSTRACTS, PASCAL/INIST-CNRS, CURRENT CONTENTS/LIFE SCIENCES, EMBASE/EXCERPTA MEDICA, SCIENCE CITATION INDEX, SCIENCEDIRECT, ELSEVIER BIOBASE, SCOPUS, RESEARCH4LIFE (HINARI). (ORIGINAL RESEARCH ARTICLE)

6.