Introduction to Storage Area Networks

Recommended publications

FICON Native Implementation and Reference Guide

Architecture, terminology, and topology concepts. Planning, implementation, and migration guidance. Realistic examples and scenarios.

Bill White, JongHak Kim, Manfred Lindenau, Ken Trowell. ibm.com/redbooks. International Technical Support Organization, October 2002, SG24-6266-01.

Second Edition (October 2002). This edition applies to FICON channel adaptors installed and running in FICON native (FC) mode in the IBM zSeries processors (at hardware driver level 3G) and the IBM 9672 Generation 5 and Generation 6 processors (at hardware driver level 26). © Copyright International Business Machines Corporation 2001, 2002. All rights reserved. Note to U.S. Government Users Restricted Rights: Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Contents: Notices; Trademarks; Preface (The team that wrote this redbook; Become a published author; Comments welcome).

Chapter 1. Overview: 1.1 How to use this redbook; 1.2 Introduction to FICON; 1.3 zSeries and S/390 9672 G5/G6 I/O connectivity; 1.4 zSeries and S/390 FICON channel benefits.

Chapter 2. FICON topology and terminology: 2.1 Basic Fibre Channel terminology; 2.2 FICON channel topology (2.2.1 Point-to-point configuration; 2.2.2 Switched point-to-point configuration; 2.2.3 Cascaded FICON Directors configuration); 2.3 Access control; 2.4 Fibre Channel and FICON terminology.

Cisco MDS 9250i Multiservice Fabric Switch for IBM: An Optimized Solution for Departmental and Branch-Office SANs as Well as Large-Scale SANs

IBM Systems Data Sheet

Cisco MDS 9250i Multiservice Fabric Switch for IBM® System Storage® is an optimized platform for deploying high-performance SAN extension solutions, distributed intelligent fabric services and cost-effective multiprotocol connectivity for both open systems and mainframe environments. With a compact form factor and advanced capabilities normally available only on director-class switches, MDS 9250i is an ideal solution for departmental and remote branch-office SANs as well as large-scale SANs in conjunction with the Cisco MDS 9710 Multilayer Director.

MDS 9250i offers up to 40 16 Gbps Fibre Channel ports, two 10 Gigabit Ethernet IP storage services ports and eight 10 Gigabit Ethernet Fibre Channel over Ethernet (FCoE) ports in a fixed, two-rack-unit (2RU) form factor. MDS 9250i connects to existing native Fibre Channel networks, protecting current investments in storage networks.

Highlights
● Provide 16 Gbps connectivity in a high-density Fibre Channel switch
● Enable storage area network (SAN) consolidation with integrated multiprotocol support
● Deliver high-performance Fibre Channel over IP (FCIP) and fast disaster recovery
● Support hardware-based virtual fabric isolation with virtual SANs (VSANs) and Fibre Channel routing with Inter-VSAN routing (IVR)
● Enable cost-effective, high-performing Fibre Channel and FCIP connectivity for open systems and mainframe environments
● Cisco Data Mobility Manager (DMM) as a distributed fabric service

Main features and benefits

MDS 9250i provides unique multiservice and multiprotocol functions in a compact 2RU form factor.

IBM System Z9 Enterprise Class

The server built to help optimize your resources throughout the enterprise

A “classic” might just be the best

Today’s market finds that business needs are changing, and having a competitive advantage isn’t always about having more or being bigger, but more about being smarter and responding faster to change and to your clients. Often, being reactive to change has led to infrastructures with mixed technologies, spread across an enterprise, that are complex and difficult to control and costly to manage. Integration of applications and data is limited and difficult. Using internal information to make insightful decisions for the company can be difficult because knowing you are using the “best” data, that which is most current and complete, may not be possible.

In many situations, investments have been made in disparate technologies that may fall short of meeting their goals. Merging information from one branch to another may not be possible and so company direction is set with only a portion of the data at hand, and in a global economy that can really hurt. [...] help achieve advanced I/O function and [...] But data management can be a big [...]

Highlights
■ Strengthening the role of the mainframe as the data hub of the enterprise
■ New versatile capacity settings designed to optimize capacity and cost
■ IBM System z9™ Integrated Information Processor (IBM zIIP) is designed to improve resource optimization and lower the cost of eligible work
■ Continued improvement in IBM FICON® performance and throughput
■ On demand innovative technologies to help meet ever-changing business demands

What Is a Storage Area Network?

Storage Area Network Essentials, Chapter 1: What Storage Networking Is and What It Can Mean to You

In this chapter we’ll learn more about:
● What a storage area network is
● What properties a storage area network must have, should have, and may have
● The importance of software to storage area networks
● Why information services professionals should be interested in storage networking
● Information processing capabilities enabled by storage area networks
● Some quirks in the vocabulary of storage networking

What Is a Storage Area Network?

According to the Storage Networking Industry Association (and who should know better?): A storage area network (SAN) is any high-performance network whose primary purpose is to enable storage devices to communicate with computer systems and with each other.

We think that the most interesting things about this definition are what it doesn’t say:
● It doesn’t say that a SAN’s only purpose is communication between computers and storage. Many organizations operate perfectly viable SANs that carry occasional administrative and other application traffic.
● It doesn’t say that a SAN uses Fibre Channel or Ethernet or any other specific interconnect technology. A growing number of network technologies have architectural and physical properties that make them suitable for use in SANs.
● It doesn’t say what kind of storage devices are interconnected. Disk and tape drives, RAID subsystems, robotic libraries, and file servers are all being used productively in SAN environments today.

Fibre Channel Solution Guide

Fibre Channel Solutions Guide 2019. fibrechannel.org. Fibre Channel: Powering the next generation private, public, and hybrid cloud storage networks.

About the FCIA: The Fibre Channel Industry Association (FCIA) is a non-profit international organization whose sole purpose is to be the independent technology and marketing voice of the Fibre Channel industry. We are committed to helping member organizations promote and position Fibre Channel, and to providing a focal point for Fibre Channel information, standards advocacy, and education.

Contact the FCIA: For more information: www.fibrechannel.org • [email protected]

Table of Contents: Foreword; FCIA President Introduction; The State of Fibre Channel by Storage Switzerland; Fibre Channel New Technologies: FC-NVMe-2; The 2019 Fibre Channel Roadmap; Fibre Channel’s Future is Bright in Media and Entertainment; Securing Fibre Channel SANs with End-to-End Encryption.

Foreword, by Rupin Mohan, FCIA Marketing Chairman; Director R&D and CTO, Hewlett-Packard Enterprise

It’s 2019, and Fibre Channel continues to remain the premier storage fabric connectivity protocol in today’s data centers. Fibre Channel is deployed by thousands of customers in their data centers around the world and 80–90% of all All-Flash storage arrays are connected to servers via Fibre Channel. Customers have recently made a considerable investment in Gen 6 (32GFC), and given the 4-5-year depreciation cycle, this equipment will continue to run critical business applications requiring reliable, fast and scalable storage infrastructure. The NVMe over Fibre Channel (FC-NVMe) standard is published, and we see products being announced and released in the market across the board.

Troubleshooting FICON

Send documentation comments to [email protected]

Chapter 16: Troubleshooting FICON (Cisco MDS 9000 Family Troubleshooting Guide, Release 3.x, OL-9285-05)

Fibre Connection (FICON) interface capabilities enhance the Cisco MDS 9000 Family by supporting both open systems and mainframe storage network environments. Inclusion of Control Unit Port (CUP) support further enhances the MDS offering by allowing in-band management of the switch from FICON processors.

This chapter includes the following sections:
• FICON Overview
• FICON Configuration Requirements
• Initial Troubleshooting Checklist
• FICON Issues

FICON Overview

The Cisco MDS 9000 Family supports the Fibre Channel, FICON, iSCSI, and FCIP capabilities within a single, high-availability platform. Fibre Channel and FICON are different FC4 protocols and their traffic is independent of each other. If required, devices using these protocols can be isolated using VSANs. The Cisco SAN-OS FICON feature supports high-availability, scalability, and SAN extension technologies including VSANs, IVR, FCIP, and PortChannels.

Tip: When you create a mixed environment, place all FICON devices in one VSAN (other than the default VSAN) and segregate the FCP switch ports in a separate VSAN (other than the default VSAN). This isolation ensures proper communication for all connected devices.

You can implement FICON on the following switches:
• Any switch in the Cisco MDS 9500 Series.
• Any switch in the Cisco MDS 9200 Series (including the Cisco MDS 9222i Multiservice Modular Switch).
• Cisco MDS 9134 Multilayer Fabric Switch.
• MDS 9000 Family 18/4-Port Multiservice Module.

Note: The FICON feature is not supported on Cisco MDS 9120, 9124 or 9140 switches, the 32-port Fibre Channel switching module, Cisco Fabric Switch for HP c-Class BladeSystem or Cisco Fabric Switch for IBM BladeCenter.

IBM Z Connectivity Handbook

Octavian Lascu, John Troy, Anna Shugol, Frank Packheiser, Kazuhiro Nakajima, Paul Schouten, Hervey Kamga, Jannie Houlbjerg, Bo XU. IBM Redbooks, August 2020, SG24-5444-20.

Twenty-first Edition (August 2020). This edition applies to connectivity options available on the IBM z15 (M/T 8561), IBM z15 (M/T 8562), IBM z14 (M/T 3906), IBM z14 Model ZR1 (M/T 3907), IBM z13, and IBM z13s. © Copyright International Business Machines Corporation 2020. All rights reserved. Note to U.S. Government Users Restricted Rights: Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Contents: Notices; Trademarks; Preface (Authors; Now you can become a published author, too!; Comments welcome; Stay connected to IBM Redbooks).

Chapter 1. Introduction: 1.1 I/O channel overview (1.1.1 I/O hardware infrastructure; 1.1.2 I/O connectivity features); 1.2 FICON Express; 1.3 zHyperLink Express; 1.4 Open Systems Adapter-Express; 1.5 HiperSockets; 1.6 Parallel Sysplex and coupling links; 1.7 Shared Memory Communications; 1.8 I/O feature support; 1.9 Special-purpose feature support (1.9.1 Crypto Express features; 1.9.2 Flash Express feature; 1.9.3 zEDC Express feature).

Chapter 2. Channel subsystem overview: 2.1 CSS description (2.1.1 CSS elements).

InfiniBand Technology Overview

Dror Goldenberg, Mellanox Technologies. © 2008 Storage Networking Industry Association. All Rights Reserved.

SNIA Legal Notice: The material contained in this tutorial is copyrighted by the SNIA. Member companies and individuals may use this material in presentations and literature under the following conditions: any slide or slides used must be reproduced without modification, and the SNIA must be acknowledged as source of any material used in the body of any document containing material from these presentations. This presentation is a project of the SNIA Education Committee.

Abstract: The InfiniBand architecture brings fabric consolidation to the data center. Storage networking can concurrently run with clustering, communication and management fabrics over the same infrastructure, preserving the behavior of multiple fabrics. The tutorial provides an overview of the InfiniBand architecture including discussion of High Speed – Low Latency, Channel I/O, QoS scheduling, partitioning, high availability and protocol offload. InfiniBand based storage protocols, iSER (iSCSI RDMA Protocol), NFS over RDMA and SCSI RDMA Protocol (SRP), are introduced and compared with alternative storage protocols, such as iSCSI and FCP. The tutorial further enumerates value-add features that InfiniBand brings to clustered storage, such as atomic operations and end-to-end data integrity.

Learning Objectives: Understand the InfiniBand architecture and feature set. Understand the benefits of InfiniBand for networked storage. Understand the standard InfiniBand storage protocols.

Agenda: Motivation and General Overview; Protocol Stack Layers; Storage Protocols over InfiniBand; Benefits.

IBM System Z9 109 Technical Introduction

Hardware description. Software support. Key functions.

Bill Ogden, Jose Fadel, Bill White. ibm.com/redbooks. International Technical Support Organization, July 2005, SG24-6669-00.

First Edition (July 2005). This edition applies to the initial announcement of the IBM System z9 109. © Copyright International Business Machines Corporation 2005. All rights reserved. Note to U.S. Government Users Restricted Rights: Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Contents: Notices; Trademarks; Preface (The authors; Become a published author; Comments welcome).

Chapter 1. Introduction: 1.1 Evolution; 1.2 z9-109 server highlights; 1.3 zSeries comparisons; 1.4 z9-109 server models and processor units; 1.5 Upgrades; 1.6 Considerations.

Chapter 2. Hardware overview: 2.1 System frames; 2.2 Books; 2.3 MCM; 2.4 Processor units (2.4.1 PU characterizations); 2.5 Memory; 2.6 I/O interfaces; 2.7 I/O cages and features (2.7.1 Physical I/O connections; 2.7.2 Coupling connections; 2.7.3 Cryptographic functions); 2.8 Time functions (2.8.1 Sysplex Timer; 2.8.2 Server Time Protocol (STP)); 2.9 Hardware Storage Area; 2.10 System control; 2.11 HMC and SE.

Effective Data Backup System Using Storage Area Network Solution

Ume, L.E., Department of Computer Science, Ebonyi State University, Abakaliki, Ebonyi State, Nigeria. Correspondence e-mail: [email protected]

Abstract: One of the most crucial benefits of the computer system is its ability to manage data sent to it for processing. An aspect of data management that all other aspects hinge upon is data storage and retrieval. Data loss has rendered many firms bankrupt. The primary cause of data loss is the lack or non-existence of data backup. Storage Area Network Solution (SANS) is internet-based software which will collect clients' data and host them in several locations to forestall data loss in case of disaster in one location. The researcher used Adobe Dreamweaver (CSC3) embedded with PHP incorporated with MySQL database technology to develop the application. The objectives of the research were realized.

Keywords: Backup, Storage area network, Data, Effective and Data loss

1.0 Introduction

Storage Area Network (SAN) is a high-speed sub-network of shared storage devices. A storage device is a machine that contains nothing but a disk or disks for storing data. A SAN’s architecture works in a way that makes all storage devices available to all servers on a LAN or WAN. As more storage devices are added to a SAN, they too will be accessible from any [...] (NAS) systems. SAN evolved from the concept of taking storage devices, and therefore storage traffic, off the LAN and creating a separate back-end network designed specifically for data. This is in contrast to the use of a traditional LAN for providing a connection for server-storage, a strategy that limits overall network bandwidth.

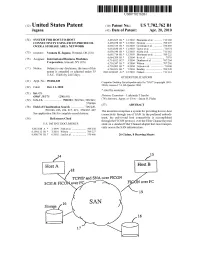

TCP/IP and SNA Over FICON SCSI & FICON Over FC FICON Over FC 19

US007702762B1

(12) United States Patent: Jagana
(10) Patent No.: US 7,702,762 B1
(45) Date of Patent: Apr. 20, 2010
(54) SYSTEM FOR HOST-TO-HOST CONNECTIVITY USING FICON PROTOCOL OVER A STORAGE AREA NETWORK
(75) Inventor: Venkata R. Jagana, Portland, OR (US)
(*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 2415 days.
(21) Appl. No.: 09/686,049
(22) Filed: Oct. 11, 2000
(51) Int. Cl. G06F 5/73 (2006.01)
(74) Attorney, Agent, or Firm: Jason O. Piche
(57) ABSTRACT

Storage Area Network (SAN) Security and Performance

MASTER THESIS

Evaluation of Storage Area Network (SAN) Security and Performance. Master Thesis in Computer Network Engineering, November 2013. Author: Siavash Hajirostam. Supervisor: Tony Larsson. Examiner: Tony Larsson. School of Information Science, Computer and Electrical Engineering, Halmstad University, PO Box 823, SE-301 18 HALMSTAD, Sweden. © Copyright Siavash Hajirostam, 2013. All rights reserved. Master thesis report IDE 1325.

Preface

I would like to express my appreciation to my supervisor, Professor Tony Larsson, for the many useful discussions, comments and suggestions on this thesis. My thanks also go to the staff of Halmstad University for giving me the opportunity to study in the computer network engineering program. Finally, my special thanks to my family for their encouragement and support during my studies. Siavash Hajirostam, Halmstad, November 2013

Abstract

Due to the growing number of Information Technology (IT) users all around the world, the amount of data that needs to be stored is increasing day by day. Single attached disks and old storage technologies cannot manage the storage of these amounts of data. Storage Area Network (SAN) is a distributed storage technology that manages the data from several nodes in a centralized and secure place. This thesis investigates how SAN works, and the file system and protocols that are used in the implementation of SAN. The thesis also investigates other storage technologies such as Network Attached Storage (NAS) and Direct Attached Storage (DAS) to figure out the advantages and disadvantages of SAN. The main focus of the thesis project is on identifying the security vulnerabilities in SAN such as possible attacks in different SAN protocols.