The Road Towards a Cloud-Based High-Performance Solution for Genomic Data Analysis

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Role of NLRs in the Regulation of Type I Interferon Signaling, Host Defense and Tolerance to Inflammation

International Journal of Molecular Sciences Review Role of NLRs in the Regulation of Type I Interferon Signaling, Host Defense and Tolerance to Inflammation Ioannis Kienes 1, Tanja Weidl 1, Nora Mirza 1, Mathias Chamaillard 2 and Thomas A. Kufer 1,* 1 Department of Immunology, Institute for Nutritional Medicine, University of Hohenheim, 70599 Stuttgart, Germany; [email protected] (I.K.); [email protected] (T.W.); [email protected] (N.M.) 2 University of Lille, Inserm, U1003, F-59000 Lille, France; [email protected] * Correspondence: [email protected] Abstract: Type I interferon signaling contributes to the development of innate and adaptive immune responses to either viruses, fungi, or bacteria. However, amplitude and timing of the interferon response is of utmost importance for preventing an underwhelming outcome, or tissue damage. While several pathogens evolved strategies for disturbing the quality of interferon signaling, there is growing evidence that this pathway can be regulated by several members of the Nod-like receptor (NLR) family, although the precise mechanism for most of these remains elusive. NLRs consist of a family of about 20 proteins in mammals, which are capable of sensing microbial products as well as endogenous signals related to tissue injury. Here we provide an overview of our current understanding of the function of those NLRs in type I interferon responses with a focus on viral infections. We discuss how NLR-mediated type I interferon regulation can influence the development of auto-immunity and the immune response to infection. Keywords: NOD-like receptors; Interferons; innate immunity; immune regulation; type I interferon; Citation: Kienes, I.; Weidl, T.; Mirza, N.; Chamaillard, M.; Kufer, T.A. -

Interoperability in Toxicology: Connecting Chemical, Biological, and Complex Disease Data

INTEROPERABILITY IN TOXICOLOGY: CONNECTING CHEMICAL, BIOLOGICAL, AND COMPLEX DISEASE DATA Sean Mackey Watford A dissertation submitted to the faculty at the University of North Carolina at Chapel Hill in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Gillings School of Global Public Health (Environmental Sciences and Engineering). Chapel Hill 2019 Approved by: Rebecca Fry Matt Martin Avram Gold David Reif Ivan Rusyn © 2019 Sean Mackey Watford ALL RIGHTS RESERVED ii ABSTRACT Sean Mackey Watford: Interoperability in Toxicology: Connecting Chemical, Biological, and Complex Disease Data (Under the direction of Rebecca Fry) The current regulatory framework in toxicology is expanding beyond traditional animal toxicity testing to include new approach methodologies (NAMs) like computational models built using rapidly generated dose-response information like US Environmental Protection Agency’s Toxicity Forecaster (ToxCast) and the interagency collaborative Tox21 initiative. These programs have provided new opportunities for research but also introduced challenges in application of this information to current regulatory needs. One such challenge is linking in vitro chemical bioactivity to adverse outcomes like cancer or other complex diseases. To utilize NAMs in prediction of complex disease, information from traditional and new sources must be interoperable for easy integration. The work presented here describes the development of a bioinformatic tool, a database of traditional toxicity information with improved interoperability, and efforts to use these new tools together to inform prediction of cancer and complex disease. First, a bioinformatic tool was developed to provide a ranked list of Medical Subject Heading (MeSH) to gene associations based on literature support, enabling connection of complex diseases to genes potentially involved. -

NOD-Like Receptors in the Eye: Uncovering Its Role in Diabetic Retinopathy

International Journal of Molecular Sciences Review NOD-like Receptors in the Eye: Uncovering Its Role in Diabetic Retinopathy Rayne R. Lim 1,2,3, Margaret E. Wieser 1, Rama R. Ganga 4, Veluchamy A. Barathi 5, Rajamani Lakshminarayanan 5 , Rajiv R. Mohan 1,2,3,6, Dean P. Hainsworth 6 and Shyam S. Chaurasia 1,2,3,* 1 Ocular Immunology and Angiogenesis Lab, University of Missouri, Columbia, MO 652011, USA; [email protected] (R.R.L.); [email protected] (M.E.W.); [email protected] (R.R.M.) 2 Department of Biomedical Sciences, University of Missouri, Columbia, MO 652011, USA 3 Ophthalmology, Harry S. Truman Memorial Veterans’ Hospital, Columbia, MO 652011, USA 4 Surgery, University of Missouri, Columbia, MO 652011, USA; [email protected] 5 Singapore Eye Research Institute, Singapore 169856, Singapore; [email protected] (V.A.B.); [email protected] (R.L.) 6 Mason Eye Institute, School of Medicine, University of Missouri, Columbia, MO 652011, USA; [email protected] * Correspondence: [email protected]; Tel.: +1-573-882-3207 Received: 9 December 2019; Accepted: 27 January 2020; Published: 30 January 2020 Abstract: Diabetic retinopathy (DR) is an ocular complication of diabetes mellitus (DM). International Diabetic Federations (IDF) estimates up to 629 million people with DM by the year 2045 worldwide. Nearly 50% of DM patients will show evidence of diabetic-related eye problems. Therapeutic interventions for DR are limited and mostly involve surgical intervention at the late-stages of the disease. The lack of early-stage diagnostic tools and therapies, especially in DR, demands a better understanding of the biological processes involved in the etiology of disease progression. -

Cell Adhesion and Specificity

RESEARCH ARTICLE Molecular basis of sidekick-mediated cell-cell adhesion and specificity Kerry M Goodman1†, Masahito Yamagata2,3†, Xiangshu Jin1,4‡, Seetha Mannepalli1, Phinikoula S Katsamba4,5, Göran Ahlsén4,5, Alina P Sergeeva4,5, Barry Honig1,4,5,6,7*, Joshua R Sanes2,3*, Lawrence Shapiro1,5,7* 1Department of Biochemistry and Molecular Biophysics, Columbia University, New York, United States; 2Department of Molecular and Cellular Biology, Harvard University, Cambridge, United States; 3Center for Brain Science, Harvard University, Cambridge, United States; 4Howard Hughes Medical Institute, Columbia University, New York, United States; 5Department of Systems Biology, Columbia University, New York, United States; 6Department of Medicine, Columbia University, New York, United States; 7Zuckerman Mind Brain and Behavior Institute, Columbia University, New York, United States Abstract Sidekick (Sdk) 1 and 2 are related immunoglobulin superfamily cell adhesion proteins required for appropriate synaptic connections between specific subtypes of retinal neurons. Sdks mediate cell-cell adhesion with homophilic specificity that underlies their neuronal targeting function. Here we report crystal structures of Sdk1 and Sdk2 ectodomain regions, revealing similar homodimers mediated by the four N-terminal immunoglobulin domains (Ig1–4), arranged in a horseshoe conformation. These Ig1–4 horseshoes interact in a novel back-to-back orientation in both homodimers through Ig1:Ig2, Ig1:Ig1 and Ig3:Ig4 interactions. Structure-guided mutagenesis results show that this canonical dimer is required for both Sdk-mediated cell aggregation (via trans interactions) and Sdk clustering in isolated cells (via cis interactions). *For correspondence: bh6@cumc.columbia.edu (BH); sanesj@mcb.harvard.edu (JRS); shapiro@convex.hhmi.columbia.edu (LS) †These authors contributed equally to this work Present address: ‡Department of Chemistry, Michigan State -

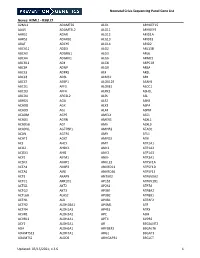

Supplementary Table 1: Adhesion Genes Data Set

Supplementary Table 1: Adhesion genes data set

| PROBE | Entrez Gene ID | Celera Gene ID | Gene_Symbol | Gene_Name |
|---|---|---|---|---|
| 160832 | 1 | hCG201364.3 | A1BG | alpha-1-B glycoprotein |
| 223658 | 1 | hCG201364.3 | A1BG | alpha-1-B glycoprotein |
| 212988 | 102 | hCG40040.3 | ADAM10 | ADAM metallopeptidase domain 10 |
| 133411 | 4185 | hCG28232.2 | ADAM11 | ADAM metallopeptidase domain 11 |
| 110695 | 8038 | hCG40937.4 | ADAM12 | ADAM metallopeptidase domain 12 (meltrin alpha) |
| 195222 | 8038 | hCG40937.4 | ADAM12 | ADAM metallopeptidase domain 12 (meltrin alpha) |
| 165344 | 8751 | hCG20021.3 | ADAM15 | ADAM metallopeptidase domain 15 (metargidin) |
| 189065 | 6868 | null | ADAM17 | ADAM metallopeptidase domain 17 (tumor necrosis factor, alpha, converting enzyme) |
| 108119 | 8728 | hCG15398.4 | ADAM19 | ADAM metallopeptidase domain 19 (meltrin beta) |
| 117763 | 8748 | hCG20675.3 | ADAM20 | ADAM metallopeptidase domain 20 |
| 126448 | 8747 | hCG1785634.2 | ADAM21 | ADAM metallopeptidase domain 21 |
| 208981 | 8747 | hCG1785634.2\|hCG2042897 | ADAM21 | ADAM metallopeptidase domain 21 |
| 180903 | 53616 | hCG17212.4 | ADAM22 | ADAM metallopeptidase domain 22 |
| 177272 | 8745 | hCG1811623.1 | ADAM23 | ADAM metallopeptidase domain 23 |
| 102384 | 10863 | hCG1818505.1 | ADAM28 | ADAM metallopeptidase domain 28 |
| 119968 | 11086 | hCG1786734.2 | ADAM29 | ADAM metallopeptidase domain 29 |
| 205542 | 11085 | hCG1997196.1 | ADAM30 | ADAM metallopeptidase domain 30 |
| 148417 | 80332 | hCG39255.4 | ADAM33 | ADAM metallopeptidase domain 33 |
| 140492 | 8756 | hCG1789002.2 | ADAM7 | ADAM metallopeptidase domain 7 |
| 122603 | 101 | hCG1816947.1 | ADAM8 | ADAM metallopeptidase domain 8 |
| 183965 | 8754 | hCG1996391 | ADAM9 | ADAM metallopeptidase domain 9 (meltrin gamma) |
| 129974 | 27299 | hCG15447.3 | ADAMDEC1 | ADAM-like, |

ATP-Binding and Hydrolysis in Inflammasome Activation

molecules Review ATP-Binding and Hydrolysis in Inflammasome Activation Christina F. Sandall, Bjoern K. Ziehr and Justin A. MacDonald * Department of Biochemistry & Molecular Biology, Cumming School of Medicine, University of Calgary, 3280 Hospital Drive NW, Calgary, AB T2N 4Z6, Canada; [email protected] (C.F.S.); [email protected] (B.K.Z.) * Correspondence: [email protected]; Tel.: +1-403-210-8433 Academic Editor: Massimo Bertinaria Received: 15 September 2020; Accepted: 3 October 2020; Published: 7 October 2020 Abstract: The prototypical model for NOD-like receptor (NLR) inflammasome assembly includes nucleotide-dependent activation of the NLR downstream of pathogen- or danger-associated molecular pattern (PAMP or DAMP) recognition, followed by nucleation of hetero-oligomeric platforms that lie upstream of inflammatory responses associated with innate immunity. As members of the STAND ATPases, the NLRs are generally thought to share a similar model of ATP-dependent activation and effect. However, recent observations have challenged this paradigm to reveal novel and complex biochemical processes to discern NLRs from other STAND proteins. In this review, we highlight past findings that identify the regulatory importance of conserved ATP-binding and hydrolysis motifs within the nucleotide-binding NACHT domain of NLRs and explore recent breakthroughs that generate connections between NLR protein structure and function. Indeed, newly deposited NLR structures for NLRC4 and NLRP3 have provided unique perspectives on the ATP-dependency of inflammasome activation. Novel molecular dynamic simulations of NLRP3 examined the active site of ADP- and ATP-bound models. The findings support distinctions in nucleotide-binding domain topology with occupancy of ATP or ADP that are in turn disseminated on to the global protein structure. -

NICU Gene List Generator.Xlsx

Neonatal Crisis Sequencing Panel Gene List Genes: A2ML1 - B3GLCT A2ML1 ADAMTS9 ALG1 ARHGEF15 AAAS ADAMTSL2 ALG11 ARHGEF9 AARS1 ADAR ALG12 ARID1A AARS2 ADARB1 ALG13 ARID1B ABAT ADCY6 ALG14 ARID2 ABCA12 ADD3 ALG2 ARL13B ABCA3 ADGRG1 ALG3 ARL6 ABCA4 ADGRV1 ALG6 ARMC9 ABCB11 ADK ALG8 ARPC1B ABCB4 ADNP ALG9 ARSA ABCC6 ADPRS ALK ARSL ABCC8 ADSL ALMS1 ARX ABCC9 AEBP1 ALOX12B ASAH1 ABCD1 AFF3 ALOXE3 ASCC1 ABCD3 AFF4 ALPK3 ASH1L ABCD4 AFG3L2 ALPL ASL ABHD5 AGA ALS2 ASNS ACAD8 AGK ALX3 ASPA ACAD9 AGL ALX4 ASPM ACADM AGPS AMELX ASS1 ACADS AGRN AMER1 ASXL1 ACADSB AGT AMH ASXL3 ACADVL AGTPBP1 AMHR2 ATAD1 ACAN AGTR1 AMN ATL1 ACAT1 AGXT AMPD2 ATM ACE AHCY AMT ATP1A1 ACO2 AHDC1 ANK1 ATP1A2 ACOX1 AHI1 ANK2 ATP1A3 ACP5 AIFM1 ANKH ATP2A1 ACSF3 AIMP1 ANKLE2 ATP5F1A ACTA1 AIMP2 ANKRD11 ATP5F1D ACTA2 AIRE ANKRD26 ATP5F1E ACTB AKAP9 ANTXR2 ATP6V0A2 ACTC1 AKR1D1 AP1S2 ATP6V1B1 ACTG1 AKT2 AP2S1 ATP7A ACTG2 AKT3 AP3B1 ATP8A2 ACTL6B ALAS2 AP3B2 ATP8B1 ACTN1 ALB AP4B1 ATPAF2 ACTN2 ALDH18A1 AP4M1 ATR ACTN4 ALDH1A3 AP4S1 ATRX ACVR1 ALDH3A2 APC AUH ACVRL1 ALDH4A1 APTX AVPR2 ACY1 ALDH5A1 AR B3GALNT2 ADA ALDH6A1 ARFGEF2 B3GALT6 ADAMTS13 ALDH7A1 ARG1 B3GAT3 ADAMTS2 ALDOB ARHGAP31 B3GLCT Updated: 03/15/2021; v.3.6 1 Neonatal Crisis Sequencing Panel Gene List Genes: B4GALT1 - COL11A2 B4GALT1 C1QBP CD3G CHKB B4GALT7 C3 CD40LG CHMP1A B4GAT1 CA2 CD59 CHRNA1 B9D1 CA5A CD70 CHRNB1 B9D2 CACNA1A CD96 CHRND BAAT CACNA1C CDAN1 CHRNE BBIP1 CACNA1D CDC42 CHRNG BBS1 CACNA1E CDH1 CHST14 BBS10 CACNA1F CDH2 CHST3 BBS12 CACNA1G CDK10 CHUK BBS2 CACNA2D2 CDK13 CILK1 BBS4 CACNB2 CDK5RAP2 -

NLRP2 and FAF1 Deficiency Blocks Early Embryogenesis in the Mouse

REPRODUCTION RESEARCH NLRP2 and FAF1 deficiency blocks early embryogenesis in the mouse Hui Peng1,*, Haijun Liu2,*, Fang Liu1, Yuyun Gao1, Jing Chen1, Jianchao Huo1, Jinglin Han1, Tianfang Xiao1 and Wenchang Zhang1 1College of Animal Science, Fujian Agriculture and Forestry University, Fujian, Fuzhou, People’s Republic of China and 2Tianjin Institute of Animal Science and Veterinary Medicine, Tianjin, People’s Republic of China Correspondence should be addressed to W Zhang; Email: [email protected] *(H Peng and H Liu contributed equally to this work) Abstract Nlrp2 is a maternal effect gene specifically expressed by mouse ovaries; deletion of this gene from zygotes is known to result in early embryonic arrest. In the present study, we identified FAF1 protein as a specific binding partner of the NLRP2 protein in both mouse oocytes and preimplantation embryos. In addition to early embryos, both Faf1 mRNA and protein were detected in multiple tissues. NLRP2 and FAF1 proteins were co-localized to both the cytoplasm and nucleus during the development of oocytes and preimplantation embryos. Co-immunoprecipitation assays were used to confirm the specific interaction between NLRP2 and FAF1 proteins. Knockdown of the Nlrp2 or Faf1 gene in zygotes interfered with the formation of a NLRP2–FAF1 complex and led to developmental arrest during early embryogenesis. We therefore conclude that NLRP2 interacts with FAF1 under normal physiological conditions and that this interaction is probably essential for the successful development of cleavage-stage mouse embryos. Our data therefore indicated a potential role for NLRP2 in regulating early embryo development in the mouse. Reproduction (2017) 154 245–251 Introduction (Peng et al. -

Dock3 Stimulates Axonal Outgrowth Via GSK-3β-Mediated Microtubule

264 • The Journal of Neuroscience, January 4, 2012 • 32(1):264–274 Development/Plasticity/Repair Dock3 Stimulates Axonal Outgrowth via GSK-3β-Mediated Microtubule Assembly Kazuhiko Namekata, Chikako Harada, Xiaoli Guo, Atsuko Kimura, Daiji Kittaka, Hayaki Watanabe, and Takayuki Harada Visual Research Project, Tokyo Metropolitan Institute of Medical Science, Tokyo 156-8506, Japan Dock3, a new member of the guanine nucleotide exchange factors, causes cellular morphological changes by activating the small GTPase Rac1. Overexpression of Dock3 in neural cells promotes axonal outgrowth downstream of brain-derived neurotrophic factor (BDNF) signaling. We previously showed that Dock3 forms a complex with Fyn and WASP (Wiskott–Aldrich syndrome protein) family verprolin-homologous (WAVE) proteins at the plasma membrane, and subsequent Rac1 activation promotes actin polymerization. Here we show that Dock3 binds to and inactivates glycogen synthase kinase-3β (GSK-3β) at the plasma membrane, thereby increasing the nonphosphorylated active form of collapsin response mediator protein-2 (CRMP-2), which promotes axon branching and microtubule assembly. Exogenously applied BDNF induced the phosphorylation of GSK-3β and dephosphorylation of CRMP-2 in hippocampal neurons. Moreover, increased phosphorylation of GSK-3β was detected in the regenerating axons of transgenic mice overexpressing Dock3 after optic nerve injury. These results suggest that Dock3 plays important roles downstream of BDNF signaling in the CNS, where it regulates cell polarity and promotes axonal outgrowth by stimulating dual pathways: actin polymerization and microtubule assembly.
Introduction The Rho-GTPases, including Rac1, Cdc42, and RhoA, are best known for their roles in regulating the actin cytoskeleton and are implicated in a broad spectrum of biological functions, such as … We recently detected a common active center of Dock1~4 within the DHR-2 domain and reported that the DHR-1 domain is necessary for the direct binding between Dock1~4 and WAVE1~3 (Namekata et al., 2010). -

Identification of Potential Key Genes and Pathway Linked with Sporadic Creutzfeldt-Jakob Disease Based on Integrated Bioinformatics Analyses

medRxiv preprint doi: https://doi.org/10.1101/2020.12.21.20248688; this version posted December 24, 2020. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted medRxiv a license to display the preprint in perpetuity. All rights reserved. No reuse allowed without permission. Identification of potential key genes and pathway linked with sporadic Creutzfeldt-Jakob disease based on integrated bioinformatics analyses Basavaraj Vastrad1, Chanabasayya Vastrad*2 , Iranna Kotturshetti 1. Department of Biochemistry, Basaveshwar College of Pharmacy, Gadag, Karnataka 582103, India. 2. Biostatistics and Bioinformatics, Chanabasava Nilaya, Bharthinagar, Dharwad 580001, Karnataka, India. 3. Department of Ayurveda, Rajiv Gandhi Education Society's Ayurvedic Medical College, Ron, Karnataka 562209, India. * Chanabasayya Vastrad [email protected] Ph: +919480073398 Chanabasava Nilaya, Bharthinagar, Dharwad 580001, Karnataka, India NOTE: This preprint reports new research that has not been certified by peer review and should not be used to guide clinical practice. Abstract Sporadic Creutzfeldt-Jakob disease (sCJD) is a neurodegenerative disease, also called prion disease, linked with poor prognosis. The aim of the current study was to illuminate the underlying molecular mechanisms of sCJD. The mRNA microarray dataset GSE124571 was downloaded from the Gene Expression Omnibus database. Differentially expressed genes (DEGs) were screened. -

Greg's Awesome Thesis

Analysis of alignment error and sitewise constraint in mammalian comparative genomics Gregory Jordan European Bioinformatics Institute University of Cambridge A dissertation submitted for the degree of Doctor of Philosophy November 30, 2011 To my parents, who kept us thinking and playing This dissertation is the result of my own work and includes nothing which is the outcome of work done in collaboration except where specifically indicated in the text and acknowledgements. This dissertation is not substantially the same as any I have submitted for a degree, diploma or other qualification at any other university, and no part has already been, or is currently being submitted for any degree, diploma or other qualification. This dissertation does not exceed the specified length limit of 60,000 words as defined by the Biology Degree Committee. November 30, 2011 Gregory Jordan ii Analysis of alignment error and sitewise constraint in mammalian comparative genomics Summary Gregory Jordan November 30, 2011 Darwin College Insight into the evolution of protein-coding genes can be gained from the use of phylogenetic codon models. Recently sequenced mammalian genomes and powerful analysis methods developed over the past decade provide the potential to globally measure the impact of natural selection on protein sequences at a fine scale. The detection of positive selection in particular is of great interest, with relevance to the study of host-parasite conflicts, immune system evolution and adaptive differences between species. This thesis examines the performance of methods for detecting positive selection first with a series of simulation experiments, and then with two empirical studies in mammals and primates. -

Lfp Cv May 2021 1

Curriculum Vitae Luis F. Parada, Ph.D. [email protected] 1275 York Avenue, Box 558 New York, NY 10065 T 646-888-3781 www.mskcc.org Education & positions held 1979 B.S., Molecular Biology (with Honors), University of Wisconsin-Madison, Wisconsin 1985 Ph.D., Biology, Massachusetts Institute of Technology, Cambridge, Massachusetts 1985 - 1987 Postdoctoral Fellow, Unité de Génetique Cellulaire, Pasteur Institute, Paris, France 1988 - 1994 Head, Molecular Embryology Group & Molecular Embryology Section (with tenure), Mammalian Genetics Laboratory, ABL-Basic Research Program, NCI-Frederick Cancer Research and Development Center, Frederick, Maryland 1994 - 2006 Director, Center for Developmental Biology and Professor of Cell Biology University of Texas Southwestern Medical Center, Dallas, TX 1995 - 2015 Diana and Richard C. Strauss Distinguished Chair in Developmental Biology 1997 - 2015 Director, Kent Waldrep Center for Basic Research on Nerve Growth and Regeneration 1998 - 2015 Southwestern Ball Distinguished Chair in Basic Neuroscience Research 2003 - American Cancer Society Research Professor 2006 - 2015 Chairman, Department of Developmental Biology, University of Texas Southwestern Medical School 2015- Director, Brain Tumor Center & Member Cancer Biology and Genetics Program, SKI & MSKCC 2015- Albert C. Foster Chair, SKI & MSKCC 2015- Attending Neuroscientist, Departments of Neurology and Neurosurgery, MSKCC Honors 2018 EACR Keynote Lecture. The 4th Brain Tumours 2018: From Biology to Therapy Conference. Warsaw, Poland. 2018 Keynote Lecture CRUK Brain Tumour Conference 2018. London 2016 - 2023 NCI Outstanding Investigator Award (R35) 2015 Distinguished Lectureship in Cancer Biology, MD Anderson 2014 The Maestro Award – Dallas, TX 2014 The Herman Vanden Berghe Lectures – University of Leuven, Belgium 2013 Blaffer Lecture – M.D.