Fast Internet-Wide Scanning: a New Security Perspective

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


SSL/TLS Implementation CIO-IT Security-14-69

IT Security Procedural Guide: SSL/TLS Implementation, CIO-IT Security-14-69, Revision 6, April 6, 2021. Office of the Chief Information Security Officer. DocuSign Envelope ID: BE043513-5C38-4412-A2D5-93679CF7A69A

VERSION HISTORY/CHANGE RECORD

| Change Number | Person Posting Change | Change | Reason for Change | Page Number of Change |
|---|---|---|---|---|
| Initial Version – December 24, 2014 | | | | |
| N/A | ISE | New guide created | | |
| Revision 1 – March 15, 2016 | | | | |
| 1 | Salamon | Administrative updates to align/reference to the current version of the GSA IT Security Policy and to CIO-IT Security-09-43, IT Security Procedural Guide: Key Management | Clarify relationship between this guide and CIO-IT Security-09-43 | 2-4 |
| 2 | Berlas / Salamon | Updated recommendation for obtaining and using certificates | Clarification of requirements | 7 |
| 3 | Salamon | Integrated with OMB M-15-13 and related TLS implementation guidance | New OMB Policy | 9 |
| 4 | Berlas / Salamon | Updates to clarify TLS protocol recommendations | Clarification of guidance | 11-12 |
| 5 | Berlas / Salamon | Updated based on stakeholder review / input | Stakeholder review / input | Throughout |
| 6 | Klemens / Cozart-Ramos | Formatting, editing, review revisions | Update to current format and style | Throughout |
| Revision 2 – October 11, 2016 | | | | |
| 1 | Berlas / Salamon | Allow use of TLS 1.0 for certain server through June 2018 | Clarification of guidance | Throughout |
| Revision 3 – April 30, 2018 | | | | |
| 1 | Berlas / Salamon | Remove RSA ciphers from approved cipher stack | ROBOT vulnerability affected … | 4-6 |

Using Frankencerts for Automated Adversarial Testing of Certificate

Using Frankencerts for Automated Adversarial Testing of Certificate Validation in SSL/TLS Implementations. Chad Brubaker∗†, Suman Jana†, Baishakhi Ray‡, Sarfraz Khurshid†, Vitaly Shmatikov†. ∗Google, †The University of Texas at Austin, ‡University of California, Davis.

Abstract—Modern network security rests on the Secure Sockets Layer (SSL) and Transport Layer Security (TLS) protocols. Distributed systems, mobile and desktop applications, embedded devices, and all of secure Web rely on SSL/TLS for protection against network attacks. This protection critically depends on whether SSL/TLS clients correctly validate X.509 certificates presented by servers during the SSL/TLS handshake protocol. We design, implement, and apply the first methodology for large-scale testing of certificate validation logic in SSL/TLS implementations. Our first ingredient is “frankencerts,” synthetic certificates that are randomly mutated from parts of real certificates and thus include unusual combinations of extensions and constraints. Our second ingredient is differential testing: if …

… many open-source implementations of SSL/TLS are available for developers who need to incorporate SSL/TLS into their software: OpenSSL, NSS, GnuTLS, CyaSSL, PolarSSL, MatrixSSL, cryptlib, and several others. Several Web browsers include their own, proprietary implementations. In this paper, we focus on server authentication, which is the only protection against man-in-the-middle and other server impersonation attacks, and thus essential for HTTPS and virtually any other application of SSL/TLS. Server authentication in SSL/TLS depends entirely on a single step in the handshake protocol. As part of its “Server Hello” message, the server presents an X.509 certificate with its public key.

Hacker Public Radio

- hpr0001 :: Introduction to HPR. Aired on 2007-12-31 and hosted by StankDawg. StankDawg and Enigma talk about what HPR is and how someone can contribute.
- hpr0002 :: Customization the Lost Reason. Aired on 2008-01-01 and hosted by deepgeek. deepgeek talks about Customization being the lost reason in switching from windows to linux. Customization docdroppers article.
- hpr0003 :: Lost Haycon Audio. Aired on 2008-01-02 and hosted by Morgellon. Morgellon and others traipse around in the woods geocaching at midnight.
- hpr0004 :: Firefox Profiles. Aired on 2008-01-03 and hosted by Peter. Peter explains how to move firefox profiles from machine to machine.
- hpr0005 :: Database 101 Part 1. Aired on 2008-01-06 and hosted by StankDawg as part of the Database 101 series. 1st part of the Database 101 series with Stankdawg.
- hpr0006 :: Part 15 Broadcasting. Aired on 2008-01-08 and hosted by dosman. dosman and zach from the packetsniffers talk about Part 15 Broadcasting. Part 15 broadcasting resources: SSTRAN AMT3000 part 15 transmitter.
- hpr0007 :: Orwell Rolled over in his grave. Aired on 2008-01-09 and hosted by deepgeek. deepgeek reviews a film.
- hpr0009 :: This old Hack 4. Aired on 2008-01-10 and hosted by fawkesfyre as part of the This Old Hack series. Part 4 of the series this old hack.
- hpr0008 :: Asus EePC. Aired on 2008-01-10 and hosted by Mubix. Mubix and Redanthrax discuss the EEpc.
- hpr0010 :: The Linux Boot Process Part 1. Aired on 2008-01-13 and hosted by Dann as part of the The Linux Boot Process series.
- hpr0011 :: dd_rhelp
- hpr0012 :: Xen

Luigi Documentation Release 2.8.13

Luigi Documentation, Release 2.8.13. The Luigi Authors. Apr 29, 2020.

Contents: 1 Background; 2 Visualiser page; 3 Dependency graph example; 4 Philosophy; 5 Who uses Luigi?; 6 External links; 7 Authors; 8 Table of Contents (8.1 Example – Top Artists; 8.2 Building workflows; 8.3 Tasks; 8.4 Parameters; 8.5 Running Luigi; 8.6 Using the Central Scheduler; 8.7 Execution Model; 8.8 Luigi Patterns; 8.9 Configuration; 8.10 Configure logging; 8.11 Design and limitations); 9 API Reference (9.1 luigi package; 9.2 Indices and tables); Python Module Index; Index.

Luigi is a Python (2.7, 3.6, 3.7 tested) package that helps you build complex pipelines of batch jobs. It handles dependency resolution, workflow management, visualization, handling failures, command line integration, and much more. Run pip install luigi to install the latest stable version from PyPI. Documentation for the latest release is hosted on readthedocs. Run pip install luigi[toml] to install Luigi with TOML-based configs support. For the bleeding edge code, pip install git+https://github.com/spotify/luigi.git. Bleeding edge documentation is also available.

CHAPTER 1: Background. The purpose of Luigi is to address all the plumbing typically associated with long-running batch processes. You want to chain many tasks, automate them, and failures will happen.

Not-Quite-So-Broken TLS Lessons in Re-Engineering a Security Protocol Specification and Implementation

Not-quite-so-broken TLS: Lessons in re-engineering a security protocol specification and implementation. David Kaloper Meršinjak, Hannes Mehnert, Peter Sewell, Anil Madhavapeddy. University of Cambridge, Computer Labs. Usenix Security, Washington DC, 12 August 2015.

    int ssl23_get_client_hello(SSL *s) {
        char buf_space[11]; /* Request this many bytes in initial read.
                             * We can detect SSL 3.0/TLS 1.0 Client Hellos
                             * ('type == 3') correctly only when the following
                             * is in a single record, which is not guaranteed by
                             * the protocol specification:
                             * Byte  Content
                             *  0    type            \
                             *  1/2  version          > record header
                             *  3/4  length          /
                             *  5    msg_type        \
                             *  6-8  length           > Client Hello message

Common CVE sources in 2014 (OpenSSL, GnuTLS, SecureTransport, Secure Channel, NSS, JSSE):

| Class | # |
|---|---|
| Memory safety | 15 |
| State-machine errors | 10 |
| Certificate validation | 5 |
| ASN.1 parsing | 3 |

Root causes:
- Error-prone languages
- Lack of separation
- Ambiguous and untestable specification

nqsb approach:
- Choice of language and idioms
- Separation and modular structure
- A precise and testable specification of TLS
- Reuse between specification and implementation

Choice of language and idioms. OCaml: a memory-safe language with expressive static type system. Well contained side-effects. Explicit flows of data. Value-based. Explicit error handling. We leverage it for abstraction and automated resource management.

Formal approaches. Either reason about a simplified model of the protocol; or reason about small parts of OpenSSL. In contrast, we are engineering a deployable implementation.

nqsb-tls. A TLS stack, developed from scratch, with dual goals: executable specification and usable TLS implementation.

Structure: nqsb-TLS ML module layout.

Core. Is purely functional:

    val handle_tls : state -> buffer -> [ `Ok of state * buffer option * buffer option
                                        | `Fail of failure ]

Core. OCaml helps to enforce state-machine invariants.


A Letter to the FCC [PDF]

Before the FEDERAL COMMUNICATIONS COMMISSION, Washington, DC 20554. In the Matter of: Amendment of Part 0, 1, 2, 15 and 18 of the Commission’s Rules regarding Authorization of Radio frequency Equipment (ET Docket No. 15-170); Request for the Allowance of Optional Electronic Labeling for Wireless Devices (RM-11673).

Summary. The rules laid out in ET Docket No. 15-170 should not go into effect as written. They would cause more harm than good and risk a significant overreach of the Commission’s authority. Specifically, the rules would limit the ability to upgrade or replace firmware in commercial, off-the-shelf home or small-business routers. This would damage the compliance, security, reliability and functionality of home and business networks. It would also restrict innovation and research into new networking technologies. We present an alternate proposal that better meets the goals of the FCC, not only ensuring the desired operation of the RF portion of a WiFi router within the mandated parameters, but also assisting in the FCC’s broader goals of increasing consumer choice, fostering competition, protecting infrastructure, and increasing resiliency to communication disruptions. If the Commission does not intend to prohibit the upgrade or replacement of firmware in WiFi devices, the undersigned would welcome a clear statement of that intent.

Introduction. We recommend the FCC pursue an alternative path to ensuring Radio Frequency (RF) compliance from WiFi equipment. We understand there are significant concerns regarding existing users of the WiFi spectrum, and a desire to avoid uncontrolled change. However, we most strenuously advise against prohibiting changes to firmware of devices containing radio components, and furthermore advise against allowing non-updatable devices into the field.

Lesson 12: Remote Administration with a Control Panel (Review)

Linux and Open Source Software. Lesson 12: Remote administration with a control panel. TRƯƠNG XUÂN NAM.

Review:
- The concept of an internet server, and the advantages of a Linux machine when used as an internet server
- LAMP = Linux + Apache + MySQL + PHP
- Installing LAMP on CentOS
- Installing LAMP on Ubuntu
- How a firewall works
- Some practical experience in operating an internet server
- Administering MySQL from the web: phpMyAdmin
- Some additional PHP packages

Contents:
1. Remote administration interfaces (remote control panels): remote administration of an internet server; types of remote administration tools; remote administration through a web interface; user levels with an RCP
2. Some popular RCPs: VestaCP; Webmin; zPanel; other RCPs

Part 1: Remote administration interfaces (remote control panels)

Remote administration of an internet server:
- In practice, most internet servers are located in places "out of arm's reach" of the system administrator
- Bandwidth requirements: an internet server mainly serves data to users who reach it over the internet, so the faster its internet connection, the better
- Stability requirements: an internet server should run as stably as possible, so that customer services are not interrupted or intermittent
- Security requirements: supporting services are needed to guard against sabotage, damage, loss, and so on

Sizzle: a Standards-Based End-To-End Security Architecture for the Embedded Internet

Sizzle: A Standards-based end-to-end Security Architecture for the Embedded Internet. Vipul Gupta, Matthew Millard∗, Stephen Fung∗, Yu Zhu∗, Nils Gura, Hans Eberle, Sheueling Chang Shantz. Sun Microsystems Laboratories, 16 Network Circle, UMPK16 160, Menlo Park, CA 94025. [email protected], [email protected], [email protected], [email protected], {nils.gura, hans.eberle, sheueling.chang}@sun.com

Abstract. This paper introduces Sizzle, the first fully-implemented end-to-end security architecture for highly constrained embedded devices. According to popular perception, public-key cryptography is beyond the capabilities of such devices. We show that elliptic curve cryptography (ECC) not only makes public-key cryptography feasible on these devices, it allows one to create a complete secure web server stack including SSL, HTTP and user application that runs efficiently within very tight resource constraints. Our small footprint HTTPS stack needs less than 4KB of RAM and interoperates with an ECC-enabled version of the Mozilla web browser.

… numbers of even simpler, more constrained devices (sensors, home appliances, personal medical devices) get connected to the Internet. The term “embedded Internet” is often used to refer to the phase in the Internet’s evolution when it is invisibly and tightly woven into our daily lives. Embedded devices with sensing and communication capabilities will enable the application of computing technologies in settings where they are unusual today: habitat monitoring [26], medical emergency response [31], battlefield management and home automation. Many of these applications have security requirements. For example, health information must only be made available to authorized personnel (authentication) and be pro…

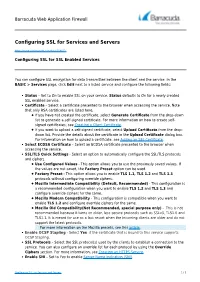

Configuring SSL for Services and Servers

Barracuda Web Application Firewall: Configuring SSL for Services and Servers. https://campus.barracuda.com/doc/4259877/

Configuring SSL for SSL Enabled Services. You can configure SSL encryption for data transmitted between the client and the service. In the BASIC > Services page, click Edit next to a listed service and configure the following fields:

- Status: Set to On to enable SSL on your service. Status defaults to On for a newly created SSL enabled service.
- Certificate: Select a certificate presented to the browser when accessing the service. Note that only RSA certificates are listed here. If you have not created the certificate, select Generate Certificate from the drop-down list to generate a self-signed certificate. For more information on how to create self-signed certificates, see Creating a Client Certificate. If you want to upload a self-signed certificate, select Upload Certificate from the drop-down list. Provide the details about the certificate in the Upload Certificate dialog box. For information on how to upload a certificate, see Adding an SSL Certificate.
- Select ECDSA Certificate: Select an ECDSA certificate presented to the browser when accessing the service.
- SSL/TLS Quick Settings: Select an option to automatically configure the SSL/TLS protocols and ciphers.
  - Use Configured Values: This option allows you to use the previously saved values. If the values are not saved, the Factory Preset option can be used.
  - Factory Preset: This option allows you to enable TLS 1.1, TLS 1.2 and TLS 1.3 protocols without configuring override ciphers.
  - Mozilla Intermediate Compatibility (Default, Recommended): This configuration is a recommended configuration when you want to enable TLS 1.2 and TLS 1.3 and configure override ciphers for the same.


arXiv:1911.09312v2 [cs.CR] 12 Dec 2019

Revisiting and Evaluating Software Side-channel Vulnerabilities and Countermeasures in Cryptographic Applications. Tianwei Zhang (Nanyang Technological University), Jun Jiang (Two Sigma Investments, LP), Yinqian Zhang (The Ohio State University). [email protected] [email protected] [email protected]

Abstract—We systematize software side-channel attacks with a focus on vulnerabilities and countermeasures in the cryptographic implementations. Particularly, we survey past research literature to categorize vulnerable implementations, and identify common strategies to eliminate them. We then evaluate popular libraries and applications, quantitatively measuring and comparing the vulnerability severity, response time and coverage. Based on these characterizations and evaluations, we offer some insights for side-channel researchers, cryptographic software developers and users. We hope our study can inspire the side-channel research community to discover new vulnerabilities, and more importantly, to fortify applications against them.

… three questions: (1) What are the common and distinct features of various vulnerabilities? (2) What are common mitigation strategies? (3) What is the status quo of cryptographic applications regarding side-channel vulnerabilities? Past work only surveyed attack techniques and media [20–31], without offering unified summaries for software vulnerabilities and countermeasures that are more useful. This paper provides a comprehensive characterization of side-channel vulnerabilities and countermeasures, as well as evaluations of cryptographic applications related to side-channel attacks. We present this study in three directions. (1) Systematization of literature: we characterize the vulnerabilities from past work with regard to the implementations; for each vulnerability, we describe the root cause and the technique required to launch a successful …

Certified Malware: Measuring Breaches of Trust in the Windows Code-Signing PKI

Certified Malware: Measuring Breaches of Trust in the Windows Code-Signing PKI. Doowon Kim, Bum Jun Kwon, Tudor Dumitraș. University of Maryland, College Park, MD. [email protected] [email protected] [email protected]

ABSTRACT. Digitally signed malware can bypass system protection mechanisms that install or launch only programs with valid signatures. It can also evade anti-virus programs, which often forego scanning signed binaries. Known from advanced threats such as Stuxnet and Flame, this type of abuse has not been measured systematically in the broader malware landscape. In particular, the methods, effectiveness window, and security implications of code-signing PKI abuse are not well understood. We propose a threat model that highlights three types of weaknesses in the code-signing PKI. We overcome challenges specific to code-signing measurements by introducing techniques for prioritizing the collection of code-signing certificates …

To establish trust in third-party software, we currently rely on the code-signing Public Key Infrastructure (PKI). This infrastructure includes Certification Authorities (CAs) that issue certificates to software publishers, vouching for their identity. Publishers use these certificates to sign the software they release, and users rely on these signatures to decide which software packages to trust (rather than maintaining a list of trusted packages). If adversaries can compromise code signing certificates, this has severe implications for end-host security. Signed malware can bypass system protection mechanisms that install or launch only programs with valid signatures, and it can evade anti-virus programs, which often neglect to scan signed binaries.

Network Devices Configuration Guide for Packetfence Version 6.5.0 Network Devices Configuration Guide by Inverse Inc

Network Devices Configuration Guide for PacketFence version 6.5.0. Network Devices Configuration Guide by Inverse Inc. Version 6.5.0 - Jan 2017. Copyright © 2017 Inverse inc.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License". The fonts used in this guide are licensed under the SIL Open Font License, Version 1.1. This license is available with a FAQ at: http://scripts.sil.org/OFL. Copyright © Łukasz Dziedzic, http://www.latofonts.com, with Reserved Font Name: "Lato". Copyright © Raph Levien, http://levien.com/, with Reserved Font Name: "Inconsolata".

Table of Contents: About this Guide (Other sources of information); Note on Inline enforcement support; List of supported Network Devices; Switch configuration (Assumptions; …)