~DRAFT~ CHAPTER 5: Link Layer

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Networking Packet Broadcast Storms

Lesson Learned Networking Packet Broadcast Storms Primary Interest Groups Balancing Authorities (BAs) Generator Operators (GOPs) Reliability Coordinators (RCs) Transmission Operators (TOPs) Transmission Owners (TOs) that own and operate an Energy Management System (EMS) Problem Statement When a second network cable was connected from a voice over internet protocol (VOIP) phone to a network switch lacking proper settings, a packet broadcast storm prevented network communications from functioning, and supervisory control and data acquisition (SCADA) was lost for several hours. Broadcast storm events have also arisen from substation local area network (LAN) issues. Details A conference room was set up for a training class that needed to accommodate multiple PCs. The bridge protocol data unit (BPDU) packet propagation prevention setting was disabled on a port in the conference room in order to place a network switch off of that port. Upon completion of the training, the network switch was removed; however, the BPDU packet propagation setting was inadvertently not restored. As part of a telephone upgrade project, the traditional phone in this conference room was recently replaced by a VOIP phone. Later, an additional network cable was connected to the output port of this VOIP phone into a secondary network jack within the conference room. When the second network cable was connected from a VOIP phone to a network switch lacking proper settings, a switching loop resulted. Spanning tree protocol is normally used to prevent switching loops from propagating broadcast packets continuously until the network capacity is overwhelmed. A broadcast packet storm from the switching loop prevented network communications from functioning and SCADA was lost for several hours. -

Application of Ethernet Networking Devices Used for Protection and Control Applications in Electric Power Substations

Application of Ethernet Networking Devices Used for Protection and Control Applications in Electric Power Substations Report of Working Group P6 of the Power System Communications and Cybersecurity Committee of the Power and Energy Society of IEEE September 12, 2017 1 IEEE PES Power System Communications and Cybersecurity Committee (PSCCC) Working Group P6, Configuring Ethernet Communications Equipment for Substation Protection and Control Applications, has existed during the course of report development as Working Group H12 of the IEEE PES Power System Relaying Committee (PSRC). The WG designation changed as a result of a recent IEEE PES Technical Committee reorganization. The membership of H12 and P6 at time of approval voting is as follows: Eric A. Udren, Chair Benton Vandiver, Vice Chair Jay Anderson Galina Antonova Alex Apostolov Philip Beaumont Robert Beresh Christoph Brunner Fernando Calero Christopher Chelmecki Thomas Dahlin Bill Dickerson Michael Dood Herbert Falk Didier Giarratano Roman Graf Christopher Huntley Anthony Johnson Marc LaCroix Deepak Maragal Aaron Martin Roger E. Ray Veselin Skendzic Charles Sufana John T. Tengdin 2 IEEE PES PSCCC P6 Report, September 2017 Application of Ethernet Networking Devices Used for Protection and Control Applications in Electric Power Substations Table of Contents 1. Introduction ...................................................................................... 10 2. Ethernet for protection and control .................................................. 10 3. Overview of Ethernet message -

Wireless LAN (WLAN) Switching

TECHNICAL WHITEPAPER Wireless LAN (WLAN) Switching An examination of a long range wireless switching technology to enable large and secure Wi-Fi Deployments TECHNICAL WHITEPAPER Wireless LAN (WLAN) Switching This whitepaper provides a detailed explanation of a new wireless switching technology that will allow for large and secure deployments of WLANS. The explosion of wireless networking on the scene in the past few years has been unprecedented. Many compare the market acceptance of this technology to the advent of the early days of Ethernet. When Ethernet was adopted as a standard, it was quickly embraced by the users of PCs, and the acceptance of wireless LANs has followed a similar path. Comparatively, the adoption of the wireless standard, IEEE 802.11, (also known as Wi-Fi) and use of mobile computing platforms is the basis of the wireless revolution. While this is a very good comparative analysis, in the real sense the adoption rate of wireless LANs has been much higher. The worker of today has morphed into a mobile worker that has grown accustomed to information on the fly, and will demand information inside and outside of the workplace. These factors have converged and are providing the impetus for the hyper acceptance of wireless technologies where by IT professionals are faced with the choice of embracing the technology or having it implemented by their users. Wireless Adoption at Warp Speed The next logical evolution of the technology was to make it ubiquitous to the mobile wireless worker. Intel is helping this technology “cross the chasm” into the mainstream by spending $300 million to market their introduction of the Centrino™ mobile technology, which provides built-in Wi-Fi for the mobile computing platform. -

Wireless Network Bridge Lmbc-650

DIGITAL LIGHTING MANAGEMENT WIRELESS DLM WATTSTOPPER® WIRELESS NETWORK BRIDGE LMBC-650 • Connects any DLM wired devices to a wireless IPv6 Mesh secure self-forming network • Built-in IPv6 Mesh and Bluetooth® low energy technology antenna provides robust signal strength and reliable communication • Two RJ-45 ports for DLM Cat 5e communication and class 2 power • Includes trusted hardware chips for device validation and secure out-of-box wireless AES 128-bit encryption • Tri-color LED indicator displays wireless network health, DLM Cat 5e communication, and ability to identify during commissioning • Plenum rated with mounting hardware for fast installation • In conjunction with an LMBR-650, supports third party integration with BAS through BACnet/IP over IPv6 Wireless Mesh network • Commissioning using LMCS-100 software ® IPv6 MESH Description Operation The LMBC-650 Wireless Network Bridge provides room The wireless network bridge operates using Class 2 power to room network connectivity for rooms with Wattstopper supplied by a DLM local network from one or more DLM room Digital Lighting Management (DLM) devices. The wireless controllers. It connects to the free-topology local network at DLM platform simplifies room to room communication and any convenient location using a standard LMRJ cable. It then installation, and eliminates potential start-up delays caused by securely communicates via encrypted wireless protocol over wiring issues. a mesh network to an LMBR-650 Border Router on the same The DLM local room network must include at least one wired floor of the building. controller. Once the LMBC-650 is connected to LMBR-650 The LMBC-650 monitors the DLM local network and network and then either using LMCS-100 or DLM Dashboard automatically exposes all room devices, settings and software, the system can expose BACnet protocol to revel the calibrations to the LMBR-650 Border Router. -

Gigabit Ethernet Switch User's Guide

Gigabit Ethernet Switch User’s Guide Version 1.0 P/N: ATNSGD8082503 Rev.1 This User's Guide contains important information regarding the installation of your switch. Please read it completely before beginning installation. For future reference, record the serial number of your network switch in the space below: SERIAL number The serial number is located on the bottom of the switch. Amer.com 7259 Bryan Dairy Road, Largo, FL 33777 USA © Amer.com Corp., 1997-2002 All rights reserved. No part of this publication may be reproduced in any form or by any means or used to make any derivative such as translation, transformation, or adaptation without permission from Amer.com, as stipulated by the United States Copyright Act of 1976. Amer.com reserves the right to make changes to this document and the products which it describes without notice. Amer.com shall not be liable for technical or editorial errors or omissions made herein; nor for incidental or consequential damages resulting from the furnishing, performance, or use of this material. Amer.com is a registered trademark of Amer.com. All other trademarks and trade names are properties of their owners. FCC Class A Certification Statement Model SGD8 FCC Class A This device complies with Part 15 of the FCC rules. Operation is subject to the following two conditions: (1)This device may not cause harmful interference, and (2)This device must accept any interference received, including interference that may cause undesired operation. This equipment has been tested and found to comply with the limits for a Class A digital device, pursuant to Part 15 of the FCC Rules. -

Stealth Analysis of Network Topology Using Spanning Tree Protocol

Stealth Analysis of Network Topology using Spanning Tree Protocol Stephen Glackin M.Sc. in Software and Network Security 2015 Computing Department, Letterkenny Institute of Technology, Port Road, Letterkenny, Co. Donegal, Ireland. Stealth Analysis of Network Topology using Spanning Tree Protocol Author: Stephen Glackin Supervised by: John O’ Raw A thesis presented for the award of Master of Science in Software and Network Security. Submitted to the Higher Quality and Qualifications Ireland (QQI) Dearbhú Cáilíochta agus Cáilíochtaí Éireann May 2015 Declaration I hereby certify that the material, which l now submit for assessment on the programmes of study leading to the award of Master of Science in Computing in Software and Network Security, is entirely my own work and has not been taken from the work of others except to the extent that such work has been cited and acknowledged within the text of my own work. No portion of the work contained in this thesis has been submitted in support of an application for another degree or qualification to this or any other institution. Signature of Candidate Date Acknowledgements I would like to thank my wife, kids, family members and extended family members for their support and encouragement, without them this project never would have been completed. Also I would like to offer my greatest appreciation to my supervisor Mr. John O'Raw for all his help and support. His knowledge with regards to everything relating to computing is truly amazing and I am very grateful for the time he has given in assisting me to carry out this project. -

L-240 Owner's Manual

(patent pending) Outdoor Bluetooth/WiFi Amplifier Owner’s Manual Ambisonic Systems LLC 1990 N. McCulloch Blvd. Ste. D 222 Lake Havasu City, AZ 86403 Voice: 928.733.6002 Fax: 928.453.5550 [email protected] www.ambisonicsystems.com Outdoor Bluetooth/WiFi Amplifier Bluetooth Antenna Mounting Bracket L-240 Stereo r INPUT : 100 - 240V AC 50-60Hz 75W OUTPUT : 240W/4ohms 5004938 MODEL: L-240 WiFi Antenna (2) Ambisonic Systems,LLC CONFORMS TO UL STD 1838 CERTIFIED TO CSA STD C22.2 NO.250.7 Ambisonic BT I WiFi o L-Clip LED Indicators R-Clip Power Outputs 120VAC LEFT RIGHT - + + - Speaker Output Terminal Screws Weather Proof Enclosure 120 VAC Input Speaker Outputs Installation Instructions (interior wall, exterior wall, fence or other vertical surface that does not recieve total direct sunlight. THE “ WITH A MINIMUM OF THREE (3) FEET CLEARENCE AROUND THE UNIT FOR PROPER VENTILATION. Place a screw at the proper location to except the keyhole slot in the mounting bracket. switch is in the OFF position. 4) Push the bare wires under the terminal screws on the terminal blocks and tighten the screws securely. 5) Connect the speaker outputs from the “ ”, to the speakers. 6) Plug “ Close and lock the door to ensure your safety and to create a weather tight seal. Ambisonic Systems L-240 All-Weather Outdoor WiFi Amplifier / User & Setup Guide Index Page 2: Introduction Pages 3-7: Quick Start (No Network Connection) Page 7: Network Settings Pages 7-14: Connecting the Ambisonic L-240 to the Network 1 Ambisonic Systems L-240 All-Weather Outdoor WiFi Amplifier / User & Setup Guide Introduction AmbiSonic’s L-240 All-Weather Outdoor WiFi Amplifier stands apart from all others. -

Role of a Small Switch in a Network-Based Data Acquisition System

Role of a Small Switch in a Network- Based Data Acquisition System Item Type text; Proceedings Authors Hildin, John Publisher International Foundation for Telemetering Journal International Telemetering Conference Proceedings Rights Copyright © held by the author; distribution rights International Foundation for Telemetering Download date 28/09/2021 12:00:38 Link to Item http://hdl.handle.net/10150/605943 ROLE OF A SMALL SWITCH IN A NETWORK-BASED DATA ACQUISITION SYSTEM John Hildin Director of Network Systems Teletronics Technology Corporation Newtown, PA USA ABSTRACT Network switches are an integral part of most network-based data acquisition systems. Switches fall into the category of network infrastructure. They support the interconnection of nodes and the movement of data in the overall network. Unlike endpoints such as data acquisition units, recorders, and display modules, switches do not collect, store or process data. They are a necessary expense required to build the network. The goal of this paper is to show how a small integrated network switch can be used to maximize the value proposition of a given switch port in the network. This can be accomplished by maximizing the bandwidth utilization of individual network segments and minimizing the necessary wiring needed to connect all the network components. KEY WORDS Data Acquisition, Network Switch, Network Infrastructure INTRODUCTION An Ethernet-based network data acquisition system is made up of network nodes (e.g., data acquisition units, recorders, etc.) interconnected by switches. While critical to the operation of the overall network, switches are a necessary expense added to the total cost of the system. One metric sometimes used to characterize the impact of the switch component is the cost-per-port. -

5 Or 8 Port 10/100 Ethernet Desktop Switch DS51072 DS81072

5 or 8 Port 10/100 Ethernet Desktop Switch DS51072 DS81072 *DS51072 shown DE: Bedienungsanleitung - de.startech.com FR: Guide de l'utilisateur - fr.startech.com ES: Guía del usuario - es.startech.com IT: Guida per l'uso - it.startech.com NL: Gebruiksaanwijzing - nl.startech.com PT: Guia do usuário - pt.startech.com For the most up-to-date information, please visit: www.startech.com Manual Revision: 12/18/2014 FCC Compliance Statement This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to part 15 of the FCC Rules. These limits are designed to provide reasonable protection against harmful interference in a residential installation. This equipment generates, uses and can radiate radio frequency energy and, if not installed and used in accordance with the instructions, may cause harmful interference to radio communications. However, there is no guarantee that interference will not occur in a particular installation. If this equipment does cause harmful interference to radio or television reception, which can be determined by turning the equipment off and on, the user is encouraged to try to correct the interference by one or more of the following measures: • Reorient or relocate the receiving antenna. • Increase the separation between the equipment and receiver. • Connect the equipment into an outlet on a circuit different from that to which the receiver is connected. • Consult the dealer or an experienced radio/TV technician for help. Use of Trademarks, Registered Trademarks, and other Protected Names and Symbols This manual may make reference to trademarks, registered trademarks, and other protected names and/or symbols of third-party companies not related in any way to StarTech.com. -

On the Consideration of an UMTS/S-UMTS Based GEO-MSS System Architecture Design

On the Consideration of an UMTS/S-UMTS Based GEO-MSS System Architecture Design Jianjun Wu, Yuxin Cheng, Jiancheng Du, Jian Guo, and Zhenxing Gao State Key Laboratory of Advanced Optical Communication Systems and Networks, EECS, Peking University, Beijing 100871, P.R. China {just,chengyx,dujc,guoj,gaozx}@pku.edu.cn Abstract. To address the special requirements of China’s future public satellite mobile network (PSMN), three system architecture schemes are proposed in this paper basing on different satellite payload processing modeling. Finally, one of them, i.e., the so-called passive part on-board switching based architec- ture, is selected as a proposal for further design and development of China’s PSMN system, which essentially compatible to the 3GPP/UMTS R4 reference model. Keywords: MSS, GEO, UMTS, network architecture. 1 Introduction In order to extend and complement the current PLMN service coverage, to promote the emergency communications ability during disaster rescues, and to protect the rare space resource, that is, 115.5°E & 125.5°E S-band orbits, which China is holding with highest priority till now, the idea of establishing China’s own public satellite mobile network (PSMN) has been proposed repeatly since the 3rd quarter of 2008. Several earlier fundings have been launched respectively by those related national divisions, among which the Ministry of Science and Technology of China (MSTC), is the most progressive one to start the relevant research and development activities. So far, there are several mobile satellite systems which are already in sucessful commercial operation or right now under construction all over the world. -

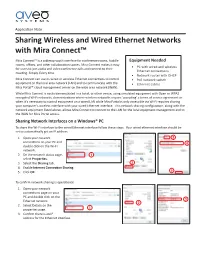

Sharing Wireless and Wired Ethernet Networks with Mira Connect™

TM SYSTEMS Application Note Sharing Wireless and Wired Ethernet Networks with Mira Connect™ Mira Connect™ is a tabletop touch interface for conference rooms, huddle Equipment Needed rooms, offices, and other collaboration spaces. Mira Connect makes it easy • PC with wired and wireless for users to join audio and video conference calls and connect to their Ethernet connections meeting. Simply. Every time. • Network router with DHCP Mira Connect can use its wired or wireless Ethernet connections to control • PoE network switch equipment on the local area network (LAN) and to communicate with the • Ethernet cables Mira Portal™ cloud management server on the wide area network (WAN). While Mira Connect is easily demonstrated in a hotel, or other venue, using simulated equipment with Open or WPA2 encrypted Wi-Fi networks, demonstrations where wireless networks require 'accepting' a terms of service agreement or when it's necessary to control equipment on a wired LAN while Mira Portal is only accessible via Wi-Fi requires sharing your computer's wireless interface with your wired Ethernet interface. This network sharing configuration, along with the network equipment listed above, allows Mira Connect to connect to the LAN for the local equipment management and to the WAN for Mira Portal access. Sharing Network Interfaces on a Windows® PC To share the Wi-Fi interface to the wired Ethernet interface follow these steps. Your wired ethernet interface should be set to automatically get an IP address. 1. Open your network 3 connections on your PC and 4 double click on the Wi-Fi network. 2. On the network status page, 1 select Properties. -

Layer 2 Configuration Guide, Cisco IOS XE Gibraltar 16.10.X (Catalyst 9500 Switches) Americas Headquarters Cisco Systems, Inc

Layer 2 Configuration Guide, Cisco IOS XE Gibraltar 16.10.x (Catalyst 9500 Switches) Americas Headquarters Cisco Systems, Inc. 170 West Tasman Drive San Jose, CA 95134-1706 USA http://www.cisco.com Tel: 408 526-4000 800 553-NETS (6387) Fax: 408 527-0883 © 2018 Cisco Systems, Inc. All rights reserved. CONTENTS CHAPTER 1 Configuring Spanning Tree Protocol 1 Restrictions for STP 1 Information About Spanning Tree Protocol 1 Spanning Tree Protocol 1 Spanning-Tree Topology and BPDUs 2 Bridge ID, Device Priority, and Extended System ID 3 Port Priority Versus Path Cost 4 Spanning-Tree Interface States 4 How a Device or Port Becomes the Root Device or Root Port 7 Spanning Tree and Redundant Connectivity 8 Spanning-Tree Address Management 8 Accelerated Aging to Retain Connectivity 8 Spanning-Tree Modes and Protocols 8 Supported Spanning-Tree Instances 9 Spanning-Tree Interoperability and Backward Compatibility 9 STP and IEEE 802.1Q Trunks 10 Spanning Tree and Device Stacks 10 Default Spanning-Tree Configuration 11 How to Configure Spanning-Tree Features 12 Changing the Spanning-Tree Mode (CLI) 12 Disabling Spanning Tree (CLI) 13 Configuring the Root Device (CLI) 14 Configuring a Secondary Root Device (CLI) 15 Configuring Port Priority (CLI) 16 Configuring Path Cost (CLI) 17 Configuring the Device Priority of a VLAN (CLI) 19 Layer 2 Configuration Guide, Cisco IOS XE Gibraltar 16.10.x (Catalyst 9500 Switches) iii Contents Configuring the Hello Time (CLI) 20 Configuring the Forwarding-Delay Time for a VLAN (CLI) 21 Configuring the Maximum-Aging Time