Art and Science in Visualization, by Victoria Interrante

Recommended publications

Biological Illustration: Kekkon6 Localization and Biological Pacemakers

Biological Illustration: Kekkon6 localization and biological pacemakers A Major Qualifying Project Report Submitted to the Faculty of WORCESTER POLYTECHNIC INSTITUTE In partial fulfillment of the requirements for the Degree of Bachelor of Science In Interdisciplinary Biological Illustration By Daniel Valerio October 25, 2012 APPROVED BY: Jill Rulfs, PhD Biology WPI Major and Academic Advisor Abstract Illustrations have helped mankind understand the world we inhabit since antiquity. Today biological illustration helps us to understand the biological world. This project demonstrates my abilities as an artist and knowledge as a scientist. As biological illustrator, I have done this by illustrating for two projects. One pertains to localization of Kekkon6, a transmembrane protein, in Drosophila melanogaster. The other relates to developing biological pacemakers with the use of stem cell implants. Acknowledgements I first would like to thank Jill Rulfs, my academic advisor and head of the committee of professors supporting my interdisciplinary individually created Biological Illustration major. Thank you for guiding me through my time here at WPI. And for encouraging me to embrace my passion for biology and the arts with the realization of this Biological Illustration major. And thank you for your endless help and support pertaining to this MQP. I would also like to thank Joe Duffy and Glenn Gaudette for extending interest in my MQP proposal and allowing me to work with you. Your support and reassurance in my abilities and direction as an artist and a scientist were fundamental in this project. To Joe Farbrook, thank you for teaching me everything you have as my professor and allowing me to grow as an artist. -

Art and Science: the Importance of Scientific Illustration in Veterinary Medicine

International Journal of Veterinary Sciences and Animal Husbandry 2021; 6(3): 30-33. ISSN: 2456-2912. © 2021 VET. www.veterinarypaper.com. Received: 19-02-2021. Accepted: 21-03-2021. DOI: https://doi.org/10.22271/veterinary.2021.v6.i3a.357

Art and science: The importance of scientific illustration in veterinary medicine. Andreia Garcês, Inno – Serviços Especializados em Veterinária, R. Cândido de Sousa 15, 4710-300 Braga, Portugal.

Abstract: The importance of illustration in veterinary medicine is usually overshadowed by its use in human medicine and forgotten. Nonetheless, it is important to recognize the role of illustration in the development of the veterinarian, as this is also a profession based on observation. Throughout history there are several examples of how illustration has helped to increase and share knowledge in the veterinary sciences. There is no doubt that illustration is an important tool in learning. That makes scientific illustration an important and irreplaceable tool, since it can take scientific concepts, from the simplest to the most complex, and bring them to life in an attractive and simplified way. Keywords: art, science, scientific illustration, veterinary medicine.

Introduction: Biological illustration has many branches, one of which is medical illustration. It is a form of illustration that helps to record and disseminate knowledge regarding medicine (e.g., anatomy, virology) [1, 2]. Usually, medical illustration is associated with human medicine, with the great anatomical illustrations of Da Vinci and Andreas Vesalius coming to mind [3, 4], but illustration also has an important role in veterinary medicine. Illustration may have been used longer in veterinary than in human medicine, but its importance is never referenced [3].

Anatomical Illustration Is Positioned at the Point Where Science Meets

Author: Nina Czegledy. Description of Academic Affiliations: KMDI, University of Toronto; Studio Arts, Concordia University, Montreal; Moholy Nagy University of Art and Design. Women at the threshold of art and medicine. Key words: anatomical art, pioneer women, education, bio-medical tools. In the beginning of the 20th century faith in progress and scientific discovery had a principal influence on scientists and artists. Revolutionary discoveries appeared in the sciences, and in the arts a new awareness of a deep rootedness in nature and its processes became evident (1). As a result a conviction that a scientific spirit forms part of a new synthesis emerged in various disciplines (2), including a renewed interest in and re-evaluation of scientific visualization (3). Scores of scientific discoveries, radical art activities and numerous technological inventions that we take for granted today were drafted in this period. While major scientific discoveries such as the theory of quantum physics and the theory of relativity date from the first decade of the twentieth century, innovation and change were felt across all domains from economics to socio-political structures, including the first wave of feminism (4). Nevertheless it took decades to press forward for equal professional opportunities for women, and even today, a century later, major discrepancies remain in vital professions. Key medical advances originating from Canada included the world’s first mobile transfusion unit developed by Norman Bethune, Wilder Penfield’s surgical treatment of epilepsy in Montreal and, most importantly, the discovery of insulin by Frederick Banting, Charles Best, JB Collip and JJR Macleod at the University of Toronto (5).

By Phillip Thurtle. University of Minnesota Press, Posthumanities

BIOLOGY IN THE GRID: GRAPHIC DESIGN AND THE ENVISIONING OF LIFE by Phillip Thurtle. University of Minnesota Press, Posthumanities Volume 46, Minneapolis, MN, 2018. 272 pp, illus. Trade, paper. ISBN: 978-1517902773; 1517902770. Reviewed by Amy Ione, The Diatrope Institute, Berkeley, CA. Email: ione@diatrope.com. https://doi.org/10.1162/leon_r_01898

As I began Phillip Thurtle's well-researched Biology in the Grid: Graphic Design and the Envisioning of Life, I wondered how his "envisioning of life" would intersect with the abundant evidence that a complex array of grids have served as a foundational element in art, architecture and design production throughout history. A few examples that quickly come to mind include those used to construct perfectly proportioned Egyptian and Aztec temples, Islamic and Buddhist art, Chuck Close's ...

Uncovering these differences is politically important but also requires understanding how grids are ordered. This involves asking questions such as "What are the specific values that grids are intended to support?" Understanding life in the grid also demands the use of imagination. In this way we see how grids can be used to reorder lives to be less oppressive and more creative. Strangely, it is through a study of the most monotone and bureaucratic of terms, "regulation," that we see how closely bound the impulse to control and the desire to imagine coexist through envisioning. (p. 6)

Thurtle, a biologist who specializes in the cultural and conceptual basis of biology, uses a bifurcated research strategy to bring together the value of standardization and the value of envisioning what lies beyond the kind of accepted tropes that standardization reinforces.

The Intersection of Arts and Sciences

The Intersection of Arts and Sciences September 11th, 2012 Written by Elyssa Monzack, Postdoc at NIDCD, FelCom Career Development Subcommittee, with contributions from Pabak Sarkar, Postdoc at NIAAA, FelCom Career Development Subcommittee Felcom’s Career Development Seminar, “The Intersection of Arts and Sciences,” offered an opportunity for NIH Fellows to learn how to blend an interest in the arts with a scientific background from experts who have utilized their scientific skills to produce, render, explain and restore art. The first speaker was Mr. Wyatt Channell, who is a Senior Producer at Science Channel (part of Discovery Communications). Mr. Channell, who has a B.A. in English from the University of Maryland-College Park, began by noting that colleagues with Ph.D.s are scarce in the television industry, where being a compelling storyteller and communicating ideas in the most engaging way possible are the most valued qualities. Mr. Channell says that although he may not understand all the science, he understands enough to determine how to present scientific ideas to a general audience. To ensure factual accuracy, Discovery relies on the outside companies with which they work and contract to produce and ensure accuracy of the content, using vetted experts. Overall, Mr. Channell views his work not as artistry, but rather, the development of a commercial product to be consumed. The artistic component, he said, exists in presenting science and creating a story that is engaging and entertaining as well as informative. It came as a surprise to many in the audience that television networks like Discovery do not create their scientific programs in-house. -

The Association of Medical Illustrators

AMI NEWS, Vol. 56, Issue 4, Winter 2016. Jennifer Fairman, "Z-Ring Stabilization and Constriction Rate Modulation of the ZapA-ZapB-MatP Protein Network".

IN THIS ISSUE: Lifetime Achievement Award; Techniques; 2016 Budget; Artists' Rights; Winning Ways; Up & Coming; 2016 Annual Meeting; and much more.

LIFETIME ACHIEVEMENT AWARD: Marcia Hartsock Receives AMI 2015 Lifetime Achievement Award at the Cleveland Clinic. Presented by Bill Andrews and Gary Schnitz. The purpose of the Lifetime Achievement Award is to acknowledge and honor a medical illustrator who has been a Professional AMI Member for at least 30 continuous years, and whose life, work and other accomplishments have significantly contributed to the profession. The AMI Lifetime Achievement Award is the highest honor awarded by the Association of Medical Illustrators to an individual who has dedicated his or her professional life as a medical illustrator. In doing so, this individual has engaged with fellow illustrators, not only to support the ideals of the profession, but also to insure ...

FROM THE NEWSLETTER TEAM: Happy holidays from the Newsletter team! We have a bounty of new faces and new articles this winter, so a lot to be thankful for. We close out 2015 and celebrate our Lifetime Achievement Award Winner ... and Jeff Day’s Comics Rx peers into the fantastic team at Booster Shot Comics. Wendy Beth Jackelow reviews the passionate and insightful Do No Harm: Stories of Life, Death, and Brain Surgery by Henry Marsh. Committee Chair & Co-Editors: Jodi Slade & Shizuka Aoki. Layout Artist: Jackie Meyer. Editorial Review Board ...

Download Joanne's Résumé

JOANNE HADERER MüLLER, MA, CMI 66 Sargent Street, Melrose, Massachusetts 02176 [email protected] www.haderermuller.com 781.662.0688 DEGREES & CERTIFICATIONS Certified Medical Illustrator—The Board of Certification of Medical Illustrators, 2005–present Master of Arts: Medical & Biological Illustration—The Department of Art as Applied to Medicine, The Johns Hopkins University School of Medicine, Baltimore, Maryland, USA, 2000 Bachelor of Arts: Commercial Art—Millersville University, Millersville, Pennsylvania, USA, 1998—Summa cum laude PROFESSIONAL BACKGROUND positions Founding Partner, Creative Director—Haderer & Müller Biomedical Art, LLC; Melrose, Massachusetts, April 2004–present Project management, client interaction, consultation, and production of visual communication solutions including biomedical illustration, instructional design, and graphic design for clients in the fields of science, medicine, healthcare, and business Chair, Board of Governors—Association of Medical Illustrators; July 2013–present Coordination and direction of approximately 30 committees in their work to advance a 5-year strategic plan improving the educational impact, professional standing, and visibility of this international association; Ongoing business and communications decisions; Board leadership Founding Partner—Haderer & Müller Ilustração Biomédica Lda.; Lisbon, Portugal, September 2000–September 2004 Establishment and operation of the first studio in Portugal to provide biomedical illustration, design, and anaplastology services to clients -

Graduate Program Brochure

The Johns Hopkins University School of Medicine, Medical & Biological Illustration Graduate Program.

The Medical & Biological Illustration (MBI) graduate program provides broad interdisciplinary education and training in medical illustration. This 22-month program meets both the scholarship requirements of the University for a Master of Arts degree and the visual communication needs of today’s health science professionals.

As part of the Department of Art as Applied to Medicine in the Johns Hopkins University School of Medicine, students in the MBI program have easy access to all the facilities of the world renowned Johns Hopkins Medical Institutions. The integral connection between the MBI graduate program and the medical illustration services provided by faculty of the Department allows students to mentor with practicing Certified Medical Illustrators (CMI), to use the most technologically advanced production equipment, and to observe faculty members as active illustrators in the Hopkins community.

Medical illustration training at Johns Hopkins formally began in 1911 under the leadership of Max Brödel with an endowment from Henry Walters.

The Profession: There is a growing need for clear accurate visuals to communicate the latest advancements in science and medicine. Effective medical illustration can teach a novel surgical procedure, explain a newly discovered molecular mechanism, describe how a medical device works, or depict a disease pathway. Through their work, medical illustrators bridge gaps in medical and healthcare communication.

Graduates of the Johns Hopkins Medical and Biological Illustration program have a strong history of high employment rates with some students receiving job offers prior to graduation. The graduates from 2016-2020 had an employment rate of 94% within the first 6 months.

JENNIFER E. FAIRMAN, CMI, FAMI Curriculum Vitae for Academic Promotion the Johns Hopkins University School of Medicine

JENNIFER E. FAIRMAN, CMI, FAMI Curriculum Vitae for Academic Promotion The Johns Hopkins University School of Medicine (Typed Name) Jennifer Elizabeth Fairman (Date of this version) June 10, 2016 ✦ DEMOGRAPHIC AND PERSONAL INFORMATION CURRENT APPOINTMENTS Assistant Professor Founder and Principal Certified Medical Illustrator Certified Medical Illustrator The Johns Hopkins University School of Medicine Fairman Studios, LLC Department of Art as Applied to Medicine PERSONAL DATA 1830 East Monument Street, Suite 7000 108 Woodlawn Road Baltimore, MD 21205 Baltimore, MD 21210 Phone : 410-955-3213 Phone : 781-647-7510 Fax : 410.955.1085 Fax : 240-597-0366 [email protected] [email protected] EDUCATION AND TRAINING :: Undergraduate 1995 Bachelor of Science Biological Science (Pre-medical track) University of Maryland, College Park, MD 1995 Bachelor of Arts Studio Art (German Language, Minor) University of Maryland, College Park, MD :: Graduate 1999 Master of Arts Medical & Biological Illustration Johns Hopkins University School of Medicine Baltimore, MD Masters Thesis: “Prosauropod and Iguanid Jaw Musculature: A Study on the Evolution of Form and Function” Preceptor: David Weishampel, PhD Professor, Functional Anatomy and Evolution Faculty Advisor: Timothy H. Phelps, FAMI Associate Professor, Art as Applied to Medicine 2016 - Master of Professional Studies Business of Art and Design Candidate (anticipated Dec 2017) Maryland Institute College of Art, Baltimore, MD :: Postgraduate 2007-2014 Continuing Professional Studies Maryland Institute College of Art, Baltimore, MD 2010-2011 Leadership Program for Women Faculty Johns Hopkins School of Medicine, Baltimore, MD Jennifer E. Fairman, CMI, FAMI - CV 06/01/2016 2016 FORMAT 2 PROFESSIONAL EXPERIENCE 05/1991 to 01/1992 Exhibits Assistant The Virginia Living Museum, Newport News, VA Illustrated mammals, birds and plants for education, exhibits and museum newsletter. -

John M. Daugherty, MS CMI FAMI Education M.S. Medical And

John M. Daugherty, MS CMI FAMI Curriculum Vitae Program Director/Clinical Assistant Professor Biomedical Visualization University of Illinois at Chicago 1919 W. Taylor Street, Suite 250 (MC 530) Chicago, IL 60612 312.996.4975 [email protected] Education 1978–1980 M.S. Medical and Biological Illustration University of Michigan 1976–1977 Herron School of Art, Indianapolis 1975–1976 Indiana University (Pharmacology) 1971–1975 B.A. Chemistry and Psychology Indiana University Certification 1994–current Certified Medical Illustrator The Board of Certification of Medical Illustrators Professional Experience 2011-current Program Director, Biomedical Visualization Department of Biomedical and Health Information Sciences Biomedical Visualization Graduate Program The University of Illinois at Chicago 2005-2010 Associate Program Director, Biomedical Visualization Department of Biomedical and Health Information Sciences Biomedical Visualization Graduate Program The University of Illinois at Chicago 1989–current Owner of Highlight Studios Sole proprietor of medical illustration studio 1988–current Clinical Assistant Professor/Associate Graduate Faculty Department of Biomedical and Health Information Sciences Biomedical Visualization Graduate Program The University of Illinois at Chicago 1988–1991 Manager of Illustration Studios Department of Biomedical Visualization The University of Illinois at Chicago 1981–1988 Coordinator of Biomedical Illustration Department of Anatomy and Neurobiology Colorado State University Instructor Department of Anatomy and -

Ernst Heinrich Philipp August Haeckel 16 February 1834 to 09 August 1919

ARTICLE-IN-A-BOX Ernst Heinrich Philipp August Haeckel 16 February 1834 to 09 August 1919 Ernst Haeckel was born in Potsdam (near Berlin), the residence of the Prussian kings in the period preceding the unification of Germany in 1871. His family was highly educated: his father, Karl, was a jurist by training and served in the Prussian court, his mother, Charlotte, was the daughter of a well-known jurist, and his elder brother, Karl, too, was a lawyer. One year after his birth, Haeckel’s family moved from Potsdam to Merseburg, then the capital of Saxony, about 170 km to the south-west of Potsdam. His father was in charge of schools and ecclesiastical affairs at Merseburg, and Haeckel spent the next seventeen years in this relatively small town. At home, the young Ernst benefited from an intellectually rich atmosphere. His father was very fond of philosophy, natural sciences, geology, and travelogues, and often discussed these interests with him. His mother was a great admirer of classical German poetry, especially that of Goethe and Schiller, and instilled a love for poetry in her son that he maintained throughout his life. He also developed an early passion for sketching and painting, and by his teens, was a fairly proficient artist. His tutor, Karl Gude, introduced him to a wide array of themes in biology ranging from Linnaean classification to the quasi-evolutionary speculations of the comparative morphologist Lorenz Oken. In particular, Haeckel read and recalled being greatly inspired by the travelogues written by Alexander von Humboldt and Charles Darwin (The Voyage of the Beagle, in German translation), as well as Matthias Jakob Schleiden’s book on plants and their life.

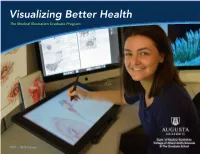

Visualizing Better Health the Medical Illustration Graduate Program

Visualizing Better Health: The Medical Illustration Graduate Program. Dept. of Medical Illustration, College of Allied Health Sciences & The Graduate School. 2021 – 2023 Edition.

This booklet provides an overview of the Dept. of Medical Illustration and the Medical Illustration Graduate Program at Augusta University. Details and dates in this brochure are subject to change; for the most current information about our admission requirements and the application process, please visit our website. For other questions about the program, please contact Prof. Bill Andrews, Chair & Program Director, at [email protected].

Published June 2021. Dept. of Medical Illustration, College of Allied Health Sciences, Augusta University, Pavilion 3, Suite 1101, 1474 Laney-Walker Blvd., Augusta, GA 30912 USA. Ph: 706-721-3266. email: [email protected]. © 2021 Augusta University. All rights reserved. Individual images are copyright of the artist.

Images: “Corona Radiata,” by C. Hartmann (‘13); “Anatomy of the Spine,” by H. Martin (‘10); “Glaucoma,” detail, by J. Klein (‘15); “The Cochlea, Hair Cells, & Noise-Induced Hearing Loss,” by J. Stowe (‘08).

What is Medical Illustration? “Watch what’s never been done, draw what’s never been seen, and tell thousands about it without saying a word.” (Association of Medical Illustrators)

Medical illustration is a truly interdisciplinary field. It is a synergistic combination of the visual arts, the health sciences, education, and communications. Historically, its roots were fully evident by the Renaissance, as seen in the work of research anatomists and educators such as Andreas Vesalius and in the artwork of the great figurative artists such as Leonardo da Vinci.