PHENOMENOLOGICAL LISTENING: Phe – Nom – E – ‘Nol – O – Gy

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Iannis Xenakis, Roberta Brown, John Rahn Source: Perspectives of New Music, Vol

Xenakis on Xenakis Author(s): Iannis Xenakis, Roberta Brown, John Rahn Source: Perspectives of New Music, Vol. 25, No. 1/2, 25th Anniversary Issue (Winter - Summer, 1987), pp. 16-63 Published by: Perspectives of New Music Stable URL: http://www.jstor.org/stable/833091 Accessed: 29/04/2009 05:06 Your use of the JSTOR archive indicates your acceptance of JSTOR's Terms and Conditions of Use, available at http://www.jstor.org/page/info/about/policies/terms.jsp. JSTOR's Terms and Conditions of Use provides, in part, that unless you have obtained prior permission, you may not download an entire issue of a journal or multiple copies of articles, and you may use content in the JSTOR archive only for your personal, non-commercial use. Please contact the publisher regarding any further use of this work. Publisher contact information may be obtained at http://www.jstor.org/action/showPublisher?publisherCode=pnm. Each copy of any part of a JSTOR transmission must contain the same copyright notice that appears on the screen or printed page of such transmission. JSTOR is a not-for-profit organization founded in 1995 to build trusted digital archives for scholarship. We work with the scholarly community to preserve their work and the materials they rely upon, and to build a common research platform that promotes the discovery and use of these resources. For more information about JSTOR, please contact [email protected]. Perspectives of New Music is collaborating with JSTOR to digitize, preserve and extend access to Perspectives of New Music. http://www.jstor.org XENAKIS ON XENAKIS 47W/ IANNIS XENAKIS INTRODUCTION ITSTBECAUSE he wasborn in Greece?That he wentthrough the doorsof the Poly- technicUniversity before those of the Conservatory?That he thoughtas an architect beforehe heardas a musician?Iannis Xenakis occupies an extraodinaryplace in the musicof our time. -

The Sculpted Voice an Exploration of Voice in Sound Art

The Sculpted Voice an exploration of voice in sound art Author: Olivia Louvel Institution: Digital Music and Sound Art. University of Brighton, U.K. Supervised by Dr Kersten Glandien 2019. Table of Contents 1- The plastic dimension of voice ................................................................................... 2 2- The spatialisation of voice .......................................................................................... 5 3- The extended voice in performing art ........................................................................16 4- Reclaiming the voice ................................................................................................20 Bibliography ....................................................................................................................22 List of audio-visual materials ............................................................................................26 List of works ....................................................................................................................27 List of figures...................................................................................................................28 Cover image: Barbara Hepworth, Pierced Form, 1931. Photographer Paul Laib ©Witt Library Courtauld Institute of Art London. 1 1- The plastic dimension of voice My practice is built upon a long-standing exploration of the voice, sung and spoken and its manipulation through digital technology. My interest lies in sculpting vocal sounds as a compositional -

Experimental Sound & Radio

,!7IA2G2-hdbdaa!:t;K;k;K;k Art weiss, making and criticism have focused experimental mainly on the visual media. This book, which orig- inally appeared as a special issue of TDR/The Drama Review, explores the myriad aesthetic, cultural, and experi- editor mental possibilities of radiophony and sound art. Taking the approach that there is no single entity that constitutes “radio,” but rather a multitude of radios, the essays explore various aspects of its apparatus, practice, forms, and utopias. The approaches include historical, 0-262-73130-4 Jean Wilcox jacket design by political, popular cultural, archeological, semiotic, and feminist. Topics include the formal properties of radiophony, the disembodiment of the radiophonic voice, aesthetic implications of psychopathology, gender differences in broad- experimental sound and radio cast musical voices and in narrative radio, erotic fantasy, and radio as an http://mitpress.mit.edu Cambridge, Massachusetts 02142 Massachusetts Institute of Technology The MIT Press electronic memento mori. The book includes new pieces by Allen S. Weiss and on the origins of sound recording, by Brandon LaBelle on contemporary Japanese noise music, and by Fred Moten on the ideology and aesthetics of jazz. Allen S. Weiss is a member of the Performance Studies and Cinema Studies Faculties at New York University’s Tisch School of the Arts. TDR Books Richard Schechner, series editor experimental edited by allen s. weiss #583606 5/17/01 and edited edited by allen s. weiss Experimental Sound & Radio TDR Books Richard Schechner, series editor Puppets, Masks, and Performing Objects, edited by John Bell Experimental Sound & Radio, edited by Allen S. -

Holmes Electronic and Experimental Music

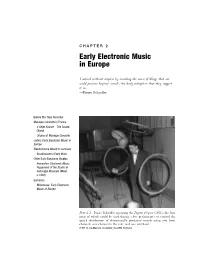

C H A P T E R 2 Early Electronic Music in Europe I noticed without surprise by recording the noise of things that one could perceive beyond sounds, the daily metaphors that they suggest to us. —Pierre Schaeffer Before the Tape Recorder Musique Concrète in France L’Objet Sonore—The Sound Object Origins of Musique Concrète Listen: Early Electronic Music in Europe Elektronische Musik in Germany Stockhausen’s Early Work Other Early European Studios Innovation: Electronic Music Equipment of the Studio di Fonologia Musicale (Milan, c.1960) Summary Milestones: Early Electronic Music of Europe Plate 2.1 Pierre Schaeffer operating the Pupitre d’espace (1951), the four rings of which could be used during a live performance to control the spatial distribution of electronically produced sounds using two front channels: one channel in the rear, and one overhead. (1951 © Ina/Maurice Lecardent, Ina GRM Archives) 42 EARLY HISTORY – PREDECESSORS AND PIONEERS A convergence of new technologies and a general cultural backlash against Old World arts and values made conditions favorable for the rise of electronic music in the years following World War II. Musical ideas that met with punishing repression and indiffer- ence prior to the war became less odious to a new generation of listeners who embraced futuristic advances of the atomic age. Prior to World War II, electronic music was anchored down by a reliance on live performance. Only a few composers—Varèse and Cage among them—anticipated the importance of the recording medium to the growth of electronic music. This chapter traces a technological transition from the turntable to the magnetic tape recorder as well as the transformation of electronic music from a medium of live performance to that of recorded media. -

Electronic Music - Inquiry Description

1 of 15 Electronic Music - Inquiry Description This inquiry leads students through a study of the music industry by studying the history of electric and electronic instruments and music. Today’s students have grown up with ubiquitous access to music throughout the modern internet. The introduction of streaming services and social media in the early 21st century has shown a sharp decline in the manufacturing and sales of physical media like compact discs. This inquiry encourages students to think like historians about the way they and earlier generations consumed and composed music. The questions of artistic and technological innovation and consumption, invite students into the intellectual space that historians occupy by investigating the questions of what a sound is and how it is generated, how accessibility of instrumentation affects artistic trends, and how the availability of streaming publishing and listening services affect consumers. Students will learn about the technical developments and problems of early electric sound generation, how the vacuum tube allowed electronic instruments to become commercially viable, how 1960s counterculture broadcast avant-garde and experimental sounds to a mainstream audience, and track how artistic trends shift overtime when synthesizers, recording equipment, and personal computers become less expensive over time and widely commercially available. As part of their learning about electronic music, students should practice articulating and writing various positions on the historical events and supporting these claims with evidence. The final performance task asks them to synthesize what they have learned and consider how the internet has affected music publishing. This inquiry requires prerequisite knowledge of historical events and ideas, so teachers will want their students to have already studied the 19th c. -

Pierre Schaeffer an Interview with the Pioneer of Musique Concrete by Tim

Pierre Schaeffer an interview with the pioneer of musique concrete by Tim Hodgkinson, 2 April 1986 from Recommended Records Quarterly Magazine, volume 2, number 1, 1987 Introduction: What is Musique Concrete and why is it so important today? Musique Concrete is music made of raw sounds: thunderstorms, steam-engines, waterfalls, steel foundries... The sounds are not produced by traditional acoustic musical instruments. They are captured on tape (originally, before tape, on disk) and manipulated to form sound-structures. The work method is therefore empirical. It starts from the concrete sounds and moves towards a structure. In contrast, traditional classical music starts from an abstract musical schema. This is then notated and only expressed in concrete sound as a last stage, when it is performed. Musique Concrete emerged in Paris in 1948 at the RTF (Radio Television Francais). Its originator, leading researcher and articulate spokesman was Pierre Schaeffer - at that time working as an electro-acoustic engineer with the RTF. Almost immediately, Musique Concrete found itself locked in mortal combat not only with its opponents within traditionally notated music, but also with Electronic Music, which emerged in Cologne in 1950 at the NWDR (Nord West Deutscher Rundfunk). Electronic Music involved the use of precisely controllable electronic equipment to generate the sound material - for example, the oscillator, which can produce any desired wave-form, which can then be shaped, modulated, etc... At the time, the antagonism between Musique Concrete and Electronic music seemed to revolve largely around the difference in sound material. Over the decades, this difference has become less important, so that what we now call 'Electroacoustic Music' is less concerned with the origin of the sound material than with what is done with it afterwards. -

Harpsichord and Its Discourses

Popular Music and Instrument Technology in an Electronic Age, 1960-1969 Farley Miller Schulich School of Music McGill University, Montréal April 2018 A thesis submitted to McGill University in partial fulfilment of the requirements of the degree of Ph.D. in Musicology © Farley Miller 2018 Table of Contents Abstract ................................................................................................................... iv Résumé ..................................................................................................................... v Acknowledgements ................................................................................................ vi Introduction | Popular Music and Instrument Technology in an Electronic Age ............................................................................................................................ 1 0.1: Project Overview .................................................................................................................. 1 0.1.1: Going Electric ................................................................................................................ 6 0.1.2: Encountering and Categorizing Technology .................................................................. 9 0.2: Literature Review and Theoretical Concerns ..................................................................... 16 0.2.1: Writing About Music and Technology ........................................................................ 16 0.2.2: The Theory of Affordances ......................................................................................... -

Alarm/Will/Sound: Sound Design, Modelling, Perception and Composition Cross-Currents Alexander Sigman, Nicolas Misdariis

alarm/will/sound: Sound design, modelling, perception and composition cross-currents Alexander Sigman, Nicolas Misdariis To cite this version: Alexander Sigman, Nicolas Misdariis. alarm/will/sound: Sound design, modelling, perception and composition cross-currents. Organised Sound, Cambridge University Press (CUP), 2019, 10.1017/S1355771819000062. hal-02469295 HAL Id: hal-02469295 https://hal.archives-ouvertes.fr/hal-02469295 Submitted on 28 Apr 2020 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. alarm/will/sound: Sound Design, Modelling, Perception and Composition Cross-Currents Alexander Sigman Nicolas Misdariis International College of Liberal Arts (iCLA) Sound Perception and Design Team Yamanashi Gakuin University STMS Ircam-CNRS-Sorbonne Université 2-7-17 Sakaori Kofu-shi, Yamanashi-ken 1, place Igor-Stravinsky 400-0805 JAPAN 75004 Paris, FRANCE E-mail: [email protected] E-mail: [email protected] ABSTRACT An ongoing international arts-research-industry collaborative project focusing on the design and implementation of innovative car alarm systems, alarm/will/sound has a firm theoretical basis in theories of sound perception and classification of Pierre Schaeffer and the acousmatic tradition. In turn, the timbre perception, modelling and design components of this project have had a significant influence on a range of fixed media, electroacoustic, and media installation works realised in parallel to the experimental research. -

Electric Love Blueprint: a Brief History of Electronic Kunstmuseen Der Welt Auf

Dorothy (Design Studio) ELECTRIC LOVE BLUEPRINT: A BRIEF HISTORY OF ELECTRONIC MUSIC ELIF AKYÜZ Plakat, Siebdruck, 60 x 80 cm, 2. Auflage 2019 Am Morgen des 18. Dezember 1927 berichtete die New York Times unter der Über- tionszeichen resultieren in der Skizze bildimmanente Hotspots, die sich in der Nah- schrift All Paris Thrilled by Radio Invention von einem Konzert, das einen Tag zuvor sicht als grafisch abstrahierte Epizentren der Musik- und Mediengeschichte ausweisen in der Pariser Oper stattgefunden und beträchtliches Aufsehen erregt hatte: »It is no- lassen. Bereits aus der Tatsache, dass alle Funktionsbestandteile des Plans mit Namen teable that most of the eminent musical critics in Paris regard this invention as perhaps von Tüftlern, Komponisten und Interpreten besetzt wurden, lässt sich die komplexe destined to revolutionize the whole field of music« (New York Times, 18. Dezember Verstrickung der Phänomene erahnen, die seit Beginn der elektronischen Klangerzeu- 1927, S.16). Im Zeichen der Schaulust schwärmten Intellektuelle und Connaisseurs, gung nicht nur technisch produzierbar, sondern mit der Erfindung von Aufnahme- und darunter Persönlichkeiten wie der Schriftsteller Paul Valéry oder der Komponist und Wiedergabegeräten gleichzeitig auch massenhaft reproduzierbar geworden waren. Musikkritiker Paul Le Flem, zu der Vorführung, um die Zaubermaschine des russischen Mit jenem Instrument, das es vermochte, dem Äther die Töne zu entlocken, setz- Physikprofessors León Theremin zu sehen. Trotz der Ingeniösität seiner Erfindung, te sich -

In Search of a Concrete Music

In Search of a Concrete Music The publisher gratefully acknowledges the generous support of the Ahmanson Foundation Humanities Endowment Fund of the University of California Press Foundation. ni niVtirp im -tutt) n n )»h yViyn n »i »t m n j w TTtt-i 1111 I'n-m i i i lurfii'ii; i1 In Search of a Concrete Music CALIFORNIA STUDIES IN 20TH-CENTURY MUSIC Richard Taruskin, General Editor 1. Revealing Masks: Exotic Influences and Ritualized Performance in Modernist Music Theater, by W. Anthony Sheppard 2. Russian Opera and the Symbolist Movement, by Simon Morrison 3. German Modernism: Music and the Arts, by Walter Frisch 4. New Music, New Allies: American Experimental Music in West Germany from the Zero Hour to Reunification, by Amy Beal 5. Bartok, Hungary, and the Renewal of Tradition: Case Studies in the Intersection of Modernity and Nationality, by David E. Schneider 6. Classic Chic: Music, Fashion, and Modernism, by Mary E. Davis 7. Music Divided: Bartok's Legacy in Cold War Culture, by Danielle Fosler-Lussier 8. Jewish Identities: Nationalism, Racism, and Utopianism in Twentieth-Century Art Music, by Klara Moricz 9. Brecht at the Opera, by Joy H. Calico 10. Beautiful Monsters: Imagining the Classic in Musical Media, by Michael Long xi. Experimentalism Otherwise: The New York Avant-Garde and Its Limits, by Benjamin Piekut 12. Music and the Elusive Revolution: Cultural Politics and Political Culture in France, 1968-1981, by Eric Drott 13. Music and Politics in San Francisco: From the 1906 Quake to the Second World War, by Leta E. Miller 14. -

Περίληψη : a Music Composer of Greek Origin, Coming from a Family of the Greek Diaspora

IΔΡΥΜA ΜΕΙΖΟΝΟΣ ΕΛΛΗΝΙΣΜΟΥ Συγγραφή : Ροβίθη Χαρά Μετάφραση : Αμπούτη Αγγελική Για παραπομπή : Ροβίθη Χαρά , "Xenakis Iannis", Εγκυκλοπαίδεια Μείζονος Ελληνισμού, Εύξεινος Πόντος URL: <http://www.ehw.gr/l.aspx?id=11573> Περίληψη : A music composer of Greek origin, coming from a family of the Greek Diaspora. During his lifetime, being as a participant of the social, political and cultural life in the years following the Second World War, he followed a course of research and creation serving his vision of art. Iannis Xenakis produced a rich musical, architectural and auctorial work which made him one of the most important progressive creators of the 20th century. Τόπος και Χρόνος Γέννησης 29th of May, 1922 ? (or 1921), Brăila, Romania. Τόπος και Χρόνος Θανάτου 4th of February, 2001, Paris. Κύρια Ιδιότητα Architect, mechanic, composer. 1. The First Years-Basic Studies Iannis Xenakis was born in Brăila of Romania, on the 29th of May, 1922 (the date is uncertain as it is probable that he was born in 1921) of parents who were members of the Greek Diaspora. His father, Klearchos, director of a British import-export company, came from Euboea, and his mother, Fotini Pavlou, came from the island of Limnos. Iannis Xenakis had two younger brothers, Kosmas, an urban planner and a painter, and Iasonas, a philosophy professor. Xenakis was introduced to music by his mother, who was a pianist. It is said that during his early childhood his mother gave him as a present a flute encouraging him to get involved in music. When he was five years old, his mother died from measles and the three children were brought up by French, English and German governesses. -

BEAUTIFUL NOISE Directions in Electronic Music

BEAUTIFUL NOISE Directions in Electronic Music www.ele-mental.org/beautifulnoise/ A WORK IN PROGRESS (3rd rev., Oct 2003) Comments to [email protected] 1 A Few Antecedents The Age of Inventions The 1800s produce a whole series of inventions that set the stage for the creation of electronic music, including the telegraph (1839), the telephone (1876), the phonograph (1877), and many others. Many of the early electronic instruments come about by accident: Elisha Gray’s ‘musical telegraph’ (1876) is an extension of his research into telephone technology; William Du Bois Duddell’s ‘singing arc’ (1899) is an accidental discovery made from the sounds of electric street lights. “The musical telegraph” Elisha Gray’s interesting instrument, 1876 The Telharmonium Thaddeus Cahill's telharmonium (aka the dynamophone) is the most important of the early electronic instruments. Its first public performance is given in Massachusetts in 1906. It is later moved to NYC in the hopes of providing soothing electronic music to area homes, restaurants, and theatres. However, the enormous size, cost, and weight of the instrument (it weighed 200 tons and occupied an entire warehouse), not to mention its interference of local phone service, ensure the telharmonium’s swift demise. Telharmonic Hall No recordings of the instrument survive, but some of Cahill’s 200-ton experiment in canned music, ca. 1910 its principles are later incorporated into the Hammond organ. More importantly, Cahill’s idea of ‘canned music,’ later taken up by Muzak in the 1960s and more recent cable-style systems, is now an inescapable feature of the contemporary landscape.