Spectrum Routing for Big Data V4.1 Install Guide: Cloudera

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

-

Unix Command Line; Editors

Unix command line; editors Karl Broman Biostatistics & Medical Informatics, UW–Madison kbroman.org github.com/kbroman @kwbroman Course web: kbroman.org/AdvData My goal in this lecture is to convince you that (a) command-line-based tools are the things to focus on, (b) you need to choose a powerful, universal text editor (you’ll use it a lot), (c) you want to be comfortable and skilled with each. For your work to be reproducible, it needs to be code-based; don’t touch that mouse! Windows vs. Mac OSX vs. Linux Remote vs. Not 2 The Windows operating system is not very programmer-friendly. Mac OSX isn’t either, but under the hood, it’s just unix. Don’t touch the mouse! Open a terminal window and start typing. I do most of my work directly on my desktop or laptop. You might prefer to work remotely on a server, instead. But I can’t stand having any lag in looking at graphics. If you use Windows... Consider Git Bash (or Cygwin) or turn on the Windows subsystem for linux 3 Cygwin is an effort to get Unix command-line tools in Windows. Git Bash combines git (for version control) and bash (the unix shell); it’s simpler to deal with than Cygwin. Linux is now accessible in Windows 10, but you have to enable it. If you use a Mac... Consider Homebrew and iTerm2 Also the XCode command line tools 4 Homebrew is a packaging system; iTerm2 is a Terminal replacement. The XCode command line tools are a must for most unixy things on a Mac. -

GNU Coreutils Cheat Sheet (V1.00) Created by Peteris Krumins ([email protected], -- Good Coders Code, Great Coders Reuse)

GNU Coreutils Cheat Sheet (v1.00) Created by Peteris Krumins ([email protected], www.catonmat.net -- good coders code, great coders reuse) Utility Description Utility Description arch Print machine hardware name nproc Print the number of processors base64 Base64 encode/decode strings or files od Dump files in octal and other formats basename Strip directory and suffix from file names paste Merge lines of files cat Concatenate files and print on the standard output pathchk Check whether file names are valid or portable chcon Change SELinux context of file pinky Lightweight finger chgrp Change group ownership of files pr Convert text files for printing chmod Change permission modes of files printenv Print all or part of environment chown Change user and group ownership of files printf Format and print data chroot Run command or shell with special root directory ptx Permuted index for GNU, with keywords in their context cksum Print CRC checksum and byte counts pwd Print current directory comm Compare two sorted files line by line readlink Display value of a symbolic link cp Copy files realpath Print the resolved file name csplit Split a file into context-determined pieces rm Delete files cut Remove parts of lines of files rmdir Remove directories date Print or set the system date and time runcon Run command with specified security context dd Convert a file while copying it seq Print sequence of numbers to standard output df Summarize free disk space setuidgid Run a command with the UID and GID of a specified user dir Briefly list directory -

Frank D. Chown, Ahc

Doors & Hardware Institute Remembers Frank D. Chown, ahc HOEVER SAID “NICE GUYS in the love and comfort of a family that finish last” never met Frank did everything together. Frank and Elea- WChown. He was a friend to nor never had a cross word or argument everyone and left a heritage of kindness between them and the infinite patience and graciousness that still lives on today. they bestowed on their active, and not Frank was born in 1918, the son of D.B. always cooperative, sons set an impossi- and Helen Chown. To be born a Chown in bly high standard for the generation to Portland meant one thing—hardware. In come. Together they demonstrated the the year of Frank’s birth, the family busi- power of family, commitment, sacrifice ness was already 39 years old and enter- and love. ing the second generation of ownership. Frank D. Chown, AHC After WWII, Frank was also reunited August 2, 191 – August 7, 2006 Chown Hardware had been founded in with another part of the Chown family, 1879 by Frank’s grandfather F.R. Chown. Frank’s father Chown Hardware. In 1945 he re-entered the family business took over the business the year that Frank was born. and remained actively involved for over 50 years. His first Frank and his younger sister, Carol, grew up in the Hol- major project was to build and open a new store at NW 16th lywood neighborhood of Portland and attended Grant and Flanders, where Chown Hardware is still located today. High School. At Grant, his leadership abilities began to be In the 1950s Frank began Choen’s contract hardware realized and he made many life-long friends. -

Linux File System and Linux Commands

Hands-on Keyboard: Cyber Experiments for Strategists and Policy Makers Review of the Linux File System and Linux Commands 1. Introduction Becoming adept at using the Linux OS requires gaining familiarity with the Linux file system, file permissions, and a base set of Linux commands. In this activity, you will study how the Linux file system is organized and practice utilizing common Linux commands. Objectives • Describe the purpose of the /bin, /sbin, /etc, /var/log, /home, /proc, /root, /dev, /tmp, and /lib directories. • Describe the purpose of the /etc/shadow and /etc/passwd files. • Utilize a common set of Linux commands including ls, cat, and find. • Understand and manipulate file permissions, including rwx, binary and octal formats. • Change the group and owner of a file. Materials • Windows computer with access to an account with administrative rights The Air Force Cyber College thanks the Advanced Cyber Engineering program at the Air Force Research Laboratory in Rome, NY, for providing the information to assist in educating the general Air Force on the technical aspects of cyberspace. • VirtualBox • Ubuntu OS .iso File Assumptions • The provided instructions were tested on an Ubuntu 15.10 image running on a Windows 8 physical machine. Instructions may vary for other OS. • The student has administrative access to their system and possesses the right to install programs. • The student’s computer has Internet access. 2. Directories / The / directory or root directory is the mother of all Linux directories, containing all of the other directories and files. From a terminal users can type cd/ to move to the root directory. -

Freebsd Command Reference

FreeBSD command reference Command structure Each line you type at the Unix shell consists of a command optionally followed by some arguments , e.g. ls -l /etc/passwd | | | cmd arg1 arg2 Almost all commands are just programs in the filesystem, e.g. "ls" is actually /bin/ls. A few are built- in to the shell. All commands and filenames are case-sensitive. Unless told otherwise, the command will run in the "foreground" - that is, you won't be returned to the shell prompt until it has finished. You can press Ctrl + C to terminate it. Colour code command [args...] Command which shows information command [args...] Command which modifies your current session or system settings, but changes will be lost when you exit your shell or reboot command [args...] Command which permanently affects the state of your system Getting out of trouble ^C (Ctrl-C) Terminate the current command ^U (Ctrl-U) Clear to start of line reset Reset terminal settings. If in xterm, try Ctrl+Middle mouse button stty sane and select "Do Full Reset" exit Exit from the shell logout ESC :q! ENTER Quit from vi without saving Finding documentation man cmd Show manual page for command "cmd". If a page with the same man 5 cmd name exists in multiple sections, you can give the section number, man -a cmd or -a to show pages from all sections. man -k str Search for string"str" in the manual index man hier Description of directory structure cd /usr/share/doc; ls Browse system documentation and examples. Note especially cd /usr/share/examples; ls /usr/share/doc/en/books/handbook/index.html cd /usr/local/share/doc; ls Browse package documentation and examples cd /usr/local/share/examples On the web: www.freebsd.org Includes handbook, searchable mailing list archives System status Alt-F1 .. -

UNIX Administration Course

UNIX Administration Course Copyright 1999 by Ian Mapleson BSc. Version 1.0 [email protected] Tel: (+44) (0)1772 893297 Fax: (+44) (0)1772 892913 WWW: http://www.futuretech.vuurwerk.nl/ Detailed Notes for Day 1 (Part 3) UNIX Fundamentals: File Ownership UNIX has the concept of file ’ownership’: every file has a unique owner, specified by a user ID number contained in /etc/passwd. When examining the ownership of a file with the ls command, one always sees the symbolic name for the owner, unless the corresponding ID number does not exist in the local /etc/passwd file and is not available by any system service such as NIS. Every user belongs to a particular group; in the case of the SGI system I run, every user belongs to either the ’staff’ or ’students’ group (note that a user can belong to more than one group, eg. my network has an extra group called ’projects’). Group names correspond to unique group IDs and are listed in the /etc/group file. When listing details of a file, usually the symbolic group name is shown, as long as the group ID exists in the /etc/group file, or is available via NIS, etc. For example, the command: ls -l / shows the full details of all files in the root directory. Most of the files and directories are owned by the root user, and belong to the group called ’sys’ (for system). An exception is my home account directory /mapleson which is owned by me. Another example command: ls -l /home/staff shows that every staff member owns their particular home directory. -

Supplement To: Smith, Edward Bishop, Jillian Chown, and Kevin Gaughan. 2021. “Better in the Shadows? Public Attention, Media C

Supplement to: Smith, Edward Bishop, Jillian Chown, and Kevin Gaughan. 2021. “Better in the Shadows? Public Attention, Media Coverage, and Market Reactions to Female CEO Announcements.” Sociological Science 8: 119- 149. S1 Smith, Chown, and Gaughan Better in the Shadows APPENDIX A: RavenPack (ravenpack.com) uses a generic algorithm for coding the news. One of the categories it uses to classify each news article is Executive Appointment. Although there are further refined subcategories such as Chief Executive Officer within RavenPack’s classification system, not all CEO announcements were included in their predefined category. As such, we extracted all observations from the broader executive appointment category and generated an algorithm to extract the relevant announcements based on keywords in the headline of the news item (i.e. CEO, chief executive, etc.). We then drew a random sample (N = 100), and manually assessed the accuracy of our algorithm by determining whether each announcement was a relevant CEO announcement. The rate of false positives was 16%. Of the false positives identified, more than two-thirds were CEO announcements for a subsidiary of a focal firm (i.e., a business unit). All code is available upon request from the authors. Aside from potentially decreasing the efficiency of the estimators in models containing the full sample, it is possible that this noise may be biasing the estimates as well. For instance, if we assume that a) the majority of false positives are subsidiaries, and b) that CEO announcements for subsidiaries receive less overall media attention than CEO announcements for publicly traded firms, then these observations are likely to have firm size values that are larger than expected and media attention that is lower than expected, conditional on size. -

Gnu Coreutils Core GNU Utilities for Version 5.93, 2 November 2005

gnu Coreutils Core GNU utilities for version 5.93, 2 November 2005 David MacKenzie et al. This manual documents version 5.93 of the gnu core utilities, including the standard pro- grams for text and file manipulation. Copyright c 1994, 1995, 1996, 2000, 2001, 2002, 2003, 2004, 2005 Free Software Foundation, Inc. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; with no Invariant Sections, with no Front-Cover Texts, and with no Back-Cover Texts. A copy of the license is included in the section entitled “GNU Free Documentation License”. Chapter 1: Introduction 1 1 Introduction This manual is a work in progress: many sections make no attempt to explain basic concepts in a way suitable for novices. Thus, if you are interested, please get involved in improving this manual. The entire gnu community will benefit. The gnu utilities documented here are mostly compatible with the POSIX standard. Please report bugs to [email protected]. Remember to include the version number, machine architecture, input files, and any other information needed to reproduce the bug: your input, what you expected, what you got, and why it is wrong. Diffs are welcome, but please include a description of the problem as well, since this is sometimes difficult to infer. See section “Bugs” in Using and Porting GNU CC. This manual was originally derived from the Unix man pages in the distributions, which were written by David MacKenzie and updated by Jim Meyering. -

Some Basic Linux Commands

Some Basic Linux Commands 1. ls: list For long list, use ls -l 2. Linux commands have help or manual available. For example, if you need help for the command ls do ls -- help If help page is too long to be displayed, use ls -- help | more 3. touch: used to create an empty text file. For example to create a file with name foobar do touch foobar 4. rm: used to remove a file or a directory (folder). To delete a file named foobar do rm foobar To avoid an accidental removal of a file you are recommended to use -i option like rm -i foobar It will ask you if you really want to remove the file foobar. Remember once you delete files using rm command you cannot recover them. To remove a folder do use -r or -rf option. For example to delete the folder named test do rm -r test or rm -rf test 5. cp: used to copy a file or a folder. The syntax is cp source destination For example, if you want to copy the file foobar in your current location to a folder named test do cp foobar /home/user/test/ or shortly cp foobar ~/test/ Here we assume that your username is user. 6. mv: used to move a file or a folder. The syntax is the same as cp. The command mv actually does copy and delete. 7. mkdir: used to create a folder. For example to create a folder named test do mkdir test 8. rmdir: used to delete a folder. -

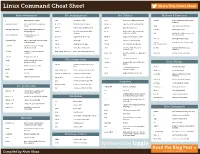

Linux-Cheat-Sheet-Sponsored-By-Loggly.Pdf

Linux Command Cheat Sheet Share This Cheat Sheet Basic commands File management File Utilities Memory & Processes | Pipe (redirect) output find search for a file tr -d translate or delete character free -m display free and used system memory sudo [command] run < command> in superuser ls -a -C -h list content of directory uniq -c -u report or omit repeated lines mode killall stop all process by name rm -r -f remove files and directory split -l split file into pieces nohup [command] run < command> immune to sensors CPU temperature hangup signal locate -i find file, using updatedb(8) wc -w print newline, word, and byte database counts for each file top display current processes, real man [command] display help pages of time monitoring < command> cp -a -R -i copy files or directory head -n output the first part of files kill -1 -9 send signal to process [command] & run < command> and send task du -s disk usage cut -s remove section from file to background service manage or run sysV init script file -b -i identify the file type diff -q file compare, line by line [start|stop|restart] >> [fileA] append to fileA, preserving existing contents mv -f -i move files or directory join -i join lines of two files on a ps aux display current processes, common field snapshot > [fileA] output to fileA, overwriting grep, egrep, fgrep -i -v print lines matching pattern contents more, less view file content, one page at a dmesg -k display system messages time echo -n display a line of text sort -n sort lines in text file xargs build command line from File compression previous output -

User Commands Chown ( 1 ) Chown – Change File Ownership Chown [-Fhr

User Commands chown ( 1 ) NAME chown – change file ownership SYNOPSIS chown [-fhR] owner [ : group] file... DESCRIPTION The chown utility will set the user ID of the file named by each file to the user ID specified by owner, and, optionally, will set the group ID to that specified by group. If chown is invoked by other than the super-user, the set-user-ID bit is cleared. Only the owner of a file (or the super-user) may change the owner of that file. The operating system has a configuration option {_POSIX_CHOWN_RESTRICTED}, to restrict own- ership changes. When this option is in effect the owner of the file is prevented from changing the owner ID of the file. Only the super-user can arbitrarily change owner IDs whether or not this option is in effect. To set this configuration option, include the following line in /etc/system: set rstchown = 1 To disable this option, include the following line in /etc/system: set rstchown = 0 {_POSIX_CHOWN_RESTRICTED} is enabled by default. See system(4) and fpathconf(2). OPTIONS The following options are supported: -f Do not report errors. -h If the file is a symbolic link, change the owner of the symbolic link. Without this option, the owner of the file referenced by the symbolic link is changed. -R Recursive. chown descends through the directory, and any subdirectories, setting the ownership ID as it proceeds. When a symbolic link is encountered, the owner of the target file is changed (unless the -h option is specified), but no recursion takes place. OPERANDS The following operands are supported: owner[: group] A user ID and optional group ID to be assigned to file. -

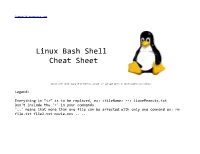

Linux (BASH) Cheat Sheet

freeworld.posterous.com Linux Bash Shell Cheat Sheet (works with about every distribution, except for apt-get which is Ubuntu/Debian exclusive) Legend: Everything in “<>” is to be replaced, ex: <fileName> --> iLovePeanuts.txt Don't include the '=' in your commands '..' means that more than one file can be affected with only one command ex: rm file.txt file2.txt movie.mov .. .. Linux Bash Shell Cheat Sheet Basic Commands Basic Terminal Shortcuts Basic file manipulation CTRL L = Clear the terminal cat <fileName> = show content of file CTRL D = Logout (less, more) SHIFT Page Up/Down = Go up/down the terminal head = from the top CTRL A = Cursor to start of line -n <#oflines> <fileName> CTRL E = Cursor the end of line CTRL U = Delete left of the cursor tail = from the bottom CTRL K = Delete right of the cursor -n <#oflines> <fileName> CTRL W = Delete word on the left CTRL Y = Paste (after CTRL U,K or W) mkdir = create new folder TAB = auto completion of file or command mkdir myStuff .. CTRL R = reverse search history mkdir myStuff/pictures/ .. !! = repeat last command CTRL Z = stops the current command (resume with fg in foreground or bg in background) cp image.jpg newimage.jpg = copy and rename a file Basic Terminal Navigation cp image.jpg <folderName>/ = copy to folder cp image.jpg folder/sameImageNewName.jpg ls -a = list all files and folders cp -R stuff otherStuff = copy and rename a folder ls <folderName> = list files in folder cp *.txt stuff/ = copy all of *<file type> to folder ls -lh = Detailed list, Human readable ls -l *.jpg = list jpeg files only mv file.txt Documents/ = move file to a folder ls -lh <fileName> = Result for file only mv <folderName> <folderName2> = move folder in folder mv filename.txt filename2.txt = rename file cd <folderName> = change directory mv <fileName> stuff/newfileName if folder name has spaces use “ “ mv <folderName>/ .