Fake News and Misinformation Policy Lab Practicum (Spring 2017)

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Performing Politics Emma Crewe, SOAS, University of London

Westminster MPs: performing politics Emma Crewe, SOAS, University of London Intentions My aim is to explore the work of Westminster Members of Parliament (MPs) in parliament and constituencies and convey both the diversity and dynamism of their political performances.1 Rather than contrasting MPs with an idealised version of what they might be, I interpret MPs’ work as I see it. If I have any moral and political intent, it is to argue that disenchantment with politics is misdirected – we should target our critiques at politicians in government rather than in their parliamentary role – and to call for fuller citizens’ engagement with political processes. Some explanation of my fieldwork in the UK’s House of Commons will help readers understand how and why I arrived at this interpretation. In 2011 then Clerk of the House, Sir Malcolm Jack, who I knew from doing research in the House of Lords (1998-2002), ascertained that the Speaker was ‘content for the research to proceed’, and his successor, Sir Robert Rogers, issued me with a pass and assigned a sponsor. I roamed all over the Palace, outbuildings and constituencies during 2012 (and to a lesser extent in 2013) listening, watching and conversing wherever I went. This entailed (a) observing interaction in debating chambers, committee rooms and in offices (including the Table Office) in Westminster and constituencies, (b) over 100 pre-arranged unstructured interviews with MPs, former MPs, officials, journalists, MPs’ staff and peers, (c) following four threads: media/twitter exchanges, the Eastleigh by-election with the three main parties, scrutiny of the family justice part of the Children and Families Bill, and constituency surgeries, (d) advising parliamentary officials on seeking MPs’ feedback on House services. -

Automated Meme Magic: An Exploration into the Implementations and Imaginings of Bots on Reddit

“Automated Meme Magic: An Exploration into the Implementations and Imaginings of Bots on Reddit” Jonathan Murthy | [email protected] | 2018

Table of Contents
Acknowledgments
Abstract
1.2 Research Questions
1.2.1 Why Reddit
1.2.2 Bots
1.3 Outline
2 Bot Research
2.1 Functional Bots
2.2 Harmful Bots
2.2.1 The Rise of Socialbots

Pizzagate: from Rumor, to Hashtag, to Gunfire in D.C. Real Consequences of Fake News Leveled on a D.C

Pizzagate: From rumor, to hashtag, to gunfire in D.C. Real consequences of fake news leveled on a D.C. pizzeria and other nearby restaurants Comet Ping Pong customers came out to support the restaurant after a gunman entered it with an assault rifle, firing it at least once. (Video: Whitney Shefte/Photo: Matt McClain/The Washington Post) By Marc Fisher, John Woodrow Cox, and Peter Hermann December 6, 2016 What was finally real was Edgar Welch, driving from North Carolina to Washington to rescue sexually abused children he believed were hidden in mysterious tunnels beneath a neighborhood pizza joint. What was real was Welch — a father, former firefighter and sometime movie actor who was drawn to dark mysteries he found on the Internet — terrifying customers and workers with his assault-style rifle as he searched Comet Ping Pong, police said. He found no hidden children, no secret chambers, no evidence of a child sex ring run by the failed Democratic candidate for president of the United States, or by her campaign chief, or by the owner of the pizza place. What was false were the rumors he had read, stories that crisscrossed the globe about a charming little pizza place that features ping-pong tables in its back room. The story of Pizzagate is about what is fake and what is real. It’s a tale of a scandal that never was, and of a fear that has spread through channels that did not even exist until recently. Pizzagate — the belief that code words and satanic symbols point to a sordid underground along an ordinary retail strip in the nation’s capital — is possible only because science has produced the most powerful tools ever invented to find and disseminate information. -

The Parallax View: How Conspiracy Theories and Belief in Conspiracy Shape American Politics

Bard College Bard Digital Commons Senior Projects Spring 2020 Bard Undergraduate Senior Projects Spring 2020 The Parallax View: How Conspiracy Theories and Belief in Conspiracy Shape American Politics Liam Edward Shaffer Bard College, [email protected] Follow this and additional works at: https://digitalcommons.bard.edu/senproj_s2020 Part of the American Politics Commons, and the Political History Commons This work is licensed under a Creative Commons Attribution-Noncommercial-No Derivative Works 4.0 License. Recommended Citation Shaffer, Liam Edward, "The Parallax View: How Conspiracy Theories and Belief in Conspiracy Shape American Politics" (2020). Senior Projects Spring 2020. 236. https://digitalcommons.bard.edu/senproj_s2020/236 This Open Access work is protected by copyright and/or related rights. It has been provided to you by Bard College's Stevenson Library with permission from the rights-holder(s). You are free to use this work in any way that is permitted by the copyright and related rights. For other uses you need to obtain permission from the rights- holder(s) directly, unless additional rights are indicated by a Creative Commons license in the record and/or on the work itself. For more information, please contact [email protected]. The Parallax View: How Conspiracy Theories and Belief in Conspiracy Shape American Politics Senior Project Submitted to The Division of Social Studies of Bard College by Liam Edward Shaffer Annandale-on-Hudson, New York May 2020 Acknowledgements To Simon Gilhooley, thank you for your insight and perspective, for providing me the latitude to pursue the project I envisioned, for guiding me back when I would wander, for keeping me centered in an evolving work and through a chaotic time. -

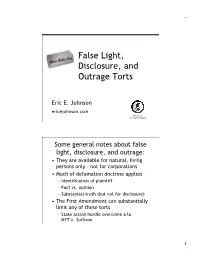

False Light, Disclosure, and Outrage Torts

False Light, Disclosure, and Outrage Torts
Eric E. Johnson, ericejohnson.com
Konomark: Most rights sharable

Some general notes about false light, disclosure, and outrage:
• They are available for natural, living persons only – not for corporations
• Much of defamation doctrine applies
  – Identification of plaintiff
  – Fact vs. opinion
  – Substantial truth (but not for disclosure)
• The First Amendment can substantially limit any of these torts
  – State action hurdle overcome a la NYT v. Sullivan

False Light
The Elements:
1. A public statement
2. Made with actual malice
3. Placing the plaintiff in a false light
4. That is highly offensive to the reasonable person

False Light Defenses:
• Essentially the same as for defamation
• So, for example:
  – A public figure will have to prove actual malice.*
  – A private figure, if a matter of public concern, must prove actual malice or negligence + special damages.*
*That is, if actual malice is not required as a prima facie element, which it generally, but not always, is.

Disclosure
The Elements:
1. A public disclosure
2. Of private facts
3. That is highly offensive to the reasonable person

Disclosure Defenses:
• Legitimate public interest or concern
  – a/k/a “newsworthiness privilege”
  – First Amendment requires this, even if common law in a jurisdiction would not

Outrage (a/k/a Intentional Infliction of Emotional Distress)
The Elements:
1. Intentional or reckless conduct that is
2. Extreme and outrageous
3. Causing severe emotional distress

Review: Intrusion
The Elements:
1. Physical or other intrusion
2. Into a zone in which the plaintiff has a reasonable expectation of privacy
3.

Real Talk About Fake News

Real Talk About Fake News WLA Conference 2018 Real Talk About Fake News Website: http://bit.ly/2qgeaQZ Slide Deck: http://bit.ly/2SihcE9 About Us Shana Ferguson, Teacher Librarian & National Board Certified Teacher, Columbia River HS, Vancouver Public Schools, Vancouver, WA @medialitnerd www.medialitnerd.com Katie Nedved, Teacher Librarian & National Board Certified Teacher, Henrietta Lacks Health & Bioscience High School, Evergreen Public Schools, Vancouver, WA @katienedved www.helacell.org/library Di Zhang, Adult Services Librarian, The Seattle Public Library, Seattle, WA [email protected] Session Overview Public Libraries & Community Engagement School Libraries & Teacher Librarians Sample lessons & topics Evaluation Overview Biggest Topics/Pro Tips Pulse of the Room Which level of library do you serve? Have you taught misinformation (“fake news”) lessons? Where do your patrons get their news? Public Library- Why We Started Talking About Fake News -Community members asked us (confusion over fraudulent news stories and misinformation during 2016 elections) -Fake news is here to stay and ramping up -Digital information literacy is a necessary skill in 21st Century -Librarians, educators and journalists all have roles to play in combating fake news Photo credit: University Sunrise Rotary Club Seattle Public Library’s Approach- Research & Collaboration - Read wide and deep into issue (with help from other libraries, news orgs). - Create curriculum for 2 hour class. - Promote widely, especially with existing media connections. - Encourage and support staff to teach and assist in classes, adapt curriculum to needs of audience. Seattle Public Library’s Approach- Partner with Local Orgs MOHAI Community Conversation on Fake News 9-27-18 Partnerships with: - MOHAI - King County TV Outcomes: - Follow up program w/ MOHAI - Panelists & attendees are future potential partners Seattle Public Library’s Approach- Roadshow - There is an audience- find them. -

Case 1:13-cv-03994-WHP Document 42-1 Filed 09/04/13 Page 1 of 15

Case 1:13-cv-03994-WHP Document 42-1 Filed 09/04/13 Page 1 of 15

UNITED STATES DISTRICT COURT SOUTHERN DISTRICT OF NEW YORK

AMERICAN CIVIL LIBERTIES UNION; AMERICAN CIVIL LIBERTIES UNION FOUNDATION; NEW YORK CIVIL LIBERTIES UNION; and NEW YORK CIVIL LIBERTIES UNION FOUNDATION, Plaintiffs, v. JAMES R. CLAPPER, in his official capacity as Director of National Intelligence; KEITH B. ALEXANDER, in his official capacity as Director of the National Security Agency and Chief of the Central Security Service; CHARLES T. HAGEL, in his official capacity as Secretary of Defense; ERIC H. HOLDER, in his official capacity as Attorney General of the United States; and ROBERT S. MUELLER III, in his official capacity as Director of the Federal Bureau of Investigation, Defendants.

No. 13-cv-03994 (WHP)
ECF CASE

BRIEF AMICI CURIAE OF THE REPORTERS COMMITTEE FOR FREEDOM OF THE PRESS AND 18 NEWS MEDIA ORGANIZATIONS IN SUPPORT OF PLAINTIFFS’ MOTION FOR A PRELIMINARY INJUNCTION

Michael D. Steger, Counsel of Record, Steger Krane LLP, 1601 Broadway, 12th Floor, New York, NY 10019, (212) 736-6800, [email protected]

Of counsel: Bruce D. Brown, Gregg P. Leslie, Rob Tricchinelli, The Reporters Committee for Freedom of the Press, 1101 Wilson Blvd., Suite 1100, Arlington, VA 22209, (703) 807-2100

TABLE OF CONTENTS
TABLE OF AUTHORITIES
STATEMENT OF INTEREST
SUMMARY OF ARGUMENT
ARGUMENT
I. The integrity of a confidential reporter-source relationship is critical to producing good journalism, and mass telephone call tracking compromises that relationship to the detriment of the public interest
A. There is a long history of journalists breaking significant stories by relying on information from confidential sources
B.

In the Court of Chancery of the State of Delaware Karen Sbriglio, Firemen’s Retirement System of St

EFiled: Aug 06 2021 03:34PM EDT Transaction ID 66784692 Case No. 2018-0307-JRS

IN THE COURT OF CHANCERY OF THE STATE OF DELAWARE

KAREN SBRIGLIO, FIREMEN’S RETIREMENT SYSTEM OF ST. LOUIS, CALIFORNIA STATE TEACHERS’ RETIREMENT SYSTEM, CONSTRUCTION AND GENERAL BUILDING LABORERS’ LOCAL NO. 79 GENERAL FUND, CITY OF BIRMINGHAM RETIREMENT AND RELIEF SYSTEM, and LIDIA LEVY, derivatively on behalf of Nominal Defendant FACEBOOK, INC., Plaintiffs, v. MARK ZUCKERBERG, SHERYL SANDBERG, PEGGY ALFORD, MARC ANDREESSEN, KENNETH CHENAULT, PETER THIEL, JEFFREY ZIENTS, ERSKINE BOWLES, SUSAN DESMOND-HELLMANN, REED HASTINGS, JAN KOUM, KONSTANTINOS PAPAMILTIADIS, DAVID FISCHER, MICHAEL SCHROEPFER, and DAVID WEHNER, Defendants, -and- FACEBOOK, INC., Nominal Defendant.

C.A. No. 2018-0307-JRS
PUBLIC INSPECTION VERSION FILED AUGUST 6, 2021

SECOND AMENDED VERIFIED STOCKHOLDER DERIVATIVE COMPLAINT

TABLE OF CONTENTS
I. SUMMARY OF THE ACTION
II. JURISDICTION AND VENUE
III. PARTIES
A. Plaintiffs
B. Director Defendants
C. Officer Defendants

Nate Silver's Punditry Revolution

Nate Silver’s punditry revolution By Cameron S. Brown Jerusalem Post, November 6, 2012 Whether or not US President Barack Obama is re-elected will not just determine the future of politics, it is also likely to influence the future of political punditry as well. The media has been following the US elections with two very, very different narratives. Apart from the obvious – i.e. Democrat versus Republican—opinions were divided between those who thought the race was way too close to call and those who predicted with great confidence that Obama was far more likely to win. Most of the media—including the most respected outlets—continued with the traditional focus on the national polls. Even when state-level polls were cited, analyses often cherry-picked the more interesting polls or ones that suited the specific bias of the pundit. While on occasion this analysis has given one candidate or another an advantage of several points, more often than not they declared the race “neck-and-neck,” and well within any given poll’s margin of error. The other narrative was born out of a new, far more sophisticated political analysis than has ever been seen in the mass media before. Nate Silver, a young statistician who was part of the “Moneyball” revolution in professional baseball, has brought that same methodological rigor to political analysis. The result is that Silver is leading a second revolution: this time in the world of political punditry. In his blog, “Five Thirty-Eight,” (picked up this year by the New York Times), Silver put his first emphasis on the fact that whoever wins the national vote does not actually determine who wins the presidency. -

ASD-Covert-Foreign-Money.pdf

Covert Foreign Money: Financial loopholes exploited by authoritarians to fund political interference in democracies

AUGUST 2020

AUTHORS: Josh Rudolph and Thomas Morley

© 2020 The Alliance for Securing Democracy. Please direct inquiries to The Alliance for Securing Democracy at The German Marshall Fund of the United States, 1700 18th Street, NW, Washington, DC 20009, T 1 202 683 2650, E [email protected]. This publication can be downloaded for free at https://securingdemocracy.gmfus.org/covert-foreign-money/. The views expressed in GMF publications and commentary are the views of the authors alone. Cover and map design: Kenny Nguyen. Formatting design: Rachael Worthington.

Alliance for Securing Democracy

The Alliance for Securing Democracy (ASD), a bipartisan initiative housed at the German Marshall Fund of the United States, develops comprehensive strategies to deter, defend against, and raise the costs on authoritarian efforts to undermine and interfere in democratic institutions. ASD brings together experts on disinformation, malign finance, emerging technologies, elections integrity, economic coercion, and cybersecurity, as well as regional experts, to collaborate across traditional stovepipes and develop cross-cutting frameworks.

Authors: Josh Rudolph, Fellow for Malign Finance; Thomas Morley, Research Assistant

Contents
Executive Summary
Introduction and Methodology

“FAKE NEWS!”: President Trump’s Campaign Against the Media on @realdonaldtrump and Reactions to It on Twitter

“FAKE NEWS!”: President Trump’s Campaign Against the Media on @realdonaldtrump and Reactions To It on Twitter
A PEORIA Project White Paper
Michael Cornfield, GWU Graduate School of Political Management, [email protected]
April 10, 2019
This report was made possible by a generous grant from William Madway.

SUMMARY: This white paper examines President Trump’s campaign to fan distrust of the news media (Fox News excepted) through his tweeting of the phrase “Fake News (Media).” The report identifies and illustrates eight delegitimation techniques found in the twenty-five most retweeted Trump tweets containing that phrase between January 1, 2017 and August 31, 2018. The report also looks at direct responses and public reactions to those tweets, as found respectively on the comment thread at @realdonaldtrump and in random samples (N = 2500) of US computer-based tweets containing the term on the days in that time period of his most retweeted “Fake News” tweets. Along with the high percentage of retweets built into this search, the sample exhibits techniques and patterns of response which are identified and illustrated.

The main findings:
● The term “fake news” emerged in public usage in October 2016 to describe hoaxes, rumors, and false alarms, primarily in connection with the Trump-Clinton presidential contest and its electoral result.
● President-elect Trump adopted the term, intensified it into “Fake News,” and directed it at “Fake News Media” starting in December 2016-January 2017.
● Subsequently, the term has been used on Twitter largely in relation to Trump tweets that deploy it. In other words, “Fake News” rarely appears on Twitter referring to something other than what Trump is tweeting about.

Cyberattack Attribution As Empowerment and Constraint

A HOOVER INSTITUTION ESSAY
Cyberattack Attribution as Empowerment and Constraint
KRISTEN E. EICHENSEHR
Aegis Series Paper No. 2101

Introduction

When a state seeks to defend itself against a cyberattack, must it first identify the perpetrator responsible? The US policy of “defend forward” and “persistent engagement” in cyberspace raises the stakes of this attribution question as a matter of both international and domestic law. International law addresses in part the question of when attribution is required. The international law on state responsibility permits a state that has suffered an internationally wrongful act to take countermeasures, but only against the state responsible. This limitation implies that attribution is a necessary prerequisite to countermeasures. But international law is silent about whether attribution is required for lesser responses, which may be more common. Moreover, even if states agree that attribution is required in order to take countermeasures, ongoing disagreements about whether certain actions, especially violations of sovereignty, count as internationally wrongful acts are likely to spark disputes about when states must attribute cyberattacks in order to respond lawfully. Under domestic US law, attributing a cyberattack to a particular state bolsters the authority of the executive branch to take action. Congress has authorized the executive to respond to attacks from particular countries and nonstate actors in both recent cyber-specific statutory provisions and the