© 2019 Sathwik Tejaswi Madhusudhan. EXPLORING DEEP LEARNING BASED METHODS FOR INFORMATION RETRIEVAL IN INDIAN CLASSICAL MUSIC

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Ornamentation: An Introduction to the Mordent/Battement

Education. Ornamentation: An Introduction to the Mordent/Battement. By Michael Lynn. This is the second article I have written that covers ornaments that we might expect to encounter in Baroque music for the recorder. If you haven't read the previous article in this series on ornamentation, it may be helpful to you to read the Fall 2020 AR installment, which covers trills and appoggiaturas. In this issue, we will discuss the mordent or battement. One of my favorite ornaments is the mordent. It often has a lively musical character but can also be drawn out to make a more expressive ornament. Players are often confused about the different names for various ornaments, as well as the different signs used to signify them. To add to the complications, the basic term mordent was used to mean something completely different in the 19th century, different from its meaning in the Baroque period. In this series of articles on learning to interpret ornament signs, I focus on music from the years 1680-1750. If you look much outside of these boundaries, the situation gets more complicated. These dates encompass all of the Baroque recorder literature, with the exception of the early Baroque 17th-century music, which may be played on recorder. Some of the terms we see being applied to this ornament are: • mordent • mordant • battement • pincé. I'll use the French term battement (meaning to "hit" or "beat") and mordent interchangeably throughout this article; remember that they mean exactly the same thing. Below is an example showing the common signs and basic execution of the mordent.

Performance Commentary

PERFORMANCE COMMENTARY. Notes on the musical text: The variants marked as ossia were given this label by Chopin or were added in his hand to pupils' copies; variants without this designation are the result of discrepancies in the texts of authentic versions or an inability to establish an unambiguous reading of the text. Minor authentic alternatives (single notes, ornaments, slurs, accents, pedal indications, etc.) that can be regarded as variants are enclosed in round brackets ( ), whilst editorial additions are written in square brackets [ ]. Pianists who are not interested in editorial questions, and want to base their performance on a single text, unhampered by variants, are recommended to use the music printed in the principal staves, including all the markings in brackets. Chopin's original fingering is indicated in large bold-type numerals, 1 2 3 4 5, in contrast to the editors' fingering, which is written in small italic numerals, 1 2 3 4 5. Wherever authentic fingering is enclosed in parentheses this means that it was not present in the primary sources, but added by Chopin to his pupils' copies. [...] It seems, however, far more likely that Chopin intended a different grouping for this figure, e.g.: [music example]. See the Source Commentary. Bar 84: A gentle change of pedal is indicated on the final crotchet in order to avoid the clash of g-f. 2a & 2b. Nocturne in E flat major, Op. 9 No. 2 (versions with variants): The sources indicate that while both performing the Nocturne and working on it with pupils, Chopin was introducing more or

Basic Dynamic Markings

Basic Dynamic Markings • ppp pianississimo "very, very soft" • pp pianissimo "very soft" • p piano "soft" • mp mezzo-piano "moderately soft" • mf mezzo-forte "moderately loud" • f forte "loud" • ff fortissimo "very loud" • fff fortississimo "very, very loud" • sfz sforzando “fierce accent” • < crescendo “becoming louder” • > diminuendo “becoming softer” Basic Anticipation Markings Staccato This indicates the musician should play the note shorter than notated, usually half the value, the rest of the metric value is then silent. Staccato marks may appear on notes of any value, shortening their performed duration without speeding the music itself. Spiccato Indicates a longer silence after the note (as described above), making the note very short. Usually applied to quarter notes or shorter. (In the past, this marking’s meaning was more ambiguous: it sometimes was used interchangeably with staccato, and sometimes indicated an accent and not staccato. These usages are now almost defunct, but still appear in some scores.) In string instruments this indicates a bowing technique in which the bow bounces lightly upon the string. Accent Play the note louder, or with a harder attack than surrounding unaccented notes. May appear on notes of any duration. Tenuto This symbol indicates play the note at its full value, or slightly longer. It can also indicate a slight dynamic emphasis or be combined with a staccato dot to indicate a slight detachment. Marcato Play the note somewhat louder or more forcefully than a note with a regular accent mark (open horizontal wedge). In organ notation, this means play a pedal note with the toe. Above the note, use the right foot; below the note, use the left foot. -

Interpreting Tempo and Rubato in Chopin's Music

Interpreting tempo and rubato in Chopin’s music: A matter of tradition or individual style? Li-San Ting A thesis in fulfilment of the requirements for the degree of Doctor of Philosophy University of New South Wales School of the Arts and Media Faculty of Arts and Social Sciences June 2013 ABSTRACT The main goal of this thesis is to gain a greater understanding of Chopin performance and interpretation, particularly in relation to tempo and rubato. This thesis is a comparative study between pianists who are associated with the Chopin tradition, primarily the Polish pianists of the early twentieth century, along with French pianists who are connected to Chopin via pedagogical lineage, and several modern pianists playing on period instruments. Through a detailed analysis of tempo and rubato in selected recordings, this thesis will explore the notions of tradition and individuality in Chopin playing, based on principles of pianism and pedagogy that emerge in Chopin’s writings, his composition, and his students’ accounts. Many pianists and teachers assume that a tradition in playing Chopin exists but the basis for this notion is often not made clear. Certain pianists are considered part of the Chopin tradition because of their indirect pedagogical connection to Chopin. I will investigate claims about tradition in Chopin playing in relation to tempo and rubato and highlight similarities and differences in the playing of pianists of the same or different nationality, pedagogical line or era. I will reveal how the literature on Chopin’s principles regarding tempo and rubato relates to any common or unique traits found in selected recordings. -

Pipa by Moshe Denburg.Pdf

Pipa. Description: A pear-shaped lute with 4 strings and 19 to 30 frets, it was introduced into China in the 4th century AD. The Pipa has become a prominent Chinese instrument used for instrumental music as well as accompaniment to a variety of song genres. It has a ringing ('bass-banjo' like) sound which articulates melodies and rhythms wonderfully and is capable of a wide variety of techniques and ornaments. Tuning: The pipa is tuned, from highest (string #1) to lowest (string #4): a - e - d - A. In piano notation these notes correspond to: A37 - E32 - D30 - A25 (where A37 is the A below middle C). Scordatura: As with many stringed instruments, scordatura may be possible, but one needs to consult with the musician about it. Use of a capo is not part of the pipa tradition, though one may inquire as to its efficacy. Pipa Notation: One can utilize western notation or Chinese. If western notation is utilized, many, if not all, Chinese musicians will annotate the music in Chinese notation, since this is their first choice. It may work well for the composer to notate in the western 5-line staff and add the Chinese numbers to it for them. This may be laborious, and it is not necessary for Chinese musicians, who are quite adept at both systems. In western notation one writes for the Pipa at pitch, utilizing the bass and treble clefs. In Chinese notation one utilizes the French Chevé number system (see entry: Chinese Notation). In traditional pipa notation there are many symbols that are utilized to call for specific techniques.
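The piano key numbers quoted in the tuning above can be checked with a few lines of Python. This is only a sketch: it assumes the usual 88-key numbering (key 1 = A0, key 40 = middle C, key 49 = A4), twelve-tone equal temperament and A4 = 440 Hz, none of which the excerpt states, and the function names are hypothetical.

```python
# Sketch only: assumes 88-key piano numbering (key 1 = A0, key 49 = A4),
# equal temperament and A4 = 440 Hz. These are assumptions, not from the excerpt.
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def key_to_midi(key: int) -> int:
    """Convert a piano key number (1-88) to a MIDI note number."""
    return key + 20


def key_to_name(key: int) -> str:
    """Return the scientific pitch name (e.g. 'A3') for a piano key number."""
    midi = key_to_midi(key)
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"


def key_to_frequency(key: int, a4_hz: float = 440.0) -> float:
    """Return the equal-tempered frequency in Hz for a piano key number."""
    return a4_hz * 2 ** ((key - 49) / 12)


# Pipa open strings from highest (string 1) to lowest (string 4),
# using the key numbers given in the excerpt.
PIPA_TUNING = [37, 32, 30, 25]

if __name__ == "__main__":
    for string_number, key in enumerate(PIPA_TUNING, start=1):
        print(string_number, key_to_name(key), round(key_to_frequency(key), 2))
```

Under these assumptions the four keys come out as A3, E3, D3 and A2, which agrees with the a - e - d - A tuning and with key 37 being the A below middle C.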

Unicode Technical Note: Byzantine Musical Notation

Unicode Technical Note: Byzantine Musical Notation. Version 1.0: January 2005. Nick Nicholas. This note documents the practice of Byzantine Musical Notation in its various forms, as an aid for implementors using its Unicode encoding. The note contains a good deal of background information on Byzantine musical theory, some of which is not readily available in English; this helps to make sense of why the notation is the way it is. 1. Preliminaries 1.1. Kinds of Notation. Byzantine music is a cover term for the liturgical music used in the Orthodox Church within the Byzantine Empire and the Churches regarded as continuing that tradition. This music is monophonic (with drone notes), exclusively vocal, and almost entirely sacred: very little secular music of this kind has been preserved, although we know that court ceremonial music in Byzantium was similar to the sacred. Byzantine music is accepted to have originated in the liturgical music of the Levant, and in particular Syriac and Jewish music. The extent of continuity between ancient Greek and Byzantine music is unclear, and an issue subject to emotive responses. The same holds for the extent of continuity between Byzantine music proper and the liturgical music in contemporary use, i.e. to what extent Ottoman influences have displaced the earlier Byzantine foundation of the music. There are two kinds of Byzantine musical notation. The earlier ecphonetic (recitative) style was used to notate the recitation of lessons (readings from the Bible). It probably was introduced in the late 4th century, is attested from the 8th, and was increasingly confused until the 15th century, when it passed out of use.

GUIDO Additional Specifications

The GUIDO Music Notation Format Version 1.3.8: Additional and New Specifications. Grame 2009/08. Contents: 1. Articulations 1.1 Fermatas 1.2 Pizzicati 1.3 Staccato / staccatissimo 1.4 Harmonics 2. Ornaments 2.1 Trill 2.2 Mordents 2.3 Turn. 1. Articulations 1.1 Fermatas: \fermata indicates a fermata at the current metric position (for use between notes) \fermata(notes) indicates

Standard Music Font Layout

SMuFL Standard Music Font Layout Version 0.5 (2013-07-12) Copyright © 2013 Steinberg Media Technologies GmbH Acknowledgements This document reproduces glyphs from the Bravura font, copyright © Steinberg Media Technologies GmbH. Bravura is released under the SIL Open Font License and can be downloaded from http://www.smufl.org/fonts This document also reproduces glyphs from the Sagittal font, copyright © George Secor and David Keenan. Sagittal is released under the SIL Open Font License and can be downloaded from http://sagittal.org This document also currently reproduces some glyphs from the Unicode 6.2 code chart for the Musical Symbols range (http://www.unicode.org/charts/PDF/U1D100.pdf). These glyphs are the copyright of their respective copyright holders, listed on the Unicode Consortium web site here: http://www.unicode.org/charts/fonts.html 2 Version history Version 0.1 (2013-01-31) § Initial version. Version 0.2 (2013-02-08) § Added Tick barline (U+E036). § Changed names of time signature, tuplet and figured bass digit glyphs to ensure that they are unique. § Add upside-down and reversed G, F and C clefs for canzicrans and inverted canons (U+E074–U+E078). § Added Time signature + (U+E08C) and Time signature fraction slash (U+E08D) glyphs. § Added Black diamond notehead (U+E0BC), White diamond notehead (U+E0BD), Half-filled diamond notehead (U+E0BE), Black circled notehead (U+E0BF), White circled notehead (U+E0C0) glyphs. § Added 256th and 512th note glyphs (U+E110–U+E113). § All symbols shown on combining stems now also exist as separate symbols. § Added reversed sharp, natural, double flat and inverted flat and double flat glyphs (U+E172–U+E176) for canzicrans and inverted canons. -

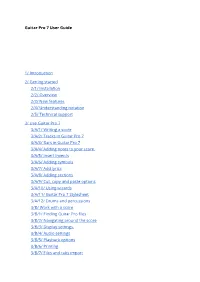

Guitar Pro 7 User Guide 1/ Introduction 2/ Getting Started

Guitar Pro 7 User Guide 1/ Introduction 2/ Getting started 2/1/ Installation 2/2/ Overview 2/3/ New features 2/4/ Understanding notation 2/5/ Technical support 3/ Use Guitar Pro 7 3/A/1/ Writing a score 3/A/2/ Tracks in Guitar Pro 7 3/A/3/ Bars in Guitar Pro 7 3/A/4/ Adding notes to your score 3/A/5/ Insert events 3/A/6/ Adding symbols 3/A/7/ Add lyrics 3/A/8/ Adding sections 3/A/9/ Cut, copy and paste options 3/A/10/ Using wizards 3/A/11/ Guitar Pro 7 Stylesheet 3/A/12/ Drums and percussions 3/B/ Work with a score 3/B/1/ Finding Guitar Pro files 3/B/2/ Navigating around the score 3/B/3/ Display settings 3/B/4/ Audio settings 3/B/5/ Playback options 3/B/6/ Printing 3/B/7/ Files and tabs import 4/ Tools 4/1/ Chord diagrams 4/2/ Scales 4/3/ Virtual instruments 4/4/ Polyphonic tuner 4/5/ Metronome 4/6/ MIDI capture 4/7/ Line In 4/8/ File protection 5/ mySongBook 1/ Introduction Welcome! You just purchased Guitar Pro 7, congratulations and welcome to the Guitar Pro family! Guitar Pro is back with its best version yet. Faster, stronger and modernised, Guitar Pro 7 offers you many new features. Whether you are a longtime Guitar Pro user or a new user, you will find all the necessary information in this user guide to make the best out of Guitar Pro 7. 2/ Getting started 2/1/ Installation 2/1/1/ MINIMUM SYSTEM REQUIREMENTS macOS X 10.10 / Windows 7 (32 or 64-Bit); Dual-core CPU with 4 GB RAM; 2 GB of free HD space; 960x720 display; OS-compatible audio hardware; DVD-ROM drive or internet connection required to download the software 2/1/2/ Installation on Windows Installation from the Guitar Pro website: You can easily download Guitar Pro 7 from our website via this link: https://www.guitar-pro.com/en/index.php?pg=download Once the trial version is downloaded, upgrade it to the full version by entering your licence number into your activation window.

Performing Chinese Contemporary Art Song

Performing Chinese Contemporary Art Song: A Portfolio of Recordings and Exegesis Qing (Lily) Chang Submitted in fulfilment of the requirements for the degree of Doctor of Philosophy Elder Conservatorium of Music Faculty of Arts The University of Adelaide July 2017 Table of contents Abstract Declaration Acknowledgements List of tables and figures Part A: Sound recordings Contents of CD 1 Contents of CD 2 Contents of CD 3 Contents of CD 4 Part B: Exegesis Introduction Chapter 1 Historical context 1.1 History of Chinese art song 1.2 Definitions of Chinese contemporary art song Chapter 2 Performing Chinese contemporary art song 2.1 Singing Chinese contemporary art song 2.2 Vocal techniques for performing Chinese contemporary art song 2.3 Various vocal styles for performing Chinese contemporary art song 2.4 Techniques for staging presentations of Chinese contemporary art song i Chapter 3 Exploring how to interpret ornamentations 3.1 Types of frequently used ornaments and their use in Chinese contemporary art song 3.2 How to use ornamentation to match the four tones of Chinese pronunciation Chapter 4 Four case studies 4.1 The Hunchback of Notre Dame by Shang Deyi 4.2 I Love This Land by Lu Zaiyi 4.3 Lullaby by Shi Guangnan 4.4 Autumn, Pamir, How Beautiful My Hometown Is! by Zheng Qiufeng Conclusion References Appendices Appendix A: Romanized Chinese and English translations of 56 Chinese contemporary art songs Appendix B: Text of commentary for 56 Chinese contemporary art songs Appendix C: Performing Chinese contemporary art song: Scores of repertoire for examination Appendix D: University of Adelaide Ethics Approval Number H-2014-184 ii NOTE: 4 CDs containing 'Recorded Performances' are included with the print copy of the thesis held in the University of Adelaide Library. -

Lilypond Music Glossary

LilyPond: The music typesetter. Music Glossary. The LilyPond development team. This glossary provides definitions and translations of musical terms used in the documentation manuals for LilyPond version 2.21.82. For more information about how this manual fits with the other documentation, or to read this manual in other formats, see Section “Manuals” in General Information. If you are missing any manuals, the complete documentation can be found at http://lilypond.org/. Copyright © 1999–2020 by the authors. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; with no Invariant Sections. A copy of the license is included in the section entitled “GNU Free Documentation License”. 1 Musical terms A-Z. Languages in this order. • UK - British English (where it differs from American English) • ES - Spanish • I - Italian • F - French • D - German • NL - Dutch • DK - Danish • S - Swedish • FI - Finnish 1.1 A • ES: la • I: la • F: la • D: A, a • NL: a • DK: a • S: a • FI: A, a See also Chapter 3 [Pitch names], page 87. 1.2 a due ES: a dos, I: a due, F: à deux, D: ?, NL: ?, DK: ?, S: ?, FI: kahdelle. Abbreviated a2 or a 2. In orchestral scores, a due indicates that: 1. A single part notated on a single staff that normally carries parts for two players (e.g. first and second oboes) is to be played by both players.

Dorico 3.1.10 Version History

Version history. Known issues & solutions. February 2020. Steinberg Media Technologies GmbH. Contents: Dorico 3.1.10: Issues resolved. Dorico 3.1: New features; Condensing changes; Dynamics lane; Bracketed noteheads; Horizontal