Improved Linear Classifier Model with Nyström

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications

-

Archaeological Observation on the Exploration of Chu Capitals

Archaeological Observation on the Exploration of Chu Capitals Wang Hongxing Key words: Chu Capitals Danyang Ying Chenying Shouying According to accurate historical documents, the capi- In view of the recent research on the civilization pro- tals of Chu State include Danyang 丹阳 of the early stage, cess of the middle reach of Yangtze River, we may infer Ying 郢 of the middle stage and Chenying 陈郢 and that Danyang ought to be a central settlement among a Shouying 寿郢 of the late stage. Archaeologically group of settlements not far away from Jingshan 荆山 speaking, Chenying and Shouying are traceable while with rice as the main crop. No matter whether there are the locations of Danyang and Yingdu 郢都 are still any remains of fosses around the central settlement, its oblivious and scholars differ on this issue. Since Chu area must be larger than ordinary sites and be of higher capitals are the political, economical and cultural cen- scale and have public amenities such as large buildings ters of Chu State, the research on Chu capitals directly or altars. The site ought to have definite functional sec- affects further study of Chu culture. tions and the cemetery ought to be divided into that of Based on previous research, I intend to summarize the aristocracy and the plebeians. The relevant docu- the exploration of Danyang, Yingdu and Shouying in ments and the unearthed inscriptions on tortoise shells recent years, review the insufficiency of the former re- from Zhouyuan 周原 saying “the viscount of Chu search and current methods and advance some personal (actually the ruler of Chu) came to inform” indicate that opinion on the locations of Chu capitals and later explo- Zhou had frequent contact and exchange with Chu. -

The History of Holt Cheng Starts 88Th

The Very Beginning (written with great honor by cousin Basilio Chen 鄭/郑华树) The Roots Chang Kee traces his family roots as the 87th descendant of Duke Huan of Zheng (鄭桓公), thus posthumorously, Dr. Holt Cheng is referred to in the ancient family genealogical tradition Duke Holt Cheng, descendant of the royal family Zhou (周) from the Western Zhou Dynasty. The roots and family history of Chang Kee starts over 2,800 years ago in the Zhou Dynasty (周朝) when King Xuan (周宣王, 841 BC - 781 BC), the eleventh King of the Zhou Dynasty, made his younger brother Ji You (姬友, 806 BC-771 BC) the Duke of Zheng, establishing what would be the last bastion of Western Zhou (西周朝) and at the same time establishing the first person to adopt the surname Zheng (also Romanized as Cheng in Wades-Giles Dictionary of Pronunciation). The surname Zheng (鄭) which means "serious" or " solemn", is also unique in that is the only few surname that also has a City-State name associated it, Zhengzhou city (鄭國 or鄭州in modern times). Thus, the State of Zheng (鄭國) was officially established by the first Zheng (鄭,) Duke Huan of Zheng (鄭桓公), in 806 BC as a city-state in the middle of ancient China, modern Henan Province. Its ruling house had the surname Ji (姬), making them a branch of the Zhou royal house, and were given the rank of bo (伯,爵), corresponding roughly to an earl. Later, this branch adopted officially the surname Zheng (鄭) and thus Ji You (or Earl Ji You, as it would refer to in royal title) was known posthumously as Duke Huan of Zheng (鄭桓公) becoming the first person to adopt the family surname of Zheng (鄭), Chang Kee’s family name in Chinese. -

Table of Contents (PDF)

Cancer Prevention Research Table of Contents June 2017 * Volume 10 * Number 6 RESEARCH ARTICLES 355 Combined Genetic Biomarkers and Betel Quid Chewing for Identifying High-Risk Group for 319 Statin Use, Serum Lipids, and Prostate Oral Cancer Occurrence Inflammation in Men with a Negative Prostate Chia-Min Chung, Chien-Hung Lee, Mu-Kuan Chen, Biopsy: Results from the REDUCE Trial Ka-Wo Lee, Cheng-Che E. Lan, Aij-Lie Kwan, Emma H. Allott, Lauren E. Howard, Adriana C. Vidal, Ming-Hsui Tsai, and Ying-Chin Ko Daniel M. Moreira, Ramiro Castro-Santamaria, Gerald L. Andriole, and Stephen J. Freedland 363 A Presurgical Study of Lecithin Formulation of Green Tea Extract in Women with Early 327 Sleep Duration across the Adult Lifecourse and Breast Cancer Risk of Lung Cancer Mortality: A Cohort Study in Matteo Lazzeroni, Aliana Guerrieri-Gonzaga, Xuanwei, China Sara Gandini, Harriet Johansson, Davide Serrano, Jason Y. Wong, Bryan A. Bassig, Roel Vermeulen, Wei Hu, Massimiliano Cazzaniga, Valentina Aristarco, Bofu Ning, Wei Jie Seow, Bu-Tian Ji, Debora Macis, Serena Mora, Pietro Caldarella, George S. Downward, Hormuzd A. Katki, Gianmatteo Pagani, Giancarlo Pruneri, Antonella Riva, Francesco Barone-Adesi, Nathaniel Rothman, Giovanna Petrangolini, Paolo Morazzoni, Robert S. Chapman, and Qing Lan Andrea DeCensi, and Bernardo Bonanni 337 Bitter Melon Enhances Natural Killer–Mediated Toxicity against Head and Neck Cancer Cells Sourav Bhattacharya, Naoshad Muhammad, CORRECTION Robert Steele, Jacki Kornbluth, and Ratna B. Ray 371 Correction: New Perspectives of Curcumin 345 Bioactivity of Oral Linaclotide in Human in Cancer Prevention Colorectum for Cancer Chemoprevention David S. Weinberg, Jieru E. Lin, Nathan R. -

Originally, the Descendants of Hua Xia Were Not the Descendants of Yan Huang

E-Leader Brno 2019 Originally, the Descendants of Hua Xia were not the Descendants of Yan Huang Soleilmavis Liu, Activist Peacepink, Yantai, Shandong, China Many Chinese people claimed that they are descendants of Yan Huang, while claiming that they are descendants of Hua Xia. (Yan refers to Yan Di, Huang refers to Huang Di and Xia refers to the Xia Dynasty). Are these true or false? We will find out from Shanhaijing ’s records and modern archaeological discoveries. Abstract Shanhaijing (Classic of Mountains and Seas ) records many ancient groups of people in Neolithic China. The five biggest were: Yan Di, Huang Di, Zhuan Xu, Di Jun and Shao Hao. These were not only the names of groups, but also the names of individuals, who were regarded by many groups as common male ancestors. These groups first lived in the Pamirs Plateau, soon gathered in the north of the Tibetan Plateau and west of the Qinghai Lake and learned from each other advanced sciences and technologies, later spread out to other places of China and built their unique ancient cultures during the Neolithic Age. The Yan Di’s offspring spread out to the west of the Taklamakan Desert;The Huang Di’s offspring spread out to the north of the Chishui River, Tianshan Mountains and further northern and northeastern areas;The Di Jun’s and Shao Hao’s offspring spread out to the middle and lower reaches of the Yellow River, where the Di Jun’s offspring lived in the west of the Shao Hao’s territories, which were near the sea or in the Shandong Peninsula.Modern archaeological discoveries have revealed the authenticity of Shanhaijing ’s records. -

«Iiteiii«T(M I Ie

THE ST. JdfflJS NEWS. VOLUME XVIU—Na 38. THE ST. JOHNS ^NEWS, THURSDAY AFTERNOON. Maj 2. PAGES. ONE DOUJM A TBAB. BURIED LAST FRIDAY IMV M me SOCIAL EVENTS mSTALUTNW BONN lDMRi m I Hl VimKR.\i« HKRVIC’ES <IF l^\TIC NAI>PE:NIN€4H tiE THE WEEK IN MELD TFKSDAY BY 'THE NATIUK- I IE MlUi. .RARV Mc<liKK. IN HT. 4€»HNH WMTETl'. FUKIlin .AL PRflTKCTYVK UBOfON. lit*. Mftry A. McKee, whose death \l4»Hk ON THE CHAPMAN P.YC- MUs Anna Kyan and Miss MInnis AIMIII. KNDN BTITN THt^NDMR The new officers of the local lodge YKAK IMY WWUi MB OD April It. was related In last week's Harrinston entertained the teachers of National ProtectIre Laglon were C'RliUNAL AND CIVIL MA*] 111 m. JOHNS. laana, buried Friday. The fun- 1(;||Y TO BE ItCMHEII. of the city schiMds PrUlay evenInK In NWIRM AND PRRieBE. Installed Tuesday eeenlng by suite OP THE PART WEB erml aanrfces were conducted at the a ver>' pleasant an«l nx»vel manner. deputy J. It. Wyckoff of Grand Rap raaUMlce of her eon K. M> K«*e by At 7:10 the guests all In costume as ids as follows: Raw. O. 8. Northrop, the Iniermeni be- sembled at the home of Ml«s Ityan. Past president. Gserge O. Wilson: READY m THE HDUDAYS Um m*de In the Victor cemetery be- BY THE FIRST OF HIRE corner of Ottawa gnd Baldwin streets, THE COLDEST IN » YEARS pres.. Rosetta M. Drake; rice pram., it. NNSm B OONNi OVBt akla her husl»and who |uiee«-«l swax where they were ushered Into a room Herton K, Clark; eec'y. -

Official Colours of Chinese Regimes: a Panchronic Philological Study with Historical Accounts of China

TRAMES, 2012, 16(66/61), 3, 237–285 OFFICIAL COLOURS OF CHINESE REGIMES: A PANCHRONIC PHILOLOGICAL STUDY WITH HISTORICAL ACCOUNTS OF CHINA Jingyi Gao Institute of the Estonian Language, University of Tartu, and Tallinn University Abstract. The paper reports a panchronic philological study on the official colours of Chinese regimes. The historical accounts of the Chinese regimes are introduced. The official colours are summarised with philological references of archaic texts. Remarkably, it has been suggested that the official colours of the most ancient regimes should be the three primitive colours: (1) white-yellow, (2) black-grue yellow, and (3) red-yellow, instead of the simple colours. There were inconsistent historical records on the official colours of the most ancient regimes because the composite colour categories had been split. It has solved the historical problem with the linguistic theory of composite colour categories. Besides, it is concluded how the official colours were determined: At first, the official colour might be naturally determined according to the substance of the ruling population. There might be three groups of people in the Far East. (1) The developed hunter gatherers with livestock preferred the white-yellow colour of milk. (2) The farmers preferred the red-yellow colour of sun and fire. (3) The herders preferred the black-grue-yellow colour of water bodies. Later, after the Han-Chinese consolidation, the official colour could be politically determined according to the main property of the five elements in Sino-metaphysics. The red colour has been predominate in China for many reasons. Keywords: colour symbolism, official colours, national colours, five elements, philology, Chinese history, Chinese language, etymology, basic colour terms DOI: 10.3176/tr.2012.3.03 1. -

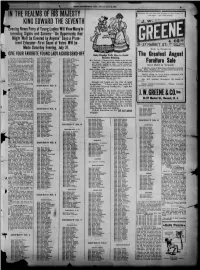

1909-07-26 [P

■ ■ ■ — —— 1 »» mmtmmmmm July 10th, store closed Saturdays at noon, during July ΠΝ the realms of his majesty (Beginning and August. Open Friday evenings. KINC EDWARD THE SEVENTH Evening News Party of Young Ladies Will View Many In- i teresting Sights and Scenes—"An Opportunity that Might Wei be Coveted by Anyone" Says a Prom- inent Educator-First Count of Votes Will be Made Saturday Evening, July 31. Now in Progress L GIVE YOUR FAVORITE YOUNG LADY ACOODSEND-OFF Anty Drudge Tells How to Avoid The Greatest August The plan of the EVENING NEWS IMss Ingabord Oksen Mies Lyda Lyttle Sunday Soaking. to send abroad tea young ladies for Miss Charlotte Law Mise Margaret Williams Mrs. Hurryup—"I always put my clothes to soak on Sun- a trip to the tropics continues to ex- Miss Tina Friedman Mira Nellie Knott cite comment throughout the city Miss Florence Gassmati Misa Ιλο Reed day night. Then I get an early start on Monday and Furniture Sale and county. Miss Florence Sofleld Mise May Ludwlg get through washing by noon. I don't consider it The offer seems so generous and Miss Maude Sofleld Miss Beatrice William· Ever Held in Newark breaking the for cleanliness is next to the plan so praiseworthy that, as the Miss Lulu Dunham Mies Mabel Corson Sabbath, god- features come more and more gener- Mies Louise Dover Miss Anna Fountain liness, you know." A stock of Grade known the venture Miss Emma Fraser Mies Grace Braden gigantic High Furnittfre, Carpets, ally the success of 'Anty Drudge—"Yee, but godliness comes first, my dear. -

Yinghua (Andy) Jin, Phd

Yinghua (Andy) Jin, PhD. Address: Contact information: 833 Skyline Dr. Phone: (001) 650-889-9433 Daly City, CA 94015 E-mail: [email protected] USA or [email protected] _______________________________________________________________________ Education: Ph.D., Public Economics, Georgia State University (Atlanta, GA, USA) 2010 M.A., Economics, Georgia State University (Atlanta, GA, USA) 2005 B.A., Trade Economics, Nanjing University of Finance and Economics (Nanjing, Jiangsu, China) 1996 Work Experiences: Assistant Professor, Assistant to Dean, Zhongnan University of Economics & Law, China 07/2013-07/2016 Visiting Assistant Professor, Georgia Southern University, GA, USA 08/2010-06/2013 Economic Analyst Intern, Cobb County Superior Court, Marietta, GA, USA 05-08/2004 Teaching and Research Interests: Principle and Intermediate levels of Macro- and Micro-Economics, Public Finance, State and Local Public Finance, Development Economics, General Theory of Economics, Chinese Economy Peer-reviewed Publications: "Fiscal Decentralization and China's Regional Infant Mortality" (with Gregory Brock and Tong Zeng). Journal of Policy Modeling. 2015. 37(2). 175-188. "Fiscal Decentralization and Horizontal Fiscal Inequality in China: New Evidence from the Metropolitan Areas" (with Leng Ling, Hongfeng Peng and Pingping Song). The Chinese Economy. 2013.46(3).6-22. "The Evolution of Fiscal Decentralization in China and India: A Comparative Study of Design and Performance" (with Jenny Ligthart and Mark Rider). Journal of Emerging Knowledge on Emerging Markets. 2011 (November). Volume 3. 553-580. 1 "Does Fiscal Decentralization Improve Healthcare Outcomes? Empirical Evidence from China" (with Rui Sun). Public Finance and Management. 2011. 11(3). 234-261. Working Papers in Progress: . Did FDI Really Cause Chinese Economic Growth? A Meta Analysis (with Philip Gunby and W. -

![[Hal-00921702, V2] Targeted Update -- Aggressive](https://docslib.b-cdn.net/cover/2740/hal-00921702-v2-targeted-update-aggressive-622740.webp)

[Hal-00921702, V2] Targeted Update -- Aggressive

Author manuscript, published in "ESOP - 23rd European Symposium on Programming - 2014 (2014)" Targeted Update | Aggressive Memory Abstraction Beyond Common Sense and its Application on Static Numeric Analysis Zhoulai Fu ? IMDEA Software Abstract. Summarizing techniques are widely used in the reasoning of unbounded data structures. These techniques prohibit strong update unless certain restricted safety conditions are satisfied. We find that by setting and enforcing the analysis boundaries to a limited scope of pro- gram identifiers, called targets in this paper, more cases of strong update can be shown sound, not with regard to the entire heap, but with regard to the targets. We have implemented the analysis for inferring numeric properties in Java programs. The experimental results show a tangible precision enhancement compared with classical approaches while pre- serving a high scalability. Keywords: abstract interpretation, points-to analysis, abstract numeric do- main, abstract semantics, strong update 1 Introduction Static analysis of heap-manipulating programs has received much attention due to its fundamental role supporting a growing list of other analyses (Blanchet et al., 2003b; Chen et al., 2003; Fink et al., 2008). Summarizing techniques, where the heap is partitioned into finite groups,can manipulate unbounded data struc- tures through summarized dimensions (Gopan et al., 2004). These techniques hal-00921702, version 2 - 23 Jan 2014 have many possible uses in heap analyses, such as points-to analysis (Emami et al., 1994) and TVLA (Lev-Ami and Sagiv, 2000), and also have been inves- tigated as a basis underpinning the extension of classic numeric abstract do- mains to pointer-aware programs (Fu, 2013). -

Bofutsushosan Improves Gut Barrier Function with a Bloom Of

www.nature.com/scientificreports OPEN Bofutsushosan improves gut barrier function with a bloom of Akkermansia muciniphila and improves glucose metabolism in mice with diet-induced obesity Shiho Fujisaka1*, Isao Usui1,2, Allah Nawaz1,3, Yoshiko Igarashi1, Keisuke Okabe1,4, Yukihiro Furusawa5, Shiro Watanabe6, Seiji Yamamoto7, Masakiyo Sasahara7, Yoshiyuki Watanabe1, Yoshinori Nagai8, Kunimasa Yagi1, Takashi Nakagawa 3 & Kazuyuki Tobe1* Obesity and insulin resistance are associated with dysbiosis of the gut microbiota and impaired intestinal barrier function. Herein, we report that Bofutsushosan (BFT), a Japanese herbal medicine, Kampo, which has been clinically used for constipation in Asian countries, ameliorates glucose metabolism in mice with diet–induced obesity. A 16S rRNA sequence analysis of fecal samples showed that BFT dramatically increased the relative abundance of Verrucomicrobia, which was mainly associated with a bloom of Akkermansia muciniphila (AKK). BFT decreased the gut permeability as assessed by FITC-dextran gavage assay, associated with increased expression of tight-junction related protein, claudin-1, in the colon. The BFT treatment group also showed signifcant decreases of the plasma endotoxin level and expression of the hepatic lipopolysaccharide-binding protein. Antibiotic treatment abrogated the metabolic efects of BFT. Moreover, many of these changes could be reproduced when the cecal contents of BFT-treated donors were transferred to antibiotic-pretreated high fat diet-fed mice. These data demonstrate that BFT modifes the gut microbiota with an increase in AKK, which may contribute to improving gut barrier function and preventing metabolic endotoxemia, leading to attenuation of diet-induced infammation and glucose intolerance. Understanding the interaction between a medicine and the gut microbiota may provide insights into new pharmacological targets to improve glucose metabolism. -

Proof of Investors' Binding Borrowing Constraint Appendix 2: System Of

Appendix 1: Proof of Investors’ Binding Borrowing Constraint PROOF: Use the Kuhn-Tucker condition to check whether the collateral constraint is binding. We have h I RI I mt[mt pt ht + ht − bt ] = 0 If (11) is not binding, then mt = 0: We can write the investor’s FOC Equation (18) as: I I I I h I I I I i Ut;cI ct ;ht ;nt = bIEt (1 + it)Ut+1;cI ct+1;ht+1;nt+1 (42) At steady state, we have bI (1 + i) = 1 However from (6); we know bR (1 + i) = 1 at steady state. With parameter restrictions that bR > bI; therefore bI (1 + i) < 1; contradiction. Therefore we cannot have mt = 0: Therefore, mt > 0; and I h I RI thus we have bt = mt pt ht + ht : Q.E.D. Appendix 2: System of Steady-State Conditions This appendix lays out the system of equilibrium conditions in steady state. Y cR + prhR = + idR (43) N R R R r R R R UhR c ;h = pt UcR c ;h (44) R R R R R R UnR c ;h = −WUcR c ;h (45) 1 = bR(1 + i) (46) Y cI + phd hI + hRI + ibI = + I + prhRI (47) t N I I I h I I I h [1 − bI (1 − d)]UcI c ;h p = UhI c ;h + mmp (48) I I I h I I I r h [1 − bI (1 − d)]UcI c ;h p = UcI c ;h p + mmp (49) I I I [1 − bI (1 + i)]UcI c ;h = m (50) bI = mphhI (51) 26 ©International Monetary Fund. -

The Later Han Empire (25-220CE) & Its Northwestern Frontier

University of Pennsylvania ScholarlyCommons Publicly Accessible Penn Dissertations 2012 Dynamics of Disintegration: The Later Han Empire (25-220CE) & Its Northwestern Frontier Wai Kit Wicky Tse University of Pennsylvania, [email protected] Follow this and additional works at: https://repository.upenn.edu/edissertations Part of the Asian History Commons, Asian Studies Commons, and the Military History Commons Recommended Citation Tse, Wai Kit Wicky, "Dynamics of Disintegration: The Later Han Empire (25-220CE) & Its Northwestern Frontier" (2012). Publicly Accessible Penn Dissertations. 589. https://repository.upenn.edu/edissertations/589 This paper is posted at ScholarlyCommons. https://repository.upenn.edu/edissertations/589 For more information, please contact [email protected]. Dynamics of Disintegration: The Later Han Empire (25-220CE) & Its Northwestern Frontier Abstract As a frontier region of the Qin-Han (221BCE-220CE) empire, the northwest was a new territory to the Chinese realm. Until the Later Han (25-220CE) times, some portions of the northwestern region had only been part of imperial soil for one hundred years. Its coalescence into the Chinese empire was a product of long-term expansion and conquest, which arguably defined the egionr 's military nature. Furthermore, in the harsh natural environment of the region, only tough people could survive, and unsurprisingly, the region fostered vigorous warriors. Mixed culture and multi-ethnicity featured prominently in this highly militarized frontier society, which contrasted sharply with the imperial center that promoted unified cultural values and stood in the way of a greater degree of transregional integration. As this project shows, it was the northwesterners who went through a process of political peripheralization during the Later Han times played a harbinger role of the disintegration of the empire and eventually led to the breakdown of the early imperial system in Chinese history.