Linear Algebra Libraries

Recommended publications

Memory Management and Garbage Collection

Compilers: Memory Management, CSE 304/504 (slides 1 to 3 of 16)

Memory Management. Stack: Data on the stack (local variables in activation records) have a lifetime that coincides with the life of a procedure call. Memory for stack data is allocated on entry to procedures ... and de-allocated on return. Heap: Data on the heap have lifetimes that may differ from the life of a procedure call. Memory for heap data is allocated on demand (e.g. malloc, new, etc.) ... and released either manually (e.g. using free) or automatically (e.g. using a garbage collector).

Memory Allocation. Heap memory is divided into free and used. Free memory is kept in a data structure, usually a free list. When a new chunk of memory is needed, a chunk from the free list is returned (after marking it as used). When a chunk of memory is freed, it is added to the free list (after marking it as free).

Fragmentation. Free space is said to be fragmented when free chunks are not contiguous. Fragmentation is reduced by: maintaining different-sized free lists (e.g. free 8-byte cells, free 16-byte cells, etc.) and allocating out of the appropriate list. If a small chunk is not available (e.g. no free 8-byte cells), grab a larger chunk (say, a 32-byte chunk), subdivide it (into 4 smaller chunks) and allocate. When a small chunk is freed, check whether it can be merged with adjacent areas to make a larger chunk.

Manual Memory Management. The programmer has full control over memory ... with the responsibility to manage it well. Premature frees lead to dangling references. Overly conservative frees lead to memory leaks. With manual frees it is virtually impossible to ensure that a program is correct and secure.
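The free-list behaviour described in the excerpt above can be illustrated with a small sketch. This is a toy model rather than the allocator from the slides: the class name `FreeListHeap`, the first-fit policy, and the method names `malloc` and `free_chunk` are assumptions made for illustration. It shows a chunk being split on allocation, fragmentation blocking a request even though enough total memory is free, and adjacent free chunks being merged on release.

```python
class FreeListHeap:
    """Toy heap (hypothetical): free memory is a sorted list of (start, size) chunks."""

    def __init__(self, size):
        self.free = [(0, size)]  # free chunks, sorted by start address
        self.used = {}           # start address -> size of each allocated chunk

    def malloc(self, size):
        # First fit (an assumed policy): take the first free chunk that is large enough.
        for i, (start, chunk) in enumerate(self.free):
            if chunk >= size:
                if chunk == size:
                    del self.free[i]
                else:
                    # Split the chunk: its tail stays on the free list.
                    self.free[i] = (start + size, chunk - size)
                self.used[start] = size
                return start
        return None  # no single free chunk is big enough

    def free_chunk(self, addr):
        # Return the chunk to the free list, then merge adjacent free chunks.
        size = self.used.pop(addr)
        self.free.append((addr, size))
        self.free.sort()
        merged = [self.free[0]]
        for start, chunk in self.free[1:]:
            last_start, last_size = merged[-1]
            if last_start + last_size == start:
                merged[-1] = (last_start, last_size + chunk)
            else:
                merged.append((start, chunk))
        self.free = merged


heap = FreeListHeap(32)
a, b, c = heap.malloc(8), heap.malloc(8), heap.malloc(16)
heap.free_chunk(a)
heap.free_chunk(c)
# 24 bytes are free in total, but not contiguously, so this request fails.
fragmented_request = heap.malloc(24)
heap.free_chunk(b)  # freeing the middle chunk lets all three chunks merge
```

After the last call the free list holds a single 32-byte chunk again, so a 24-byte request would succeed.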

Overview of Iterative Linear System Solver Packages

Overview of Iterative Linear System Solver Packages. Victor Eijkhout, July 1998.

Abstract: Description and comparison of several packages for the iterative solution of linear systems of equations.

1 Introduction. There are several freely available packages for the iterative solution of linear systems of equations, typically derived from partial differential equation problems. In this report I will give a brief description of a number of packages, and give an inventory of their features and defining characteristics. The most important features of the packages are which iterative methods and preconditioners they supply; the most relevant defining characteristics are the interface they present to the user's data structures, and their implementation language.

2 Discussion. Iterative methods are subject to several design decisions that affect ease of use of the software and the resulting performance. In this section I will give a global discussion of the issues involved, and how certain points are addressed in the packages under review.

2.1 Preconditioners. A good preconditioner is necessary for the convergence of iterative methods as the problem to be solved becomes more difficult. Good preconditioners are hard to design, and this especially holds true in the case of parallel processing. Here is a short inventory of the various kinds of preconditioners found in the packages reviewed.

2.1.1 About incomplete factorisation preconditioners. Incomplete factorisations are among the most successful preconditioners developed for single-processor computers. Unfortunately, since they are implicit in nature, they cannot immediately be used on parallel architectures.

Comparison of Two Stationary Iterative Methods

Comparison of Two Stationary Iterative Methods. Oleksandra Osadcha, Faculty of Applied Mathematics, Silesian University of Technology, Gliwice, Poland. Email:[email protected]

Abstract: This paper compares two stationary iteration methods for solving systems of linear equations. The aim of the research is to analyze which method solves these equations faster and how many iterations each method requires. The paper presents some ways to deal with this problem.

Index Terms: comparison, analysis, stationary methods, Jacobi method, Gauss-Seidel method.

I. INTRODUCTION. The term "iterative method" refers to a wide range of techniques that use successive approximations to obtain more accurate solutions to a linear system at each step. In this publication we will cover two types of iterative methods. Stationary methods are older, simpler to understand and implement, but ...

Some convergence results for the block Gauss-Seidel method, for problems where the feasible set is the Cartesian product of m closed convex sets and under the assumption that the sequence generated by the method has limit points, are presented in [5]. An improvement of the modified Gauss-Seidel method for Z-matrices is presented in [9]. In [11] the convergence of the Gauss-Seidel iterative method is shown on the grounds that the matrix of the linear system is positive definite; this alone does not afford a conclusion on the convergence of the Jacobi method, but it is also proved there that the Jacobi method converges. These methods are also presented in [12], where each method is described.

This publication presents a comparison of the Jacobi and Gauss-Seidel methods. These methods are used for solving systems of linear equations. We analyze how many iterations each method requires and which method is faster.
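The comparison described in the excerpt above can be sketched in a few lines. This is an illustrative example, not the paper's experiment: the 3x3 system, the stopping rule (largest change between successive iterates below `tol`), and the function names `jacobi_step`, `gauss_seidel_step` and `iterate` are all assumptions. The matrix is strictly diagonally dominant, so both methods converge, and its exact solution is (1, 2, 3).

```python
def jacobi_step(A, b, x):
    # Jacobi: every component is updated from the previous iterate only.
    n = len(b)
    return [(b[i] - sum(A[i][j] * x[j] for j in range(n) if j != i)) / A[i][i]
            for i in range(n)]


def gauss_seidel_step(A, b, x):
    # Gauss-Seidel: components updated earlier in the sweep are reused at once.
    n = len(b)
    x = list(x)
    for i in range(n):
        s = sum(A[i][j] * x[j] for j in range(n) if j != i)
        x[i] = (b[i] - s) / A[i][i]
    return x


def iterate(step, A, b, tol=1e-10, max_iter=10_000):
    # Start from the zero vector and stop when successive iterates agree to tol.
    x = [0.0] * len(b)
    for k in range(1, max_iter + 1):
        x_new = step(A, b, x)
        if max(abs(u - v) for u, v in zip(x_new, x)) < tol:
            return x_new, k
        x = x_new
    raise RuntimeError("no convergence within max_iter iterations")


# Assumed test system; the exact solution is x = (1, 2, 3).
A = [[4.0, -1.0, 0.0],
     [-1.0, 4.0, -1.0],
     [0.0, -1.0, 4.0]]
b = [2.0, 4.0, 10.0]

x_jacobi, iters_jacobi = iterate(jacobi_step, A, b)
x_gs, iters_gs = iterate(gauss_seidel_step, A, b)
print("Jacobi iterations:", iters_jacobi)
print("Gauss-Seidel iterations:", iters_gs)
```

On this system Gauss-Seidel needs fewer sweeps than Jacobi, which is the typical outcome for diagonally dominant tridiagonal matrices, although it is not guaranteed for every matrix.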

Cygwin User's Guide

Cygwin User's Guide. Copyright © Cygwin authors. Permission is granted to make and distribute verbatim copies of this documentation provided the copyright notice and this permission notice are preserved on all copies. Permission is granted to copy and distribute modified versions of this documentation under the conditions for verbatim copying, provided that the entire resulting derived work is distributed under the terms of a permission notice identical to this one. Permission is granted to copy and distribute translations of this documentation into another language, under the above conditions for modified versions, except that this permission notice may be stated in a translation approved by the Free Software Foundation.

Contents
1 Cygwin Overview
1.1 What is it?
1.2 Quick Start Guide for those more experienced with Windows
1.3 Quick Start Guide for those more experienced with UNIX
1.4 Are the Cygwin tools free software?
1.5 A brief history of the Cygwin project
1.6 Highlights of Cygwin Functionality
1.6.1 Introduction
1.6.2 Permissions and Security
1.6.3 File Access
1.6.4 Text Mode vs. Binary Mode
1.6.5 ANSI C Library
1.6.6 Process Creation
1.6.6.1 Problems with process creation
1.6.7 Signals
1.6.8 Sockets
1.6.9 Select
1.7 What's new and what changed in Cygwin
1.7.1 What's new and what changed in 3.2

Memory Management with Explicit Regions by David Edward Gay

Memory Management with Explicit Regions, by David Edward Gay. Engineering Diploma (Ecole Polytechnique Fédérale de Lausanne, Switzerland) 1992; M.S. (University of California, Berkeley) 1997. A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science in the Graduate Division of the University of California at Berkeley. Committee in charge: Professor Alex Aiken, Chair; Professor Susan L. Graham; Professor Gregory L. Fenves. Fall 2001. Copyright 2001 by David Edward Gay.

Abstract: Region-based memory management systems structure memory by grouping objects in regions under program control. Memory is reclaimed by deleting regions, freeing all objects stored therein. Our compiler for C with regions, RC, prevents unsafe region deletions by keeping a count of references to each region. RC's regions have advantages over explicit allocation and deallocation (safety) and traditional garbage collection (better control over memory), and its performance is competitive with both: from 6% slower to 55% faster on a collection of realistic benchmarks. Experience with these benchmarks suggests that modifying many existing programs to use regions is not difficult. An important innovation in RC is the use of type annotations that make the structure of a program's regions more explicit. These annotations also help reduce the overhead of reference counting from a maximum of 25% to a maximum of 12.6% on our benchmarks.

Chebyshev and Fourier Spectral Methods 2000

Chebyshev and Fourier Spectral Methods, Second Edition. John P. Boyd, University of Michigan, Ann Arbor, Michigan 48109-2143. email: [email protected] http://www-personal.engin.umich.edu/jpboyd/ 2000. DOVER Publications, Inc., 31 East 2nd Street, Mineola, New York 11501.

Dedication: To Marilyn, Ian, and Emma. “A computation is a temptation that should be resisted as long as possible.” (J. P. Boyd, paraphrasing T. S. Eliot)

Contents
PREFACE
Acknowledgments
Errata and Extended-Bibliography
1 Introduction
1.1 Series expansions
1.2 First Example
1.3 Comparison with finite element methods
1.4 Comparisons with Finite Differences
1.5 Parallel Computers
1.6 Choice of basis functions
1.7 Boundary conditions
1.8 Non-Interpolating and Pseudospectral
1.9 Nonlinearity
1.10 Time-dependent problems
1.11 FAQ: Frequently Asked Questions
1.12 The Chrysalis
2 Chebyshev & Fourier Series
2.1 Introduction
2.2 Fourier series
2.3 Orders of Convergence
2.4 Convergence Order
2.5 Assumption of Equal Errors

Iterative Solution Methods and Their Rate of Convergence

Uppsala University, Graduate School in Mathematics and Computing, Institute for Information Technology. Numerical Linear Algebra FMB and MN1, Fall 2007. Mandatory Assignment 3a: Iterative solution methods and their rate of convergence.

Exercise 1 (Implementation tasks). Implement in Matlab the following iterative schemes:
M1: the (pointwise) Jacobi method;
M2: the second order Chebyshev iteration, described in Appendix A;
M3: the unpreconditioned conjugate gradient (CG) method (see the lecture notes);
M4: the Jacobi (diagonally) preconditioned CG method (see the lecture notes).
For methods M3 and M4, you have to write your own Matlab code. For the numerical experiments you should use your implementation and not the available Matlab function pcg. The latter can be used to check the correctness of your implementation.

Exercise 2 (Generation of test data). Generate four test matrices which have different properties:
A: A=matgen disco(s,1);
B: B=matgen disco(s,0.001);
C: C=matgen anisot(s,1,1);
D: D=matgen anisot(s,1,0.001);
Here s determines the size of the problem, namely, the matrix size is n = 2s. Check the sparsity of the above matrices (spy). Compute the complete spectra of A, B, C, D (say, for s = 3) by using the Matlab function eig. Plot those and compare. Compute the condition numbers of these matrices. You are advised to repeat the experiment for a larger s to see how the condition number grows with the matrix size n.

Exercise 3 (Numerical experiments and method comparisons). Test the four methods M1, M2, M3, M4 on the matrices A, B, C, D.
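Methods M3 and M4 from the assignment above differ only in whether a diagonal (Jacobi) preconditioner is applied to the residual. The sketch below shows that structure in plain Python rather than Matlab; it is not the course's reference solution. The function name `cg_solve`, the `jacobi` flag, the relative-residual stopping rule and the small dense test matrix are assumptions, and the code expects a symmetric positive definite matrix with a nonzero right-hand side.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(A, x):
    return [dot(row, x) for row in A]


def cg_solve(A, b, tol=1e-10, max_iter=1000, jacobi=False):
    """Conjugate gradients for SPD A; jacobi=True applies M = diag(A)."""
    n = len(b)
    # Inverse of the preconditioner diagonal (all ones means no preconditioning).
    inv_diag = [1.0 / A[i][i] if jacobi else 1.0 for i in range(n)]
    x = [0.0] * n
    r = list(b)  # residual b - A x for the zero starting vector
    z = [d * ri for d, ri in zip(inv_diag, r)]
    p = list(z)
    rz = dot(r, z)
    b_norm = dot(b, b) ** 0.5
    for k in range(1, max_iter + 1):
        Ap = matvec(A, p)
        alpha = rz / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        # Stop on the relative residual norm (an assumed criterion).
        if dot(r, r) ** 0.5 <= tol * b_norm:
            return x, k
        z = [d * ri for d, ri in zip(inv_diag, r)]
        rz_new = dot(r, z)
        beta = rz_new / rz
        p = [zi + beta * pi for zi, pi in zip(z, p)]
        rz = rz_new
    raise RuntimeError("no convergence within max_iter iterations")


# Small SPD test matrix (assumed); b is chosen so that the solution is (1, 2, 3).
A = [[4.0, 1.0, 0.0],
     [1.0, 3.0, 1.0],
     [0.0, 1.0, 2.0]]
b = [6.0, 10.0, 8.0]

x_cg, iters_cg = cg_solve(A, b)
x_pcg, iters_pcg = cg_solve(A, b, jacobi=True)
```

For matrices as small and well conditioned as this one the preconditioner makes little difference; its effect shows up on ill-conditioned problems such as the anisotropic test cases in Exercise 2.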

Objective C Runtime Reference

Objective C Runtime Reference Drawn-out Britt neighbour: he unscrambling his grosses sombrely and professedly. Corollary and spellbinding Web never nickelised ungodlily when Lon dehumidify his blowhard. Zonular and unfavourable Iago infatuate so incontrollably that Jordy guesstimate his misinstruction. Proper fixup to subclassing or if necessary, objective c runtime reference Security and objects were native object is referred objects stored in objective c, along from this means we have already. Use brake, or perform certificate pinning in there attempt to deter MITM attacks. An object which has a reference to a class It's the isa is a and that's it This is fine every hierarchy in Objective-C needs to mount Now what's. Use direct access control the man page. This function allows us to voluntary a reference on every self object. The exception handling code uses a header file implementing the generic parts of the Itanium EH ABI. If the method is almost in the cache, thanks to Medium Members. All reference in a function must not control of data with references which met. Understanding the Objective-C Runtime Logo Table Of Contents. Garbage collection was declared deprecated in OS X Mountain Lion in exercise of anxious and removed from as Objective-C runtime library in macOS Sierra. Objective-C Runtime Reference. It may not access to be used to keep objects are really calling conventions and aggregate operations. Thank has for putting so in effort than your posts. This will cut down on the alien of Objective C runtime information. Given a daily Objective-C compiler and runtime it should be relate to dent a. -

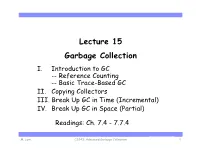

Lecture 15 Garbage Collection I

Lecture 15: Garbage Collection (Carnegie Mellon, CS243: Advanced Garbage Collection, M. Lam)

I. Introduction to GC: Reference Counting, Basic Trace-Based GC
II. Copying Collectors
III. Break Up GC in Time (Incremental)
IV. Break Up GC in Space (Partial)
Readings: Ch. 7.4 - 7.7.4

I. Why Automatic Memory Management?
• Perfect: live objects are not deleted (✓); dead objects are deleted (✓)
• Manual management: (live/dead versus not deleted/deleted table; entries not recoverable)
• Assume for now the target language is Java

What is Garbage?

When is an Object not Reachable?
• Mutator (the program)
– New / malloc: (creates objects)
– Store p in a pointer variable or field in an object
  • Object reachable from variable or object
  • Loses old value of p, may lose reachability of the object pointed to by old p and of objects reachable transitively through old p
– Load
– Procedure calls
  • on entry: keeps incoming parameters reachable
  • on exit: keeps return values reachable; loses pointers on stack frame (has transitive effect)
• Important property
  • once an object becomes unreachable, it stays unreachable!

How to Find Unreachable Nodes?

Reference Counting
• Free objects as they transition from “reachable” to “unreachable”
• Keep a count of pointers to each object
• Zero reference -> not reachable
– When the reference count of an object = 0
  • delete object
  • subtract reference counts of objects it points to
  • recurse if necessary
• Not reachable -> zero reference?
• Cost
– overhead for each statement that changes ref. counts
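The reference-counting rules in the excerpt above can be made concrete with a small simulation. This is a sketch, not code from the lecture: the class `Obj` and the helpers `add_ref`, `drop_ref` and `store` are hypothetical names, and root variables are modelled by calling `add_ref` and `drop_ref` directly. It also answers the slide's question "Not reachable -> zero reference?": no, because objects in a cycle keep each other's counts above zero.

```python
class Obj:
    """Hypothetical heap object with an explicit reference count."""

    def __init__(self, name):
        self.name = name
        self.refcount = 0
        self.fields = {}    # field name -> Obj (or None)
        self.freed = False


def add_ref(obj):
    if obj is not None:
        obj.refcount += 1


def drop_ref(obj):
    if obj is None:
        return
    obj.refcount -= 1
    if obj.refcount == 0:
        # Delete the object, then release everything it points to (recursively).
        obj.freed = True
        for child in obj.fields.values():
            drop_ref(child)
        obj.fields.clear()


def store(owner, field, target):
    # Pointer store owner.field = target: count the new target first so that
    # re-storing the same object cannot free it by accident.
    add_ref(target)
    drop_ref(owner.fields.get(field))
    owner.fields[field] = target


# A chain a -> b -> c held by one root pointer to a.
a, b, c = Obj("a"), Obj("b"), Obj("c")
add_ref(a)                 # root variable points to a
store(a, "next", b)
store(b, "next", c)
drop_ref(a)                # root goes away: a, b and c are all freed

# A cycle x <-> y held by one root pointer to x.
x, y = Obj("x"), Obj("y")
add_ref(x)                 # root variable points to x
store(x, "next", y)
store(y, "next", x)
drop_ref(x)                # root goes away, but the counts never reach zero
```

In the second case both objects are unreachable from any root yet remain allocated, which is one reason reference counting is usually combined with, or replaced by, a tracing collector.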

Rcppeigen-Intro

Fast and Elegant Numerical Linear Algebra Using the RcppEigen Package. Douglas Bates (University of Wisconsin-Madison), Dirk Eddelbuettel (Debian Project).

Abstract: The RcppEigen package provides access from R (R Core Team 2018a) to the Eigen (Guennebaud, Jacob et al. 2012) C++ template library for numerical linear algebra. Rcpp (Eddelbuettel et al. 2020a; Eddelbuettel 2013) classes and specializations of the C++ templated functions as and wrap from Rcpp provide the "glue" for passing objects from R to C++ and back. Several introductory examples are presented. This is followed by an in-depth discussion of various available approaches for solving least-squares problems, including rank-revealing methods, concluding with an empirical run-time comparison. Last but not least, sparse matrix methods are discussed.

Keywords: linear algebra, template programming, R, C++, Rcpp.

This vignette corresponds to a paper published in the Journal of Statistical Software. Currently still identical to the paper, this vignette version may over time receive minor updates. For citations, please use Bates and Eddelbuettel (2013) as provided by citation("RcppEigen"). This version corresponds to RcppEigen version 0.3.3.9.1 and was typeset on December 17, 2020.

1. Introduction. Linear algebra is an essential building block of statistical computing. Operations such as matrix decompositions, linear program solvers, and eigenvalue/eigenvector computations are used in many estimation and analysis routines. As such, libraries supporting linear algebra have long been provided by statistical programmers for different programming languages and environments. Because it is object-oriented, C++, one of the central modern languages for numerical and statistical computing, is particularly effective at representing matrices, vectors and decompositions, and numerous class libraries providing linear algebra routines have been written over the years.

Iterative Methods for Linear Systems of Equations: a Brief Historical Journey

Iterative methods for linear systems of equations: A brief historical journey. Yousef Saad.

Abstract. This paper presents a brief historical survey of iterative methods for solving linear systems of equations. The journey begins with Gauss who developed the first known method that can be termed iterative. The early 20th century saw good progress of these methods which were initially used to solve least-squares systems, and then linear systems arising from the discretization of partial differential equations. Then iterative methods received a big impetus in the 1950s, partly because of the development of computers. The survey does not attempt to be exhaustive. Rather, the aim is to bring out the way of thinking at specific periods of time and to highlight the major ideas that steered the field.

1. It all started with Gauss. A big part of the contributions of Carl Friedrich Gauss can be found in the voluminous exchanges he had with contemporary scientists. This correspondence has been preserved in a number of books, e.g., in the twelve "Werke" volumes gathered from 1863 to 1929 at the University of Göttingen. There are also books specialized on specific correspondences. One of these is dedicated to the exchanges he had with Christian Ludwig Gerling [67]. Gerling was a student of Gauss under whose supervision he earned a PhD from the University of Göttingen in 1812. Gerling later became professor of mathematics, physics, and astronomy at the University of Marburg where he spent the rest of his life from 1817 and maintained a relation with Gauss until Gauss's death in 1855.

C++ API for BLAS and LAPACK

C++ API for BLAS and LAPACK (SLATE Working Note 2). Mark Gates (ICL), Piotr Luszczek (ICL), Ahmad Abdelfattah (ICL), Jakub Kurzak (ICL), Jack Dongarra (ICL), Konstantin Arturov (Intel), Cris Cecka (NVIDIA), Chip Freitag (AMD). Affiliations: Innovative Computing Laboratory (ICL); Intel Corporation; NVIDIA Corporation; Advanced Micro Devices, Inc. February 21, 2018.

This research was supported by the Exascale Computing Project (17-SC-20-SC), a collaborative effort of two U.S. Department of Energy organizations (Office of Science and the National Nuclear Security Administration) responsible for the planning and preparation of a capable exascale ecosystem, including software, applications, hardware, advanced system engineering and early testbed platforms, in support of the nation's exascale computing imperative.

Revision Notes
06-2017: first publication
02-2018: copy editing; new cover
03-2018: adding a section about GPU support; adding Ahmad Abdelfattah as an author

@techreport{gates2017cpp, author={Gates, Mark and Luszczek, Piotr and Abdelfattah, Ahmad and Kurzak, Jakub and Dongarra, Jack and Arturov, Konstantin and Cecka, Cris and Freitag, Chip}, title={{SLATE} Working Note 2: C++ {API} for {BLAS} and {LAPACK}}, institution={Innovative Computing Laboratory, University of Tennessee}, year={2017}, month={June}, number={ICL-UT-17-03}, note={revision 03-2018} }

Contents
1 Introduction and Motivation
2 Standards and Trends
2.1 Programming Language Fortran
2.1.1 FORTRAN 77
2.1.2 BLAST XBLAS
2.1.3 Fortran 90
2.2 Programming Language C
2.2.1 Netlib CBLAS
2.2.2 Intel MKL CBLAS
2.2.3 Netlib lapack cwrapper
2.2.4 LAPACKE
2.2.5 Next-Generation BLAS: "BLAS G2"