Reductive Aspects of Thermal Physics

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Bluff Your Way in the Second Law of Thermodynamics

Bluff your way in the Second Law of Thermodynamics Jos Uffink Department of History and Foundations of Science Utrecht University, P.O.Box 80.000, 3508 TA Utrecht, The Netherlands e-mail: uffi[email protected] 5th July 2001 ABSTRACT The aim of this article is to analyse the relation between the second law of thermodynamics and the so-called arrow of time. For this purpose, a number of different aspects in this arrow of time are distinguished, in particular those of time-(a)symmetry and of (ir)reversibility. Next I review versions of the second law in the work of Carnot, Clausius, Kelvin, Planck, Gibbs, Carathéodory and Lieb and Yngvason, and investigate their connection with these aspects of the arrow of time. It is shown that this connection varies a great deal along with these formulations of the second law. According to the famous formulation by Planck, the second law expresses the irreversibility of natural processes. But in many other formulations irreversibility or even time-asymmetry plays no role. I therefore argue for the view that the second law has nothing to do with the arrow of time. KEY WORDS: Thermodynamics, Second Law, Irreversibility, Time-asymmetry, Arrow of Time. 1 INTRODUCTION There is a famous lecture by the British physicist/novelist C. P. Snow about the cultural abyss between two types of intellectuals: those who have been educated in literary arts and those in the exact sciences. This lecture, the Two Cultures (1959), characterises the lack of mutual respect between them in a passage: A good many times I have been present at gatherings of people who, by the standards of the traditional culture, are thought highly educated and who have with considerable gusto been expressing their incredulity at the illiteracy of scientists.

Einstein Solid

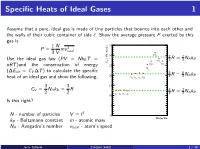

Specific Heats of Ideal Gases. Assume that a pure, ideal gas is made of tiny particles that bounce into each other and the walls of their cubic container of side $\ell$. Show the average pressure $P$ exerted by this gas is $P = \frac{1}{3}\frac{N}{V} m v_{total}^2$. Use the ideal gas law ($PV = N k_B T = nRT$) and the conservation of energy ($\Delta E_{int} = C_V \Delta T$) to calculate the specific heat of an ideal gas and show the following: $C_V = \frac{3}{2} N_A k_B = \frac{3}{2} R$. Is this right? Symbols: $N$ - number of particles; $V = \ell^3$; $k_B$ - Boltzmann constant; $m$ - atomic mass; $N_A$ - Avogadro's number; $v_{total}$ - atom's speed. [Figure: molar specific heat $C_V$ (J/K-mole) by molecule (He, Ar, Ne, Kr, H2, N2, O2, CO, Cl2, H2O, CH4, CO2, SO2), with reference levels $\frac{3}{2}R = \frac{3}{2}N_A k_B$, $\frac{5}{2}R = \frac{5}{2}N_A k_B$ and $\frac{7}{2}R = \frac{7}{2}N_A k_B$.] (Jerry Gilfoyle, Einstein Solid, slides 1 and 2 of 36.) The Results: $P = \frac{1}{3}\frac{N}{V} m v_{total}^2 = \frac{2}{3}\frac{N}{V}\langle E_{kin}\rangle$; comparing with $PV = N k_B T$ gives $\langle E_{kin}\rangle = \frac{3}{2} k_B T$ per particle, so $C_V = \frac{3}{2} N_A k_B = \frac{3}{2} R$. Quantum mechanically, $E_{rot}^{qm} = \frac{\ell(\ell+1)\hbar^2}{2I}$, where $\ell$ is the angular momentum quantum number.
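As a rough numerical check on the reference levels quoted in this excerpt (3/2, 5/2 and 7/2 times N_A k_B), the sketch below evaluates the equipartition estimate C_V = (f/2) R for f = 3, 5 and 7 quadratic degrees of freedom. The function name `molar_cv` is an illustrative assumption, and the sketch does not assign any particular molecule to any particular level.

```python
# Equipartition estimate of the molar heat capacity: C_V = (f / 2) * R.
# Constants are the exact SI defining values.
K_B = 1.380649e-23    # Boltzmann constant, J/K
N_A = 6.02214076e23   # Avogadro's number, 1/mol
R = N_A * K_B         # molar gas constant, J/(K mol)


def molar_cv(degrees_of_freedom: int) -> float:
    """Return C_V in J/(K mol) for the given number of quadratic degrees of freedom."""
    return 0.5 * degrees_of_freedom * R


if __name__ == "__main__":
    for f in (3, 5, 7):
        print(f"f = {f}: C_V = {molar_cv(f):.2f} J/(K mol)")
```

The three values land near 12.5, 20.8 and 29.1 J/(K mol), which is the scale of the 0 to 35 J/K-mole axis described in the excerpt.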

Statistics of a Free Single Quantum Particle at a Finite

STATISTICS OF A FREE SINGLE QUANTUM PARTICLE AT A FINITE TEMPERATURE JIAN-PING PENG Department of Physics, Shanghai Jiao Tong University, Shanghai 200240, China Abstract We present a model to study the statistics of a single structureless quantum particle freely moving in a space at a finite temperature. It is shown that the quantum particle feels the temperature and can exchange energy with its environment in the form of heat transfer. The underlying mechanism is diffraction at the edge of the wave front of its matter wave. Expressions of energy and entropy of the particle are obtained for the irreversible process. Keywords: Quantum particle at a finite temperature, Thermodynamics of a single quantum particle PACS: 05.30.-d, 02.70.Rr Quantum mechanics is the theoretical framework that describes phenomena on the microscopic level and is exact at zero temperature. The fundamental statistical character in quantum mechanics, due to the Heisenberg uncertainty relation, is unrelated to temperature. On the other hand, temperature is generally believed to have no microscopic meaning and can only be conceived at the macroscopic level. For instance, one can define the energy of a single quantum particle, but one cannot ascribe a temperature to it. However, it is physically meaningful to place a single quantum particle in a box or let it move in a space where temperature is well-defined. This raises the well-known question: how does a single quantum particle feel the temperature, and what is the consequence? The question is particularly important and interesting, since experimental techniques in recent years have improved to such an extent that direct measurement of electron dynamics is possible [1,2,3]. It should also be closely related to the question of the applicability of thermodynamics to small systems on the nanometer scale [4]. We present here a model to study the behavior of a structureless quantum particle moving freely in a space at a nonzero temperature.

Otto Sackur's Pioneering Exploits in the Quantum Theory Of

Chapter 3 Putting the Quantum to Work: Otto Sackur’s Pioneering Exploits in the Quantum Theory of Gases Massimiliano Badino and Bretislav Friedrich After its appearance in the context of radiation theory, the quantum hypothesis rapidly diffused into other fields. By 1910, the crisis of classical traditions of physics and chemistry, while taking the quantum into account, became increasingly evident. The First Solvay Conference in 1911 pushed quantum theory to the fore, and many leading physicists responded by embracing the quantum hypothesis as a way to solve outstanding problems in the theory of matter. Until about 1910, quantum physics had drawn much of its inspiration from two sources. The first was the complex formal machinery connected with Max Planck’s theory of radiation and, above all, its close relationship with probabilistic arguments and statistical mechanics. The fledgling 1900–1901 version of this theory hinged on the application of Ludwig Boltzmann’s 1877 combinatorial procedure to determine the state of maximum probability for a set of oscillators. In his 1906 book on heat radiation, Planck made the connection with gas theory even tighter. To illustrate the use of the procedure Boltzmann originally developed for an ideal gas, Planck showed how to extend the analysis of the phase space, commonplace among practitioners of statistical mechanics, to electromagnetic oscillators (Planck 1906, 140–148). In doing so, Planck identified a crucial difference between the phase space of the gas molecules and that of oscillators used in quantum theory.

Intermediate Statistics in Thermoelectric Properties of Solids

Intermediate statistics in thermoelectric properties of solids André A. Marinho (1), Francisco A. Brito (1,2) (1) Departamento de Física, Universidade Federal de Campina Grande, 58109-970 Campina Grande, Paraíba, Brazil and (2) Departamento de Física, Universidade Federal da Paraíba, Caixa Postal 5008, 58051-970 João Pessoa, Paraíba, Brazil (Dated: July 23, 2019) Abstract We study the thermodynamics of a crystalline solid by applying intermediate statistics manifested by q-deformation. We based part of our study on both Einstein and Debye models, exploring primarily deformed thermal and electrical conductivities as a function of the deformed Debye specific heat. The results revealed that the q-deformation acts in two different ways but not necessarily as independent mechanisms. It acts as a factor of disorder or impurity, modifying the characteristics of a crystalline structure, which are phenomena described by q-bosons, and also as a manifestation of intermediate statistics, the B-anyons (or B-type systems). For the latter case, we have identified the Schottky effect, normally associated with high-Tc superconductors in the presence of rare-earth-ion impurities, and also the increase of the specific heat of the solids beyond the Dulong-Petit limit at high temperature, usually related to anharmonicity of interatomic interactions. Alternatively, since in the q-bosons the statistics are in principle maintained, the effect of the deformation acts more slowly due to a small change in the crystal lattice. On the other hand, B-anyons that belong to modified statistics are more sensitive to the deformation. PACS numbers: 02.20-Uw, 05.30-d, 75.20-g arXiv:1907.09055v1 [cond-mat.stat-mech] 21 Jul 2019

Restricted Agents in Thermodynamics and Quantum Information Theory

Research Collection Doctoral Thesis Restricted agents in thermodynamics and quantum information theory Author(s): Krämer Gabriel, Lea Philomena Publication Date: 2016 Permanent Link: https://doi.org/10.3929/ethz-a-010858172 Rights / License: In Copyright - Non-Commercial Use Permitted ETH Library Diss. ETH No. 23972 Restricted agents in thermodynamics and quantum information theory A thesis submitted to attain the degree of DOCTOR OF SCIENCES of ETH ZURICH (Dr. sc. ETH Zurich) presented by Lea Philomena Krämer Gabriel MPhysPhil, University of Oxford born on 18th July 1990 citizen of Germany accepted on the recommendation of Renato Renner, examiner Giulio Chiribella, co-examiner Jakob Yngvason, co-examiner 2016 To my family Acknowledgements First and foremost, I would like to thank my thesis supervisor, Prof. Renato Renner, for placing his trust in me from the beginning, and giving me the opportunity to work in his group. I am grateful for his continuous support and guidance, and I have always benefited greatly from the discussions we had. Renato without doubt has a clear vision, a powerful intuition, and a deep understanding of physics and information theory. Perhaps even more importantly, he has an exceptional gift for explaining complex subjects in a simple and understandable way. I would also like to thank my co-examiners Giulio Chiribella and Jakob Yngvason for agreeing to be part of my thesis committee, and for their input and critical questions in the discussions and conversations we had.

Physics 357 – Thermal Physics - Spring 2005

Physics 357 – Thermal Physics - Spring 2005 Contact Info MTTH 9-9:50. Science 255 Doug Juers, Science 244, 527-5229, [email protected] Office Hours: Mon 10-noon, Wed 9-10 and by appointment or whenever I’m around. Description Thermal physics involves the study of systems with lots (10^23) of particles. Since only a two-body problem can be solved analytically, you can imagine we will look at these systems somewhat differently from simple systems in mechanics. Rather than try to predict individual trajectories of individual particles we will consider things on a larger scale. Probability and statistics will play an important role in thinking about what can happen. There are two general methods of dealing with such systems: a top down approach and a bottom up approach. In the top down approach (thermodynamics) one doesn’t even really consider that there are particles there, and instead thinks about things in terms of quantities like temperature, heat, energy, work, entropy and enthalpy. In the bottom up approach (statistical mechanics) one considers in detail the interactions between individual particles. Building up from there yields impressive predictions of bulk properties of different materials. In this course we will cover both thermodynamics and statistical mechanics. The first part of the term will emphasize the former, while the second part of the term will emphasize the latter. Both topics have important applications in many fields, including solid-state physics, fluids, geology, chemistry, biology, astrophysics, and cosmology. The great thing about thermal physics is that in large part it’s about trying to deal with reality.

Einstein's Physics

Einstein’s Physics. Albert Einstein at Barnes Foundation in Merion PA, c.1947. Photography by Laura Delano Condax; gift to the author from Vanna Condax. Einstein’s Physics: Atoms, Quanta, and Relativity Derived, Explained, and Appraised. TA-PEI CHENG, University of Missouri–St. Louis, Portland State University. Great Clarendon Street, Oxford, OX2 6DP, United Kingdom. Oxford University Press is a department of the University of Oxford. It furthers the University’s objective of excellence in research, scholarship, and education by publishing worldwide. Oxford is a registered trade mark of Oxford University Press in the UK and in certain other countries. © Ta-Pei Cheng 2013. The moral rights of the author have been asserted. Impression: 1. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, without the prior permission in writing of Oxford University Press, or as expressly permitted by law, by licence or under terms agreed with the appropriate reprographics rights organization. Enquiries concerning reproduction outside the scope of the above should be sent to the Rights Department, Oxford University Press, at the address above. You must not circulate this work in any other form and you must impose this same condition on any acquirer. British Library Cataloguing in Publication Data: Data available. ISBN 978–0–19–966991–2. Printed and bound by CPI Group (UK) Ltd, Croydon, CR0 4YY. Links to third party websites are provided by Oxford in good faith and for information only. Oxford disclaims any responsibility for the materials contained in any third party website referenced in this work.

MOLAR HEAT of SOLIDS the Dulong–Petit Law, a Thermodynamic

MOLAR HEAT OF SOLIDS The Dulong–Petit law, a thermodynamic law proposed in 1819 by French physicists Dulong and Petit, states the classical expression for the molar specific heat of certain crystals. The two scientists conducted experiments on three-dimensional solid crystals to determine the heat capacities of a variety of these solids. They discovered that all investigated solids had a heat capacity of approximately 25 J mol^-1 K^-1 at room temperature. The result from their experiment was explained as follows. According to the Equipartition Theorem, each degree of freedom has an average energy of $\langle E \rangle = \frac{1}{2} k_B T$, where $k_B$ is the Boltzmann constant and $T$ is the absolute temperature. We can model the atoms of a solid as attached to neighboring atoms by springs. These springs extend into three-dimensional space. Each direction has 2 degrees of freedom: one kinetic and one potential. Thus every atom inside the solid was considered as a three-dimensional oscillator with six degrees of freedom ($f = 6$). The more energy that is added to the solid, the more these springs vibrate. (Figure 1: Model of interaction of atoms of a solid.) Now the energy of each atom is $E = \frac{6}{2} k_B T = 3 k_B T$. The energy of $N$ atoms is $E = \frac{6}{2} N k_B T = 3 N k_B T = 3 n R T$, where $n$ is the number of moles. To change the temperature by $\Delta T$ via heating, one must transfer $Q = 3 n R \Delta T$ to the crystal, thus the molar heat is $C = 3R \approx 3 \cdot 8.31\ \mathrm{J/(mol\,K)} \approx 24.93\ \mathrm{J/(mol\,K)}$. Similarly, the molar heat capacity of an atomic or molecular ideal gas is proportional to its number of degrees of freedom $f$: $C_V = \frac{f}{2} R$. This explanation for Petit and Dulong's experiment proved insufficient when it was discovered that the heat capacity decreases as the temperature approaches absolute zero, going to zero as $T^3$ (or, for metals, as $T$).
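The arithmetic in this excerpt can be reproduced in a few lines. The sketch below evaluates the Dulong-Petit molar heat 3R and the heat Q = 3nRΔT needed to warm a crystal; the function names and the sample values (2 mol, 10 K) are illustrative assumptions, not taken from the source.

```python
# Dulong-Petit estimate: six quadratic degrees of freedom per atom give C = 3R.
R = 8.314462618  # molar gas constant, J/(mol K)


def dulong_petit_molar_heat() -> float:
    """Classical molar heat capacity of a monatomic crystal, J/(mol K)."""
    return 3.0 * R


def heat_required(moles: float, delta_t: float) -> float:
    """Heat Q = 3 n R dT (in joules) to raise `moles` of crystal by `delta_t` kelvin."""
    return 3.0 * moles * R * delta_t


if __name__ == "__main__":
    print(f"C = {dulong_petit_molar_heat():.2f} J/(mol K)")
    # Hypothetical sample: 2 mol of crystal warmed by 10 K.
    print(f"Q = {heat_required(2.0, 10.0):.1f} J")
```

With the rounded value R = 8.31 J/(mol K) used in the excerpt the result is 24.93 J/(mol K); the more precise constant gives about 24.94 J/(mol K), still close to the measured 25 J/(mol K).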

First Principles Study of the Vibrational and Thermal Properties of Sn-Based Type II Clathrates, CsxSn136 (0 ≤ x ≤ 24) and Rb24Ga24Sn112

Article First Principles Study of the Vibrational and Thermal Properties of Sn-Based Type II Clathrates, CsxSn136 (0 ≤ x ≤ 24) and Rb24Ga24Sn112 Dong Xue * and Charles W. Myles Department of Physics and Astronomy, Texas Tech University, Lubbock, TX 79409-1051, USA; [email protected] * Correspondence: [email protected]; Tel.: +1-806-834-4563 Received: 12 May 2019; Accepted: 11 June 2019; Published: 14 June 2019 Abstract: After performing first-principles calculations of structural and vibrational properties of the semiconducting clathrates Rb24Ga24Sn112 along with binary CsxSn136 (0 ≤ x ≤ 24), we obtained equilibrium geometries and harmonic phonon modes. For the filled clathrate Rb24Ga24Sn112, the phonon dispersion relation predicts an upshift of the low-lying rattling modes (~25 cm^-1) for the Rb (“rattler”) compared to Cs vibration in CsxSn136. It is also found that the large isotropic atomic displacement parameter (U_iso) exists when Rb occupies the “over-sized” cage (28 atom cage) rather than the 20 atom counterpart. These guest modes are expected to contribute significantly to minimizing the lattice’s thermal conductivity (κ_L). Our calculation of the vibrational contribution to the specific heat and our evaluation of κ_L are quantitatively presented and discussed. Specifically, the heat capacity diagram regarding C_V/T^3 vs. T exhibits the Einstein-peak-like hump that is mainly attributable to the guest oscillator in a 28 atom cage, with a characteristic temperature 36.82 K for Rb24Ga24Sn112. Our calculated rattling modes are around 25 cm^-1 for the Rb trapped in a 28 atom cage, and 65.4 cm^-1 for the Rb encapsulated in a 20 atom cage.
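To see why a rattling mode near 25 cm^-1 and a characteristic temperature near 37 K sit on the same scale, a mode's wavenumber can be converted to an equivalent temperature via θ = h c ν̃ / k_B. The sketch below is only this standard unit conversion, with an assumed function name; it is not the authors' procedure for obtaining 36.82 K.

```python
# Convert a vibrational wavenumber (cm^-1) to an equivalent temperature (K)
# using theta = h * c * nu_tilde / k_B. Constants are exact SI defining values.
H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def wavenumber_to_temperature(wavenumber_per_cm: float) -> float:
    """Return the temperature in kelvin equivalent to a wavenumber in cm^-1."""
    wavenumber_per_m = wavenumber_per_cm * 100.0  # 1 cm^-1 = 100 m^-1
    return H * C * wavenumber_per_m / K_B


if __name__ == "__main__":
    for nu in (25.0, 65.4):  # rattling-mode wavenumbers quoted in the abstract
        print(f"{nu} cm^-1 -> {wavenumber_to_temperature(nu):.2f} K")
```

The 25 cm^-1 mode maps to roughly 36 K, the same order as the quoted characteristic temperature; the small difference is expected because the abstract gives the wavenumber only approximately.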

Hostatmech.Pdf

A computational introduction to quantum statistics using harmonically trapped particles Martin Ligare Department of Physics & Astronomy, Bucknell University, Lewisburg, PA 17837 (Dated: March 15, 2016) Abstract In a 1997 paper Moore and Schroeder argued that the development of student understanding of thermal physics could be enhanced by computational exercises that highlight the link between the statistical definition of entropy and the second law of thermodynamics [Am. J. Phys. 65, 26 (1997)]. I introduce examples of similar computational exercises for systems in which the quantum statistics of identical particles plays an important role. I treat isolated systems of small numbers of particles confined in a common harmonic potential, and use a computer to enumerate all possible occupation-number configurations and multiplicities. The examples illustrate the effect of quantum statistics on the sharing of energy between weakly interacting subsystems, as well as the distribution of energy within subsystems. The examples also highlight the onset of Bose-Einstein condensation in small systems. PACS numbers: I. INTRODUCTION In a 1997 paper in this journal Moore and Schroeder argued that the development of student understanding of thermal physics could be enhanced by computational exercises that highlight the link between the statistical definition of entropy and the second law of thermodynamics [1]. The first key to their approach was the use of a simple model, the Einstein solid, for which it is straightforward to develop an exact formula for the number of microstates of an isolated system. The second key was the use of a computer, rather than analytical approximations, to evaluate these formulas.
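The kind of exercise described here can be sketched briefly. For an Einstein solid of N oscillators sharing q energy quanta, the standard multiplicity is Ω(N, q) = C(q + N − 1, q); the code below evaluates it exactly and tabulates how two weakly coupled solids share a fixed total energy. The function names and the sample sizes (two solids of 3 oscillators, 6 quanta) are illustrative assumptions, not taken from the paper.

```python
from math import comb


def multiplicity(n_oscillators: int, q_units: int) -> int:
    """Number of microstates of an Einstein solid: C(q + N - 1, q)."""
    return comb(q_units + n_oscillators - 1, q_units)


def energy_sharing_table(n_a: int, n_b: int, q_total: int):
    """List (q_a, omega_a, omega_b, omega_total) for every split of q_total quanta."""
    rows = []
    for q_a in range(q_total + 1):
        omega_a = multiplicity(n_a, q_a)
        omega_b = multiplicity(n_b, q_total - q_a)
        rows.append((q_a, omega_a, omega_b, omega_a * omega_b))
    return rows


# Hypothetical small system: two solids of 3 oscillators sharing 6 quanta.
rows = energy_sharing_table(3, 3, 6)
total_microstates = sum(row[3] for row in rows)
most_likely = max(rows, key=lambda row: row[3])

if __name__ == "__main__":
    for q_a, omega_a, omega_b, omega_total in rows:
        print(q_a, omega_a, omega_b, omega_total)
    print("total:", total_microstates, "most likely q_a:", most_likely[0])
```

Summing the products over all splits recovers the multiplicity of the combined solid, and the even split has the largest weight, which is the statistical statement of the second law that these exercises are meant to expose.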

Physics, M.S. 1

PHYSICS, M.S. DEPARTMENT OVERVIEW The Department of Physics has a strong tradition of graduate study and research in astrophysics; atomic, molecular, and optical physics; condensed matter physics; high energy and particle physics; plasma physics; quantum computing; and string theory. There are many facilities for carrying out world-class research (https://www.physics.wisc.edu/research/areas/). We have a large professional staff: 45 full-time faculty (https://www.physics.wisc.edu/people/staff/) members, affiliated faculty members holding joint appointments with other departments, scientists, senior scientists, and postdocs. There are over 175 graduate students in the department who come from many countries around the world. More complete information on the graduate program, the faculty, and research groups is available at the department website (http://www.physics.wisc.edu). Research specialties include: THEORETICAL PHYSICS Astrophysics; atomic, molecular, and optical physics; condensed matter physics; cosmology; elementary particle physics; nuclear physics; phenomenology; plasmas and fusion; quantum computing; statistical and thermal physics; string theory. EXPERIMENTAL PHYSICS Astrophysics; atomic, molecular, and optical physics; biophysics; condensed matter physics; cosmology; elementary particle physics; neutrino physics; experimental studies of superconductors; medical physics; nuclear physics; plasma physics; quantum computing; spectroscopy. M.S. DEGREES The department offers the master of science degree in physics, with two named options: Research and Quantum Computing. The M.S. Physics-Research option (http://guide.wisc.edu/graduate/physics/physics-ms/physics-research-ms/) is non-admitting, meaning it is only available to students pursuing their Ph.D. The M.S. Physics-Quantum Computing option (http://guide.wisc.edu/graduate/physics/physics-ms/physics-quantum-computing-ms/) (MSPQC Program) is a professional master's program in an accelerated format designed to be completed in one calendar year.