Lightweight Algorithms for Depth Sensor Equipped Embedded Devices

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Retail Prices in RED Color Are Sale Prices. Limit One Per Customer

Retail prices in RED color are sale prices. Limit one per customer. Limited to stock on hand. Cases The parts listed below are normal stock items. However, we also have access to and can special order Addtronics, A-Top, Boomrack, Coolermaster, Enlight, Foxconn, Intel, InWin, Lite-On, PC Power & Cooling, Startech and SuperMicro. Description Manufacturer Model# Mid-Tower ATX Cases Retail Stock Black Elite 350 Mid-Tower ATX Case, Includes 500Watt Power Supply, (4) RC-350- Cooler Master 5.25" & (1) 3.5" External Bays, (6) 3.5" Internal Bays, 16.1"Hx7.1"Wx17.9"D, $95.00 3 KKR500 w/2-USB Front Ports, Includes (1) 120MM Rear Fan SGC-2000- Storm Scout SGC-2000-KKN1-GP Black Steel / Plastic ATX Mid Tower Cooler Master $95.00 1 KKN1-GP Computer Case No Power Supply Included RC-912- HAF 912 RC-912-KKN1 Black SECC/ ABS Plastic ATX Mid Tower Computer Cooler Master $75.00 0 KKN1 Case, No Power Supply V3 Black Edition VL80001W2Z Black SECC / Plastic ATX Mid Tower Thermaltake VL80001W2Z $70.00 4 Computer Case VN900A1W2 Commander Series Commander MS-II Black SECC ATX Mid Tower Computer Thermaltake $80.00 1 N Case Zalman Z9 PLUS Z9 Plus Black Steel / Plastic ATX Mid Tower Computer Case $80.00 1 Z11 PLUS Zalman ZALMAN Z11 Plus HF1 Black Steel / Plastic ATX Mid Tower Computer Case $95.00 1 HF1 Manufacturer Model# Retail Stock 2.5in SATA Hard Drive to 3.5in Drive Bay Mounting Kit (Includes 1 x BRACKET25 Combined 7+15 pin SATA plus LP4 Power to SATA Cable Connectors 1x Startech $15.00 7 SAT SATA 7 pin Female Connector Connectors 1x SATA 15 pin Female Connector Connectors 1x LP4 Male Connector) CD-ROM, CD-Burners, DVD-ROM, DVD-Burners and Media The parts listed below are normal stock items. -

Intel® Compute Stick STK2M3W64CC STK2MV64CC STK2M364CC Technical Product Specification

Intel® Compute Stick STK2M3W64CC STK2MV64CC STK2M364CC Technical Product Specification October 2017 Order Number: H86345-004 Revision History Revision Revision History Date 001 First release February 2016 002 Updated Section 1.3 Operating System Overview May 2016 003 Updated Section 1.3 Operating System Overview June 2016 004 Updated HDMI audio section October 2017 Disclaimer This product specification applies to only the standard Intel® Compute Stick with BIOS identifier CCSKLM30.86A or CCSKLM5V.86A. INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL’S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. UNLESS OTHERWISE AGREED IN WRITING BY INTEL, THE INTEL PRODUCTS ARE NOT DESIGNED NOR INTENDED FOR ANY APPLICATION IN WHICH THE FAILURE OF THE INTEL PRODUCT COULD CREATE A SITUATION WHERE PERSONAL INJURY OR DEATH MAY OCCUR. All Compute Sticks are evaluated as Information Technology Equipment (I.T.E.) for installation in homes, offices, schools, computer rooms, and similar locations. The suitability of this product for other PC or embedded non-PC applications or other environments, such as medical, industrial, alarm systems, test equipment, etc. may not be supported without further evaluation by Intel. Intel Corporation may have patents or pending patent applications, trademarks, copyrights, or other intellectual property rights that relate to the presented subject matter. -

Extracting and Mapping Industry 4.0 Technologies Using Wikipedia

Computers in Industry 100 (2018) 244–257 Contents lists available at ScienceDirect Computers in Industry journal homepage: www.elsevier.com/locate/compind Extracting and mapping industry 4.0 technologies using wikipedia T ⁎ Filippo Chiarelloa, , Leonello Trivellib, Andrea Bonaccorsia, Gualtiero Fantonic a Department of Energy, Systems, Territory and Construction Engineering, University of Pisa, Largo Lucio Lazzarino, 2, 56126 Pisa, Italy b Department of Economics and Management, University of Pisa, Via Cosimo Ridolfi, 10, 56124 Pisa, Italy c Department of Mechanical, Nuclear and Production Engineering, University of Pisa, Largo Lucio Lazzarino, 2, 56126 Pisa, Italy ARTICLE INFO ABSTRACT Keywords: The explosion of the interest in the industry 4.0 generated a hype on both academia and business: the former is Industry 4.0 attracted for the opportunities given by the emergence of such a new field, the latter is pulled by incentives and Digital industry national investment plans. The Industry 4.0 technological field is not new but it is highly heterogeneous (actually Industrial IoT it is the aggregation point of more than 30 different fields of the technology). For this reason, many stakeholders Big data feel uncomfortable since they do not master the whole set of technologies, they manifested a lack of knowledge Digital currency and problems of communication with other domains. Programming languages Computing Actually such problem is twofold, on one side a common vocabulary that helps domain experts to have a Embedded systems mutual understanding is missing Riel et al. [1], on the other side, an overall standardization effort would be IoT beneficial to integrate existing terminologies in a reference architecture for the Industry 4.0 paradigm Smit et al. -

Exhibitor Service Manual

EXHIBITOR SERVICE MANUAL FPEA Florida Home School Convention FPEA21 Rosen Shingle Creek Resort | Orlando, F May 27-29, 2021 Shepard 1531 Carroll Dr NW, Atlanta, GA 30318 | phone: 404-720-8600 | fax: 404-720-8755 TABLE OF CONTENTS FPEA Florida Home School Convention FPEA21 Rosen Shingle Creek Resort | Orlando, F May 27-29, 2021 Show Information .........................................................................................................3 Graphics & Signs .........................................................................................................98 Information .......................................................................................................................4 Uploading Graphics 101 .........................................................................................99 Shepard Graphic Guidelines ............................................................................100 Online Ordering .............................................................................................................6 Elevate Your Exhibit ............................................................................................... 102 Method of Payment ....................................................................................................7 Shields & Barriers ..................................................................................................... 103 Terms & Conditions......................................................................................................8 Exhibit Counter -

Intel® Compute Stick STCK1A32WFC, STCK1A8LFC Product Brief

PRODUCT BRIEF Intel® Compute Stick STCK1A32WFC STCK1A8LFC We shrunk the package, not the performance. Transform where and how you compute In the office, computer lab, or at home, the Intel® Compute Stick gives you the power to work anywhere there is an HDMI* display. The Intel® Compute Stick is a new standard for stick A cost-effective computer for home, business, and PC-like computing devices that enables you to transform a display embedded applications, the Intel Compute Stick is small into a fully functional computer. Just plug the Intel Compute enough to fit in the palm of your hand, yet big enough for Stick into any HDMI* TV or monitor, connect your wireless a quad-core Intel® Atom™ processor and your choice of keyboard and mouse and you’re ready to stream media, operating systems: Windows 8.1* or Linux* Ubuntu. The Intel work, or play games. The built-in WiFi and on-board storage Compute Stick delivers a Mini PC with full-size performance, enables you to be productive immediately. We mean it when reliability, and ease of use so you can work when, where, we say ready to go out-of-the box. and how you want. It’s the quality and value you’ve come to expect from Intel in a product designed and built by the company itself. PRODUCT BRIEF Intel® Compute Stick STCK1A32WFC, STCK1A8LFC Anywhere you have a TV, you also have a Plug, play, and produce: Linux*-based computer Windows*-based computer makes collaboration at work simple Plug the innovative form factor into an HDMI port on a The Intel Compute Stick with Ubuntu has all the perfor- television or display, connect a wireless keyboard and mance you need for running thin client, embedded, mouse, and you have a fully functional, Windows 8.1- collaboration, or cloud applications. -

The GPU Computing Revolution

The GPU Computing Revolution From Multi-Core CPUs to Many-Core Graphics Processors A Knowledge Transfer Report from the London Mathematical Society and Knowledge Transfer Network for Industrial Mathematics By Simon McIntosh-Smith Copyright © 2011 by Simon McIntosh-Smith Front cover image credits: Top left: Umberto Shtanzman / Shutterstock.com Top right: godrick / Shutterstock.com Bottom left: Double Negative Visual Effects Bottom right: University of Bristol Background: Serg64 / Shutterstock.com THE GPU COMPUTING REVOLUTION From Multi-Core CPUs To Many-Core Graphics Processors By Simon McIntosh-Smith Contents Page Executive Summary 3 From Multi-Core to Many-Core: Background and Development 4 Success Stories 7 GPUs in Depth 11 Current Challenges 18 Next Steps 19 Appendix 1: Active Researchers and Practitioner Groups 21 Appendix 2: Software Applications Available on GPUs 23 References 24 September 2011 A Knowledge Transfer Report from the London Mathematical Society and the Knowledge Transfer Network for Industrial Mathematics Edited by Robert Leese and Tom Melham London Mathematical Society, De Morgan House, 57–58 Russell Square, London WC1B 4HS KTN for Industrial Mathematics, Surrey Technology Centre, Surrey Research Park, Guildford GU2 7YG 2 THE GPU COMPUTING REVOLUTION From Multi-Core CPUs To Many-Core Graphics Processors AUTHOR Simon McIntosh-Smith is head of the Microelectronics Research Group at the Univer- sity of Bristol and chair of the Many-Core and Reconfigurable Supercomputing Conference (MRSC), Europe’s largest conference dedicated to the use of massively parallel computer architectures. Prior to joining the university he spent fifteen years in industry where he designed massively parallel hardware and software at companies such as Inmos, STMicro- electronics and Pixelfusion, before co-founding ClearSpeed as Vice-President of Architec- ture and Applications. -

GPU Developments 2017T

GPU Developments 2017 2018 GPU Developments 2017t © Copyright Jon Peddie Research 2018. All rights reserved. Reproduction in whole or in part is prohibited without written permission from Jon Peddie Research. This report is the property of Jon Peddie Research (JPR) and made available to a restricted number of clients only upon these terms and conditions. Agreement not to copy or disclose. This report and all future reports or other materials provided by JPR pursuant to this subscription (collectively, “Reports”) are protected by: (i) federal copyright, pursuant to the Copyright Act of 1976; and (ii) the nondisclosure provisions set forth immediately following. License, exclusive use, and agreement not to disclose. Reports are the trade secret property exclusively of JPR and are made available to a restricted number of clients, for their exclusive use and only upon the following terms and conditions. JPR grants site-wide license to read and utilize the information in the Reports, exclusively to the initial subscriber to the Reports, its subsidiaries, divisions, and employees (collectively, “Subscriber”). The Reports shall, at all times, be treated by Subscriber as proprietary and confidential documents, for internal use only. Subscriber agrees that it will not reproduce for or share any of the material in the Reports (“Material”) with any entity or individual other than Subscriber (“Shared Third Party”) (collectively, “Share” or “Sharing”), without the advance written permission of JPR. Subscriber shall be liable for any breach of this agreement and shall be subject to cancellation of its subscription to Reports. Without limiting this liability, Subscriber shall be liable for any damages suffered by JPR as a result of any Sharing of any Material, without advance written permission of JPR. -

Porting Linux 2.6.9 to the PXA270 Based Development Platform Intel

Porting Linux to Intel's Glencoe Platform Porting Linux 2.6.9 to the PXA270 based development platform Intel Glencoe Beyond3G Final Technical Report as part of a research collaboration between Intel and Carnegie Mellon University Author: Matthias Ihmig, [email protected] Last Change: 05/11/2005 Matthias Ihmig, [email protected] page 1 Porting Linux to Intel's Glencoe Platform Table of Contents Porting Linux 2.6.9..................................................................................................................... 1 1. Introduction.......................................................................................................................... 4 2. The Linux Boot Loader BLOB................................................................................................ 5 2.1. Defaults and configuration values................................................................................. 5 2.1.1. Clock settings......................................................................................................... 5 2.1.2. Flash partition table............................................................................................... 6 2.1.3. Other default settings............................................................................................ 7 2.2. How to build BLOB......................................................................................................... 7 2.3. How to flash BLOB to the Glencoe board....................................................................... 8 2.3.1. Using -

Revenge of Hump Day 2017-01-11

The January 11th, 2017 Edition of THE REVENGE HUMP DAY! Page 1 of 39 Welcome to the January 11th, 2017 Edition of THE REVENGE HUMP DAY! This has been the week of hell around Casa Bolgeo. Last Wednesday, Beth, Destroyer of Worlds, was staying over for a few hours and was going to take a shower down in my bathroom. She called up that the shower was filling up with water. Yep, it started to back up. Well, we got a plumber out that night hand he said that the septic tank was filled. Well, that was kind of strange since we got the system pumped out about 1.5 years ago. Well, it was slowly draining so we decided to try to live with it until we could get a pump truck out the next day. Then the threat of snow hit and nothing was traveling on Thursday. Then the whole system plugged up all the way. By Friday, Casa Bolgeo was unlivable, so I packed up SHE WHO MUST BE OBEYED, Popee (my 92 year old father-in-law, and myself and we headed over to Brandy’s house to spend the weekend. I slept in a recliner, SWMBO on the couch and Popee in a bedroom. We enjoyed staying with Brandy and the kids, but it’s not home. On Monday, we got a pump truck out to pump the septic tank. According to the company who did the work, the people who did it before didn’t get the muck all out of the bottom of the tank the last time. -

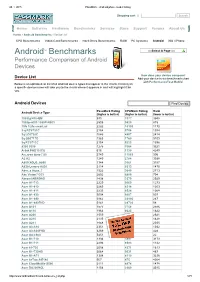

Androidtm Benchmarks

28. 1. 2015 PassMark Android phone model listing Shopping cart | Search Home Software Hardware Benchmarks Services Store Support Forums About Us Home » Android Benchmarks » Device List CPU Benchmarks Video Card Benchmarks Hard Drive Benchmarks RAM PC Systems Android iOS / iPhone TM Select A Page Android Benchmarks Performance Comparison of Android Devices How does your device compare? Device List Add your device to our benchmark chart with PerformanceTest Mobile! Below is an alphabetical list of all Android device types that appear in the charts. Clicking on a specific device name will take you to the charts where it appears in and will highlight it for you. Android Devices Find Device PassMark Rating CPUMark Rating Rank Android Device Type (higher is better) (higher is better) (lower is better) 1005tg N10QM 935 3377 3948 1080pn003 1080PN003 2505 9820 815 1life 1Life.smart.air 2282 10103 1170 3q RC9731C 2154 5756 1394 3q LC0720C 1646 4897 2414 3q QS0717D 1363 1760 3109 3q RC9712C 2154 5223 1396 9300 9300 1275 3364 3321 Alink PAD10 ICS 616 1130 4249 A.c.ryan dyno 7.85 2749 11065 596 A2 A2 1240 2784 3388 A800 XOLO_A800 1344 3661 3157 A830 Lenovo A830 2114 8313 1470 Abs_a Aqua_7 1522 3640 2713 Abc Vision7DCI 2602 6880 704 Abroad ABROAD 1438 3379 2929 Acer A1713 2229 9069 1273 Acer A1810 2265 8314 1203 Acer A1811 2233 8524 1268 Acer A1830 3004 9207 507 Acer A1840 3962 23996 267 Acer A1840FHD 5141 28720 58 Acer A101 1577 3758 2586 Acer A110 1964 8623 1822 Acer A200 1559 3822 2621 Acer A210 2135 8315 1428 Acer A211 1848 8130 2035 Acer A3A10 2351 8128 1032 Acer A3A20FHD 3269 11265 428 Acer AA3600 5451 22392 22 Acer B1710 1336 3897 3173 Acer B1711 2293 8583 1142 Acer b1720 2058 4371 1613 Acer B1730HD 3064 9031 487 Acer B1A71 1308 4119 3236 Acer beTouch E140 567 475 4264 Acer CloudMobile S500 2111 4874 1478 Acer DA220HQL 1156 2960 3545 http://www.androidbenchmark.net/device_list.php 1/71 28. -

Intel® Compute Stick STK1AW32SC STK1A32SC Technical Product Specification

Intel® Compute Stick STK1AW32SC STK1A32SC Technical Product Specification February 2016 Order Number: H91231-004 Revision History Revision Revision History Date 001 First release November 2015 002 Changed URL for BIOS Update and BIOS Recovery information January 2016 003 Corrected MTBF number January 2016 004 Added Section 2.3 Mechanical Considerations and updated Section 2.4 Reliability February 2016 Disclaimer This product specification applies to only the standard Intel® Compute Stick with BIOS identifier SCCHTAX5.86A. INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL’S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. UNLESS OTHERWISE AGREED IN WRITING BY INTEL, THE INTEL PRODUCTS ARE NOT DESIGNED NOR INTENDED FOR ANY APPLICATION IN WHICH THE FAILURE OF THE INTEL PRODUCT COULD CREATE A SITUATION WHERE PERSONAL INJURY OR DEATH MAY OCCUR. All Intel Compute Sticks are evaluated as Information Technology Equipment (I.T.E.) for installation in homes, offices, schools, computer rooms, and similar locations. The suitability of this product for other PC or embedded non-PC applications or other environments, such as medical, industrial, alarm systems, test equipment, etc. may not be supported without further evaluation by Intel. Intel Corporation may have patents or pending patent applications, trademarks, copyrights, or other intellectual property rights that relate to the presented subject matter. -

HP Omnibook 6000 Datasheet

HP OmniBook 6000 Notebook PC Reliable Mobility in a New, Contemporary Next-Generation Notebook That’s Easy to Carry, data Notebook PC Ready to Work If you specify notebook PCs for your company, On top of all these performance features, this notebook Hewlett-Packard understands the complexities you face PC offers a sleek new image and outstanding portability. It comes in a new, two-tone magnesium in identifying a vendor and a set of products that meet your package that not only looks good, but end-user and business needs. That’s why we’re committed to is also durable to protect what’s inside. delivering a comprehensive notebook PC family that meets And it’s only about 5 pounds and both sets of requirements. 1.26 inches thin. Our newest product within this family—the HP OmniBook To make our newest HP OmniBook 6000 notebook PC—combines all the best features of our notebook PC even easier to use, we current product line with an entirely new design for high- incorporated many new, high-quality ergonomic features. For performance business computing. This notebook PC offers example, we shortened the palm rest for more comfortable the reliability, compatibility, industry-leading security, and typing. Moved the speakers and adapted their mounting for low cost of ownership your IT department can’t afford better sound quality. Changed the module bay latch design to compromise, plus the performance, expandability, for easy module removal and positive locking indication. portability, and ergonomics your users are demanding— Adjusted the shape of our unique dual pointing devices for all in an upscale, professional-looking package they’ll be easier activation.