The Role of Negative Information in Expert Evaluations for Novel Projects

Total Page:16

Recommended publications

-

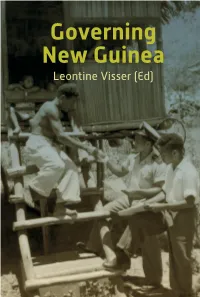

Governing New Guinea

Governing New Guinea: An oral history of Papuan administrators, 1950-1990. Edited by Leontine Visser. For the first time, indigenous Papuan administrators share their experiences in governing their country with an international public. They were the brokers of development. After graduating from the School for Indigenous Administrators New Guinea (OSIBA) they served in the Dutch administration until 1962. The period 1962-1969 stands out as turbulent and dangerous, and has in many cases curbed professional careers. The political and administrative transformations under the Indonesian governance of Irian Jaya/Papua are then recounted, as they remained in active service until retirement in the early 1990s. The book brings together 17 oral histories of the everyday life of Papuan civil servants, including their relationship with superiors and colleagues, the murder of a Dutch administrator, how they translated ‘development’ to the Papuan people, the organisation of the first democratic institutions, and the actual political and economic conditions leading up to the so-called Act of Free Choice. Finally, they share their experiences in the UNTEA and Indonesian government organisation. Leontine Visser is Professor of Development Anthropology at Wageningen University. Her research focuses on governance and natural resources management in eastern Indonesia. ISBN 978-90-6718-393-2. KITLV Press, Leiden, 2012. Published by: KITLV Press, Koninklijk Instituut voor Taal-, Land- en Volkenkunde (Royal Netherlands Institute of Southeast Asian and Caribbean Studies), P.O. -

The Art of Writing Proposals by Adam Przeworski and Frank Salomon

1988, 1995 The Art of Writing Proposals By Adam Przeworski and Frank Salomon Writing proposals for research funding is a peculiar facet of North American academic culture, and as with all things cultural, its attributes rise only partly into public consciousness. A proposal's overt function is to persuade a committee of scholars that the project shines with the three kinds of merit all disciplines value, namely, conceptual innovation, methodological rigor, and rich, substantive content. But to make these points stick, a proposal writer needs a feel for the unspoken customs, norms, and needs that govern the selection process itself. These are not really as arcane or ritualistic as one might suspect. For the most part, these customs arise from the committee's efforts to deal in good faith with its own problems: incomprehension among disciplines, work overload, and the problem of equitably judging proposals that reflect unlike social and academic circumstances. Writing for committee competition is an art quite different from research work itself. After long deliberation, a committee usually has to choose among proposals that all possess the three virtues mentioned above. Other things being equal, the proposal that is awarded funding is the one that gets its merits across more forcefully because it addresses these unspoken needs and norms as well as the overt rules. The purpose of these pages is to give competitors for Council fellowships and funding a more even start by making explicit some of those normally unspoken customs and needs. Capture -

Imagination and Thematic Reality in the African Novel: a New Vision for African Novelists

Advances in Literary Study, 2018, 6, 8-18 http://www.scirp.org/journal/als ISSN Online: 2327-4050 ISSN Print: 2327-4034 Imagination and Thematic Reality in the African Novel: A New Vision for African Novelists Abdoulaye Hakibou, Language Department, University of Parakou, Parakou, Benin. How to cite this paper: Hakibou, A. (2018). Imagination and Thematic Reality in the African Novel: A New Vision for African Novelists. Advances in Literary Study, 6, 8-18. https://doi.org/10.4236/als.2018.61002 Received: October 17, 2017. Accepted: December 18, 2017. Published: December 21, 2017. Copyright © 2018 by author and Scientific Research Publishing Inc. This work is licensed under the Creative Commons Attribution International License (CC BY 4.0). http://creativecommons.org/licenses/by/4.0/ Open Access. Abstract: The present study on the topic “Imagination and thematic reality in the African novel: a new vision to African novelists” aims to show the limitation of the contribution of the African literary works to the good governance and development process of African countries through the thematic choices and to propose a new vision in relation to those thematic choices and to the structural organisation of those literary works. The study is carried out through the theory of narratology by Genette (1980) and the narrative study by Chatman (1978) as applied to the novels by Chinua Achebe, essentially on the notion of order by Genette and the elements of a narrative by Chatman. It is a thematic and structural analysis that helps the researcher to be aware of the limitation of the contribution of African fiction to the good governance of African States and their real development, for the reason that themes and the structural organisation of those works are past-oriented. -

PAPPG (NSF 20-1)

THE NATIONAL SCIENCE FOUNDATION PROPOSAL AND AWARD POLICIES AND PROCEDURES GUIDE Effective June 1, 2020 NSF 20-1 OMB Control Number 3145-0058 Significant Changes and Clarifications to the Proposal & Award Policies & Procedures Guide (PAPPG) (NSF 20-1) Effective Date June 1, 2020 Overall Document Editorial changes have been made throughout to either clarify or enhance the intended meaning of a sentence or section. References to the Large Facilities Manual have been updated to the new title of Major Facilities Guide. Significant Changes • Chapters I.G, How to Submit Proposals, II.C.1.d, Proposal Certifications and XI.A, Non-Discrimination Statutes and Regulations have been updated to implement Office of Management and Budget Memorandum M-18-24, “Strategies to Reduce Grant Recipient Reporting Burden”. Proposing organizations must submit government-wide representations and certifications in the System for Award Management (SAM). These certifications must be re-certified annually. NSF-specific proposal certifications must still be provided via the Authorized Organizational Representative function in NSF’s electronic systems. Prior exhibits in Chapter II on the Drug Free Workplace Certification, Debarment and Suspension, Lobbying and Nondiscrimination have been deleted. Reference to the Certification of Compliance/Civil Rights Certifications in Chapter XI.A.2 has been removed as Nondiscrimination Certifications will now be provided in SAM. • Chapter II.C.2.f, Biographical Sketches, has been modified to require use of an NSF-approved format in submission of the biographical sketch. NSF will only accept PDFs that are generated through use of an NSF-approved format. Note: The requirement to use an NSF-approved format for preparation of the biographical sketch will go into effect for new proposals submitted or due on or after October 5, 2020. -

Read Ebook {PDF EPUB} Visser by K.A. Applegate Visser — K.A

In an hour or so, once I was out of sight of land, I would lower my sails and wait for a Bug fighter to come lift me off the deck. The engine backwash of the Bug fighter would capsize the boat. Or I might put the Taxxon pilot to the test and see if he could ram the low-slung boat. That would puzzle the humans. Either way, my body would never be found. My time of lying low was over. I would spearhead the invasion of Earth. I would take charge of our greatest conquest. I would stand alone atop the Yeerk military hierarchy. I was to become Visser One. Her human name is Eva. There was a time when she had a loving husband and a son, Marco. When she had a wonderful career. But that was before she was infested by Edriss 562. Before the invasion of Earth. Now, Edriss 562 lives in Eva's head and controls her every movement. And through Eva, Edriss has become the highest-ranking general in the Yeerk empire, surpassing even her arch rival, Visser Three. She is Visser One. But, it has become known that Visser One's tactics for attaining her current position were less than acceptable -- even to the Yeerks. Now she is on trial for treason. If she's found innocent she'll continue to rule. But if she's found guilty, she'll lose her life -- and possibly the life of her host, Eva. -

arXiv:1008.3376v1 [gr-qc]

Thin-shell wormholes in d-dimensional general relativity: Solutions, properties, and stability Gonçalo A. S. Dias∗ Centro de Física do Porto - CFP Departamento de Física e Astronomia Faculdade de Ciências da Universidade do Porto - FCUP Rua do Campo Alegre, 4169-007 Porto, Portugal, José P. S. Lemos† Centro Multidisciplinar de Astrofísica - CENTRA Departamento de Física, Instituto Superior Técnico - IST, Universidade Técnica de Lisboa - UTL, Av. Rovisco Pais 1, 1049-001 Lisboa, Portugal & Institute of Theoretical Physics - ITP, Freie Universität Berlin, Arnimallee 14 D-14195 Berlin, Germany. We construct thin-shell electrically charged wormholes in d-dimensional general relativity with a cosmological constant. The wormholes constructed can have different throat geometries, namely, spherical, planar and hyperbolic. Unlike the spherical geometry, the planar and hyperbolic geometries allow for different topologies and in addition can be interpreted as higher-dimensional domain walls or branes connecting two universes. In the construction we use the cut-and-paste procedure by joining together two identical vacuum spacetime solutions. Properties such as the null energy condition and geodesics are studied. A linear stability analysis around the static solutions is carried out. A general result for stability is obtained from which previous results are recovered. PACS numbers: 04.20.Gz, 04.20.Jb, 04.40.-b I. INTRODUCTION A. Visser’s book In physics, in many situations, the study of a system passes through three stages. First, one finds a solution that emulates the system itself. Second, in turn, through the solution one studies the peculiarities that the system might have. Third, one performs a stability analysis on the solution to gather whether the system can be realistic or not. -

Grade 5 Supplemental Literature List

Clovis Unified School District Supplemental Literature for Grade 5

| Book Title | Author | Summary |
| --- | --- | --- |
| 13th Floor | Fleischman, Sid | When his older sister disappears, twelve-year-old Buddy Stebbins follows her back in time and finds himself aboard a seventeenth-century pirate ship captained by a distant relative. |
| Abraham Lincoln | | The story of President Lincoln, from his birth in Kentucky in 1809 to his life in Washington and the end of the Civil War. |
| Across Five Aprils | Hunt, Irene | The effects of the Civil War on the Creighton family, farmers in Southern Illinois, are depicted as the older men are pulled one by one into the war, leaving the youngest, Jethro, to keep their farm going. The novel is based on the author's family records, letters, and grandfather's stories. |
| Adventures of Blue Avenger, The | Howe, Norma | On his sixteenth birthday, still trying to cope with the unexpected death of his father, David Schumacher decides--or does he--to change his name to Blue Avenger, hoping to find a way to make a difference in his Oakland neighborhood and in the world. |
| Afternoon of the Elves | Lisle, Janet Taylor | As Hillary works in the miniature village, allegedly built by elves, in Sara-Kate's backyard, she becomes more and more curious about Sara-Kate's real life inside her big, gloomy house with her mysterious, silent mother. |
| Al Capone does my Shirts | Choldenko, Gennifer | A twelve-year-old boy named Moose moves to Alcatraz Island in 1935 when guards' families were housed there, and has to contend with his extraordinary new environment in addition to life with his autistic sister Natalie. |

Proposal for a Migration and Asylum Crisis Regulation

EUROPEAN COMMISSION Brussels, 23.9.2020 COM(2020) 613 final 2020/0277 (COD) Proposal for a REGULATION OF THE EUROPEAN PARLIAMENT AND OF THE COUNCIL addressing situations of crisis and force majeure in the field of migration and asylum (Text with EEA relevance) EN EN EXPLANATORY MEMORANDUM 1. CONTEXT OF THE PROPOSAL 1.1. Reasons for and objectives of the proposal In September 2019, European Commission President Ursula von der Leyen announced a New Pact on Migration and Asylum, involving a comprehensive approach to external borders, asylum and return systems, the Schengen area of free movement and the external dimension. The Communication on a New Pact on Migration and Asylum, presented together with a set of legislative proposals, including this proposal addressing situations of crisis and force majeure in the field of migration and asylum, represents a fresh start on migration. The aim is to put in place a broad framework based on a comprehensive approach to migration management, promoting mutual trust among Member States. Based on the overarching principles of solidarity and a fair sharing of responsibility, the new Pact advocates integrated policy-making, bringing together policies in the areas of asylum, migration, return, external border protection and relations with third countries. The New Pact builds on the Commission proposals to reform the Common European Asylum System from 2016 and 2018 and it adds new elements to ensure the balance needed for a common framework that contributes to the comprehensive approach to migration management through integrated-policy making in the field of asylum and migration management, including both its internal and external components. -

Preparing Research Proposals for the HWS Institutional Review Board: an Informal Guide for Faculty and Students

Preparing research proposals for the HWS Institutional Review Board: An informal guide for faculty and students. Ron Gerrard, HWS Psychology Department, on behalf of the Hobart and William Smith Institutional Review Board. Hobart and William Smith Colleges, like all educational institutions receiving federal research money, is required by law to have an Institutional Review Board (IRB) to protect the welfare of its research subjects. It does so by evaluating proposed research projects to make sure they meet accepted legal and ethical standards. As someone who has both served on the IRB and submitted research proposals to be evaluated by the IRB, I have experienced the review process from both sides. I understand the effort required by researchers to prepare IRB proposals, as well as their concern that the review process might seriously delay the start (and therefore the completion) of intended research projects. Researchers are understandably frustrated when their proposals are returned from the IRB with requests for revisions, especially when the reasoning behind such requests seems arcane or unclear. On the other hand, as an IRB member required to carefully read and evaluate several dozen proposals a year, it is frustrating to see the same sorts of easily avoidable errors appear over and over again, proposal after proposal. My goal in preparing this handout is to better communication between the two sides, hopefully improving the quality of proposals and reducing frustration all around. I hope to give researchers a glimpse into the mindset of the IRB as it reads various types of proposals, yielding a better sense of how and why different issues are considered by the committee in judging proposed research. -

Adapting Pride and Prejudice

IV (2017) 2, 349–359. This work is licensed under a Creative Commons Attribution 4.0 International License. Anđelka RAGUŽ, University of Mostar, Matice hrvatske bb, Mostar 88 000, Bosnia and Herzegovina. UDK 821.111-31.09 Austen, J.=111; 791=111. DOI: 10.29162/ANAFORA.v4i2.10. Review Article. Received: 2 November 2017. Accepted: 14 December 2017. “TILL THIS MOMENT I NEVER KNEW MYSELF”: ADAPTING PRIDE AND PREJUDICE. Abstract: Adaptations are always a matter of hard choices: the scriptwriter and the director have their interpretations of what an adaptation should be, very much like every reader has his/her own vision of the characters and the plot, and very rarely do the two visions coincide. This paper was inspired by the on-going debate amongst Jane Austen fans on Internet forums as to which adaptation of Pride and Prejudice is more faithful to the 1813 novel. The main two contenders appear to be the 1995 BBC mini-series starring Jennifer Ehle and Colin Firth, and Joe Wright’s 2005 film with Keira Knightley and Matthew Macfadyen in the lead roles. This paper will attempt to identify the cardinal points of Austen’s Pride and Prejudice to illustrate that both the 1995 and 2005 adaptations are faithful to the original. Furthermore, it shall look at the strengths and weaknesses of the mini-series and the feature film as genres, before analysing the respective strengths and weaknesses of the adaptations themselves. -

35 Glar Alarm System

# The Proposal

My name is Marco. But you can call me "Marco the Mighty." Or "Most Exalted Destroyer of My Pride." You can cower before my mighty thumbs and beg for mercy, but you'll be crushed just the same. For I am the lord of the PlayStation. Pick a game. Any game. Tekken. Duke Nukem. NFL Blitz. Whatever. Practice all you want. I'll still beat you. I'll crush you like Doc Martens crush ants. I'll -

"The phone's ringing," my dad said, setting down his controller.

"You can't stop now," I cried. "I was gonna score on this next play!"

"It's fifty-six to nothing," he muttered. "I'll forfeit this one."

"But-"

But he'd already picked up the phone. "Hello? Oh, hi! How are you?" His voice was so sweet and sticky you could have poured it over pancakes.

"Oh, brother," I mumbled.

"I'm doing great," he continued, a big dopey smile on his face. "Marco and I were just playing video games. Uh-huh. Sure." He looked at me. "Nora says hi."

I nodded. I grabbed the remote control. Switched the TV back to cable mode and turned the volume up loud enough to drown out his voice.

My dad has a girlfriend. And I think it's serious. I'm used to this quiet, low-key, unexpressive guy. But ever since he started dating this woman, he's been Mr. Personality. Smiling for no reason. Singing in the shower. Laughing at all my lame jokes like I was Chris Rock.