Transformation and Sample Size

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Gpindex: Generalized Price and Quantity Indexes

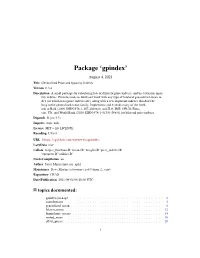

Package ‘gpindex’, August 4, 2021. Title: Generalized Price and Quantity Indexes. Version: 0.3.4. Description: A small package for calculating lots of different price indexes, and by extension quantity indexes. Provides tools to build and work with any type of bilateral generalized-mean index (of which most price indexes are), along with a few important indexes that don't belong to the generalized-mean family. Implements and extends many of the methods in Balk (2008, ISBN:978-1-107-40496-0) and ILO, IMF, OECD, Eurostat, UN, and World Bank (2020, ISBN:978-1-51354-298-0) for bilateral price indexes. Depends: R (>= 3.5). Imports: stats, utils. License: MIT + file LICENSE. Encoding: UTF-8. URL: https://github.com/marberts/gpindex. LazyData: true. Collate: 'helper_functions.R' 'means.R' 'weights.R' 'price_indexes.R' 'operators.R' 'utilities.R'. NeedsCompilation: no. Author: Steve Martin [aut, cre, cph]. Maintainer: Steve Martin <[email protected]>. Repository: CRAN. Date/Publication: 2021-08-04 06:10:06 UTC.

R topics documented: gpindex-package, contributions, generalized_mean, lehmer_mean, logarithmic_means, nested_mean, offset_prices, operators, outliers, price_data, price_index, transform_weights, Index.
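
To make the idea of a bilateral generalized-mean index concrete, here is a minimal Python sketch. It is not the gpindex API; the function names `generalized_mean` and `price_index`, the sample prices, and the equal weights are all assumptions made for illustration. The index is taken to be the weighted generalized mean of order r of the price relatives, with r = 0 read as the geometric mean.

```python
import math

def generalized_mean(values, weights, r):
    """Weighted generalized mean of order r; r = 0 gives the geometric mean."""
    total = sum(weights)
    w = [wi / total for wi in weights]  # normalize the weights so they sum to 1
    if r == 0:
        return math.exp(sum(wi * math.log(x) for wi, x in zip(w, values)))
    return sum(wi * x ** r for wi, x in zip(w, values)) ** (1 / r)

def price_index(p1, p0, weights, r):
    """Generalized-mean index of the price relatives p1 / p0 (hypothetical helper)."""
    relatives = [a / b for a, b in zip(p1, p0)]
    return generalized_mean(relatives, weights, r)

# Illustrative data: three goods, equal weights.
p0 = [1.0, 2.0, 4.0]   # base-period prices
p1 = [2.0, 2.0, 2.0]   # current-period prices
w = [1.0, 1.0, 1.0]

arithmetic_index = price_index(p1, p0, w, 1)    # order 1: arithmetic mean of relatives
geometric_index = price_index(p1, p0, w, 0)     # order 0: geometric mean of relatives
harmonic_index = price_index(p1, p0, w, -1)     # order -1: harmonic mean of relatives

print(arithmetic_index, geometric_index, harmonic_index)
```

With equal weights, order 1 corresponds to a Carli-type index and order 0 to a Jevons-type index. The generalized mean is non-decreasing in r, so the three results are ordered harmonic, geometric, arithmetic.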

Inequalities Among Complementary Means of Heron Mean and Classical Means

Advances and Applications in Mathematical Sciences, Volume 20, Issue 7, May 2021, Pages 1249-1258. © 2021 Mili Publications. INEQUALITIES AMONG COMPLEMENTARY MEANS OF HERON MEAN AND CLASSICAL MEANS. AMBIKA M. HEJIB, K. M. NAGARAJA, R. SAMPATHKUMAR and B. S. VENKATARAMANA. Department of Mathematics, R N S Institute of Technology, Uttarahalli-Kengeri Main Road, R Rnagar post, Bengaluru-98, Karnataka, India. E-mail: [email protected] [email protected]. Department of Mathematics, J.S.S. Academy of Technical Education, Uttarahalli-Kengeri Main Road, Bengaluru-60, Karnataka, India. E-mail: [email protected]. Department of Mathematics, K S Institute of Technology, Kannakapura Main Road, Bengaluru - 560 109, Karnataka, India. E-mail: [email protected].

Abstract: In this paper, the complementary means of the arithmetic, geometric, harmonic and contraharmonic means with respect to the Heron mean are defined and verified to be means. Further, inequalities among them and the classical means are established.

2010 Mathematics Subject Classification: Primary 26D10, secondary 26D15. Keywords: Complementary mean, Heron mean, Classical means. Received September 7, 2020; Accepted February 18, 2021.

I. Introduction. In the Pythagorean school, ten Greek means are defined based on proportion; the following are the familiar means in the literature. For two positive real numbers $u_1$ and $v_1$, $A(u_1, v_1) = \frac{u_1 + v_1}{2}$; $G(u_1, v_1) = \sqrt{u_1 v_1}$; $H(u_1, v_1) = \frac{2 u_1 v_1}{u_1 + v_1}$ and $C(u_1, v_1) = \frac{u_1^2 + v_1^2}{u_1 + v_1}$. These are called the arithmetic, geometric, harmonic and contraharmonic means, respectively. The Handbook of Means and Their Inequalities, by Bullen [1], gave a tremendous body of work on mathematical means and the corresponding inequalities involving a huge number of means.
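
The four classical means named in the introduction can be checked numerically with a short Python sketch. The Heron mean is not written out in the excerpt; the form used here, (u + sqrt(uv) + v)/3, is its standard definition and is stated as an assumption, as are the function names and the sample values.

```python
import math

def arithmetic(u, v):
    return (u + v) / 2

def geometric(u, v):
    return math.sqrt(u * v)

def harmonic(u, v):
    return 2 * u * v / (u + v)

def contraharmonic(u, v):
    return (u * u + v * v) / (u + v)

def heron(u, v):
    # Standard Heron mean; assumed here, since the excerpt does not spell it out.
    return (u + math.sqrt(u * v) + v) / 3

u, v = 1.0, 4.0  # any two distinct positive numbers
values = {
    "harmonic": harmonic(u, v),
    "geometric": geometric(u, v),
    "heron": heron(u, v),
    "arithmetic": arithmetic(u, v),
    "contraharmonic": contraharmonic(u, v),
}
for name, value in values.items():
    print(f"{name:>15}: {value:.4f}")
```

For distinct positive inputs the values come out in the order listed in the dictionary, which is the familiar chain H < G < He < A < C.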

Digital Image Processing Chapter 5: Image Restoration Concept of Image Restoration

Digital Image Processing, Chapter 5: Image Restoration. Concept of Image Restoration. Image restoration aims to restore a degraded image back to the original image, while image enhancement manipulates the image so that it is suitable for a specific application. Degradation model: $g(x, y) = f(x, y) * h(x, y) + \eta(x, y)$, where $h(x, y)$ is a system that causes image distortion and $\eta(x, y)$ is noise. (Images from Rafael C. Gonzalez and Richard E. Woods, Digital Image Processing, 2nd Edition.)

We can perform the same operations in the frequency domain, where convolution is replaced by multiplication, and addition remains as addition, because of the linearity of the Fourier transform: $G(u, v) = F(u, v) H(u, v) + N(u, v)$. If we knew the values of $H$ and $N$ we could recover $F$ by writing the above equation as $F(u, v) = \frac{G(u, v) - N(u, v)}{H(u, v)}$. However, as we shall see, this approach may not be practical. Even though we may have some statistical information about the noise, we will not know the value of $\eta(x, y)$ or $N(u, v)$ for all, or even any, values. As well, dividing by $H(u, v)$ will cause difficulties if there are values which are close to, or equal to, zero.

Noise: We may define noise to be any degradation in the image signal, caused by external disturbance.
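
The frequency-domain relation can be tried on a small one-dimensional signal. The sketch below is an illustration only: it uses a naive discrete Fourier transform, circular convolution, no noise (N = 0), and an invented signal `f` and blur kernel `h`. It checks that G = F·H and that dividing by H recovers the original signal when H has no zeros.

```python
import cmath

def dft(x):
    """Naive discrete Fourier transform of a sequence."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * cmath.pi * u * k / n) for k in range(n))
            for u in range(n)]

def idft(X):
    """Naive inverse discrete Fourier transform."""
    n = len(X)
    return [sum(X[u] * cmath.exp(2j * cmath.pi * u * k / n) for u in range(n)) / n
            for k in range(n)]

def circular_convolve(f, h):
    """Circular convolution, the form of convolution the DFT turns into a product."""
    n = len(f)
    return [sum(f[m] * h[(k - m) % n] for m in range(n)) for k in range(n)]

f = [0.0, 1.0, 4.0, 2.0, 0.0, 3.0, 1.0, 0.0]   # illustrative "image" row
h = [0.6, 0.3, 0.0, 0.0, 0.0, 0.0, 0.0, 0.1]   # illustrative blur kernel
g = circular_convolve(f, h)                     # degraded signal, noise-free

F, H, G = dft(f), dft(h), dft(g)

# Convolution theorem: G(u) should equal F(u) * H(u) at every frequency.
max_theorem_error = max(abs(G[u] - F[u] * H[u]) for u in range(len(f)))

# Inverse filtering: with N = 0, F = G / H recovers the original signal.
f_restored = [z.real for z in idft([G[u] / H[u] for u in range(len(f))])]
max_restore_error = max(abs(a - b) for a, b in zip(f, f_restored))

# The smallest |H(u)| controls how strongly any noise term N / H is amplified.
min_abs_H = min(abs(Hu) for Hu in H)
print(max_theorem_error, max_restore_error, min_abs_H)
```

For this kernel the smallest magnitude of H is 0.2, so any noise at that frequency would be amplified by a factor of five in the restored signal. A kernel with values of H nearer to zero would make the amplification correspondingly worse, which is the practical difficulty the text describes.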

EEM 463 Introduction to Image Processing Week 5: Filtering in the Frequency Domain

EEM 463 Introduction to Image Processing, Week 5: Image Restoration and Reconstruction. Fall 2013. Instructor: Hatice Çınar Akakın, Ph.D. [email protected] Anadolu University. 12.11.2013

Image Restoration:
• Image restoration: recover an image that has been degraded by using a priori knowledge of the degradation phenomenon.
• Model the degradation and apply the inverse process in order to recover the original image.
• The principal goal of restoration techniques is to improve an image in some predefined sense.
• Although there are areas of overlap, image enhancement is largely a subjective process, while restoration is for the most part an objective process.

A Model of the Image Degradation/Restoration Process:
• The degraded image in the spatial domain: $g(x, y) = h(x, y) * f(x, y) + \eta(x, y)$
• Frequency domain representation: $G(u, v) = H(u, v) F(u, v) + N(u, v)$

Noise Models:
• The principal sources of noise in digital images arise during image acquisition and/or transmission:
  – Light levels and sensor temperature during acquisition
  – Lightning or other atmospheric disturbance in a wireless network during transmission
• White noise: the Fourier spectrum of this noise is constant, a carryover from the physical properties of white light, which contains nearly all frequencies in the visible spectrum in equal proportions.
• With the exception of spatially periodic noise, we assume:
  – Noise is independent of spatial coordinates
  – Noise is uncorrelated with respect to the image itself

Gaussian Noise:
• The pdf of a Gaussian random variable, $z$, is given by $p(z) = \frac{1}{\sqrt{2\pi}\,\sigma} e^{-(z - \bar{z})^2 / 2\sigma^2}$, where $z$ represents intensity, $\bar{z}$ is the mean (average) value of $z$, and $\sigma$ is its standard deviation.
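
The Gaussian pdf above translates directly into code. The following Python sketch evaluates the density and adds Gaussian noise to a small constant image; the function names, the 4x4 image, and the parameter values are illustrative assumptions, not part of the lecture.

```python
import math
import random

def gaussian_pdf(z, mean, sigma):
    """p(z) = exp(-(z - mean)^2 / (2 sigma^2)) / (sqrt(2 pi) sigma)."""
    return math.exp(-((z - mean) ** 2) / (2 * sigma ** 2)) / (math.sqrt(2 * math.pi) * sigma)

def add_gaussian_noise(image, mean, sigma, rng):
    """Return a copy of the image with independent Gaussian noise added to each pixel."""
    return [[pixel + rng.gauss(mean, sigma) for pixel in row] for row in image]

rng = random.Random(0)                      # fixed seed so the run is repeatable
image = [[100.0] * 4 for _ in range(4)]     # illustrative constant-intensity image
noisy = add_gaussian_noise(image, 0.0, 10.0, rng)

# The density peaks at the mean, with height 1 / (sqrt(2 pi) sigma).
peak = gaussian_pdf(0.0, 0.0, 10.0)

# Riemann-sum check that the density integrates to (approximately) one.
step = 0.1
area = sum(gaussian_pdf(-60.0 + i * step, 0.0, 10.0) * step for i in range(1201))
print(peak, area)
```

The noise is drawn independently for each pixel and does not depend on the pixel value, which matches the two assumptions listed under Noise Models.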

Computer Vision & Digital Image Processing Outline

Computer Vision & Digital Image Processing: Image Restoration and Reconstruction II. Electrical & Computer Engineering, Dr. D. J. Jackson, Lecture 12.

Outline:
• Periodic noise
• Estimation of noise parameters
• Restoration in the presence of noise only (spatial filtering)

Periodic noise:
• Periodic noise typically arises from interference during image acquisition
• Spatially dependent noise type
• Can be effectively reduced via frequency domain filtering

[Figures: sample periodic images and their spectra]

Estimation of noise parameters:
• Noise parameters can often be estimated by observing the Fourier spectrum of the image
  – Periodic noise tends to produce frequency spikes
• Parameters of noise PDFs may be known (partially) from sensor specification
  – Can still estimate them for a particular imaging setup
  – One method:
    • Capture a set of “flat” images from a known setup (i.e. a uniform gray surface under uniform illumination)
    • Study characteristics of resulting image(s) to develop an indicator of system noise
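
The "flat image" method can be sketched as follows. A real setup would capture an image of a uniform gray surface; here that capture is simulated, and the gray level, noise standard deviation, patch size, and the helper name `estimate_noise` are assumptions made for illustration. The noise parameters are then estimated as the sample mean and standard deviation of the patch.

```python
import random
import statistics

rng = random.Random(42)                      # fixed seed so the run is repeatable
TRUE_LEVEL, TRUE_SIGMA = 128.0, 5.0          # assumed gray level and noise level

# Simulated capture of a uniform gray surface under uniform illumination.
flat = [[TRUE_LEVEL + rng.gauss(0.0, TRUE_SIGMA) for _ in range(64)]
        for _ in range(64)]

def estimate_noise(patch):
    """Estimate the mean and standard deviation of a flat patch."""
    pixels = [p for row in patch for p in row]
    return statistics.mean(pixels), statistics.pstdev(pixels)

mean_est, sigma_est = estimate_noise(flat)
print(f"estimated mean {mean_est:.2f}, estimated sigma {sigma_est:.2f}")
```

Because the underlying surface is uniform, any spread in the pixel values can be attributed to the imaging system, so the estimated standard deviation serves as an indicator of system noise.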

Optimal Inequalities for Bounding Toader Mean by Arithmetic and Quadratic Means

Zhao et al. Journal of Inequalities and Applications (2017) 2017:26, DOI 10.1186/s13660-017-1300-8. RESEARCH, Open Access. Optimal inequalities for bounding Toader mean by arithmetic and quadratic means. Tie-Hong Zhao1, Yu-Ming Chu1* and Wen Zhang2. *Correspondence: [email protected]. 1School of Mathematics and Computation Sciences, Hunan City University, Yiyang, 413000, China. Full list of author information is available at the end of the article. © The Author(s) 2017.

Abstract: In this paper, we present the best possible parameters $\alpha(r)$ and $\beta(r)$ such that the double inequality

$$\left[\alpha(r) A^r(a, b) + (1 - \alpha(r)) Q^r(a, b)\right]^{1/r} < TD\left[A(a, b), Q(a, b)\right] < \left[\beta(r) A^r(a, b) + (1 - \beta(r)) Q^r(a, b)\right]^{1/r}$$

holds for all $r \le 1$ and $a, b > 0$ with $a \ne b$, and we provide new bounds for the complete elliptic integral $E(r) = \int_0^{\pi/2} (1 - r^2 \sin^2\theta)^{1/2}\, d\theta$ ($r \in (0, \sqrt{2}/2)$) of the second kind, where $TD(a, b) = \frac{2}{\pi} \int_0^{\pi/2} \sqrt{a^2 \cos^2\theta + b^2 \sin^2\theta}\, d\theta$, $A(a, b) = (a + b)/2$ and $Q(a, b) = \sqrt{(a^2 + b^2)/2}$ are the Toader, arithmetic, and quadratic means of $a$ and $b$, respectively.

MSC: 26E60. Keywords: arithmetic mean; Toader mean; quadratic mean; complete elliptic integral.

1 Introduction. For $p \in [0, 1]$, $q \in \mathbb{R}$ and $a, b > 0$ with $a \ne b$, the $p$th generalized Seiffert mean $S_p(a, b)$, $q$th Gini mean $G_q(a, b)$, $q$th power mean $M_q(a, b)$, $q$th Lehmer mean $L_q(a, b)$, harmonic mean $H(a, b)$, geometric mean $G(a, b)$, arithmetic mean $A(a, b)$, quadratic mean $Q(a, b)$, Toader mean $TD(a, b)$, centroidal mean $\overline{C}(a, b)$, contraharmonic mean $C(a, b)$ are, respectively, defined by

$$S_p(a, b) = \begin{cases} \dfrac{p(a - b)}{\arctan[2p(a - b)/(a + b)]}, & 0 < p \le 1, \\ (a + b)/2, & p = 0, \end{cases}$$

$$G_q(a, b) = \begin{cases} \left[(a^{q-1} + b^{q-1})/(a + b)\right]^{1/(q-2)}, & q \ne 2, \\ (a^a b^b)^{1/(a+b)}, & q = 2, \end{cases}$$

$$M_q(a, b) = \begin{cases} \left[(a^q + b^q)/2\right]^{1/q}, & q \ne 0, \\ \sqrt{ab}, & q = 0, \end{cases}$$
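
The Toader mean has no elementary closed form, but the integral in the abstract is easy to evaluate numerically. The Python sketch below uses a midpoint rule; the function name `toader_mean`, the number of steps, and the sample values a = 1, b = 2 are assumptions made for illustration, and the code does not attempt to reproduce the paper's optimal parameters α(r) and β(r).

```python
import math

def toader_mean(a, b, steps=10_000):
    """TD(a, b) = (2 / pi) * integral over [0, pi/2] of sqrt(a^2 cos^2 t + b^2 sin^2 t) dt,
    approximated with the midpoint rule."""
    h = (math.pi / 2) / steps
    total = 0.0
    for i in range(steps):
        theta = (i + 0.5) * h
        total += math.sqrt((a * math.cos(theta)) ** 2 + (b * math.sin(theta)) ** 2)
    return (2 / math.pi) * total * h

def arithmetic_mean(a, b):
    return (a + b) / 2

def quadratic_mean(a, b):
    return math.sqrt((a * a + b * b) / 2)

a, b = 1.0, 2.0
A = arithmetic_mean(a, b)
TD = toader_mean(a, b)
Q = quadratic_mean(a, b)
print(f"A = {A:.5f}, TD = {TD:.5f}, Q = {Q:.5f}")
```

For these inputs the Toader mean falls strictly between the arithmetic and quadratic means, which is the range the paper's bounds refine. Since 2π·TD(a, b) is the perimeter of an ellipse with semi-axes a and b, the result can also be cross-checked against a known ellipse perimeter.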

The Biharmonic Mean of the Divisors of N

THE BIHARMONIC MEAN. MARCO ABRATE, STEFANO BARBERO, UMBERTO CERRUTI, NADIR MURRU. University of Turin, Department of Mathematics, Via Carlo Alberto 10, Turin, Italy. [email protected], [email protected] [email protected], [email protected]. arXiv:1601.03081v1 [math.NT] 12 Jan 2016.

Abstract: We briefly describe some well-known means and their properties, focusing on the relationship with integer sequences. In particular, the harmonic numbers, deriving from the harmonic mean, motivate the definition of a new kind of mean that we call the biharmonic mean. The biharmonic mean allows us to introduce the biharmonic numbers, providing a new characterization for primes. Moreover, we highlight some interesting divisibility properties and we characterize the semi-prime biharmonic numbers, showing their relationship with linear recurrent sequences that solve certain Diophantine equations.

Keywords: arithmetic mean, divisibility, geometric mean, harmonic mean, harmonic numbers, integer sequences. AMS Subject Classification: 11N80, 26E60.

1 INTRODUCTION. The need to explore Nature and to establish its rules from direct observations encouraged the ancient thinkers to find appropriate mathematical tools, able to extrapolate numerical data. The arithmetic mean is one of the oldest quantities introduced for this purpose, in order to find a unique approximate value of some physical quantity from the empirical data. It was probably used for the first time in the third century B.C., by the ancient Babylonian astronomers, in their studies on the positions and motions of celestial bodies. The mathematical relevance of the arithmetic mean was enhanced by the Greek astronomer Hipparchus (190-120 B.C.).

Heron and Alpha-Centroidal Mean Filters with Exponential Factors For

International Journal of Recent Technology and Engineering (IJRTE), ISSN: 2277-3878, Volume-9 Issue-1, May 2020. Heron and Alpha-Centroidal Mean Filters with Exponential Factors for Deep Space Images. Vivek B A, Keshav V Bharadwaj, Shankar Anbalagan, Abhinav Narayan, Sampath Kumar R.

Abstract: In this article, we discuss empirical methods that can be added on to enhance spatial image filters, and the implementation of such methods for deep-sky images from space telescopes. Spatial image filtering includes mean filters, which use a kernel method to filter a digital image in the spatial domain. To improve the performance of the existing filters and aid new upcoming filters, a technique is developed that can be added to a filter to improve its performance. This study gives a detailed analysis of an empirical weight factor and exponential factoring methods for the mean filters that are proposed and implemented. The exponential factoring is applied to the recent mean filters

... white respectively; this is an 8-bit representation. There are float and double representations for more accurate image description. A digital image can be represented mathematically as a matrix function. Consider a function f with two parameters, the m-th row and the n-th column. Fig. 1 shows a representation of the function f in image pixel space. The mathematical function f can be a grey image or a color image. A grey image contains pixel values from 0 to 1. Color images have three channels, Red (R), Blue (B) and Green (G), known simply as RGB. Each channel can have values from 0 to 255 for an 8-bit image.
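
A kernel-based mean filter of the kind the abstract refers to can be sketched in a few lines of Python. This is a generic 3x3 filter with a pluggable mean function; it is not the paper's Heron or alpha-centroidal filter and it does not include the weight or exponential factors, none of which are defined in the excerpt. The function names and the test image are assumptions.

```python
import math

def mean_filter(image, mean_fn):
    """Apply a 3x3 mean filter; border pixels are copied unchanged for simplicity."""
    rows, cols = len(image), len(image[0])
    out = [row[:] for row in image]
    for i in range(1, rows - 1):
        for j in range(1, cols - 1):
            # Collect the 3x3 neighbourhood (the kernel window) around pixel (i, j).
            window = [image[i + di][j + dj] for di in (-1, 0, 1) for dj in (-1, 0, 1)]
            out[i][j] = mean_fn(window)
    return out

def arithmetic_mean(values):
    return sum(values) / len(values)

def geometric_mean(values):
    # Requires strictly positive pixel values.
    return math.exp(sum(math.log(v) for v in values) / len(values))

# Illustrative image: uniform background with one bright outlier pixel.
image = [[10.0] * 5 for _ in range(5)]
image[2][2] = 250.0

smoothed_arithmetic = mean_filter(image, arithmetic_mean)
smoothed_geometric = mean_filter(image, geometric_mean)
print(smoothed_arithmetic[2][2], smoothed_geometric[2][2])
```

Passing the mean as a function makes it straightforward to swap in other means and compare how each one treats the same window. Here the geometric mean suppresses the bright outlier more strongly than the arithmetic mean.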

Sharp Inequalities Between Harmonic, Seiffert, Quadratic and Contraharmonic Means

Sharp Inequalities between Harmonic, Seiffert, Quadratic and Contraharmonic Means. Gen-Di Wang1, Chen-Yan Yang2 and Yu-Ming Chu1. 1Department of Mathematics, Huzhou Teachers College, Huzhou 313000, China; 2Department of Mathematics, Zhejiang Sci-Tech University, Hangzhou 310018, China. Correspondence should be addressed to Yu-Ming Chu, [email protected]. arXiv:1210.3875v1 [math.CA] 15 Oct 2012.

Abstract: In this paper, we present the greatest values $\alpha$, $\lambda$ and $p$, and the least values $\beta$, $\mu$ and $q$ such that the double inequalities $\alpha D(a, b) + (1 - \alpha) H(a, b) < T(a, b) < \beta D(a, b) + (1 - \beta) H(a, b)$, $\lambda D(a, b) + (1 - \lambda) H(a, b) < C(a, b) < \mu D(a, b) + (1 - \mu) H(a, b)$ and $p D(a, b) + (1 - p) H(a, b) < Q(a, b) < q D(a, b) + (1 - q) H(a, b)$ hold for all $a, b > 0$ with $a \ne b$, where $H(a, b) = 2ab/(a + b)$, $T(a, b) = (a - b)/[2 \arctan((a - b)/(a + b))]$, $Q(a, b) = \sqrt{(a^2 + b^2)/2}$, $C(a, b) = (a^2 + b^2)/(a + b)$ and $D(a, b) = (a^3 + b^3)/(a^2 + b^2)$ are the harmonic, Seiffert, quadratic, first contraharmonic and second contraharmonic means of $a$ and $b$, respectively.

2010 Mathematics Subject Classification: 26E60. Keywords: harmonic mean, Seiffert mean, quadratic mean, first contraharmonic mean, second contraharmonic mean.

1 Introduction. For $a, b > 0$ with $a \ne b$ the harmonic mean $H(a, b)$, Seiffert mean $T(a, b)$, quadratic mean $Q(a, b)$, first contraharmonic mean $C(a, b)$ and second contraharmonic mean $D(a, b)$ are defined by $H(a, b) = 2ab/(a + b)$, $T(a, b) = (a - b)/[2 \arctan((a - b)/(a + b))]$, $Q(a, b) = \sqrt{(a^2 + b^2)/2}$, $C(a, b) = (a^2 + b^2)/(a + b)$ and $D(a, b) = (a^3 + b^3)/(a^2 + b^2)$, respectively.
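
The five means defined in this abstract can be evaluated directly. The Python sketch below computes them for one sample pair and, for each of T, C and Q, the mixing weight t at which t·D + (1 − t)·H equals that mean. The sample values and the helper `mixing_weight` are assumptions for illustration; the weights are specific to the chosen pair and are not the paper's optimal constants, which must hold for all a and b.

```python
import math

def harmonic(a, b):
    return 2 * a * b / (a + b)

def seiffert(a, b):
    return (a - b) / (2 * math.atan((a - b) / (a + b)))

def quadratic(a, b):
    return math.sqrt((a * a + b * b) / 2)

def first_contraharmonic(a, b):
    return (a * a + b * b) / (a + b)

def second_contraharmonic(a, b):
    return (a ** 3 + b ** 3) / (a * a + b * b)

def mixing_weight(mean_value, a, b):
    """Weight t with t * D + (1 - t) * H == mean_value for this particular pair."""
    H, D = harmonic(a, b), second_contraharmonic(a, b)
    return (mean_value - H) / (D - H)

a, b = 1.0, 2.0
H, T, Q = harmonic(a, b), seiffert(a, b), quadratic(a, b)
C, D = first_contraharmonic(a, b), second_contraharmonic(a, b)
weights = {name: mixing_weight(value, a, b) for name, value in (("T", T), ("Q", Q), ("C", C))}
print(H, T, Q, C, D)
print(weights)
```

For this pair the means are ordered H < T < Q < C < D, so each weight lies strictly between 0 and 1. The double inequalities in the abstract bound such weights from below and above uniformly over all admissible a and b.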

Module 3 Image Restoration and Reconstruction Noise Models

Module 3: Image Restoration and Reconstruction. A Model of the Image Degradation/Restoration Process. The degradation process is modeled as a degradation function that, together with an additive noise term, operates on an input image $f(x, y)$ to produce a degraded image $g(x, y)$. Given $g(x, y)$, some knowledge about the degradation function $H$, and some knowledge about the additive noise term $\eta(x, y)$, the objective of restoration is to obtain an estimate $\hat{f}(x, y)$ of the original image. We want the estimate to be as close as possible to the original input image and, in general, the more we know about $H$ and $\eta$, the closer $\hat{f}(x, y)$ will be to $f(x, y)$. If $H$ is a linear, position-invariant process, then the degraded image is given in the spatial domain by $g(x, y) = h(x, y) * f(x, y) + \eta(x, y)$, where $h(x, y)$ is the spatial representation of the degradation function and the symbol "$*$" indicates convolution. Convolution in the spatial domain is analogous to multiplication in the frequency domain, so we may write the model in an equivalent frequency domain representation: $G(u, v) = H(u, v) F(u, v) + N(u, v)$, where the terms in capital letters are the Fourier transforms of the corresponding terms in the spatial-domain equation.

Noise Models. The principal sources of noise in digital images arise during image acquisition and/or transmission. The performance of imaging sensors is affected by a variety of factors, such as environmental conditions during image acquisition, and by the quality of the sensing elements themselves.
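
The spatial-domain model g = h * f + η can be simulated directly. The following Python sketch applies a centered kernel by circular two-dimensional convolution and then adds Gaussian noise. The circular boundary handling, the box-blur kernel, the impulse test image, and the function name `degrade` are all assumptions chosen to keep the example small.

```python
import random

def degrade(f, h, sigma, rng):
    """Return g = h * f + eta: circular 2-D convolution with a centered kernel h,
    plus independent Gaussian noise of standard deviation sigma."""
    rows, cols = len(f), len(f[0])
    kr, kc = len(h), len(h[0])
    g = [[0.0] * cols for _ in range(rows)]
    for x in range(rows):
        for y in range(cols):
            acc = 0.0
            for s in range(kr):
                for t in range(kc):
                    # Kernel offsets are measured from the kernel center;
                    # indices wrap around at the image border.
                    acc += h[s][t] * f[(x - s + kr // 2) % rows][(y - t + kc // 2) % cols]
            g[x][y] = acc + rng.gauss(0.0, sigma)
    return g

rng = random.Random(1)

# Illustrative input: a single bright pixel on a dark background.
f = [[0.0] * 5 for _ in range(5)]
f[2][2] = 9.0

# Illustrative degradation function: 3x3 box blur.
h = [[1.0 / 9.0] * 3 for _ in range(3)]

g_clean = degrade(f, h, 0.0, rng)   # blur only
g_noisy = degrade(f, h, 2.0, rng)   # blur plus additive noise
for row in g_clean:
    print([round(v, 3) for v in row])
```

With the noise switched off, the impulse spreads into a 3x3 block of ones, which is simply the kernel scaled by the pixel value. This makes the role of h as the spatial representation of the degradation function easy to see.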

Image Enhancement

Image Enhancement. Introduction to Signal and Image Processing. Prof. Dr. Philippe Cattin, MIAC, University of Basel. April 5th/12th, 2016.

Contents:
Abstract
1 Geometrical Image Corrections
1.1 Geometrical Image Transformations
• Introduction
• Geometrical Image Transformations
• Basic Steps of a Geometric Transform
• The Hierarchy of Geometric Transformations
• Class I: Isometries
• Class I: Isometries (2)
• Class I: Isometries Example
• Class I: Practical Issues
• Class II: Similarity Transformations
• Class II: Similarity Transformations (2)
• Class II: Similarity Transformations Example
• Class II: Practical Issues
• Class III: Affine Transformations
• Class III: Affine Transformations (2)
• Class III: Affine Transformation Example
• Class III: Practical Issues
• Class IV: Projective Transformations
• Class IV: Projective Transformations (2)
• Class IV: Projective Transformation Example
• Practical Issues and Common Pitfalls
• Determination of the Matrix Coefficients
• Determination of the Matrix Coefficients (2)
1.2 Pixel Interpolation Methods
• Applying Spatial Transformations to Images
• Forward Mapping
• Forward Mapping Example
• Backward Mapping
• Interpolation Methods
• Nearest Neighbour Interpolation
• Nearest Neighbour Interpolation Example
• Bilinear Interpolation
• Bilinear Interpolation Example

Noise Removal)

Digital Image Processing: Image Restoration and Reconstruction (Noise Removal). Christophoros Nikou, [email protected]. University of Ioannina, Department of Computer Science and Engineering. C. Nikou, Digital Image Processing (E12).

"Things which we see are not by themselves what we see… It remains completely unknown to us what the objects may be by themselves and apart from the receptivity of our senses. We know nothing but our manner of perceiving them." Immanuel Kant

Contents: In this lecture we will look at image restoration techniques used for noise removal:
– What is image restoration?
– Noise and images
– Noise models
– Noise removal using spatial domain filtering
– Noise removal using frequency domain filtering

What is Image Restoration? Image restoration attempts to restore images that have been degraded:
– Identify the degradation process and attempt to reverse it
– Similar to image enhancement, but more objective

Noise and Images: The sources of noise in digital images arise during image acquisition (digitization) and transmission:
– Imaging sensors can be affected by ambient conditions
– Interference can be added to an image during transmission

Noise Model: We can consider a noisy image to be modelled as follows: $g(x, y) = f(x, y) + \eta(x, y)$, where $f(x, y)$ is the original image pixel, $\eta(x, y)$ is the noise term and $g(x, y)$ is the resulting noisy pixel. If we can estimate the noise model we can figure out how to restore the image.