Finalist Reports 2011-2012

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

55th Legislature - State of New Mexico - First Session, 2021

HOUSE TAXATION AND REVENUE COMMITTEE SUBSTITUTE FOR HOUSE BILL 285 55TH LEGISLATURE - STATE OF NEW MEXICO - FIRST SESSION, 2021 AN ACT RELATING TO CAPITAL EXPENDITURES; AUTHORIZING THE ISSUANCE OF SEVERANCE TAX BONDS; AUTHORIZING EXPENDITURES FROM CERTAIN FUNDS AND BALANCES; CLARIFYING CONDITIONS FOR THE ISSUANCE OF BONDS; ESTABLISHING CONDITIONS FOR THE EXPENDITURE OF SEVERANCE TAX BOND PROCEEDS; PROVIDING CONTINGENT AUTHORIZATION TO THE PUBLIC EDUCATION DEPARTMENT TO REQUEST BUDGET INCREASES FOR CERTAIN CAPITAL EXPENDITURES; ESTABLISHING CONDITIONS FOR THE REVERSION OF UNEXPENDED BALANCES; MAKING APPROPRIATIONS; DECLARING AN EMERGENCY. BE IT ENACTED BY THE LEGISLATURE OF THE STATE OF NEW MEXICO: SECTION 1. SEVERANCE TAX BONDS--AUTHORIZATIONS--APPROPRIATION OF PROCEEDS.-- A. The state board of finance may issue and sell severance tax bonds in compliance with the Severance Tax Bonding Act in an amount not to exceed the total of the amounts authorized for purposes specified in this act. The state board of finance shall schedule the issuance and sale of the bonds in the most expeditious and economical manner possible upon a finding by the board that the project has been developed sufficiently to justify the issuance and that the project can proceed to contract within a reasonable time. The state board of finance shall further take the appropriate steps necessary to comply with the federal Internal Revenue Code of 1986, as amended. -

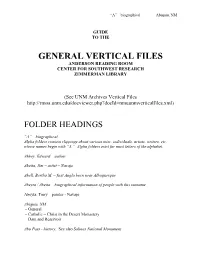

General Vertical Files Anderson Reading Room Center for Southwest Research Zimmerman Library

GUIDE TO THE GENERAL VERTICAL FILES ANDERSON READING ROOM CENTER FOR SOUTHWEST RESEARCH ZIMMERMAN LIBRARY (See UNM Archives Vertical Files http://rmoa.unm.edu/docviewer.php?docId=nmuunmverticalfiles.xml) FOLDER HEADINGS “A” – biographical Alpha folders contain clippings about various misc. individuals, artists, writers, etc., whose names begin with “A.” Alpha folders exist for most letters of the alphabet. Abbey, Edward – author Abeita, Jim – artist – Navajo Abell, Bertha M. – first Anglo born near Albuquerque Abeyta / Abeita – biographical information of people with this surname Abeyta, Tony – painter – Navajo Abiquiu, NM – General – Catholic – Christ in the Desert Monastery – Dam and Reservoir Abo Pass – history. See also Salinas National Monument Abousleman – biographical information of people with this surname Afghanistan War – NM – See also Iraq War Abrams, Jonathan – art collector Abreu, Margaret Silva – author: Hispanic, folklore, foods Abruzzo, Ben – balloonist. See also Ballooning, Albuquerque Balloon Fiesta Acequias – ditches (canoas, ground water, surface water, pumping, water rights) (See also Land Grants; Rio Grande Valley; Water; and Santa Fe – Acequia Madre) Acequias – Albuquerque, map 2005-2006 – ditch system in city Acequias – Colorado (San Luis) Ackerman, Mae N. – Masonic leader Acoma Pueblo – Sky City. See also Indian gaming. See also Pueblos – General; and Onate, Juan de Acuff, Mark – newspaper editor – NM Independent and -

NM MESA Celebrates STEM Day and MESA Day at the NM Legislature

NM MESA Celebrates STEM Day and MESA Day at the NM Legislature. Kim Scheerer, CI Regional Coordinator. On January 18th, the Legislators of Santa Fe celebrated the first official STEM (science, technology, engineering and mathematics) Day at the Roundhouse! Organized and supported by the Los Alamos ... students for college majors and careers in mathematics, engineering and science. This is in accordance with Senate Memorial Bill 10, introduced by Senator Pete Campos from District 8 encompassing Colfax, Mora, Harding and much of San Miguel County. The bill recognizes NM MESA for its contributions to science education achievement, college preparation, career guidance, field trips, academic competitions, leadership workshops, summer programs, scholarship incentives, community service and teacher professional development. ... more than 100 MESA students from Cibola, McKinley, Rio Arriba, Sandoval, San Miguel and Santa Fe counties brought more than 1,000 letters of support for NM MESA to deliver to Representatives and Senators from all over the Land of Enchantment. Students heard special presentations by ... More Diversity in NM MESA. Toney Begay, Executive Director. We are in the midst of the 2016-2017 school year throughout the state of New Mexico. We, New Mexico Mathematics, Engineering, Science Achievement, Inc. (NM MESA) have been very busy meeting our mission, even with the additional challenges of reduced funding. We are reaching students throughout the state who may or may not be thinking about attending college and majoring in the Science, Technology, Engineering and Mathematics (STEM) fields. Our student participants vary and reflect the diversity of our state. -

Early Voting: 19 Early Voting Convenience Centers (EVCCs); Election Day: 69 Voting Convenience Centers (VCCs)

Early Voting: 19 Early Voting Convenience Centers (EVCCs) are open October 19th - November 2nd, Monday – Saturday from 8 a.m. to 8 p.m. All Early Voting sites are open on Election Day. Early Voting sites: 98TH & CENTRAL, 120 98TH ST NW SUITE B101, B102; ALAMEDA WEST, 10131 COORS RD NW SUITE C-02; BERNALILLO COUNTY VISITOR CENTER, 6080 ISLETA BLVD SW; CARACOL PLAZA, 12500 MONTGOMERY BLVD NE STE 101; CENTRAL MERCADO, 301 SAN PEDRO DR SE SUITE B, C, D, E; CLERKS ANNEX, 1500 LOMAS BLVD. NW STE. A; DASKALOS PLAZA, 5339 MENAUL BLVD NE; RAYMOND - Election Day: 69 Voting Convenience Centers (VCCs) are open Tuesday, November 5th from 7 a.m. to 7 p.m. Election Day sites: A. MONTOYA ELEMENTARY SCHOOL, 24 PUBLIC SCHOOL RD.; ADOBE ACRES ELEMENTARY SCHOOL, 1724 CAMINO DEL VALLE SW; ALBUQUERQUE HIGH SCHOOL, 800 ODELIA RD NE; ARROYO DEL OSO ELEMENTARY SCHOOL, 6504 HARPER DR NE; BANDELIER ELEMENTARY SCHOOL, 3309 PERSHING AVE SE; BELLEHAVEN ELEMENTARY SCHOOL, 8701 PRINCESS JEANNE AVE NE; CHAPARRAL ELEMENTARY SCHOOL, 6325 MILNE RD NW; CIBOLA HIGH SCHOOL, 1510 ELLISON DR NW; DEL NORTE HIGH SCHOOL, 5323 MONTGOMERY BLVD NE; DOUBLE EAGLE ELEMENTARY SCHOOL, 8901 LOWELL DR NE; LYNDON B JOHNSON MIDDLE SCHOOL, 6811 TAYLOR RANCH RD NW; MADISON MIDDLE SCHOOL, 3501 MOON ST NE; MANZANO HIGH SCHOOL, 12200 LOMAS BLVD NE; MANZANO MESA ELEMENTARY SCHOOL, 801 ELIZABETH ST SE; MCKINLEY MIDDLE SCHOOL, 4500 COMANCHE RD NE; MONTEZUMA ELEMENTARY SCHOOL, 3100 INDIAN SCHOOL RD NE; MOUNTAIN VIEW COMMUNITY CENTER, 201 PROSPERITY AVE SE; ONATE ELEMENTARY SCHOOL, 12415 BRENTWOOD HILLS BLVD NE; PAJARITO ELEMENTARY SCHOOL, 2701 DON FELIPE SW; POLK MIDDLE SCHOOL, 2220 RAYMAC RD SW -

National Blue Ribbon Schools Recognized 1982-2015

NATIONAL BLUE RIBBON SCHOOLS PROGRAM Schools Recognized 1982 Through 2015 (School Name, City, Year). ALABAMA: Academy for Academics and Arts, Huntsville (87-88); Anna F. Booth Elementary School, Irvington (2010); Auburn Early Education Center, Auburn (98-99); Barkley Bridge Elementary School, Hartselle (2011); Bear Exploration Center for Mathematics, Science and Technology School, Montgomery (2015); Beverlye Magnet School, Dothan (2014); Bob Jones High School, Madison (92-93); Brewbaker Technology Magnet High School, Montgomery (2009); Brookwood Forest Elementary School, Birmingham (98-99); Buckhorn High School, New Market (01-02); Bush Middle School, Birmingham (83-84); C.F. Vigor High School, Prichard (83-84); Cahaba Heights Community School, Birmingham (85-86); Calcedeaver Elementary School, Mount Vernon (2006); Cherokee Bend Elementary School, Mountain Brook (2009); Clark-Shaw Magnet School, Mobile (2015); Corpus Christi School, Mobile (89-90); Crestline Elementary School, Mountain Brook (01-02, 2015); Daphne High School, Daphne (2012); Demopolis High School, Demopolis (2008); East Highland Middle School, Sylacauga (84-85); Edgewood Elementary School, Homewood (91-92); Elvin Hill Elementary School, Columbiana (87-88); Enterprise High School, Enterprise (83-84); EPIC Elementary School, Birmingham (93-94); Eura Brown Elementary School, Gadsden (91-92); Forest Avenue Academic Magnet Elementary School, Montgomery (2007); Forest Hills School, Florence (2012); Fruithurst Elementary School, Fruithurst (2010); George Hall Elementary School, Mobile (96-97); George Hall Elementary School, Mobile (2008); Grantswood Community School, Irondale (91-92); Guntersville Elementary School, Guntersville (98-99); Heard Magnet School, Dothan (2014); Hewitt-Trussville High School, Trussville (92-93); Holtville High School, Deatsville (2013); Holy Spirit Regional Catholic School, Huntsville (2013); Homewood High School, Homewood (83-84); Homewood Middle School, Homewood (83-84, 96-97); Indian Valley Elementary School, Sylacauga (89-90); Inverness Elementary School, Birmingham (96-97); Ira F. -

000124 APS Primer.Indd

ALBUQUERQUE PUBLIC SCHOOLS Status Quo? ¿Qué? No Way! An APS Primer 2013-2014 There’s Nothing Status Quo About APS A message from Superintendent Winston Brooks Status quo. It’s a popular catch phrase among critics of public education. It implies that those who have dedicated their lives to helping the next generation are satisfied with mediocrity, are in it for the paycheck, are dispassionate and uncaring. Walk into an Albuquerque Public Schools classroom and you know that’s hardly the case. We’re dedicated to our profession. We appreciate the enormity of the task. We’re up for the challenge. And it certainly is a challenge. Teaching children who face so many difficulties -- whether they be mental, physical, language barriers, poverty or others -- means personalizing education. It means a willingness to try new things, admit failure, regroup, start again. It means anything but status quo. To those who say, “Status Quo,” we say “What?” or in Spanish, “¿Qué? No Way!” We invite you to learn more about APS in the pages of this 2013-2014 Primer. We’ll fill you in on some of our successes over the past few years and the plans we have for the future as we continue to provide the foundation for happy and successful lives for all of our students. To those who say, “Status Quo,” we say “What? ¿Qué? No Way!” APS Goals Goal One: Academic Achievement APS will implement an academic plan aimed at improving achievement for all students with an intensified focus on closing the achievement gap. -

Press Release

NM MESA, INC. 1015 Tijeras Ave NW; Ste. 200 Albuquerque, NM 8710 Phone (505) 366-2500 Fax (505) 366-2529 Press Release Contact: Anita Gonzales FOR IMMEDIATE RELEASE Phone: 505-718-9517 September 29, 2020 NM MESA to Award $113,239 to 2020 Graduating Seniors for Loyalty Award ALBUQUERQUE, NM: Congratulations to our 2020 Loyalty Award Recipients! 144 students from our NM MESA High Schools earned $113,239 to be used for their secondary education expenses. To be eligible for the award, students must: be active in NM MESA for two or more years; demonstrate program loyalty by earning a minimum of 175 activity points; meet or exceed GPA standards and/or standardized test scores; enroll in an academic program the semester following graduation or enlist for military service; and submit all required paperwork to participate in NM MESA and receive a financial award. The amount of the award is determined by overall activity participation for all years in the NM MESA program, academic performance, STEM course completion, and any leadership positions held while in the NM MESA program. In total, NM MESA students are eligible for an award of up to $1000 upon graduation. NM MESA acknowledges the hard work and achievement of all of our graduating students and announces the 2020 Loyalty Award Recipients (Name, Graduating High School, Current College Enrolled, Major): Aaron Lopez, Onate High School, New Mexico State University, Mechanical Engineering; Abigail Clarke, Manzano High School, Deferred Enrollment*; Adreana Porras, South Valley Academy, University of New Mexico -

Manzano High School Band Handbook Pride, Tradition, Excellence

Manzano High School Band Handbook 2019-2020 The handbook has been developed to foster a sense of dedication, commitment and responsibility in each student; qualities that are essential to the success of the band program and the individual. Phil Perez Director of Bands 559-2200, ext. 23432 [email protected] Pride, Tradition, Excellence Table of Contents: STAFF; DRUM MAJORS; SECTION LEADERS; VOLUNTEER INFORMATION; BAND BOOSTERS, 2017-2018; MANZANO HIGH SCHOOL BAND CALENDAR 2019/2020; BAND CAMP; EXPECTATIONS OF STUDENTS; MARCHING BAND -

Developing the Bilingual Seal Criteria and Assessment for the Albuquerque Public School District

DEVELOPING THE BILINGUAL SEAL CRITERIA AND ASSESSMENT FOR THE ALBUQUERQUE PUBLIC SCHOOL DISTRICT. Lynne Rosen - APS Language & Cultural Equity; Denise Sandy-Sánchez - Dual Language Education of NM; Luisa Castillo - West Mesa High School; Marisa Silva - Valley High School; Susan Gandert - Albuquerque High School. 2008 La Cosecha Conference Presentation. Agenda: Provide the historical background for the APS Bilingual Seal. Share the process of defining the criteria and designing the assessments & process. Describe the criteria and assessment process. Provide assessment examples. Share next steps. Invite questions and comments. Purpose & Desired Outcomes: Identify the academic criteria needed to receive the Bilingual Seal: course requirements, G.P.A., required level for Spanish course, teacher recommendations. Design Bilingual Seal assessment areas that achieve fidelity across the district, yet allow for flexibility: Reading/Comprehension, Writing, Oral/Interview. APS Bilingual Seal - Historical Background: Began as a grassroots project at Rio Grande HS through a Title VII grant. 4 additional high schools modeled their bilingual seal criteria after Rio Grande HS. APS Board of Education Members requested that we move from a school bilingual seal towards a district bilingual seal (2004). LCE met with bilingual coordinators and curriculum assistant principals to determine district criteria for seniors to earn a bilingual seal on their diploma and transcript (2004-05). LCE presented Bilingual Seal Criteria -

ACCOUNTING APPLICATIONS SERIES AWARDS REPORT Place Name School Alt

ACCOUNTING APPLICATIONS SERIES AWARDS REPORT (Place, Name, School): 1. Elijah Ruiz, Eldorado High School; 2. Kaylee Brown, La Cueva High School; 3. Marissa Padilla, La Cueva High School; 4. Mason Gomez-valenzuela, Albuquerque High School; 5. Delijlah Palacios, Highland High School; 6. Dave Barraza, Sandia High School; 7. Rachel Sandoval, Clovis High School; 8. Vy Chau, Valley High School; 9. Carlos Lovato, Albuquerque High School. Printed: 03/27/19 10:15 AM Page 1 of 51. APPAREL & ACCESSORIES MARKETING SERIES AWARDS REPORT (Place, Name, School): 1. Ryan Langeler, Sandia High School; 2. Nick Petty, Eldorado High School; 3. Travis Pierre, Sandia High School; 4. Silvia Bonilla, Atrisco Heritage Academy; 5. Destiny Jaramillo, Rio Rancho High School; 6. Sharlotte Holmes, La Cueva High School; 7. Alejandra Vazquez, West Mesa High School; 8. Angelo Dimas, Cleveland High School; 9. Gabriel Lucero, Cleveland High School; 10. Charles Pacheco, Cibola High School. AUTOMOTIVE SERVICES MARKETING SERIES AWARDS REPORT (Place, Name, School): 1. Dominic Velasquez, Volcano Vista High School; 2. Austin Famiglietta, Volcano Vista High School; 3. V. Derick Hooper, Highland High School; 4. Felicia Lopez, Manzano High School; 5. Dominick Durkin, Sandia High School; 6. Connor Leonard, Valley High School; 7. Adrian Castro, Highland High School; 8. Adam Kent, Volcano Vista High School. BUSINESS FINANCE SERIES AWARDS REPORT (Place, Name, School): 1. Anish Kumar, Albuquerque Academy; 2. Nathan Abraha, Albuquerque Academy; 3. Daniel Topa, Manzano High School; 4. Hunter Jaramillo -

Annual Report 2016-2017

UNM STEM-H Center for Outreach, Research & Education ANNUAL REPORT 2016-2017 MSC 09 5233 – Health Sciences & Services Building, Suite 102, 1 University of New Mexico, Albuquerque, NM 87131-0001 (505) 277-4916 STEM-H Center (505) 272-2728 Office for Diversity STEM-H Center Website http://stemed.unm.edu NM STEM-H Connection Statewide Collaborative Website http://www.nmstemh.org TABLE OF CONTENTS: Mission & Vision; STEM-H Center Team Values; From the Director & Advisory Board; Host Institution; Outreach (Research Challenge, Science Olympiad, Junior Science & Humanities Symposium); Education (Educator Professional Development Workshops, Student Project Development Bootcamp, UNM STEM-H Resource Center/Lending Library); Research/Policy/Advocacy (UNM STEM-H Connection Statewide Website, Development of Strategic Collaborations/Partnerships, Policy/Advocacy); Board & Staff; Financial Information (Current Information & Implications, FY17 Business/Community Group Donors, FY17 Individual Donors); Schools Served; Appendices (Volunteer Hours & Value of Time, Research Challenge Statistics, Science Olympiad Statistics, JSHS Statistics, Combined Programs Statistics, Awards Values, Awards Donors List). MISSION The UNM STEM-H Center advances K-12 STEM-H* teaching and learning through outreach, research and education activities. *STEM-H = Science Technology Engineering Math & Health Our mission is accomplished by, but not limited to… Cultivating strategic STEM-H education focused partnerships and collaborations among UNM faculty/staff -

Eric Rombach-Kendall

ERIC ROMBACH-KENDALL Director of Bands: University of New Mexico Past President: College Band Directors National Association EDUCATION The University of Michigan, Ann Arbor, Michigan. 1986-1988. MM (Wind Conducting) The University of Michigan, Ann Arbor, Michigan. 1983-1984. MM (Music Education) University of Puget Sound, Tacoma, Washington. 1975-1979. BM (Music Education), Cum Laude TEACHING EXPERIENCE University of New Mexico, Albuquerque, New Mexico. 1993 to Present. Professor of Music, Director of Bands. Provide leadership and artistic vision for comprehensive band program. Regular Courses: Wind Symphony, Graduate Wind Conducting, Undergraduate Conducting, Instrumental Repertory. Additional Courses: Instrumental Music Methods, Introduction to Music Education. Boston University, Boston, Massachusetts. 1989-1993. Assistant Professor of Music, Conductor Wind Ensemble. Courses: Wind Ensemble, Chamber Winds, Concert Band, Contemporary Music Ensemble, Conducting, Instrumental Literature and Materials, Philosophical Foundations of Music Education. Carleton College, Northfield, Minnesota. 1988-1989. Instructor of Music (sabbatical replacement). Courses: Wind Ensemble, Jazz Ensemble, Chamber Music. The University of Michigan, Ann Arbor, Michigan. 1986-1988. Graduate Assistant: University Bands. Conductor of Campus Band, Assistant Conductor Michigan Youth Band. The University of Michigan, 1984. Graduate Assistant in Music Education. Instructor of wind and percussion techniques for undergraduate music education students. Oak Harbor School District, Oak Harbor, Washington. 1985-1986. Director of Bands, Oak Harbor High School. Courses taught: Wind Ensemble, Symphonic Band, Jazz Band, Marching Band. Peninsula School District, Gig Harbor, Washington. 1979-1983, 1984-1985. Director of Bands, Gig Harbor High School, Goodman Middle School. Courses taught: Symphonic Band, Concert Band, Jazz Band, Marching Band/Pep Band, elementary instrumental music. 
RELATED PROFESSIONAL EXPERIENCE College Band Directors National Association National President, 2011-2013.