The Angel on Your Shoulder: Prompting Employees to Do the Right Thing Through the Use of Wearables Timothy L

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


"THE WONDERFUL APPEARANCE of an Angel, Devil & Ghost, to a GENTLEMAN in the Town of Boston, in the Nights of the 14th, 15th, and 16th of October, 1774" (Boston, 1774)

National Humanities Center My Neighbor, My Enemy: How American Colonists Became Patriots and Loyalists "THE WONDERFUL APPEARANCE of an Angel, Devil & Ghost, to a GENTLEMAN in the Town of Boston, In the Nights of the 14th, 15th, and 16th of October, 1774" (Boston, 1774) A little known political document, "The Wonderful Appearance" is sort of a colonial American version of A Christmas Carol in which three apparitions try to convert a sinner. It was published in Boston in the summer of 1774, the moment of greatest popular rage against royal officials in Massachusetts. It asked the ordinary reader to consider carefully what might happen to someone who tried to remain neutral (or worse, gave comfort to the enemy) during a revolutionary crisis. Although the story contains marvelous humor, it reminds us of the pressure a community could bring to bear on enemies and skeptics. The narrator, who sympathizes with the British, returns to his room after a supper with some jovial companions and is struck by terror when he hears an awful sound nearby. The noise continues until a "violent wrap against" his window signals the entrance of an angel, who pulls up a chair and delivers two messages. First, the angel says, if the narrator does not cease to oppress his countrymen, he will end up in hell. Second, the Devil is going to drop by tomorrow night to tell him so in person. Like Scrooge, the narrator tries to convince himself that his first nocturnal visitor was merely a delusion, but he never quite succeeds. The next night, as predicted, the Devil, a rational, cool-headed gentleman, appears and asks the narrator how he became such an enemy to his country.

Bible Knowledge Sword and Had Kefa (Peter) Arrested? and Find Victory in Today

BATTLEFIELD NEWS Vol. 5 No. 12 ONE ARMY December 2014 The Official Publication of The Whole Body of Christ Alliance $1.00 Letter and Spirit The Cry From Hell Revolve/B-Shoc By: R. D. Hempton By: Richard Dean “The situation we are in at this very moment,” “People say there is no hell, that when you’re Concert Deon Gerber began, “is that we have no home dead, that’s it,” said Pastor Carol Patman of the By: R. D. Hempton and no income. There is the promise of God and Miracle Deliverance churches as she spoke at The Jefferson Civic Center was the scene of an the provision of God. What do you do when you the 3-day revival held at Miracle deliverance extraordinary event on 14 November. Patsy are waiting for the promise and the provision of Commerce. “I just stopped by on my way to God to link up? The Holy Spirit said I will minister to everyone in this church that is in a Deon Gerber Pastor Carol Patman B-Shoc, the Christian Rap artist, ministered. similar situation. Ez 36:26 says, ‘I will give you heaven to tell you the devil is a liar. People a new heart and put a new spirit within you; I don’t believe there is a hell because nobody Garrett, pastor of Jefferson Gospel Tabernacle, will take the heart of stone out of your flesh and preaches on it any more. We are having revival arranged the concert for the community and the give you a heart of flesh.’ Through the blood of because the saints need reviving and (see pg 5) unchurched. -

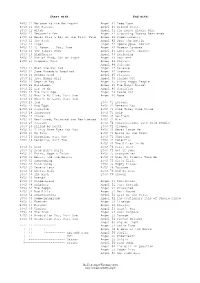

Buffy & Angel Watching Order

Start with: End with: BtVS 11 Welcome to the Hellmouth Angel 41 Deep Down BtVS 11 The Harvest Angel 41 Ground State BtVS 11 Witch Angel 41 The House Always Wins BtVS 11 Teacher's Pet Angel 41 Slouching Toward Bethlehem BtVS 12 Never Kill a Boy on the First Date Angel 42 Supersymmetry BtVS 12 The Pack Angel 42 Spin the Bottle BtVS 12 Angel Angel 42 Apocalypse, Nowish BtVS 12 I, Robot... You, Jane Angel 42 Habeas Corpses BtVS 13 The Puppet Show Angel 43 Long Day's Journey BtVS 13 Nightmares Angel 43 Awakening BtVS 13 Out of Mind, Out of Sight Angel 43 Soulless BtVS 13 Prophecy Girl Angel 44 Calvary Angel 44 Salvage BtVS 21 When She Was Bad Angel 44 Release BtVS 21 Some Assembly Required Angel 44 Orpheus BtVS 21 School Hard Angel 45 Players BtVS 21 Inca Mummy Girl Angel 45 Inside Out BtVS 22 Reptile Boy Angel 45 Shiny Happy People BtVS 22 Halloween Angel 45 The Magic Bullet BtVS 22 Lie to Me Angel 46 Sacrifice BtVS 22 The Dark Age Angel 46 Peace Out BtVS 23 What's My Line, Part One Angel 46 Home BtVS 23 What's My Line, Part Two BtVS 23 Ted BtVS 71 Lessons BtVS 23 Bad Eggs BtVS 71 Beneath You BtVS 24 Surprise BtVS 71 Same Time, Same Place BtVS 24 Innocence BtVS 71 Help BtVS 24 Phases BtVS 72 Selfless BtVS 24 Bewitched, Bothered and Bewildered BtVS 72 Him BtVS 25 Passion BtVS 72 Conversations with Dead People BtVS 25 Killed by Death BtVS 72 Sleeper BtVS 25 I Only Have Eyes for You BtVS 73 Never Leave Me BtVS 25 Go Fish BtVS 73 Bring on the Night BtVS 26 Becoming, Part One BtVS 73 Showtime BtVS 26 Becoming, Part Two BtVS 74 Potential BtVS 74 -

The Angels Loyalty

Hermetic Angel Messages PDF version 24 degrees Libra The Angels of Loyalty Also known as The Angels of Istaroth Beloved, Opening the heart to the purity of loyalty is one of the greatest blessings possible on earth. Sacred loyalty opens ways to serve peace on earth. Loyalty is essentially a commitment, or meditation, upon loving impeccability. Those who hold this concentration upon loyalty have made a commitment to someone or some worthy quality. A vow is made never to fall below a lofty, inspired standard of ethical behavior and commitment on every level of awareness. A loyal person is purified and uplifted by the very act of staying true to the commitment to uphold the high standard, and never forsake it. One of the special gifts bestowed by loyalty upon the person who has taken on its mantle is inner peace, a paradoxical gift: by giving it away toward another, you receive the solace yourself of always dwelling where there is no betrayal. By being completely loyal to the true spiritual essence of those you are committed to, and staying true to your commitment, you embark upon an exquisite journey of transformation to a special Garden of Eden, wherein the paths of mastery and sainthood unite. Although loyalty appears to limit one's options and restrict freedom, on the contrary, committing to be loyal opens a beautiful, inner world of refined emotions and peace of mind which is not accessible by any other means. In any relationship, we inspire each person so that they will never be disloyal. We help you discover anything that would cause infidelity, as you go along, so that you prevent it before it even starts.

Ever Faithful

Ever Faithful Ever Faithful Race, Loyalty, and the Ends of Empire in Spanish Cuba David Sartorius Duke University Press • Durham and London • 2013 © 2013 Duke University Press. All rights reserved Printed in the United States of America on acid-free paper ∞ Typeset in Minion Pro by Westchester Publishing Services. Library of Congress Cataloging-in-Publication Data Sartorius, David A. Ever faithful : race, loyalty, and the ends of empire in Spanish Cuba / David Sartorius. pages cm Includes bibliographical references and index. ISBN 978-0-8223-5579-3 (cloth : alk. paper) ISBN 978-0-8223-5593-9 (pbk. : alk. paper) 1. Blacks--Race identity--Cuba--History--19th century. 2. Cuba--Race relations--History--19th century. 3. Spain--Colonies--America--Administration--History--19th century. I. Title. F1789.N3S27 2013 305.80097291--dc23 2013025534 contents Preface • vii Acknowledgments • xv Introduction A Faithful Account of Colonial Racial Politics • 1 one Belonging to an Empire • 21 Race and Rights two Suspicious Affinities • 52 Loyal Subjectivity and the Paternalist Public three The Will to Freedom • 94 Spanish Allegiances in the Ten Years’ War four Publicizing Loyalty • 128 Race and the Post-Zanjón Public Sphere five “Long Live Spain! Death to Autonomy!” • 158 Liberalism and Slave Emancipation six The Price of Integrity • 187 Limited Loyalties in Revolution Conclusion Subject Citizens and the Tragedy of Loyalty • 217 Notes • 227 Bibliography • 271 Index • 305 preface To visit the Palace of the Captain General on Havana’s Plaza de Armas today is to witness the most prominent stone-and-mortar monument to the enduring history of Spanish colonial rule in Cuba.

Virtual Communities in the Buffyverse

Buffy, Angel, and the Creation of Virtual Communities By Mary Kirby Diaz Professor, Sociology-Anthropology Department Farmingdale State University of New York Abstract The Internet has provided a powerful medium for the creation of virtual communities. In the case of Buffy the Vampire Slayer and Angel: the Series, the community is comprised of fans whose commonality is their love of the universe created by the producers, writers, cast and crew of these two television series. This paper will explore the current status of the major ongoing communities of Buffy and Angel fans, and the support that binds them into a “real” virtual community. “The Internet, you know... The bitch goddess that I love and worship and hate. You know, we found out we have a fan base on the Internet. They came together as a family on the Internet, a huge, goddamn deal. It's so important to everything the show has been and everything the show has done – I can't say enough about it.' (http://filmforce.ign.com/articles/425/425492p10.html: IGGN. Introduction [1]This is the first of a projected series of short sociological studies dealing with the general subject of the Buffyverse fandom. Subsequent project topics include (1) canonical and non-canonical love in the Buffyverse (Bangels, Spuffies, Spangels, etc.), (2) fanfiction (writers and readers), (3) unpopular canonical decisions, (4) a review of the relevant literature on fandom, (5) the delineation of character loyalty, (6) the fancon, (7) the Buffyverse as entrepreneur, and (8) predictions about the Buffista and Angelista fandom over the next five years. -

Angel's First Loyalty Card Goes Live!

Angel’s first loyalty 33/ card goes live! In response to huge business demand we’re proud to present.... 15 Swipii, the Angel’s first loyalty card. We’ve signed up everyone’s favourite independent shops and eateries offering coffee, lunch, drinks and dinner, plus flowers, cosmetics, electricals and toys, with rewards you actually like! To get started, just pick up a card from one of these 20 businesses Pizzeria Oregano, Cuppacha bubble tea, After Noah, Angel Deli, Angel NEWS Flowers, L’Erbolario Italian natural beauty products, Tenshi Japanese, Snappy Snaps, Colibri fashion boutique, Angel Inn cafe, The Old Red Lion, Rayshack electrical, 5 Star dry cleaners, Chilango, Amorino Italian The newsletter from gelataria, Chana chemist, Diverse boutique, Radicals & Victuallers, Angel Business Tatami Health and Kimantra Spa. Improvement District Swipii works with one swipe of a card or a phone app on an iPad as you pay at the counter. Each business designs their own reward scheme but you only need one card for everything at the Angel - and it works across London too. “We’ve had requests for a loyalty scheme from businesses, and people working and living here, and we’re delighted to have found Swipii which is quick and easy to use,” says angel.london Chief Executive Christine Lovett. “It’s all about rewarding customer loyalty to everyone’s favourite brands and keeping the quirky independent flavour of businesses at the Angel, which brings people back time and again.” Don’t miss out - contact us for cards or to sign up your business 020 7288 4377 or [email protected] www.swipiicard Has your business Leap into dance – it’s a won an award? Tell us and we’ll tell stone’s throw away everyone else. -

National Angel Ring Project National Rollout of Life Saving Buoys for Rockfishers

National Angel Ring Project National Rollout of Life Saving Buoys for Rockfishers Stan Konstantaras April 2018 FRDC Project No 2011/404 Version 1.0 1 July 2013 © Year Fisheries Research and Development Corporation. All rights reserved. ISBN-13:9780-646-98533-6 National Angel Ring Project 2011/404 2018 Ownership of Intellectual property rights Unless otherwise noted, copyright (and any other intellectual property rights, if any) in this publication is owned by the Fisheries Research and Development Corporation Australian National Sportfishing Association This publication (and any information sourced from it) should be attributed to Konstantaras. S., Australian National Sportfishing Association, 2017, National Angel Ring Project, Sydney, February. CC BY 3.0 Creative Commons licence All material in this publication is licensed under a Creative Commons Attribution 3.0 Australia Licence, save for content supplied by third parties, logos and the Commonwealth Coat of Arms. Creative Commons Attribution 3.0 Australia Licence is a standard form licence agreement that allows you to copy, distribute, transmit and adapt this publication provided you attribute the work. A summary of the licence terms is available from creativecommons.org/licenses/by/3.0/au/deed.en. The full licence terms are available from creativecommons.org/licenses/by/3.0/au/legalcode. Inquiries regarding the licence and any use of this document should be sent to: [email protected] Disclaimer The authors do not warrant that the information in this document is free from errors or omissions. The authors do not accept any form of liability, be it contractual, tortious, or otherwise, for the contents of this document or for any consequences arising from its use or any reliance placed upon it.

The Third Angel's Message, and Proclaimed It in the Power of the Holy Spirit, the Lord Would Have Wrought Mightily with Their Efforts

The Third Angel’s Message (1897) February 9, 1897 The Spirit of Prophecy. - No. 1. A. T. Jones (Tuesday Forenoon, Feb. 9, 1897.) I SUPPOSE there is no one in this room who does not think but that he truly believes in the Spirit of Prophecy; that is, that the Spirit of Prophecy belongs to the church,-to this message as is manifested through Sister White, and that these things are believed, professedly believed at least, so far as the idea and the Scriptures that prove that such things are a part of this work. But that is not where the trouble lies, for we are in trouble now. If we do not know it, we are much worse off than if we were in trouble and did know it. And more than that, the cause of God, as well as you and I, are in such trouble that we are in danger day by day of incurring the wrath of God because we are where we are. The Lord tells us that more than once, and he tells us how we got there, and he tells us how to get out of it. And the only thing I know how to tell you here, is to study the Spirit of Prophecy, and get out of it what you need. That is only one of the statements that is made. In knowing these statements, and having known them for some time, I would have been glad to stay at home and go on with the work there, because there is so much to be done and so many involved. -

2015 TX STAAR Grade 5 Reading Released Book

GRADE 5 Reading Administered March 2015 RELEASED Copyright © 2015, Texas Education Agency. All rights reserved. Reproduction of all or portions of this work is prohibited without express written permission from the Texas Education Agency. READING Page 3 Read the selection and choose the best answer to each question. Then fill in the answer on your answer document. from Princess for a Week by Betty Ren Wright 1 “You don’t even know for sure you’re getting a dog,” Jacob grumbled. “We might be doing all this work for nothing.” 2 “I do know for sure,” Roddy corrected him. “I was there when my mom’s friend Linda called this morning. She shows dogs for rich people, and she’s taking one to a show in Philadelphia today. Her neighbor’s supposed to come in and look after things when Linda’s away, but the neighbor has the flu. So Linda needs someone to take care of her own dog, Princess, for a week. My mom said okay. And,” Roddy finished triumphantly, “the minute I heard that I remembered this doghouse.” 3 “Still a lot of work for one week,” Jacob mumbled. 4 Roddy didn’t argue. He’d wanted a dog for as long as he could remember. Now he had a week to prove to his mom that he was old enough to take care of one himself. 5 “You taking that thing to the dump?” 6 Both boys jumped. Neither one had noticed the girl coming toward them. 7 “Want some help?” she asked coolly. “I don’t mind.” 8 “No, thanks,” Roddy said. -

BLIND DATE and So the Stage Transforms, Perhaps to the Blind

GRIMM TALES RETOLD by Philip Holyman — Draft 1 — 15/08/17 BLIND DATE And so the stage transforms, perhaps to the Blind Date theme music, perhaps to an appropriately themed song. A private side room in a hospital. MUMMY TO BE is lying on the bed, heavily pregnant. DADDY TO BE is sitting in a chair nearby. She looks at him with hate-filled eyes. A DOCTOR is with them. DOCTOR: The test results are back. And I want to reassure you that everything is alright. We’ve been lucky, this time. It was just a false alarm. But we’re going to keep you in now, because you’re so close to full term. We’ll do everything we can, but it’s really important that you avoid any more stress from now on. It’s extremely bad for you and extremely bad for your baby. I can’t emphasise that seriously enough. We’ll come and check on you again in a little while, okay? DADDY TO BE: Thankyou, Doctor. MUMMY TO BE glowers at DADDY TO BE. The DOCTOR leaves. DADDY TO BE: That’s good news, then, isn’t it—? MUMMY TO BE: This is all your fault! You’re the reason this is happening! DADDY TO BE: I told you, I keep telling you — I tried my best—! 1 GRIMM TALES RETOLD by Philip Holyman — Draft 1 — 15/08/17 MUMMY TO BE: Your best’s not good enough, though, is it? It never is! DADDY TO BE: I’m doing everything I can! I’ve been everywhere looking for it! Nobody’s got any! I went to Marks and Sparks! I even went to Waitrose! MUMMY TO BE: It’s the only thing I want! The only thing I’ve ever asked you for! But what I want doesn’t really matter to you, does it? DADDY TO BE: That’s not true, -

THE COLLECTED POEMS of HENRIK IBSEN Translated by John Northam

1 THE COLLECTED POEMS OF HENRIK IBSEN Translated by John Northam 2 PREFACE With the exception of a relatively small number of pieces, Ibsen’s copious output as a poet has been little regarded, even in Norway. The English-reading public has been denied access to the whole corpus. That is regrettable, because in it can be traced interesting developments, in style, material and ideas related to the later prose works, and there are several poems, witty, moving, thought provoking, that are attractive in their own right. The earliest poems, written in Grimstad, where Ibsen worked as an assistant to the local apothecary, are what one would expect of a novice. Resignation, Doubt and Hope, Moonlight Voyage on the Sea are, as their titles suggest, exercises in the conventional, introverted melancholy of the unrecognised young poet. Moonlight Mood, To the Star express a yearning for the typically ethereal, unattainable beloved. In The Giant Oak and To Hungary Ibsen exhorts Norway and Hungary to resist the actual and immediate threat of Prussian aggression, but does so in the entirely conventional imagery of the heroic Viking past. From early on, however, signs begin to appear of a more personal and immediate engagement with real life. There is, for instance, a telling juxtaposition of two poems, each of them inspired by a female visitation. It is Over is undeviatingly an exercise in romantic glamour: the poet, wandering by moonlight mid the ruins of a great palace, is visited by the wraith of the noble lady once its occupant; whereupon the ruins are restored to their old splendour.