Research Report: Hybrid Clarinet Project

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

The KNIGHT REVISION of HORNBOSTEL-SACHS: a New Look at Musical Instrument Classification

The KNIGHT REVISION of HORNBOSTEL-SACHS: a new look at musical instrument classification
by Roderic C. Knight, Professor of Ethnomusicology, Oberlin College Conservatory of Music, © 2015, Rev. 2017

Introduction

The year 2015 marks the beginning of the second century for Hornbostel-Sachs, the venerable classification system for musical instruments, created by Erich M. von Hornbostel and Curt Sachs as Systematik der Musikinstrumente in 1914. In addition to pursuing their own interest in the subject, the authors were answering a need for museum scientists and musicologists to accurately identify musical instruments that were being brought to museums from around the globe. As a guiding principle for their classification, they focused on the mechanism by which an instrument sets the air in motion.

The idea was not new. The Indian sage Bharata, working nearly 2000 years earlier, in compiling the knowledge of his era on dance, drama and music in the treatise Natyashastra (ca. 200 C.E.), grouped musical instruments into four great classes, or vadya, based on this very idea: sushira, instruments you blow into; tata, instruments with strings to set the air in motion; avanaddha, instruments with membranes (i.e. drums); and ghana, instruments, usually of metal, that you strike. (This itemization and Bharata’s further discussion of the instruments is in Chapter 28 of the Natyashastra, first translated into English in 1961 by Manomohan Ghosh (Calcutta: The Asiatic Society, v.2).)

The immediate predecessor of the Systematik was a catalog for a newly-acquired collection at the Royal Conservatory of Music in Brussels. The collection included a large number of instruments from India, and the curator, Victor-Charles Mahillon, familiar with the Indian four-part system, decided to apply it in preparing his catalog, published in 1880 (this is best documented by Nazir Jairazbhoy in Selected Reports in Ethnomusicology – see 1990 in the timeline below).

Following the Science

November 2020

Following the Science: A systematic literature review of studies surrounding singing and brass, woodwind and bagpipe playing during the COVID-19 pandemic

Authors: John Wallace, Lio Moscardini, Andrew Rae and Alan Watson
Music Education Partnership Group (MEPGScotland), MEPGScotland.org, @MusicEducation10

Table of Contents
Overview: Introduction; Research Questions
Research Method: Systematic Review Consistency Checklist
Results: Thematic Categories
Discussion: Breathing; Singing; Brass playing; Woodwind playing; Bagpipes; Summary
Conclusions
Recommended measures to mitigate risk
Research Team
Appendix: Matrix of identified papers
References

Overview

Introduction

The current COVID-19 situation has resulted in widespread concern and considerable uncertainty relating to the position of musical performance and in particular potential risks associated with singing and brass, woodwind and bagpipe playing. There is a wide range of advice and guidance available but it is important that any guidance given should be evidence-based and the sources of this evidence should be known. The aim of the study was to carry out a systematic literature review in order to gather historical as well as the most current and relevant information which could provide evidence-based guidance for performance practice. This literature was analysed in order to determine the evidence of risk attached to singing and brass, woodwind and bagpipe playing, in relation to the spread of airborne pathogens such as COVID-19, through droplets and aerosol.

Program for Meet the Plumbers (April 20

The PLUMBING FACTORY BRASS BAND
Henry Meredith, Director
– Meet the PLUMBERS –
Music for various types of brass instruments in small and large ensembles
APRIL 20, 2016
Byron United Church – 420 Boler Road (@ Baseline), LONDON, Ontario

TUTTI – Introducing our members according to the types of instruments they play, from the bottom up
Joy, Peace & Happiness Richard Phillips (born 1962)

CORNETS – The sweeter, mellower cousins of the trumpets can still sound like trumpets for fanfares!
Tuba Mirum from the Manzoni Requiem (1874) Giuseppe Verdi (1813-1901), arr. H. Meredith
Fanfare for Prince Henry (1984) Jeff Smallman (born 1965)

BRASS BAND Instrument Predecessors – Parforce Horns for the hunt
Polka Mazur & Österreichesche Jägerlied Anton Wunderer (1850-1906)
Le Rendez-vous de Chasse (1828) Gioachino Rossini (1792-1868)

TROMBONES – The “perfect” instrument remains basically unchanged after five centuries!
Two Newfoundland Folk Songs arr. Kenneth Knowles
Let Me Fish Off Cape St. Mary’s & Lots of Fish in Bonavist’ Harbor
Jazz Invention No. 1 Lennie Niehaus (born 1929)

More Predecessors of BRASS BAND Instruments – Valveless Trumpets – The instruments of kings
Fanfares pour 4 Trompettes naturelles (1833) Sigismund Neukomm (1778-1858)
Allegro & Polonaise
Quartuor No. 1 – Marche François-Georges-Auguste Dauverné (1799-1874)

TUBAS/EUPHONIUMS – Bass and Baritone Horns of the Saxhorn type – Going places!
Indiana Polka (from Peters’ Saxhorn Journal, Cincinnati, 1859) Edmund Jaeger, J. Schatzman
Pennsylvania Polka Lester Lee (1903-1956) and Zeke Manners (1911-2000)
Washington Post March (1889) John Philip Sousa (1854-1932)

TUTTI – Another important place name – how the West was won
Dodge City (2001) Jeff Smallman

Muted CORNETS and Flugelhorns –
The Pink Panther (1964) Henry Mancini (1924-1994), arr.

Mystery Instrument Trivia!

Mystery Instrument Trivia!

ACTIVITY SUMMARY
In this activity, students will use online sources to research a variety of unusual wind-blown instruments, matching the instrument name with the clues provided. Students will then select one of the instruments for a deeper dive into the instrument’s origins and usage, and will create a multimedia presentation to share with the class.

STUDENTS WILL BE ABLE TO
identify commonalities and differences in music instruments from various cultures. (CA VAPA Music 3.0 Cultural Context)
gather information from sources to answer questions. (CA CCSS W 8)
describe objects with relevant detail. (CA CCSS SL 4)

Meet Our Instruments: HORN Video Demonstration

STEPS:
1. Review the HORN video demonstration by San Francisco Symphony Assistant Principal Horn Bruce Roberts: Meet Our Instruments: HORN Video Demonstration. Pay special attention to the section where he talks about buzzing the lips to create sound, and how tightening and loosening the buzzing lips create different sounds as the air passes through the instrument’s tubing.
2. Tell the students: There are many instruments that create sound the very same way, but without the valves to press. These instruments use ONLY the lips to play different notes!
3. Students will explore some unusual and distinctive instruments that make sound using only the buzzing lips and lip pressure, with no valves. Students should use online searches to match the trivia clues with the instrument names!

Mystery Instrument Trivia - CLUES:
This instrument was used in many places around the world to announce that the mail had arrived. Even today, a picture of this instrument is the official logo of the national mail delivery service in many countries.

Measurements and Simulations of Aerosol Released

Measurements and Simulations of Aerosol Released while Singing and Playing Wind Instruments

Tehya Stockman (a,*), Shengwei Zhu (b), Abhishek Kumar (c), Lingzhe Wang (d), Sameer Patel (e), James Weaver (f), Mark Spede (g), Don Milton (h), Jean Hertzberg (i), Darin Toohey (j), Marina Vance (k), Jelena Srebric (l), Shelly L. Miller (m,*)

a Department of Civil, Environmental, and Architectural Engineering, University of Colorado Boulder, Boulder, CO, 80309, USA
b Department of Mechanical Engineering, University of Maryland, College Park, 20742, MD, USA
c Department of Mechanical Engineering, University of Colorado Boulder, Boulder, 80309, CO, USA
d Department of Mechanical Engineering, University of Maryland, College Park, 20742, MD, USA
e Department of Civil Engineering, Indian Institute of Technology, Gandhinagar, Gujarat, 382355, India
f National Federation of State High School Associations, Indianapolis, IN, 46402, USA
g Department of Performing Arts, Clemson University, Clemson, SC, 29634, USA
h Maryland Institute for Applied Environmental Health, School of Public Health, University of Maryland, College Park, MD, 20740, USA
i Department of Mechanical Engineering, University of Colorado Boulder, Boulder, 80309, CO, USA
j Department of Atmospheric and Oceanic Sciences, University of Colorado Boulder, Boulder, 80309, CO, USA
k Department of Mechanical Engineering, University of Colorado Boulder, Boulder, 80309, CO, USA
l Department of Mechanical Engineering, University of Maryland, College Park, 20742, MD, USA
m Department of Mechanical Engineering, University of Colorado Boulder, Boulder, 80309, CO, USA
*Corresponding authors emails: [email protected], [email protected]

Abstract
Outbreaks from choir performances, such as the Skagit Valley Choir, showed that singing brings potential risk of COVID-19 infection.

The Alphorn in North America: “Blown Yodeling” Within a Transnational Community

City University of New York (CUNY), CUNY Academic Works
School of Arts & Sciences Theses, Hunter College, Fall 1-6-2021

The Alphorn in North America: “Blown Yodeling” Within A Transnational Community
by Maureen E. Kelly, CUNY Hunter College

More information about this work at: https://academicworks.cuny.edu/hc_sas_etds/682
Discover additional works at: https://academicworks.cuny.edu
This work is made publicly available by the City University of New York (CUNY). Contact: [email protected]

Submitted in partial fulfillment of the requirements for the degree of Master of Arts in Music, Hunter College, The City University of New York, 2021

Thesis Sponsor: Barbara Hampton (01/06/2021)
Second Reader: Barbara Oldham (01/06/2021)

CONTENTS
CHAPTER I: Meeting the North American Alphorn Community
1. Structure of Research
2. Data Gathering
3. Studying the Alphorn
a. The History of the Alphorn
b. Imagined Communities and Identity
c. Cultural Tourism
d. Organology
CHAPTER II: “Auslanders:” Travels to Switzerland
1. Bill Hopson
2. Laura Nelson
3. Gary Bang
CHAPTER III: Establishing North American Alphorn Schools
1. Utah
2. West Virginia
3. The Midwest
CHAPTER IV: “We All Serve the Same Master:” The Alphorn Trade
CHAPTER V: An Instrument of Kinship
Bibliography

LIST OF ILLUSTRATIONS
Bill Hopson in Frauenfeld
Harmonic Series
Excerpt, Brahms Symphony no. 1 in c minor
Albumblatt für Clara Schumann
Excerpt, Mozart Concerto no.

Fall 2020 AR

Published by the American Recorder Society, Vol. LXI, No. 3 • www.americanrecorder.org • Fall 2020

Volume LXI, Number 3, Fall 2020

Editor’s Note

My 1966 copy of On Playing the Flute by J.J. Quantz is dog-eared and has a number of sticky tabs hanging out, showing my years of referring to its ideas; the binding is releasing the pages consulted most often. After reading the articles in this issue, you may discover why and find your own copy.

This isn’t a typical AR issue. With few events taking place, and thus not much news in Tidings, it gives us the opportunity to run an entire large article on articulation in one issue, plus a shorter one on madrigals, with the authors outlining historical guidelines (like Quantz) and living sources, then providing music demonstrating ways you can apply articulation on your own (page 9).

Quantz also makes a brief appearance in the first of a series of Education pieces on ornamentation. In this issue (page 38), Michael Lynn covers the appoggiatura and trill, with the promise of more infor-

Features
Giving Voice to Music: The Art of Articulation, pages 9-22, 26-33. By Beverly R. Lomer and María Esther Jiménez Capriles
Articulating Arcadelt’s Swan, page 23. By Wendy Powers

Departments
Advertiser Index and Classified Ads, page 48
Education, page 38. Michael Lynn decodes what you should play when you see those small signs in the music
President’s Message, page 3. ARS President David Podeschi recaps the past few months, and looks ahead, thanking those who have been guiding the ARS’s efforts
Reviews: Recording

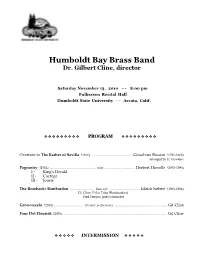

Prog HBBB '10 11-13-10 .Cwk

Humboldt Bay Brass Band
Dr. Gilbert Cline, director
Saturday November 13, 2010, 8:00 pm
Fulkerson Recital Hall, Humboldt State University, Arcata, Calif.

PROGRAM

Overture to The Barber of Seville (1816), Gioachino Rossini (1792-1868), arranged by G. Hawkins
Pageantry (1934), suite, Herbert Howells (1892-1983): I - King’s Herald, II - Cortege, III - Jousts
The Bombastic Bombardon, Bass solo, Edrich Siebert (1903-1984); Gil Cline, E-flat Tuba (Bombardon); Fred Tempas, guest conductor
Groo-vuzela (2010), Premier performance, Gil Cline
Four Dot Flourish (2005), Gil Cline

INTERMISSION

AFTER THE INTERVAL

Arnhem, march, A. E. Kelly (b. 1914)
Battaglia Die Schlacht (1551), Premier performance, Clement Janequin (1485-1558), arranged by Gilberti Clini
American Patrol (1891), Premier performance, F. W. Meacham (1850-1896), arranged by G. Cline
December 7th (2001), chorale, Hans

Canadian Brass

SCHOOL DAY PERFORMANCES FOR PUBLIC, PRIVATE, AND HOME SCHOOLS, GRADES PreK-12

Canadian Brass

IMPACT
This season we invite school communities to explore the performing arts through a selection of topics that reveal the IMPACT of the Arts for Youth.
Introduction to the arts
Meaning and cultural context
Production
Art-making and creativity
Careers
Training

BRASS CLASS
Did you know that the didgeridoo, the shofar, the vuvuzela, and the conch are all brass instruments? The didgeridoo, an instrument created by Aboriginal Australians, is typically made from a hard wood like eucalyptus or even from thick bamboo. Literally a horn from a ram, the shofar is the ancient instrument of Jewish tradition. The South African vuvuzela can be crafted from plastic or aluminum and a conch is the shell from a sea snail or a mollusk. But the saxophone is made of brass and it’s a woodwind instrument. And some flutes are formed from brass too. So, what’s a brass instrument anyway?

Your task: investigate what makes an instrument suitable for the brass classification, then choose a brass instrument to research in more detail. You could choose one of the four instruments played by the Canadian Brass or choose a less typical member of the brass family. Part of your research can take place during the Canadian Brass concert as you listen and learn from the musicians.

ON THE MAP
CANADIAN BRASS ORIGINATED IN TORONTO, ONTARIO, CANADA. CURRENT MEMBERS LIVE IN CITIES ALL ACROSS NORTH AMERICA.

ABOUT THE ARTISTS
With their “unbeatable blend of virtuosity, spontaneity, and humor,”

Master's Diploma Thesis, Brno 2018, Mgr. Olga Macíčková

MASARYK UNIVERSITY, FACULTY OF ARTS, DEPARTMENT OF SLAVONIC STUDIES
Master's Diploma Thesis
Brno 2018, Mgr. Olga Macíčková

Masaryk University, Faculty of Arts, Department of Slavonic Studies
Ukrainian Language and Literature
Mgr. Olga Macíčková
Czech-Ukrainian Terminology of the Semantic Field of Aerophones (Чесько-українська термінологія семантичного поля Аерофони)
Master's Diploma Thesis
Thesis supervisor: PhDr. Petr Kalina, Ph.D.
2018

I declare that I wrote this diploma thesis independently, using the sources and literature listed.
Signature of the author

Acknowledgements
I would like to thank my thesis supervisor, PhDr. Petr Kalina, Ph.D., for his expert guidance of my diploma thesis, his valuable and helpful advice, and the time he devoted to it. I would also like to thank Ondra Musil and Marek Slaný for kindly lending me literature. I would also very much like to thank my beloved husband, Martin Macíček, for his absolute support while I was writing this thesis.

Contents
INTRODUCTION
1. THEORETICAL PART
1.1 UKRAINIAN INSTRUMENTAL MUSIC CULTURE
1.2 CZECH INSTRUMENTAL MUSIC CULTURE
1.3 SUMMARY

Shomrai Nursery Weekly Glimpse Sep 15 2017

Shomrai Nursery Weekly Glimpse
September 15, 2017 / 25 Elul 5777
Parshas Nitzavim / Vayeilech
Candlelighting: 6:58 PM, Havdalah: 7:55 PM

Back to School Night

High quality schools are built upon strong relationships. We invite our parents to school because they are co-constructors of the school community. The evening lets us share important information regarding their children's education and what a typical day looks like, and gives parents an opportunity to interact with and get to know their child's teachers. Thank you for joining us on Tuesday night!

“Similar to the assortment of threads in an intricately woven tapestry, the lives of young children also include numerous and varied threads or environments such as family, school, peers, and community. The ground on which we walk, schools we attend, and retail establishments in the community we frequent are all common denominators. The relationships we have with each other within these physical locations are the connecting denominators. It is the interconnectedness of these threads that create the tapestries of young children's lives.” Sandra Duncan, Jody Martin and Rebecca Kreth in their book, Rethinking the Classroom Landscape.

Daily thoughts, expressions, interests, communications, explorations, collaborations, adventures, research and discoveries, as experienced by students at Shomrai Nursery

2017 YISE SHOMRAI NURSERY

Kitat Prachim Explores Apples & Shofar

In the spirit of Rosh Hashana, the children are learning about apples. We read the Discovery book series that illustrated and taught us how apples originate from small seeds. These seeds sprout into trees that bear different varieties. The children observed and tasted three apple types: Golden Delicious, McIntosh, and Red Delicious.

COVID-19 Transmission Risks from Singing and Playing Wind Instruments – What We Know So

SYNOPSIS 11/18/2020

COVID-19 Transmission from Singing and Playing Wind Instruments – What We Know So Far

Introduction

Public Health Ontario (PHO) is actively monitoring, reviewing and assessing relevant information related to Coronavirus Disease 2019 (COVID-19). “What We Know So Far” documents provide a rapid review of the evidence related to a specific aspect or emerging issue related to COVID-19.

Updates to our Latest Version

In the latest version of COVID-19 Transmission from Singing and Playing Wind Instruments – What We Know So Far, we present results of an updated literature search and rapid review. The updated version provides additional evidence concerning potential COVID-19 transmission during singing and playing wind instruments, including the addition of 22 new articles. We identified 10 new studies on experimental transmission during singing or playing wind instruments; four additional articles reported observational studies on transmission during singing; and there were two reviews of transmission risks during performances. We identified six articles on transmission risks during performances from the grey literature. There have been no published reports on COVID-19 transmission from wind instruments. The findings from this updated rapid review do not change our previous assessment of COVID-19 transmission during singing and playing wind instruments.

Key Findings

Singing generates respiratory droplets and aerosols; however, the degree to which each of these particles contributes to COVID-19 transmission is unclear. The evidence supporting COVID-19 transmission during singing is limited to a small number of observational studies and experimental models. Thirteen of 1,548 (<1%) documented superspreading events have been associated with singing.