Rate-Constrained Coder Control and Comparison of Video Coding Standards

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Enabling Hardware Accelerated Video Decode on Intel® Atom™ Processor D2000 and N2000 Series Under Fedora 16

Enabling Hardware Accelerated Video Decode on Intel® Atom™ Processor D2000 and N2000 Series under Fedora 16 Application Note October 2012 Order Number: 509577-003US Legal Lines and Disclaimers INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. A “Mission Critical Application” is any application in which failure of the Intel Product could result, directly or indirectly, in personal injury or death. SHOULD YOU PURCHASE OR USE INTEL'S PRODUCTS FOR ANY SUCH MISSION CRITICAL APPLICATION, YOU SHALL INDEMNIFY AND HOLD INTEL AND ITS SUBSIDIARIES, SUBCONTRACTORS AND AFFILIATES, AND THE DIRECTORS, OFFICERS, AND EMPLOYEES OF EACH, HARMLESS AGAINST ALL CLAIMS COSTS, DAMAGES, AND EXPENSES AND REASONABLE ATTORNEYS' FEES ARISING OUT OF, DIRECTLY OR INDIRECTLY, ANY CLAIM OF PRODUCT LIABILITY, PERSONAL INJURY, OR DEATH ARISING IN ANY WAY OUT OF SUCH MISSION CRITICAL APPLICATION, WHETHER OR NOT INTEL OR ITS SUBCONTRACTOR WAS NEGLIGENT IN THE DESIGN, MANUFACTURE, OR WARNING OF THE INTEL PRODUCT OR ANY OF ITS PARTS. Intel may make changes to specifications and product descriptions at any time, without notice. Designers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined”.

ROS Robotics by Example Second Edition

ROS Robotics By Example Second Edition Learning to control wheeled, limbed, and flying robots using ROS Kinetic Kame Carol Fairchild Dr. Thomas L. Harman BIRMINGHAM - MUMBAI ROS Robotics By Example Second Edition Copyright © 2017 Packt Publishing All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the authors, nor Packt Publishing, and its dealers and distributors will be held liable for any damages caused or alleged to be caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information. First published: June 2016 Second edition: November 2017 Production reference: 1301117 Published by Packt Publishing Ltd. Livery Place 35 Livery Street Birmingham B3 2PB, UK. ISBN 978-1-78847-959-2 www.packtpub.com Credits: Authors: Carol Fairchild, Dr. Thomas L. Harman; Reviewer: Lentin Joseph; Acquisition Editor: Frank Pohlmann; Project Editor: Alish Firasta; Content Development Editor: Venugopal Commuri; Technical Editor: Bhagyashree Rai; Copy Editor: Safis Editing; Proofreader: Safis Editing; Indexer: Aishwarya Gangawane; Graphics: Kirk D'Penha; Production Coordinator: Nilesh Mohite. About the Authors Carol Fairchild is the owner and principal engineer of Fairchild Robotics, a robotics development and integration company.

Release Notes for Debian 11 (Bullseye), 64-Bit PC

Release Notes for Debian 11 (bullseye), 64-bit PC The Debian Documentation Project (https://www.debian.org/doc/) October 1, 2021 Release Notes for Debian 11 (bullseye), 64-bit PC This document is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License, version 2, as published by the Free Software Foundation. This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details. You should have received a copy of the GNU General Public License along with this program; if not, write to the Free Software Foundation, Inc., 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA. The license text can also be found at https://www.gnu.org/licenses/gpl-2.0.html and /usr/share/common-licenses/GPL-2 on Debian systems. Contents: 1 Introduction; 1.1 Reporting bugs on this document; 1.2 Contributing upgrade reports; 1.3 Sources for this document; 2 What’s new in Debian 11; 2.1 Supported architectures; 2.2 What’s new in the distribution?; 2.2.1 Desktops and well known packages; 2.2.2 Driverless scanning and printing; 2.2.2.1 CUPS and driverless printing; 2.2.2.2 SANE and driverless scanning; 2.2.3 New generic open command; 2.2.4 Control groups v2; 2.2.5 Persistent systemd journal

(12) United States Patent (10) Patent No.: US 8,654,842 B2 Ganesh Et Al

US008654842B2 (12) United States Patent (10) Patent No.: US 8,654,842 B2 Ganesh et al. (45) Date of Patent: Feb. 18, 2014 (54) ACCELERATED VIDEO ENCODING (75) Inventors: SNESN RWSRS onald J. Yunsil, Kirkland, (US); Gary J. Sullivan, Redmond, WA (US); Glenn F. Evans, Kirkland, WA (US); Shyam Sadhwani, Bellevue, WA (US); Stephen J. Estrop, Carnation, WA (US) (73) Assignee: Microsoft Corporation, Redmond, WA (US) (*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 1651 days. (21) Appl. No.: 11/673,423 (22) Filed: Feb. 9, 2007 (65) Prior Publication Data US 2007/0201562 A1 Aug. 30, 2007 Related U.S. Application Data (63) Continuation-in-part of application No. 11/276,336, filed on Feb. 24, 2006. References cited: 6,044,408 A 3/2000 Engstrom et al.; 6,101,276 A 8/2000 Adiletta et al.; 6,128,026 A 10/2000 Brothers, III; 6,252,905 B1 6/2001 Pokrinchak et al.; 6,434,196 B1* 8/2002 Sethuraman et al. 375/240.12; 6,721,359 B1 4/2004 Bist et al. (Continued) FOREIGN PATENT DOCUMENTS EP 1347650 9/2003; WO WO0207446 A2 1/2001; WO WO0219095 3/2002 OTHER PUBLICATIONS Rossi, “Multicore Signal Processing Platform with Heterogeneous Configurable Hardware Accelerators”, 2013, IEEE, p. 1-14.* (Continued) Primary Examiner — Taghi Arani Assistant Examiner — Gregory Lane (74) Attorney, Agent, or Firm — Carole A Boelitz; Raghu Chinagudabha; Micky Minhas (57) ABSTRACT A video encoding acceleration service to increase one or more of the speed and quality of video encoding is described.

PC Hardware Contents

PC Hardware Contents: 1 Computer hardware; 1.1 Von Neumann architecture; 1.2 Sales; 1.3 Different systems; 1.3.1 Personal computer; 1.3.2 Mainframe computer; 1.3.3 Departmental computing; 1.3.4 Supercomputer; 1.4 See also; 1.5 References; 1.6 External links; 2 Central processing unit; 2.1 History; 2.1.1 Transistor and integrated circuit CPUs; 2.1.2 Microprocessors; 2.2 Operation; 2.2.1 Fetch; 2.2.2 Decode; 2.2.3 Execute; 2.3 Design and implementation; 2.3.1 Control unit; 2.3.2 Arithmetic logic unit; 2.3.3 Integer range; 2.3.4 Clock rate; 2.3.5 Parallelism

HAIHAO XIANG ([email protected]) Hardware Acceleration Path Based on VA-API in Gstreamer

gfxmfx, gfxmsdk and MediaSDK Update HAIHAO XIANG ([email protected]) Hardware acceleration path based on VA-API in gstreamer

[Diagram labels: Media Application based on Gstreamer; GstMfx, GstMsdk, GstVaapi; MediaSDK (MSDK); Libva (vaapi); back-end drivers iHD, i965, other vaapi back-end drivers; Intel Gen Graphics, other HWs. Legend: MSDK path, non-MSDK path, MSDK & non-MSDK.]

VAAPI (Video Acceleration API): API specific for hardware accelerated video decoding, encoding and processing.
◦ 10+ years, libva is the implementation (https://github.com/intel/libva)
◦ Back-end drivers for Intel Gen graphics: i965, iHD
◦ i965 (https://github.com/intel/intel-vaapi-driver/) supports legacy platforms only.
◦ iHD (https://github.com/intel/media-driver) was open sourced in 2017 and supports the new Intel platforms. It provides more features than i965, especially in VPP and video encoding.

MSDK (MediaSDK): Provides APIs to access hardware accelerated video decode, encode and filtering on Intel platforms.
◦ Open sourced in 2017 (https://github.com/Intel-Media-SDK/MediaSDK)
◦ On Linux, it is based on libva (VAAPI)
◦ Works well with iHD only

GstMfx vs GstMsdk
◦ Two gstreamer plugins based on MediaSDK
◦ GstMfx is located at https://github.com/intel/gstreamer-media-SDK, whereas GstMsdk is a plugin in gst-plugins-bad
◦ Different licenses: GstMfx is LGPL 2.1+, GstMsdk is BSD3
◦ GstMfx and GstMsdk provide similar features, and the performance of GstMsdk is a little better than GstMfx in most cases.
◦ We will add more features, such as HEVC Main10, Main12 etc., and fix the performance gaps for those failure cases in GstMsdk.

| Feature | gstmfx | gstmsdk |
| --- | --- | --- |
| H264/AVC | decoder/encoder | decoder/encoder |
| H265/HEVC | decoder/encoder | decoder/encoder |
| VP8 | decoder | decoder/encoder |
| VP9 | decoder | decoder |
| JPEG | decoder/encoder | decoder/encoder |
| MPEG-2 | decoder | decoder/encoder |
| VC-1 | decoder | decoder |
| VPP | Yes | Yes |
| Sink | Yes | N (*) |

(*): gstmsdk works well with glimagesink although gstmsdk doesn’t provide a sink element.

MSDK Driver Download BIOSLINUXMCSDK

MSDK Driver Download BIOSLINUXMCSDK. SYS/BIOS and Linux Multicore Software Development Kits (MCSDK) for C66x, C647x, C645x Processors. BIOSLINUXMCSDK. Overview. NOTE: K2x, C665x and C667x devices are now actively maintained on the Processor-SDK release stream. See links above. Our Multicore Software Development Kits (MCSDK) provide highly-optimized bundles of foundational, platform-specific drivers to enable development on selected TI ARM and DSP devices. The MCSDK gives developers the ability to evaluate hardware and software capabilities of the evaluation platform and to rapidly develop applications. The MCSDK enables applications to use SYS/BIOS and/or Linux on one platform. Individual cores can be assigned to operate Linux applications as a control plane while other cores are simultaneously assigned high-performance signal processing operations. This heterogeneous configuration provides flexibility for software developers to implement full solutions on TI’s multicore devices including DSP and ARM processors. Features. The MCSDK provides foundational software for ARM+DSP devices. It encapsulates a collection of software elements and tools intended to enable customer application development. The foundational components include: SYS/BIOS real-time embedded operating system on DSP cores; Linux high-level operating system running on ARM (SMP mode for multicore ARM); DSP chip support libraries, DSP/ARM drivers, and basic platform utilities; interprocessor communication for communication across cores and devices; SoC resource management; optimized application-specific (small cell, transport) and application non-specific algorithm libraries; trace debug and instrumentation; bootloaders and boot utilities, power-on self test; demonstrations and examples; ARM software libraries available in Linux devkit or via Arago/Yocto; latest toolchain (ARM Linaro, DSP TI CodeGen); host tools, integrated development environment.

Tmam1de1.Pdf (3076Mb)

NOTICE. Access to this thesis is subject to acceptance of the following conditions of use: Dissemination of this thesis through the TDX service (www.tesisenxarxa.net) and the TDR service (www.tesisenred.net) has been authorized by the holders of the intellectual property rights solely for private use within research and teaching activities. Reproduction for profit is not authorized, nor is dissemination or making the thesis available from a site other than the TDX or TDR service. Presenting its content in a window or frame external to TDX or TDR (framing) is not authorized. This reservation of rights covers both the presentation summary of the thesis and its contents. When using or citing parts of the thesis, the name of the author must be indicated.

University of Vaasa

UNIVERSITY OF VAASA FACULTY OF TECHNOLOGY AUTOMATION TECHNOLOGY Kari Koivuporras ENHANCING A NEUROSURGICAL IMAGING SYSTEM WITH A PC-BASED VIDEO PROCESSING SOLUTION Master’s thesis for the degree of Master of Science in Technology submitted for inspection, Vaasa, 15 March 2011. Supervisor Jarmo Alander Instructor Petri Välisuo PREFACE This thesis is a contribution to CLIN-NIRce, a subproject of the FIELD NIRce project, concentrating on clinical applications. The research in FIELD NIRce is focused on developing portable, self-contained solutions for near-infrared spectroscopy in the field, as opposed to a laboratory environment. The research in FIELD NIRce is funded by the European Union (EU) via the Botnia-Atlantica programme and several public funders in Finland and Sweden. The purpose of this thesis is to explore and implement new features for a particular medical imaging system, built upon a neurosurgical operating microscope at Töölö Hospital, Helsinki. The collaborative efforts on this topic were initiated in August 2008 by the automation research group working at the Department of Electrical Engineering and Energy Technology in University of Vaasa and a group of neurosurgeons at the Department of Neurosurgery of Helsinki University Central Hospital (HUCS). The work included in this thesis was done between April 2010 and March 2011. The majority of the work was done at the University of Vaasa and at home in Vaasa and Raasepori. The project also included several meetings at Töölö Hospital for gathering feedback from the surgeons about the implemented features and to get ideas for new ones. This work was funded by the FIELD NIRce project.

Enabling Hardware Accelerated Playback for Intel® Atom™ N2000/D2000 Series Processors V0.03

Enabling Hardware Accelerated Playback for Intel® Atom™ N2000/D2000 Series Processors v0.03 Enabling Hardware Accelerated Playback for Intel® Atom™ Processor N2000/D2000 Series Case Study Using MPlayer on Fedora 16 June 2012 Intel Corporation, Proprietary and Confidential Information Page 1 of 18 Document Revision History: revision 0.01 (6/13/12), Initial Version; revision 0.03 (6/14/12), General cleanup after testing instructions. Intel and Intel Atom are trademarks of Intel Corporation in the U.S. and/or other countries. * Other names and brands may be claimed as the property of others. Copyright 2012, Intel Corporation. All rights reserved. Table of Contents: 1 Executive Summary; 2 Overview of Hardware Accelerated Decode; 3 Build and Installation Overview; 4 Step-by-Step Instructions

Enabling Hardware Accelerated Playback for Intel(R) Atom(TM)

White Paper Ishu Verma, Software Technical Marketing Engineer, Intel Corporation Enabling Hardware Accelerated Playback for Intel® Atom™/Intel® US15W Platform and IEGD Case Study Using MPlayer on Moblin March, 2010 445351 Executive Summary Media playback on the Intel® Atom™ platform is optimal when the video decoding is handled by the video engine instead of the CPU. Using a video engine can reduce the CPU workload by more than 50%. It requires the application, such as a media player, to use VA API to communicate with Intel® Embedded Graphics Driver (IEGD). To use the video engine, the media player application, MPlayer, for example, needs to use the appropriate API to communicate with the video driver that controls this video engine. This case study provides instructions on how to enable MPlayer to interface with Intel Embedded Graphics Driver (IEGD) video driver through the VA API. This case study was done on a platform with the following characteristics: Intel Atom with US15W, Moblin-IVI 2.1 or Ubuntu 8, IEGD 10.2. However, the findings are applicable to other Intel Atom platforms using IEGD 10.x. The Intel® Embedded Design Center provides qualified developers with web-based access to technical resources. Access Intel Confidential design materials, step-by-step guidance, application reference solutions, training, Intel’s tool loaner program, and connect with an e-help desk and the embedded community.

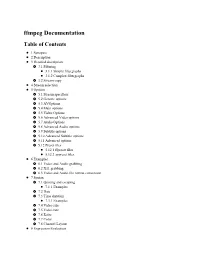

Ffmpeg Documentation Table of Contents

ffmpeg Documentation Table of Contents 1 Synopsis 2 Description 3 Detailed description 3.1 Filtering 3.1.1 Simple filtergraphs 3.1.2 Complex filtergraphs 3.2 Stream copy 4 Stream selection 5 Options 5.1 Stream specifiers 5.2 Generic options 5.3 AVOptions 5.4 Main options 5.5 Video Options 5.6 Advanced Video options 5.7 Audio Options 5.8 Advanced Audio options 5.9 Subtitle options 5.10 Advanced Subtitle options 5.11 Advanced options 5.12 Preset files 5.12.1 ffpreset files 5.12.2 avpreset files 6 Examples 6.1 Video and Audio grabbing 6.2 X11 grabbing 6.3 Video and Audio file format conversion 7 Syntax 7.1 Quoting and escaping 7.1.1 Examples 7.2 Date 7.3 Time duration 7.3.1 Examples 7.4 Video size 7.5 Video rate 7.6 Ratio 7.7 Color 7.8 Channel Layout 8 Expression Evaluation 9 Codec Options 10 Decoders 11 Video Decoders 11.1 rawvideo 11.1.1 Options 12 Audio Decoders 12.1 ac3 12.1.1 AC-3 Decoder Options 12.2 flac 12.2.1 FLAC Decoder options 12.3 ffwavesynth 12.4 libcelt 12.5 libgsm 12.6 libilbc 12.6.1 Options 12.7 libopencore-amrnb 12.8 libopencore-amrwb 12.9 libopus 13 Subtitles Decoders 13.1 dvbsub 13.1.1 Options 13.2 dvdsub 13.2.1 Options 13.3 libzvbi-teletext 13.3.1 Options 14 Encoders 15 Audio Encoders 15.1 aac 15.1.1 Options 15.2 ac3 and ac3_fixed 15.2.1 AC-3 Metadata 15.2.1.1 Metadata Control Options 15.2.1.2 Downmix Levels 15.2.1.3 Audio Production Information 15.2.1.4 Other Metadata Options 15.2.2 Extended Bitstream Information 15.2.2.1 Extended Bitstream Information - Part 1 15.2.2.2 Extended Bitstream Information - Part 2 15.2.3