One System for Two Tasks: A Commonsense Algorithm Memory That Solves Problems and Comprehends Language

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

Grazia Di Michele, “Il Tempio” Da Oggi On-Line Il Video,Dj E Produttori Per Un Evento a Pozzuoli: Sabato La

Collezioni da ascoltare: la Collezione MAXXI Arte raccontata da cinque voci straordinarie. A partire da oggi, domenica 15 novembre prende il via la seconda edizione del progetto Collezione da ascoltare per un MAXXI ancora più accessibile, inclusivo, accogliente. Il progetto consiste in cinque audiodescrizioni di altrettante opere tra le più significative della Collezione MAXXI Arte, raccontate da quattro attori e una scrittrice che hanno prestato le loro voci. Per 5 settimane ogni domenica alle 12.30, il palinsesto online del MAXXI #nonfermiamoleidee si arricchisce dunque, di un nuovo contenuto. Sonia Bergamasco, Luca Zingaretti, Isabella Ragonese, Luigi Lo Cascio, Michela Murgia: cinque voci straordinarie per cinque tra le opere più iconiche della Collezione MAXXI: e così sia… di Bruna Esposito, Plegaria Muda di Doris Salcedo, The Emancipation Approximation di Kara Walker, Inventory. The Fountains of Za’atari di Margherita Moscardini (esposta nella mostra REAL_ITALY) e Senza titolo di Maria Lai. Le audiodescrizioni pensate per e con le persone cieche, sono anche uno strumento per tutti, per approfondire contenuti su artisti e opere, cogliendone la “fisicità” e percependole anche con gli occhi chiusi. I testi delle 5 letture redatti da Sofia Bilotta, responsabile dell’Ufficio Public Engagement del MAXXI, in collaborazione con Rosella Frittelli e Luciano Pulerà, partecipanti non vedenti ai programmi di P.E. del MAXXI – ricostruiscono la forma e le tecniche delle opere ma anche le sensazioni provate nell’esplorarle tattilmente. Bruna Esposito, E Cosi Sia…, MAXXI, © Luis do Rosario Oggi è stato raccontata l’opera e così sia… un grande mandala di Bruna Esposito realizzato con semi e legumi dall’artista insieme ai suoi assistenti, con un paziente lavoro durato tre mesi. -

''What Lips These Lips Have Kissed'': Refiguring the Politics of Queer Public Kissing

Communication and Critical/Cultural Studies Vol. 3, No. 1, March 2006, pp. 1Á/26 ‘‘What Lips These Lips Have Kissed’’: Refiguring the Politics of Queer Public Kissing Charles E. Morris III & John M. Sloop In this essay, we argue that man-on-man kissing, and its representations, have been insufficiently mobilized within apolitical, incremental, and assimilationist pro-gay logics of visibility. In response, we call for a perspective that understands man-on-man kissing as a political imperative and kairotic. After a critical analysis of man-on-man kissing’s relation to such politics, we discuss how it can be utilized as a juggernaut in a broader project of queer world making, and investigate ideological, political, and economic barriers to the creation of this queer kissing ‘‘visual mass.’’ We conclude with relevant implications regarding same-sex kissing and the politics of visible pleasure. Keywords: Same-Sex Kissing; Queer Politics; Public Sex; Gay Representation In general, one may pronounce kissing dangerous. A spark of fire has often been struck out of the collision of lips that has blown up the whole magazine of 1 virtue.*/Anonymous, 1803 Kissing, in certain figurations, has lost none of its hot promise since our epigraph was penned two centuries ago. Its ongoing transformative combustion may be witnessed in two extraordinarily divergent perspectives on its cultural representation and political implications. In 2001, queer filmmaker Bruce LaBruce offered in Toronto’s Eye Weekly a noteworthy rave of the sophomoric buddy film Dude, Where’s My Car? One scene in particular inspired LaBruce, in which we find our stoned protagonists Jesse (Ashton Kutcher) and Chester (Seann William Scott) idling at a stoplight next to superhunk Fabio and his equally alluring female passenger. -

Indice Pag. 4 Agenzie Stampa (137) 11 Quotidiani (409)

Indice Pag. 4 Agenzie stampa (137) 11 Quotidiani (409) 29 Emittenti radiofoniche (876) 68 Siti d’informazione (1.124) 117 Emittenti televisive (668) 146 Periodici (1.774) 3 Come utilizzare l’elenco Gran parte dei siti d’informazione e dei periodici esplicita il proprio tema nel titolo, come la Gazzetta dello sport o il Corriere del Trentino. Quindi conviene selezionare le testate soggettivamente più interessanti e farne una query con un semplice copia-incolla, per arrivare al sito e alle e-mail aggiornate. Una mailing- list o un comunque archivio di 20-30 nominativi può essere sufficiente sia per giornalisti che per addetti stampa. Dove possibile, inviate il testo al redattore specifico e non genericamente alla redazione. Se potete scegliere, preferite quei siti che hanno un form di comunicazione con l’esterno, rispetto quelli che hanno solo un elenco di email della redazione. Durante la lavorazione di questo archivio, alcune testate sono diventate in operative ed altre sono nate. Non c’è da sorprendersi: così è il web. 4 AGENZIA STAMPA ADISTA ADNKRONOS ADNKRONOS - REDAZIONE DI BOLOGNA ADNKRONOS - REDAZIONE DI MILANO ADNKRONOS SALUTE ADV EXPRESS AGA (AGENZIA GIORNALI ASSOCIATI) AGC (AGENZIA GIORNALISTICA CONI) AGENCE FRANCE PRESSE AGENPARL AGENZIA A.GI.PA. PRESS AGENZIA ABA NEWS AGENZIA ADN KRONOS AGENZIA ADNKRONOS AGENZIA AGENFAX ALESSANDRIA AGENZIA AGI AGENZIA AGI AGENZIA AGIPRESS 5 AGENZIA AGO PRESS (AGO AGENZIA GIORN. ON-LINE) AGENZIA AGR AGENZIA AGR RCS AGENZIA AGSA AGENZIA AGUS AGENZIA AIR PRESS AGENZIA AIS NEWS AGENZIA AKROPOLIS AGENZIA AMI AGENZIA ANSA AGENZIA ASA PRESS AGENZIA ASCA TOSCANA AGENZIA ASTERISCO INFORMAZIONI AGENZIA ASTERISCO S.R.L. -

KISS 100, KISS 101 and KISS 105 Request to Change Formats to Share All Programming (Consultation on Proposed Level of National DAB Coverage)

KISS 100, KISS 101 and KISS 105 Request to change Formats to share all programming (consultation on proposed level of national DAB coverage) Consultation Publication date: 01 November 2010 Closing date for responses: 26 November 2010 Request to change Formats – Kiss 100, Kiss 101 and Kiss 105 Contents Section Page 1 Executive summary 2 2 Details of the request 3 Annex Page 1 Responding to this consultation 5 2 Ofcom’s consultation principles 7 3 Consultation response cover sheet 8 4 Consultation question 10 5 Request to change the Formats of Kiss 100, Kiss 101 and Kiss 105 11 1 Request to change Formats – Kiss 100, Kiss 101 and Kiss 105 Section 1 1 Executive summary 1.1 Ofcom has received a Format change request from Bauer Radio Ltd in relation to its three FM local commercial radio services which comprise the Kiss network. These are: • Kiss 100 – Greater London • Kiss 101 – Severn Estuary • Kiss 105 – East of England 1.2 Bauer’s proposal is for all three stations to be able to share all programming, and therefore to remove the requirements in each Format that a specified amount of programming is made by each station in the area it serves. As a result, a new ‘national’ version of Kiss would be transmitted in each analogue licence area which would also be available on local DAB multiplexes across the UK. 1.3 This proposal is consistent with Ofcom’s policy of allowing regional analogue stations to share programming, as set out in our localness guidelines1. This policy, designed to encourage the growth of UK-wide DAB services, allows for the owners of specified local FM stations (those which provide regional coverage) to request the removal from their Formats of obligations relating to local material and locally-made programming in return for providing their service across the UK on DAB. -

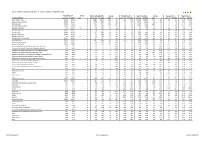

Hallett Arendt Rajar Topline Results - Wave 3 2019/Last Published Data

HALLETT ARENDT RAJAR TOPLINE RESULTS - WAVE 3 2019/LAST PUBLISHED DATA Population 15+ Change Weekly Reach 000's Change Weekly Reach % Total Hours 000's Change Average Hours Market Share STATION/GROUP Last Pub W3 2019 000's % Last Pub W3 2019 000's % Last Pub W3 2019 Last Pub W3 2019 000's % Last Pub W3 2019 Last Pub W3 2019 Bauer Radio - Total 55032 55032 0 0% 18083 18371 288 2% 33% 33% 156216 158995 2779 2% 8.6 8.7 15.3% 15.9% Absolute Radio Network 55032 55032 0 0% 4743 4921 178 4% 9% 9% 35474 35522 48 0% 7.5 7.2 3.5% 3.6% Absolute Radio 55032 55032 0 0% 2151 2447 296 14% 4% 4% 16402 17626 1224 7% 7.6 7.2 1.6% 1.8% Absolute Radio (London) 12260 12260 0 0% 729 821 92 13% 6% 7% 4279 4370 91 2% 5.9 5.3 2.1% 2.2% Absolute Radio 60s n/p 55032 n/a n/a n/p 125 n/a n/a n/p *% n/p 298 n/a n/a n/p 2.4 n/p *% Absolute Radio 70s 55032 55032 0 0% 206 208 2 1% *% *% 699 712 13 2% 3.4 3.4 0.1% 0.1% Absolute 80s 55032 55032 0 0% 1779 1824 45 3% 3% 3% 9294 9435 141 2% 5.2 5.2 0.9% 1.0% Absolute Radio 90s 55032 55032 0 0% 907 856 -51 -6% 2% 2% 4008 3661 -347 -9% 4.4 4.3 0.4% 0.4% Absolute Radio 00s n/p 55032 n/a n/a n/p 209 n/a n/a n/p *% n/p 540 n/a n/a n/p 2.6 n/p 0.1% Absolute Radio Classic Rock 55032 55032 0 0% 741 721 -20 -3% 1% 1% 3438 3703 265 8% 4.6 5.1 0.3% 0.4% Hits Radio Brand 55032 55032 0 0% 6491 6684 193 3% 12% 12% 53184 54489 1305 2% 8.2 8.2 5.2% 5.5% Greatest Hits Network 55032 55032 0 0% 1103 1209 106 10% 2% 2% 8070 8435 365 5% 7.3 7.0 0.8% 0.8% Greatest Hits Radio 55032 55032 0 0% 715 818 103 14% 1% 1% 5281 5870 589 11% 7.4 7.2 0.5% -

Hallett Arendt Rajar Topline Results - Wave 1 2020/Last Published Data

HALLETT ARENDT RAJAR TOPLINE RESULTS - WAVE 1 2020/LAST PUBLISHED DATA Population 15+ Change Weekly Reach 000's Change Weekly Reach % Total Hours 000's Change Average Hours Market Share STATION/GROUP Last Pub W1 2020 000's % Last Pub W1 2020 000's % Last Pub W1 2020 Last Pub W1 2020 000's % Last Pub W1 2020 Last Pub W1 2020 Bauer Radio - Total 55032 55032 0 0% 18160 17986 -174 -1% 33% 33% 155537 154249 -1288 -1% 8.6 8.6 15.9% 15.7% Absolute Radio Network 55032 55032 0 0% 4908 4716 -192 -4% 9% 9% 34837 33647 -1190 -3% 7.1 7.1 3.6% 3.4% Absolute Radio 55032 55032 0 0% 2309 2416 107 5% 4% 4% 16739 18365 1626 10% 7.3 7.6 1.7% 1.9% Absolute Radio (London) 12260 12260 0 0% 715 743 28 4% 6% 6% 5344 5586 242 5% 7.5 7.5 2.7% 2.8% Absolute Radio 60s 55032 55032 0 0% 136 119 -17 -13% *% *% 359 345 -14 -4% 2.6 2.9 *% *% Absolute Radio 70s 55032 55032 0 0% 212 230 18 8% *% *% 804 867 63 8% 3.8 3.8 0.1% 0.1% Absolute 80s 55032 55032 0 0% 1420 1459 39 3% 3% 3% 7020 7088 68 1% 4.9 4.9 0.7% 0.7% Absolute Radio 90s 55032 55032 0 0% 851 837 -14 -2% 2% 2% 3518 3593 75 2% 4.1 4.3 0.4% 0.4% Absolute Radio 00s 55032 55032 0 0% 217 186 -31 -14% *% *% 584 540 -44 -8% 2.7 2.9 0.1% 0.1% Absolute Radio Classic Rock 55032 55032 0 0% 740 813 73 10% 1% 1% 4028 4209 181 4% 5.4 5.2 0.4% 0.4% Hits Radio Brand 55032 55032 0 0% 6657 6619 -38 -1% 12% 12% 52607 52863 256 0% 7.9 8.0 5.4% 5.4% Greatest Hits Network 55032 55032 0 0% 1264 1295 31 2% 2% 2% 9347 10538 1191 13% 7.4 8.1 1.0% 1.1% Greatest Hits Radio 55032 55032 0 0% 845 892 47 6% 2% 2% 6449 7146 697 11% 7.6 8.0 0.7% -

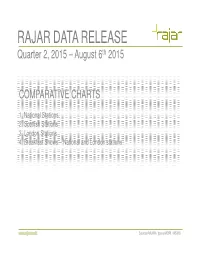

Comparative Data Chartspdf

RAJAR DATA RELEASE Quarter 2, 2015 – August 6 th 2015 COMPARATIVE CHARTS 1. National Stations 2. Scottish Stations 3. London Stations 4. Breakfast Shows – National and London stations Source RAJAR / Ipsos MORI / RSMB RAJAR DATA RELEASE Quarter 2, 2015 – August 6 th 2015 NATIONAL STATIONS SAMPLE SIZE: TERMS WEEKLY The number in thousands of the UK/area adult population w ho listen to a station for at least 5 minutes in the Survey period - Q2 2015 REACH: course of an average w eek. SHARE OF Code Q (Quarter): 22,340 Adults 15+ LISTENING: The percentage of total listening time accounted for by a station in the area (TSA) in an average w eek. TOTAL Code H (Half year): 46,216 Adults 15+ HOURS: The overall number of hours of adult listening to a station in the UK/area in an average w eek. TOTAL HOURS (in thousands): ALL BBC Q2 14 546723 Q1 15 553852 Q2 15 554759 TOTAL HOURS (in thousands): ALL COMMERCIAL Q2 14 443326 Q1 15 435496 Q2 15 464053 STATIONS SURVEY REACH REACH REACH % CHANGE % CHANGE SHARE SHARE SHARE PERIOD '000 '000 '000 REACH Y/Y REACH Q/Q % % % Q2 14 Q1 15 Q2 15 Q2 15 vs. Q2 14 Q2 15 vs. Q1 15 Q2 14 Q1 15 Q2 15 ALL RADIO Q 48052 47799 48184 0.3% 0.8% 100.0 100.0 100.0 ALL BBC Q 35227 34872 35016 -0.6% 0.4% 53.3 54.4 53.0 15-44 Q 15258 14583 14687 -3.7% 0.7% 39.6 40.5 38.4 45+ Q 19970 20290 20329 1.8% 0.2% 62.4 63.2 62.4 ALL BBC NETWORK RADIO Q 32255 31671 31926 -1.0% 0.8% 45.5 46.9 45.5 BBC RADIO 1 Q 10795 9699 10436 -3.3% 7.6% 6.8 6.4 6.4 BBC RADIO 2 Q 15496 15087 15141 -2.3% 0.4% 17.7 18.1 17.6 BBC RADIO 3 Q 1884 2084 1894 0.5% -9.1% 1.0 1.2 1.3 BBC RADIO 4 (INCLUDING 4 EXTRA) Q 10786 11265 10965 1.7% -2.7% 12.5 14.0 12.8 BBC RADIO 4 Q 10528 10886 10574 0.4% -2.9% 11.6 12.8 11.7 BBC RADIO 4 EXTRA Q 1570 2172 1954 24.5% -10.0% 0.9 1.2 1.2 BBC RADIO 5 LIVE (INC. -

Curriculum Vitae

Stefano Goffredo CV CURRICULUM VITAE Updated January 11, 2021 Stefano Goffredo Ph.D. Coral Ecology and Biology & Citizen Science Laboratories Marine Science Group Department of Biological, Geological, and Environmental Sciences Alma Mater Studiorum – University of Bologna Via F. Selmi 3 I-40126 Bologna, Italy European Union Tel: +39 051 2094244; mobile +39 339 5991481 Email: [email protected] Web: www.marinesciencegroup.org --- Home: via di mezzo ponente 9/2, Calderara di Reno, 40012 (BO), Italy, European Union. Date of birth: January 27, 1969 Place of birth: Bologna, Italy Civil Service: 1996-1997 Marital Status: Married 1 Stefano Goffredo CV 1. EDUCATION Master and Ph.D. Year University/Institute Department Degree 1995 Alma Mater Studiorum - University of Department of Evolutionary and Experimental Master Bologna Biology (M. Sc.) 2000 Alma Mater Studiorum - University of Department of Evolutionary and Experimental Research Bologna Biology Doctorate (Ph. D.) Title of Master Thesis (M. Sc. Thesis): Growth of Ctenactis echinata (Pallas, 1766) and Fungia fungites (Linnaeus, 1758) (Scleractinia, Fungiidae) on a coral reef at Sharm el Sheikh, Red Sea, South Sinai, Egypt. Supervisor: Prof. Francesco Zaccanti Title of Research Doctorate Thesis (Ph. D. Thesis): Population dynamics and reproductive biology of the solitary coral Balanophyllia europaea (Anthozoa, Scleractinia) in the northern Tyrrhenian Sea. Supervisor: Prof. Francesco Zaccanti Post Doctorate Year University/Institute Department Research field Fellowship Funds -

QUARTERLY SUMMARY of RADIO LISTENING Survey Period Ending 29Th March 2009

QUARTERLY SUMMARY OF RADIO LISTENING Survey Period Ending 29th March 2009 PART 1 - UNITED KINGDOM (INCLUDING CHANNEL ISLANDS AND ISLE OF MAN) Adults aged 15 and over: population 50,735,000 Survey Weekly Reach Average Hours Total Hours Share in Period '000 % per head per listener '000 TSA % ALL RADIO Q 45762 90 20.2 22.4 1024910 100.0 ALL BBC Q 33809 67 11.4 17.1 577172 56.3 ALL BBC 15-44 Q 15732 62 8.2 13.1 206443 45.9 ALL BBC 45+ Q 18077 71 14.6 20.5 370729 64.5 All BBC Network Radio¹ Q 30261 60 9.5 15.9 481292 47.0 BBC Local/Regional Q 9589 19 1.9 10.0 95880 9.4 ALL COMMERCIAL Q 31498 62 8.4 13.5 425902 41.6 ALL COMMERCIAL 15-44 Q 17697 70 9.2 13.1 232289 51.6 ALL COMMERCIAL 45+ Q 13800 54 7.6 14.0 193613 33.7 All National Commercial¹ Q 13315 26 2.1 7.9 104827 10.2 All Local Commercial Q 25608 50 6.3 12.5 321075 31.3 Other Listening Q 3406 7 0.4 6.4 21836 2.1 Source: RAJAR/Ipsos MORI/RSMB ¹ See note on back cover. For survey periods and other definitions please see back cover. Embargoed until 7.00 am Enquires to: RAJAR, Paramount House, 162-170 Wardour Street, London W1F 8ZX 7th May 2009 Telephone: 020 7292 9040 Facsimile: 020 7292 9041 e mail: [email protected] Internet: www.rajar.co.uk ©Rajar 2009. -

Annual Report 2020-2021 About This Document

Annual Report 2020-2021 About this document This report summarises the activities of the Audio Content Fund from April 2020 – March 2021. It breaks down the bids received, and details the successful projects and their intended outcomes. This edition is labelled an Interim Report since, at the time of writing, several of the later projects have not yet entered production or been broadcast. It will be superseded by a Final Report once the final project has been broadcast. Author: Sam Bailey, Managing Director, Audio Content Fund Date: 15 June 2021 Contents 4 Executive Summary 5 Sam Bailey, Managing Director of the ACF 5 Helen Boaden, Chair of the Independent Funding Panel 6 Background to the Audio Content Fund 6 Summary of Payments 7 Summary of Successful Bids 8 Companies with Successful Bids 11 Bidding Guidelines 11 Independent Funding Panel 12 Assessment Process 12 Evaluation Criteria 14 Details of Funded Projects 16 Funded Projects 76 Projects still to be completed 88 References 89 Closing Statement Executive Summary 1. The Audio Content Fund (ACF) exists 8. 74% of the funded projects were from to finance the creation of original, high suppliers based outside of London. quality, crafted, public-service material for Projects were funded for broadcast on broadcast on commercial and community local stations in all four nations of the UK, radio. It is part of a pilot Contestable Fund, with content produced in English, Gaelic, funded by the UK Government. Irish and Ulster Scots. 2. The industry trade bodies AudioUK and 9. All bids are assessed for the diversity of Radiocentre set up the ACF in 2018, and their representation, and 1 in 5 of the it distributed grant funding totalling funded projects were primarily focused £655,898 in financial year 2019-2020. -

We Are the Fair

Hello, We are The Fair Event experts for the best possible experience. Welcome to The Fair, an award-winning Event Production agency based in East London. The Fair is Big Cat Group’s dedicated events division; we are large-scale event delivery specialists focused on three things: safety, quality and budget. Since 2000, we have delivered world class large-scale events and festivals through our expert teams specialising in Event Production, Licensing & Health and Safety. Company We Keep Perfect Production Partner 92 festivals under our belts this season alone... We know how much work goes into promoting and growing a festival, from branding to ticketing partnerships through to your comms planning and much much more, it’s a full time job. We also know our strengths and support our clients by planning and delivering your festival seamlessly, leaving you to focus on what you do best. We work in partnership with our clients and are invested in ensuring every detail is right from stakeholder management and liaising with the Relevant Authorities through to site planning and technical production. The relationships we have built in the industry over the past 18 years enable us to get your festival live without a hitch. “Having had several teething issues in our first year we entrusted The Fair to handle all production and licensing aspects for GALA in 2017. Despite some typically rough British weather, the festival ran so smoothly it’s given us the confidence to grow in size next summer. The Fair have played a big role in our success as a festival and without them we’re sure GALA’s future would not look as bright.” Giles Napier, Director – This Is Gala Perfect Production Partner WATF’s work spans several areas including specialist event Health and Safety consultancy, Licensing and Production for festivals and large-scale, complex events internationally involving huge stakeholder groups within both the corporate and live sectors. -

French Fury Over Stiffer Quotas'

advertisement KELLY WATCH THE STARS the new single MAY 9, 1998 out now Music Volume 15, Issue 19 £3.95 DM11 FFR35 US$7 DFL11.50 SOURCE Media.we to ll it4co M&M chart toppers this week French fury over stiffer quotas' of 100 Singles by Renzi Bouton Trautmann's April to discuss the sit- Catherine reportedly INE DION commission uation-is Trautmann proposing to effectively art Will Go On PARIS -The prospect of a strengthen- believessta- ing of domestic French -language radiotions are not put a ceiling on the bia) quota laws-including a limit on rota- respecting numberofrotations tions-has been greeted with dismay bythe "new tal- deployed by individual France's radio community. ent" part of stations by not count- A commission of professionals, setthe quotas- ing plays of a record up earlier this year by minister of cul-to meet the beyond a certain num- ture and communication Catherineregulations,stations haveusually ber of rotations as part of the station's Trautmann to advise and make pro-increased rotations on a small number offrancophone content. It is due to meet posals to the ministry in the musicFrench -language titles. As a result, the the CSA again before the end of June. field, is poised to recommend an exten-commission suggests, the quotas have "Thisistotalnonsense,"says sion of the existing quota regulations. not succeeded in expanding the variety CHRIUrbanSkyrockprogramming The quota law requires stations to of artists played on French radio. manager Laurent Bouneau. "To estab- play 40 percent French -language music; The commission-which met Frenchlish new talent, you need heavy rota - 50 percent of this must be from new acts.