The $100 Genome: Implications for the Dod 5B

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Attitudes Towards Personal Genomics Among Older Swiss Adults: an Exploratory Study

Research Collection Journal Article Attitudes towards personal genomics among older Swiss adults: An exploratory study Author(s): Mählmann, Laura; Röcke, Christina; Brand, Angela; Hafen, Ernst; Vayena, Effy Publication Date: 2016 Permanent Link: https://doi.org/10.3929/ethz-b-000114241 Originally published in: Applied and Translational Genomics 8, http://doi.org/10.1016/j.atg.2016.01.009 Rights / License: Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International This page was generated automatically upon download from the ETH Zurich Research Collection. For more information please consult the Terms of use. ETH Library Applied & Translational Genomics 8 (2016) 9–15 Contents lists available at ScienceDirect Applied & Translational Genomics journal homepage: www.elsevier.com/locate/atg Attitudes towards personal genomics among older Swiss adults: An exploratory study Laura Mählmann a,b, Christina Röcke c, Angela Brand b, Ernst Hafen a, Effy Vayena d,⁎ a Institute of Molecular Systems Biology, ETH Zurich, Auguste-Piccard-Hof 1, 8093 Zürich, Switzerland b Institute for Public Health Genomics, Faculty of Health, Medicine and Life Sciences, Maastricht University, PO Box 616, 6200 MD Maastricht, The Netherlands c University Research Priority Program “Dynamics of Healthy Aging”, University of Zurich, Andreasstrasse 15/Box 2, 8050 Zurich, Switzerland d Health Ethics and Policy Lab, Institute of Epidemiology, Biostatistics and Prevention, University of Zurich, Hirschengraben 84, 8001 Zurich, Switzerland Keywords: Personal genomics; Attitudes of older adults Abstract Objectives: To explore attitudes of Swiss older adults towards personal genomics (PG). Methods: Using an anonymized voluntary paper-and-pencil survey, data were collected from 151 men and women aged 60–89 years attending the Seniorenuniversität Zurich, Switzerland (Seniors' University). -

CBIT Annual Report 2012

Centre for Bio-Inspired Technology Annual Report 2012 Centre for Bio-Inspired Technology Institute of Biomedical Engineering Imperial College London South Kensington London SW7 2AZ T +44 (0)20 7594 0701 F +44 (0)20 7594 0704 [email protected] www.imperial.ac.uk/bioinspiredtechnology Contents: Director’s foreword; Founding Sponsor’s foreword; People; News; Honours and awards; People and events; News from the Centre’s ‘spin-out’ companies; Research funding; Research themes; Metabolic technology; Cardiovascular technology; Genetic technology; Neural interfaces & neuroprosthetics; Research facilities; Medical science in the 21st century: a young engineer’s view; Clinical assessment of the bio-inspired artificial pancreas in subjects with type 1 diabetes; Development of a genetic analysis platform to create innovative and interactive databases combining genotypic and phenotypic information for genetic detection devices; Chip gallery; Who we are; Academic staff profiles; Administrative staff profiles; Staff research reports; Research Students’ and Assistants’ reports. Director’s foreword This Research Report for 2012 describes the research activities of The Centre for Bio-Inspired Technology as it completes its third year of research. It is a privilege to be Director of the Centre and to work with my colleagues at Imperial and around the world on a range of innovative projects.

research institute, our collaborators include engineers, scientists, medical researchers and clinicians. We include here some brief reports of these colleagues and how their current research has developed from their work in the Centre. One of the prime aims of the Institute of Biomedical Engineering, from which the Centre developed, was to transfer technology from the laboratory into a -

In Human-Computer Interaction Published, Sold and Distributed By: Now Publishers Inc

Full text available at: http://dx.doi.org/10.1561/1100000067 Communicating Personal Genomic Information to Non-experts: A New Frontier for Human-Computer Interaction Orit Shaer Wellesley College, Wellesley, MA, USA [email protected] Oded Nov New York University, USA [email protected] Lauren Westendorf Wellesley College, Wellesley, MA, USA [email protected] Madeleine Ball Open Humans Foundation, Boston, MA, USA [email protected] Boston — Delft Foundations and Trends® in Human-Computer Interaction Published, sold and distributed by: now Publishers Inc. PO Box 1024 Hanover, MA 02339 United States Tel. +1-781-985-4510 www.nowpublishers.com [email protected] Outside North America: now Publishers Inc. PO Box 179 2600 AD Delft The Netherlands Tel. +31-6-51115274 The preferred citation for this publication is O. Shaer, O. Nov, L. Westendorf, and M. Ball. Communicating Personal Genomic Information to Non-experts: A New Frontier for Human-Computer Interaction. Foundations and Trends® in Human-Computer Interaction, vol. 11, no. 1, pp. 1–62, 2017. This Foundations and Trends® issue was typeset in LaTeX using a class file designed by Neal Parikh. Printed on acid-free paper. ISBN: 978-1-68083-254-9 © 2017 O. Shaer, O. Nov, L. Westendorf, and M. Ball All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, mechanical, photocopying, recording or otherwise, without prior written permission of the publishers. Photocopying. In the USA: This journal is registered at the Copyright Clearance Center, Inc., 222 Rosewood Drive, Danvers, MA 01923. -

Next-Generation Sequencing for Cancer Diagnostics: a Practical Perspective

Review Article Next-Generation Sequencing for Cancer Diagnostics: a Practical Perspective Cliff Meldrum,1,4† Maria A Doyle,2† *Richard W Tothill3 1Molecular Pathology, Hunter Area Pathology Service, Newcastle, NSW 2310; 2Bioinformatics and 3Next-generation DNA Sequencing Facility Research Department and 4Pathology Department, Peter MacCallum Cancer Centre, Melbourne, Vic. 3002, Australia. †These authors contributed equally to this manuscript. *For correspondence: Dr Richard Tothill, [email protected] Abstract Next-generation sequencing (NGS) is arguably one of the most significant technological advances in the biological sciences of the last 30 years. The second generation sequencing platforms have advanced rapidly to the point that several genomes can now be sequenced simultaneously in a single instrument run in under two weeks. Targeted DNA enrichment methods allow even higher genome throughput at a reduced cost per sample. Medical research has embraced the technology and the cancer field is at the forefront of these efforts given the genetic aspects of the disease. World-wide efforts to catalogue mutations in multiple cancer types are underway and this is likely to lead to new discoveries that will be translated to new diagnostic, prognostic and therapeutic targets. NGS is now maturing to the point where it is being considered by many laboratories for routine diagnostic use. The sensitivity, speed and reduced cost per sample make it a highly attractive platform compared to other sequencing modalities. Moreover, as we identify more genetic determinants of cancer there is a greater need to adopt multi-gene assays that can quickly and reliably sequence complete genes from individual patient samples. Whilst widespread and routine use of whole genome sequencing is likely to be a few years away, there are immediate opportunities to implement NGS for clinical use. -

(12) United States Patent (10) Patent No.: US 9,334,531 B2 Li Et Al

US009334531B2 (12) United States Patent Li et al. (10) Patent No.: US 9,334,531 B2 (45) Date of Patent: *May 10, 2016 (54) NUCLEIC ACID AMPLIFICATION (71) Applicant: LIFE TECHNOLOGIES CORPORATION, Carlsbad, CA (US) (72) Inventors: Chieh-Yuan Li, El Cerrito, CA (US); David Ruff, San Francisco, CA (US); Shiaw-Min Chen, Fremont, CA (US); Jennifer O'Neil, Wakefield, MA (US); Rachel Kasinskas, Amesbury, MA (US); Jonathan Rothberg, Guilford, CT (US); Bin Li, Palo Alto, CA (US); Kai Qin Lao, Pleasanton, CA (US) (73) Assignee: Life Technologies Corporation, Carlsbad, CA (US) (56) References Cited U.S. PATENT DOCUMENTS: 5,223,414 A 6/1993 Zarling et al.; 5,616,478 A 4/1997 Chetverin et al.; 5,670,325 A 9/1997 Lapidus et al.; 5,928,870 A 7/1999 Lapidus et al.; 5,958,698 A 9/1999 Chetverin et al.; 6,001,568 A 12/1999 Chetverin et al.; 6,033,881 A 3/2000 Himmler et al.; 6,074,853 A 6/2000 Pati et al.; 6,306,590 B1 10/2001 Mehta et al.; 6,432,360 B1 8/2002 Church; 6,440,706 B1 8/2002 Vogelstein et al.; 6,511,803 B1 1/2003 Church et al.; 6,929,915 B2 8/2005 Benkovic et al.; 7,270,981 B2 9/2007 Armes et al.; 7,282,337 B1 10/2007 Harris; 7,399,590 B2 7/2008 Piepenburg et al.; 7,432,055 B2 * 10/2008 Pemov et al. 435/6.11; 7,435,561 B2 10/2008 Piepenburg et al. (*) Notice: Subject to any disclaimer, the term of this -

Multigenic Condition Risk Assessment in Direct-To-Consumer Genomic Services Melanie Swan, MBA

ARTICLE Multigenic condition risk assessment in direct-to-consumer genomic services Melanie Swan, MBA Purpose: Gene carrier status and pharmacogenomic data may be detectable from single nucleotide polymorphisms (SNPs), but SNP-based research concerning multigenic common disease such as diabetes, cancers, and cardiovascular disease is an emerging field. The many SNPs and loci that may relate to common disease have not yet been comprehensively identified and understood scientifically. In the interim, direct-to-consumer (DTC) genomic companies have forged ahead in developing composite risk interpretations for multigenic conditions. It is useful to understand how variance in risk interpretation may arise. Methods: A comprehensive study was conducted to analyze the 213 conditions covered by the 5 identifiable genome-wide DTC genomic companies, and the total SNPs (401) and loci (224) assessed in the 20 common disease conditions with the greatest overlapping coverage.

November 2009, cover 127, 46, and 28 conditions for $429, $985, and $999, respectively. These 3 services allow consumers to download their raw genotyping data, which can then be reviewed in other genome browsers such as the wiki-based SNPedia,1 where there are 35 publicly available whole and partial human genomes as of November 2009. Pathway Genomics and Gene Essence have launched more recently, in mid-2009, and cover 71 and 84 conditions for $299 and $1,195 respectively. SeqWright also provides a basic service, genotyping 1 million SNPs for $998. 23andMe, deCODEme, and Pathway Genomics provide 2 services, health and ancestry testing. Not a lot is known about the overall DTC genomic testing market size; however, 23andMe reported having 2 -

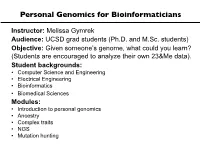

Personal Genomics for Bioinformaticians

Personal Genomics for Bioinformaticians Instructor: Melissa Gymrek Audience: UCSD grad students (Ph.D. and M.Sc. students) Objective: Given someone’s genome, what could you learn? (Students are encouraged to analyze their own 23andMe data). Student backgrounds: • Computer Science and Engineering • Electrical Engineering • Bioinformatics • Biomedical Sciences Modules: • Introduction to personal genomics • Ancestry • Complex traits • NGS • Mutation hunting Course Resources Course website: https://gymreklab.github.io/teaching/personal_genomics/personal_genomics_2017.html Syllabus: https://s3-us-west-2.amazonaws.com/cse291personalgenomics/genome_course_syllabus_winter17.pdf Materials for generating problem sets: https://github.com/gymreklab/personal-genomics-course-2017 Contact: [email protected] Bioinformatics/Genomics Courses @UCLA ¤ http://www.bioinformatics.ucla.edu/graduate-courses/ ¤ http://www.bioinformatics.ucla.edu/undergraduate-courses/ ¤ All courses require 1 year of programming and a probability course ¤ 3 courses are combined Graduate and Undergraduate Courses ¤ Undergraduate course enrollment is primarily students from Computer Science and the Undergraduate Bioinformatics Minor (Mostly Life Science Students) ¤ Graduate enrollment is mostly from the Bioinformatics Ph.D. program, Genetics and Genomics Ph.D. program, and CS Ph.D. program. ¤ 3 courses are only Graduate Courses ¤ Enrollment is mostly from the Bioinformatics Ph.D. program and CS Ph.D. program ¤ Courses are taught by faculty in Computer Science or Statistics (or have joint appointments there) Bioinformatics/Genomics Courses @UCLA ¤ CM221. Introduction to Bioinformatics ¤ Taught by Christopher Lee ¤ Focuses on Classical Bioinformatics Problems and Statistical Inference ¤ CM222. Algorithms in Bioinformatics ¤ Taught by Eleazar Eskin ¤ Focuses on Algorithms in Sequencing ¤ CM224. Computational Genetics ¤ Taught by Eran Halperin ¤ Focuses on Statistical Genetics and Machine Learning Methods ¤ CM225. Computational Methods in Genomics ¤ Taught by Jason Ernst and Bogdan Pasaniuc ¤ Has component of reading recent papers ¤ CM226. -

Personal Genomic Information Management and Personalized Medicine: Challenges, Current Solutions, and Roles of HIM Professionals

Personal Genomic Information Management and Personalized Medicine: Challenges, Current Solutions, and Roles of HIM Professionals by Amal Alzu’bi, MS; Leming Zhou, PhD; and Valerie Watzlaf, PhD, RHIA, FAHIMA Abstract In recent years, the term personalized medicine has received more and more attention in the field of healthcare. The increasing use of this term is closely related to the astonishing advancement in DNA sequencing technologies and other high-throughput biotechnologies. A large amount of personal genomic data can be generated by these technologies in a short time. Consequently, the needs for managing, analyzing, and interpreting these personal genomic data to facilitate personalized care are escalated. In this article, we discuss the challenges for implementing genomics-based personalized medicine in healthcare, current solutions to these challenges, and the roles of health information management (HIM) professionals in genomics-based personalized medicine. Introduction Personalized medicine utilizes personal medical information to tailor strategies and medications for diagnosing and treating diseases in order to maintain people’s health.1 In this medical practice, physicians combine results from all available patient data (such as symptoms, traditional medical test results, medical history and family history, and certain personal genomic information) so that they can make accurate diagnoses and determine -

Genomics Education in the Era of Personal Genomics: Academic, Professional, and Public Considerations

International Journal of Molecular Sciences Review Genomics Education in the Era of Personal Genomics: Academic, Professional, and Public Considerations Kiara V. Whitley, Josie A. Tueller and K. Scott Weber * Department of Microbiology and Molecular Biology, 4007 Life Sciences Building, 701 East University Parkway, Brigham Young University, Provo, UT 84602, USA; [email protected] (K.V.W.); [email protected] (J.A.T.) * Correspondence: [email protected]; Tel.: +1-801-422-6259 Received: 31 December 2019; Accepted: 22 January 2020; Published: 24 January 2020 Abstract: Since the completion of the Human Genome Project in 2003, genomic sequencing has become a prominent tool used by diverse disciplines in modern science. In the past 20 years, the cost of genomic sequencing has decreased exponentially, making it affordable and accessible. Bioinformatic and biological studies have produced significant scientific breakthroughs using the wealth of genomic information now available. Alongside the scientific benefit of genomics, companies offer direct-to-consumer genetic testing which provide health, trait, and ancestry information to the public. A key area that must be addressed is education about what conclusions can be made from this genomic information and integrating genomic education with foundational genetic principles already taught in academic settings. The promise of personal genomics providing disease treatment is exciting, but many challenges remain to validate genomic predictions and diagnostic correlations. Ethical and societal concerns must also be addressed regarding how personal genomic information is used. This genomics revolution provides a powerful opportunity to educate students, clinicians, and the public on scientific and ethical issues in a personal way to increase learning. -

Personal Genomics Wynn K Meyer, Phd Bioscience in the 21St Century Lehigh University November 18, 2019 Draw Your Genome

Personal Genomics Wynn K Meyer, PhD Bioscience in the 21st Century Lehigh University November 18, 2019 Draw your genome. On your drawing, please label: • The region(s) that can help you figure out where your ancestors lived. • The region(s) that determine whether you will fall asleep in this class. • The region(s) that determine your eye color. • The region(s) that determine your skin color. • The region(s) that contribute to your risk of cancer. Guessing is fine! What does your drawing look like? Public domain photo / NASA] Courtesy: National Human Genome Research Institute [Public domain] If things were simple: Heart disease Eye color Skin color Cancer Stroke Diabetes Arthritis Courtesy: National Human Genome Research Institute [Public domain] Why not? How do we link phenotypes with the genetic variants that underlie them? Arthritis Heart disease Cancer Skin color Diabetes Stroke Eye color What are genetic variants? The term “allele” can refer either Zoom WAY in… to the nucleotide at the SNP Allele 1 (A/G or T/A) … …TTGACCCGTTATTG… or to the combination across both SNPs (AT/GA). …TTGGCCCGTTATAG… Allele 2 Either way, it represents one “version” of the sequence. Single Nucleotide Polymorphisms The combination of alleles that a (SNPs) person has at a SNP is called a genotype. What process generates SNPs? E.g., for SNP1: AA, AG, and GG. What do genetic variants do? Mostly nothing! From the 1000 Genomes Project Consortium (Nature 2015): • We find that a typical genome differs from Why? the reference human genome at 4.1 million to 5.0 million sites (out of 3.2 billion). -

Personal Genomes and Ethical Issues

Personal Genomes and Ethical Issues 02-223 How to Analyze Your Own Genome Declining Cost of Genome Sequencing • Genome sequencing is expected to happen routinely in the near future Schadt, MSB, 2012 The era of big data: the genome data are already being collected on a large scale and being mined for scientific discovery to drive more accurate descriptive and predictive models that inform decision making for the best diagnosis and treatment choice for a given patient. Would you post your genome on the web? Genomes and Privacy • DNA sequence data contain information that can be used to uniquely identify an individual (i.e., genome sequences are like fingerprints) • Balancing the need for scientific study and privacy Genomes and Privacy • Privacy concerns – Genome sequence data and other related types of data (gene expressions, clinical records, epigenetic data, etc.) are collected for a large number of patients for medical research – Most types of data are freely available through the internet except for genotype data • NCBI GEO database for gene expression data • The Cancer Genome Atlas data portals – Genotype data are available to scientists through restricted access – Protecting participants’ privacy through informed consent https://tcga-data.nci.nih.gov/tcga/tcgaHome2.jsp The Cancer Genome Atlas (TCGA) Data The Cancer Genome Atlas (TCGA) Data https://tcga-data.nci.nih.gov/tcga/tcgaCancerDetails.jsp?diseaseType=LAML&diseaseName=Acute%20Myeloid%20Leukemia Access Control for TCGA Data • Open access data tier – De-identified clinical and demographic data – Gene -

Meeting Report: “Metagenomics, Metadata and Meta-Analysis” (M3) Workshop at the Pacific Symposium on Biocomputing 2010

Standards in Genomic Sciences (2010) 2:357-360 DOI:10.4056/sigs.802738 Meeting Report: “Metagenomics, Metadata and Meta-analysis” (M3) Workshop at the Pacific Symposium on Biocomputing 2010 Lynette Hirschman1, Peter Sterk2,3, Dawn Field2, John Wooley4, Guy Cochrane5, Jack Gilbert6, Eugene Kolker7, Nikos Kyrpides8, Folker Meyer9, Ilene Mizrachi10, Yasukazu Nakamura11, Susanna-Assunta Sansone5, Lynn Schriml12, Tatiana Tatusova10, Owen White12 and Pelin Yilmaz13 1 Information Technology Center, The MITRE Corporation, Bedford, MA, USA 2 NERC Center for Ecology and Hydrology, Oxford, OX1 3SR, UK 3 Wellcome Trust Sanger Institute, Hinxton, Cambridge, UK 4 University of California San Diego, La Jolla, CA, USA 5 European Molecular Biology Laboratory (EMBL), European Bioinformatics Institute (EBI), Hinxton, Cambridge, UK 6 Plymouth Marine Laboratory (PML), Plymouth, UK 7 Seattle Children’s Hospital, Seattle, WA, USA 8 Genome Biology Program, DOE Joint Genome Institute, Walnut Creek, California, USA 9 Argonne National Laboratory, 9700 South Cass Avenue, Argonne, IL 60439, USA 10 National Center for Biotechnology Information, National Library of Medicine, National Institutes of Health, Bethesda, MD, USA 11 DNA Data Bank of Japan, National Institute of Genetics, Yata, Mishima, Japan 12 University of Maryland School of Medicine, Baltimore, MD, USA 13 Microbial Genomics Group, Max Planck Institute for Marine Microbiology & Jacobs University Bremen, Bremen, Germany This report summarizes the M3 Workshop held at the January 2010 Pacific Symposium on Biocomputing. The workshop, organized by Genomic Standards Consortium members, included five contributed talks, a series of short presentations from stakeholders in the genomics standards community, a poster session, and, in the evening, an open discussion session to review current projects and examine future directions for the GSC and its stakeholders.