Lecture 17 Outliers & Influential Observations

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

A Review on Outlier/Anomaly Detection in Time Series Data

ANE BLÁZQUEZ-GARCÍA and ANGEL CONDE, Ikerlan Technology Research Centre, Basque Research and Technology Alliance (BRTA), Spain
USUE MORI, Intelligent Systems Group (ISG), Department of Computer Science and Artificial Intelligence, University of the Basque Country (UPV/EHU), Spain
JOSE A. LOZANO, Intelligent Systems Group (ISG), Department of Computer Science and Artificial Intelligence, University of the Basque Country (UPV/EHU), Spain and Basque Center for Applied Mathematics (BCAM), Spain

Recent advances in technology have brought major breakthroughs in data collection, enabling a large amount of data to be gathered over time and thus generating time series. Mining this data has become an important task for researchers and practitioners in the past few years, including the detection of outliers or anomalies that may represent errors or events of interest. This review aims to provide a structured and comprehensive state-of-the-art on outlier detection techniques in the context of time series. To this end, a taxonomy is presented based on the main aspects that characterize an outlier detection technique.

Additional Key Words and Phrases: Outlier detection, anomaly detection, time series, data mining, taxonomy, software

1 INTRODUCTION

Recent advances in technology allow us to collect a large amount of data over time in diverse research areas. Observations that have been recorded in an orderly fashion and which are correlated in time constitute a time series. Time series data mining aims to extract all meaningful knowledge from this data, and several mining tasks (e.g., classification, clustering, forecasting, and outlier detection) have been considered in the literature [Esling and Agon 2012; Fu 2011; Ratanamahatana et al.

Applied Biostatistics: Mean and Standard Deviation

Health Sciences M.Sc. Programme, Applied Biostatistics

Mean and Standard Deviation

The mean

The median is not the only measure of central value for a distribution. Another is the arithmetic mean or average, usually referred to simply as the mean. This is found by taking the sum of the observations and dividing by their number. The mean is often denoted by a little bar over the symbol for the variable, e.g. x̄. The sample mean has much nicer mathematical properties than the median and is thus more useful for the comparison methods described later. The median is a very useful descriptive statistic, but not much used for other purposes.

Median, mean and skewness

The sum of the 57 FEV1s is 231.51 and hence the mean is 231.51/57 = 4.06. This is very close to the median, 4.1, so the median is within 1% of the mean. This is not so for the triglyceride data. The median triglyceride is 0.46 but the mean is 0.51, which is higher. The median is 10% away from the mean. If the distribution is symmetrical the sample mean and median will be about the same, but in a skew distribution they will not. If the distribution is skew to the right, as for serum triglyceride, the mean will be greater; if it is skew to the left, the median will be greater. This is because the values in the tails affect the mean but not the median. Figure 1 shows the positions of the mean and median on the histogram of triglyceride.
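
The relationship between mean, median and skewness described in this excerpt can be checked with a short Python sketch. Only the FEV1 total (231.51 over 57 observations) comes from the text; the `skewed` list is a made-up right-skewed sample for illustration and is not the triglyceride data.

```python
import statistics

# Figures quoted in the excerpt: 57 FEV1 values summing to 231.51.
fev1_mean = 231.51 / 57
print(round(fev1_mean, 2))  # 4.06

# Hypothetical right-skewed sample (NOT the triglyceride data):
# one large value in the upper tail pulls the mean up but not the median.
skewed = [0.3, 0.4, 0.4, 0.5, 0.5, 0.6, 0.7, 1.9]
right_mean = statistics.mean(skewed)
right_median = statistics.median(skewed)

# Mirroring the sample gives a left-skewed one, where the order reverses.
mirrored = [-v for v in skewed]
left_mean = statistics.mean(mirrored)
left_median = statistics.median(mirrored)

print(right_mean > right_median)  # mean above median when skewed right
print(left_median > left_mean)    # median above mean when skewed left
```

The tail value changes the mean because every observation enters the sum, whereas the median depends only on the middle of the ordered data.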

Outlier Identification

STATGRAPHICS – Rev. 7/6/2009

Outlier Identification

Summary

The Outlier Identification procedure is designed to help determine whether or not a sample of n numeric observations contains outliers. By “outlier”, we mean an observation that does not come from the same distribution as the rest of the sample. Both graphical methods and formal statistical tests are included. The procedure will also save a column back to the datasheet identifying the outliers in a form that can be used in the Select field on other data input dialog boxes.

Sample StatFolio: outlier.sgp

Sample Data: The file bodytemp.sgd contains data describing the body temperature of a sample of n = 130 people. It was obtained from the Journal of Statistical Education Data Archive (www.amstat.org/publications/jse/jse_data_archive.html) and originally appeared in the Journal of the American Medical Association. The first 20 rows of the file are shown below.

| Temperature | Gender | Heart Rate |
|---|---|---|
| 98.4 | Male | 84 |
| 98.4 | Male | 82 |
| 98.2 | Female | 65 |
| 97.8 | Female | 71 |
| 98 | Male | 78 |
| 97.9 | Male | 72 |
| 99 | Female | 79 |
| 98.5 | Male | 68 |
| 98.8 | Female | 64 |
| 98 | Male | 67 |
| 97.4 | Male | 78 |
| 98.8 | Male | 78 |
| 99.5 | Male | 75 |
| 98 | Female | 73 |
| 100.8 | Female | 77 |
| 97.1 | Male | 75 |
| 98 | Male | 71 |
| 98.7 | Female | 72 |
| 98.9 | Male | 80 |
| 99 | Male | 75 |

© 2009 by StatPoint Technologies, Inc.

Data Input

The data to be analyzed consist of a single numeric column containing n = 2 or more observations.
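
As a rough illustration of a graphical-style outlier screen, the sketch below applies Tukey's 1.5 × IQR fences to the 20 temperatures listed in the excerpt. This is an assumption-laden stand-in, not the STATGRAPHICS procedure: it uses only the first 20 of the 130 rows, and the quartile convention is whatever Python's `statistics.quantiles` uses by default.

```python
import statistics

# The 20 body temperatures shown in the excerpt (first rows of bodytemp.sgd).
temperatures = [
    98.4, 98.4, 98.2, 97.8, 98.0, 97.9, 99.0, 98.5, 98.8, 98.0,
    97.4, 98.8, 99.5, 98.0, 100.8, 97.1, 98.0, 98.7, 98.9, 99.0,
]

# Quartiles with the default ("exclusive") convention of statistics.quantiles.
q1, _, q3 = statistics.quantiles(temperatures, n=4)
iqr = q3 - q1

# Tukey's fences: points beyond 1.5 * IQR from the quartiles are flagged.
lower_fence = q1 - 1.5 * iqr
upper_fence = q3 + 1.5 * iqr

outliers = [t for t in temperatures if t < lower_fence or t > upper_fence]
print(outliers)
```

A formal test on the full sample could reach a different conclusion, so a flag from this screen is only a prompt to look more closely at the observation.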

A Machine Learning Approach to Outlier Detection and Imputation of Missing Data

Ninth IFC Conference on “Are post-crisis statistical initiatives completed?”, Basel, 30-31 August 2018

Nicola Benatti, European Central Bank

This paper was prepared for the meeting. The views expressed are those of the authors and do not necessarily reflect the views of the BIS, the IFC or the central banks and other institutions represented at the meeting.

In the era of ready-to-go analysis of high-dimensional datasets, data quality is essential for economists to guarantee robust results. Traditional techniques for outlier detection tend to exclude the tails of distributions and ignore the data generation processes of specific datasets. At the same time, multiple imputation of missing values is traditionally an iterative process based on linear estimations, implying the use of simplified data generation models. In this paper I propose the use of common machine learning algorithms (i.e. boosted trees, cross validation and cluster analysis) to determine the data generation models of a firm-level dataset in order to detect outliers and impute missing values.

Keywords: machine learning, outlier detection, imputation, firm data

JEL classification: C81, C55, C53, D22

LSAR: Efficient Leverage Score Sampling Algorithm for the Analysis of Big Time Series Data

Ali Eshragh, Fred Roosta, Asef Nazari, Michael W. Mahoney

December 30, 2019

arXiv:1911.12321v2 [stat.ME] 26 Dec 2019

Abstract

We apply methods from randomized numerical linear algebra (RandNLA) to develop improved algorithms for the analysis of large-scale time series data. We first develop a new fast algorithm to estimate the leverage scores of an autoregressive (AR) model in big data regimes. We show that the accuracy of approximations lies within $(1 + O(\varepsilon))$ of the true leverage scores with high probability. These theoretical results are subsequently exploited to develop an efficient algorithm, called LSAR, for fitting an appropriate AR model to big time series data. Our proposed algorithm is guaranteed, with high probability, to find the maximum likelihood estimates of the parameters of the underlying true AR model and has a worst case running time that significantly improves those of the state-of-the-art alternatives in big data regimes. Empirical results on large-scale synthetic as well as real data highly support the theoretical results and reveal the efficacy of this new approach.

1 Introduction

A time series is a collection of random variables indexed according to the order in which they are observed in time. The main objective of time series analysis is to develop a statistical model to forecast the future behavior of the system. At a high level, the main approaches for this include the ones based on considering the data in its original time domain and those arising from analyzing the data in the corresponding frequency domain [31, Chapter 1].

Effects of Outliers

• The mean is a good measure to use to describe data that are close in value.
• The median more accurately describes data with an outlier.
• The mode is a good measure to use when you have categorical data; for example, if each student records his or her favorite color, the color (a category) listed most often is the mode of the data.

In the data set below, the value 12 is much less than the other values in the set. An extreme value such as this is called an outlier.

35, 38, 27, 12, 30, 41, 31, 35

[Dot plot of the data on a number line from 10 to 42]

Additional Example 2: Exploring the Effects of Outliers on Measures of Central Tendency

The data shows Sara’s scores for the last 5 math tests: 88, 90, 55, 94, and 89. Identify the outlier in the data set. Then determine how the outlier affects the mean, median, and mode of the data.

Ordered data: 55, 88, 89, 90, 94. The outlier is 55.

With the Outlier
mean: 55 + 88 + 89 + 90 + 94 = 416; 416 ÷ 5 = 83.2
median: 55, 88, 89, 90, 94; the middle value is 89
mode: none
The mean is 83.2. The median is 89. There is no mode.

Without the Outlier
mean: 88 + 89 + 90 + 94 = 361; 361 ÷ 4 = 90.25
median: 88, 89, 90, 94; (89 + 90) ÷ 2 = 89.5
mode: none (no value repeats)
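
The worked example with Sara's scores can be reproduced with a few lines of Python. The helper names `mode_or_none` and `summarize` are hypothetical and introduced only for this sketch; treating "every value occurs once" as "no mode" is the convention the slide uses.

```python
import statistics
from collections import Counter


def mode_or_none(values):
    """Return the most frequent value(s), or None when no value repeats."""
    counts = Counter(values)
    top = max(counts.values())
    if top == 1:
        return None  # every value occurs once: no mode
    return [v for v, c in counts.items() if c == top]


def summarize(values):
    """Mean, median and mode of a list of numbers."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "mode": mode_or_none(values),
    }


scores = [88, 90, 55, 94, 89]  # Sara's scores from the example
with_outlier = summarize(scores)
without_outlier = summarize([s for s in scores if s != 55])

print(with_outlier)
print(without_outlier)
```

Dropping the single low score moves the mean by about seven points but the median by only half a point, which is the slide's argument for preferring the median when an outlier is present.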

How Significant Is a Boxplot Outlier?

Journal of Statistics Education, Volume 19, Number 2 (2011)

Robert Dawson, Saint Mary’s University

www.amstat.org/publications/jse/v19n2/dawson.pdf

Copyright © 2011 by Robert Dawson all rights reserved. This text may be freely shared among individuals, but it may not be republished in any medium without express written consent from the author and advance notification of the editor.

Key Words: Boxplot; Outlier; Significance; Spreadsheet; Simulation.

Abstract

It is common to consider Tukey’s schematic (“full”) boxplot as an informal test for the existence of outliers. While the procedure is useful, it should be used with caution, as at least 30% of samples from a normally-distributed population of any size will be flagged as containing an outlier, while for small samples (N<10) even extreme outliers indicate little. This fact is most easily seen using a simulation, which ideally students should perform for themselves. The majority of students who learn about boxplots are not familiar with the tools (such as R) that upper-level students might use for such a simulation. This article shows how, with appropriate guidance, a class can use a spreadsheet such as Excel to explore this issue.

1. Introduction

Exploratory data analysis suddenly got a lot cooler on February 4, 2009, when a boxplot was featured in Randall Munroe's popular Webcomic xkcd.

Figure 1. “Boyfriend” (Feb. 4 2009, http://xkcd.com/539/). Used by permission of Randall Munroe.

But is Megan justified in claiming “statistical significance”? We shall explore this intriguing question using Microsoft Excel.
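
The article carries out its simulation in a spreadsheet; the following is a minimal Python sketch of the same idea, not the author's worksheet. The function names, the sample size of 20, the trial count and the seed are all arbitrary choices made here, and the quartile convention is the default of `statistics.quantiles`, which may differ from the one used in the article, so the estimated fraction need not match the figure quoted in the abstract.

```python
import random
import statistics


def has_boxplot_outlier(sample):
    """True if any point lies beyond Tukey's 1.5 * IQR fences."""
    q1, _, q3 = statistics.quantiles(sample, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return any(x < low or x > high for x in sample)


def flagged_fraction(sample_size, trials, seed=0):
    """Fraction of standard-normal samples with at least one flagged point."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    flagged = 0
    for _ in range(trials):
        sample = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]
        if has_boxplot_outlier(sample):
            flagged += 1
    return flagged / trials


fraction = flagged_fraction(sample_size=20, trials=2000)
print(f"Samples of 20 with at least one boxplot outlier: {fraction:.1%}")
```

Rerunning with different sample sizes shows how often purely normal data trips the fences, which is the caution the abstract raises about reading a flagged point as evidence of contamination.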

Lecture 14 Testing for Kurtosis

CHE384, From Data to Decisions: Measurement, Uncertainty, Analysis, and Modeling
Lecture 14: Testing for Kurtosis
Chris A. Mack, Adjunct Associate Professor
http://www.lithoguru.com/scientist/statistics/
9/8/2016, © Chris Mack, 2016

Kurtosis

• For any distribution, the kurtosis (sometimes called the excess kurtosis) is defined as $\gamma_2 = \mu_4/\sigma^4 - 3$, where $\mu_4$ is the fourth central moment (old notation $= \beta_2 - 3$)
• For a unimodal, symmetric distribution,
  – a positive kurtosis means “heavy tails” and a more peaked center compared to a normal distribution
  – a negative kurtosis means “light tails” and a more spread center compared to a normal distribution

Kurtosis Examples

• For the Student’s t distribution, the excess kurtosis is $6/(DF - 4)$ for DF > 4 (for DF ≤ 4 the kurtosis is infinite)
• For a uniform distribution, the excess kurtosis is $-1.2$

One Impact of Excess Kurtosis

• For a normal distribution, the sample variance will have an expected value of $\sigma^2$ and a variance of $\dfrac{2\sigma^4}{n-1}$
• For a distribution with excess kurtosis $\gamma_2$, the variance of the sample variance is $\dfrac{2\sigma^4}{n-1}\left(1 + \dfrac{n-1}{2n}\,\gamma_2\right)$

Sample Kurtosis

• For a sample of size n, the sample kurtosis is $g_2 = \dfrac{\frac{1}{n}\sum_i (x_i - \bar{x})^4}{\left(\frac{1}{n}\sum_i (x_i - \bar{x})^2\right)^2} - 3$
• For large n, the sampling distribution of $g_2$ approaches Normal with mean 0 and variance of 24/n
• For small samples, this estimator is biased

Sample Kurtosis

• An unbiased estimator of the sample excess kurtosis is $G_2 = \dfrac{n-1}{(n-2)(n-3)}\left[(n+1)\,g_2 + 6\right]$
• Standard Error: $\sqrt{\dfrac{24\,n\,(n-1)^2}{(n-3)(n-2)(n+3)(n+5)}}$

D. N. Joanes and C. A. Gill, “Comparing Measures of Sample Skewness and Kurtosis”, The Statistician, 47(1), 183–189 (1998).
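
A small Python sketch of the sample kurtosis measures may help. It assumes the standard definitions associated with the Joanes and Gill paper cited in the slides: the moment-based $g_2 = m_4/m_2^2 - 3$, the adjusted $G_2 = \frac{n-1}{(n-2)(n-3)}[(n+1)g_2 + 6]$, and the usual normal-theory standard error for $G_2$. The function names and the five-point data set are made up for illustration.

```python
import math


def sample_excess_kurtosis(data):
    """Moment-based excess kurtosis g2 = m4 / m2**2 - 3 (biased for small n)."""
    n = len(data)
    mean = sum(data) / n
    m2 = sum((x - mean) ** 2 for x in data) / n
    m4 = sum((x - mean) ** 4 for x in data) / n
    return m4 / m2 ** 2 - 3


def adjusted_excess_kurtosis(data):
    """Small-sample adjusted estimator G2; needs at least 4 observations."""
    n = len(data)
    g2 = sample_excess_kurtosis(data)
    return (n - 1) / ((n - 2) * (n - 3)) * ((n + 1) * g2 + 6)


def kurtosis_standard_error(n):
    """Standard error of G2 under normality; needs n > 3."""
    return math.sqrt(24 * n * (n - 1) ** 2 / ((n - 3) * (n - 2) * (n + 3) * (n + 5)))


data = [1, 2, 3, 4, 5]  # hypothetical, evenly spaced values
g2 = sample_excess_kurtosis(data)
G2 = adjusted_excess_kurtosis(data)
se = kurtosis_standard_error(len(data))
print(g2, G2, se)
```

Comparing `G2` with a multiple of `se` gives a rough check on whether the excess kurtosis differs from zero, though with a handful of points the standard error is so large that little can be concluded.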

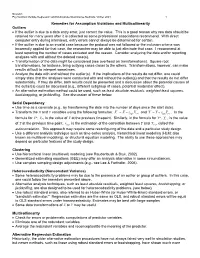

Remedies for Assumption Violations and Multicollinearity

Newsom, Psy 522/622 Multiple Regression and Multivariate Quantitative Methods, Winter 2021

Outliers

• If the outlier is due to a data entry error, just correct the value. This is a good reason why raw data should be retained for many years after it is collected, as some professional associations recommend. With direct computer entry during interviews, entry errors cannot always be determined for certain.
• If the outlier is due to an invalid case because the protocol was not followed or the inclusion criteria were incorrectly applied for that case, the researcher may be able to just eliminate that case. I recommend at least reporting the number of cases excluded and the reason. Consider analyzing the data and/or reporting analyses with and without the deleted case(s).
• Transformation of the data might be considered (see overhead on transformations). Square root transformations, for instance, bring outlying cases closer to the others. Transformations, however, can make results difficult to interpret sometimes.
• Analyze the data with and without the outlier(s). If the implications of the results do not differ, one could simply state that the analyses were conducted with and without the outlier(s) and that the results do not differ substantially. If they do differ, both results could be presented along with a discussion of the potential causes of the outlier(s) (e.g., different subgroup of cases, potential moderator effect).
• An alternative estimation method could be used, such as least absolute residuals, weighted least squares, bootstrapping, or jackknifing. See discussion of these below.

Random Variables and Applications

OPRE 6301

Random Variables.

As noted earlier, variability is omnipresent in the business world. To model variability probabilistically, we need the concept of a random variable. A random variable is a numerically valued variable which takes on different values with given probabilities.

Examples:
• The return on an investment in a one-year period
• The price of an equity
• The number of customers entering a store
• The sales volume of a store on a particular day
• The turnover rate at your organization next year

Types of Random Variables.

Discrete Random Variable: one that takes on a countable number of possible values, e.g.,
• total of roll of two dice: 2, 3, ..., 12
• number of desktops sold: 0, 1, ...
• customer count: 0, 1, ...

Continuous Random Variable: one that takes on an uncountable number of possible values, e.g.,
• interest rate: 3.25%, 6.125%, ...
• task completion time: a nonnegative value
• price of a stock: a nonnegative value

Basic Concept: Integer or rational numbers are discrete, while real numbers are continuous.

Probability Distributions.

“Randomness” of a random variable is described by a probability distribution. Informally, the probability distribution specifies the probability or likelihood for a random variable to assume a particular value. Formally, let X be a random variable and let x be a possible value of X. Then, we have two cases.

Discrete: the probability mass function of X specifies $P(x) \equiv P(X = x)$ for all possible values of x.

Continuous: the probability density function of X is a function f(x) that is such that $f(x) \cdot h \approx P(x < X \le x + h)$ for small positive h.
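
To make the discrete case concrete, here is a short Python sketch that builds the probability mass function for the total of two dice, one of the examples listed in the excerpt. It assumes two fair six-sided dice, which the text does not state explicitly; exact fractions are used so the probabilities sum to exactly 1.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Enumerate the 36 equally likely outcomes of two fair six-sided dice
# and count how many outcomes give each total.
counts = Counter(a + b for a, b in product(range(1, 7), repeat=2))

# Probability mass function: P(x) = P(X = x) for each possible total x.
pmf = {total: Fraction(count, 36) for total, count in sorted(counts.items())}

for total, prob in pmf.items():
    print(total, prob)
```

The possible totals 2 through 12 form a countable set, which is what makes the variable discrete; a continuous variable would need a density function in place of this table.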

Calculating Variance and Standard Deviation

VARIANCE AND STANDARD DEVIATION

Recall that the range is the difference between the upper and lower limits of the data. While this is important, it does have one major disadvantage. It does not describe the variation among the variables. For instance, both of these sets of data have the same range, yet their values are definitely different.

90, 90, 90, 98, 90 (Range = 8)
1, 6, 8, 1, 9, 5 (Range = 8)

To better describe the variation, we will introduce two other measures of variation: variance and standard deviation (the variance is the square of the standard deviation). These measures tell us how much the actual values differ from the mean. The larger the standard deviation, the more spread out the values. The smaller the standard deviation, the less spread out the values. This measure is particularly helpful to teachers as they try to find whether their students’ scores on a certain test are closely related to the class average.

To find the standard deviation of a set of values:
a. Find the mean of the data
b. Find the difference (deviation) between each of the scores and the mean
c. Square each deviation
d. Sum the squares
e. Dividing by one less than the number of values, find the “mean” of this sum (the variance*)
f. Find the square root of the variance (the standard deviation)

*Note: In some books, the variance is found by dividing by n. In statistics it is more useful to divide by n − 1.

EXAMPLE

Find the variance and standard deviation of the following scores on an exam: 92, 95, 85, 80, 75, 50

SOLUTION

First we find the mean of the data:

Mean = (92 + 95 + 85 + 80 + 75 + 50) / 6 = 477 / 6 = 79.5

Then we find the difference between each score and the mean (deviation).
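
Steps a through f can be followed literally in Python for the exam scores in the example. The excerpt breaks off after the mean, so the variance and standard deviation printed here are computed by this sketch and are not quoted from the source; the cross-check against `statistics.variance` uses the same n − 1 divisor.

```python
import math
import statistics

scores = [92, 95, 85, 80, 75, 50]  # exam scores from the example

# a. Find the mean of the data.
mean = sum(scores) / len(scores)

# b. Find the deviation of each score from the mean.
deviations = [s - mean for s in scores]

# c. Square each deviation.  d. Sum the squares.
squared_deviations = [d ** 2 for d in deviations]
sum_of_squares = sum(squared_deviations)

# e. Divide by one less than the number of values to get the variance.
variance = sum_of_squares / (len(scores) - 1)

# f. Take the square root to get the standard deviation.
std_dev = math.sqrt(variance)

print(mean, variance, round(std_dev, 2))

# Cross-check with the standard library, which also divides by n - 1.
assert math.isclose(variance, statistics.variance(scores))
```

Dividing by n instead of n − 1 would give the population variance, which corresponds to `statistics.pvariance` in the standard library.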

Leveraging for Big Data Regression

Focus Article

Ping Ma and Xiaoxiao Sun

Rapid advance in science and technology in the past decade brings an extraordinary amount of data, offering researchers an unprecedented opportunity to tackle complex research challenges. The opportunity, however, has not yet been fully utilized, because effective and efficient statistical tools for analyzing super-large dataset are still lacking. One major challenge is that the advance of computing resources still lags far behind the exponential growth of database. To facilitate scientific discoveries using current computing resources, one may use an emerging family of statistical methods, called leveraging. Leveraging methods are designed under a subsampling framework, in which one samples a small proportion of the data (subsample) from the full sample, and then performs intended computations for the full sample using the small subsample as a surrogate. The key of the success of the leveraging methods is to construct nonuniform sampling probabilities so that influential data points are sampled with high probabilities. These methods stand as the very unique development of their type in big data analytics and allow pervasive access to massive amounts of information without resorting to high performance computing and cloud computing. © 2014 Wiley Periodicals, Inc.

How to cite this article: WIREs Comput Stat 2014. doi: 10.1002/wics.1324

Keywords: big data; subsampling; estimation; least squares; leverage

INTRODUCTION

Rapid advance in science and technology in the past decade brings an extraordinary amount of data that were inaccessible just a decade ago, offering researchers an unprecedented opportunity to […]

[…] of FPGA (field-programmable gate array)-based accelerators are ongoing in both academia and industry (for example, with TimeLogic and Convey Computers). However, none of these technologies by themselves can address the big data problem accurately and at scale.
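
The subsampling idea in the abstract can be sketched for the simplest case, a straight-line regression with an intercept, where the leverage of point i has the closed form $h_i = 1/n + (x_i - \bar{x})^2 / \sum_j (x_j - \bar{x})^2$. The code below is an illustrative sketch under that assumption and not the authors' implementation: the function names, the toy data, and the choice to return inverse-probability weights for a later weighted fit are all assumptions made here.

```python
import random


def leverage_scores(x):
    """Leverage h_i of each point in a simple linear regression with intercept."""
    n = len(x)
    mean = sum(x) / n
    sxx = sum((xi - mean) ** 2 for xi in x)
    return [1 / n + (xi - mean) ** 2 / sxx for xi in x]


def leverage_subsample(x, y, size, seed=0):
    """Draw rows with probability proportional to leverage, with replacement.

    Returns (x_i, y_i, weight_i) triples, where weight_i = 1 / pi_i could be
    used to reweight a least-squares fit on the subsample.
    """
    scores = leverage_scores(x)
    total = sum(scores)
    probs = [h / total for h in scores]
    rng = random.Random(seed)  # fixed seed so the draw is repeatable
    picked = rng.choices(range(len(x)), weights=probs, k=size)
    return [(x[i], y[i], 1 / probs[i]) for i in picked]


# Hypothetical toy data: the last point sits far from the others in x.
x = [1.0, 2.0, 3.0, 4.0, 5.0, 20.0]
y = [1.1, 1.9, 3.2, 3.9, 5.1, 19.5]

scores = leverage_scores(x)
subsample = leverage_subsample(x, y, size=3)
print([round(h, 3) for h in scores])
print(subsample)
```

The leverages sum to the number of fitted coefficients (two here), and the point at x = 20 receives by far the largest sampling probability, which is the sense in which influential points are sampled with high probability.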