TR-4684: Implementing and Configuring Modern SANs with NVMe/FC

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

-

Kosovo Commercial Guide

Kosovo Table of Contents Doing Business in Kosovo ____________________________ 6 Market Overview ___________________________________ 6 Market Challenges __________________________________ 7 Market Opportunities ________________________________ 8 Market Entry Strategy ________________________________ 9 Political and Economic Environment ____________________ 11 Selling US Products & Services ________________________ 11 Using an Agent to Sell US Products and Services _________________ 11 Establishing an Office ________________________________ 11 Franchising ______________________________________ 11 Direct Marketing ___________________________________ 12 Joint Ventures/Licensing ______________________________ 12 Selling to the Government ______________________________ 12 Distribution & Sales Channels____________________________ 13 Express Delivery ___________________________________ 13 Selling Factors & Techniques ____________________________ 13 eCommerce ______________________________________ 14 Overview ____________________________________________ 14 Current Market Trends ___________________________________ 14 Domestic eCommerce (B2C) ________________________________ 14 Cross-Border eCommerce __________________________________ 14 Online Payment________________________________________ 15 Major Buying Holidays ___________________________________ 15 Social Media __________________________________________ 15 Trade Promotion & Advertising ___________________________ 15 Pricing _________________________________________ 19 Sales Service/Customer -

Iscsi存储和存储多路径介绍 综合产品支持部——李树兵 01 Iscsi存储介绍

iSCSI存储和存储多路径介绍 综合产品支持部——李树兵 01 iSCSI存储介绍 02 Linux DM multipath(多路径)介绍 01 iSCSI存储介绍 02 Linux DM multipath(多路径)介绍 SCSI(Small Computer System Interface)历史 • SISI小型计算机系统接口技术是存储设备最基本的标准协议,但是他通常需要设备互相靠近并用SCSI总 线连接。 • SCSI-1 • 1986年ANSI标准,X3.131-1986 • SCSI-2 • 1994年ANSI标准,X3.131-1994 • SCSI-3 • 发展到SCSI-3,已经过于复杂,不适合作为单独的一个标准;在某次修订中,T10委员会决定把 SCSI分为更小的单元;并且将某些单元的维护工作移交给其他组织。SCSI-3是这些子标准的集合, 表示第三代SCSI。 • iSCSI属于SCSI-3的传输协议层(类似于FCP),由IETF维护。 • 目前还没有SCSI-4 SCSI-3架构模型 Architecture Model 特定设备命令集 Device-Type Specific Command Sets 架构模型 基础命令集 Shared Command Set SCSI传输协议 SCSI Transport Protocols 物理连接 Interconnects 特定设备命令集:包括磁盘设备的“SCSI块命令(SCSI Block Commands)”等 基础命令集:所有SCSI设备都必须实现的“基础命令(SCSI Primary Commands)” SCSI传输协议:譬如iSCSI,FCP 物理连接:譬如光纤通道,internet 架构模型:定义了SCSI系统模型和各单元的功能分工 SCSI通讯模型 target initiator 任务分发器 SCSI SCSI通道 SCSI port port 逻辑单元 客户端 任务管理器 服务器 见下页的概念介绍 iSCSI正是SCSI通道的一种 SCSI主要实体介绍 • SCSI是客户端-服务器架构 • 在SCSI术语里,客户端叫initiator,服务器叫target • Initiator通过SCSI通道向target发送请求,target通过SCSI通道向initiator发送响应 • SCSI通道连接的是SCSI Port,SCSI initiator port和SCSI target port • 所以initiator至少必须包括一个SCSI Port,和一个能发起请求的客户端 • 在SCSI里,target的概念比较麻烦(可能是历史原因),它并很与initiator对应 • Target需要包括一个SCSI port,任务分发器和逻辑单元 • SCSI port用来连接SCSI通道,任务分发器负责将客户端的请求分到到适当的逻辑单元,而逻 辑单元才是真正处理任务的实体 • 通常一个逻辑单元都支持多任务环境,所以需要包含一个任务管理器和真正响应请求的服务器 • Initiator用一种叫做CDB(Command Descriptor Blocks)的数据结构封装请求 分布式SCSI通讯模型 Initiator 设备 Target 设备 • Initiator的应用层封装好SCSI SCSI应用 应用协议 SCSI应用 CDB后,调用SCSI传输协议接 口…… 传输协议接口 • Target的应用层收到SCSI CDB后, 根据CDB内容进行相应处理,封 SCSI传输协议 传输协议 SCSI传输协议 装好SCSI 响应后,调用SCSI传输 协议接口…… 互连协议接口 • iSCSI正是SCSI传输协议的一种 -

MADE by ORIGIN SHAREHOLDER REVIEW 2014 Strategy Performance Growth

MADE BY ORIGIN SHAREHOLDER REVIEW 2014 Strategy Performance Growth ENERGY BY ORIGIN In 2014 Origin generated 17,195 gigawatt hours of electricity... WASHED BY ALEX ...enough energy to power around 16 billion (1) loads of washing. PERFORMANCE Statutory Profi t ($m) Statutory Earnings Per Share (¢) Dividends Per Share (¢) (2) HIGHLIGHTS $530m 48.1¢ 50¢ 2014 530 2014 48.1 2014 50 2013 378 2013 34.6 2013 50 A reconciliation between Statutory and 2012 980 2012 90.6 2012 50 Underlying profi t measures can be found 2011 186 2011 19.6 2011 50 in note 2 of the Origin Consolidated Financial Statements. 2010 612 2010 67.7 2010 50 Underlying EBITDA ($m) Underlying Profi t ($m) Underlying Earnings Per Share (¢) Free Cash Flow ($m) $2,139m $713m 64.8¢ $1,599m 2014 2,139 2014 713 2014 64.8 2014 1,599 2013 2,181 2013 760 2013 69.5 2013 1,188 2012 2,257 2012 893 2012 82.6 2012 1,415 2011 1,782 2011 673 2011 71.0 2011 1,316 2010 1,346 2010 585 2010 64.8 2010 800 01 ...............PERFORMANCE HIGHLIGHTS 02 ...............MESSAGE FROM THE CHAIRMAN AND MANAGING DIRECTOR 03 ...............FINANCIAL CALENDAR 2014/2015 03 ...............KEY MILESTONES IN 2014 03 ...............OUR COMPASS 04...............ENERGY MARKETS 04............... CONTACT ENERGY 05 ...............EXPLORATION & PRODUCTION 05 ...............LNG 06 .............. BOARD OF DIRECTORS 06 ..............EXECUTIVE MANAGEMENT TEAM 07 ...............FIVE YEAR FINANCIAL HISTORY 07 ...............GLOSSARY 08 ..............BUSINESS STRATEGY 08 ..............MAP OF ASSETS & OPERATIONS MESSAGE FROM THE CHAIRMAN AND MANAGING DIRECTOR During the period, we announced the acquisition starting to deliver results, as refl ected in the Fellow shareholder, of a 40 per cent interest in the Poseidon improvement in margins in the second half. -

Break Through the TCP/IP Bottleneck with Iwarp

Printer Friendly Version Page 1 of 6 Enter Search Term Enter Drill Deeper or ED Online ID Technologies Design Hotspots Resources Shows Magazine eBooks & Whitepapers Jobs More... Click to view this week's ad screen [Design View / Design Solution] Break Through The TCP/IP Bottleneck With iWARP Using a high-bandwidth, low-latency network solution in the same network infrastructure provides good insurance for generation networks. Sweta Bhatt, Prashant Patel ED Online ID #21970 October 22, 2009 Copyright © 2006 Penton Media, Inc., All rights reserved. Printing of this document is for personal use only. Reprints T he online economy, particularly e-business, entertainment, and collaboration, continues to dramatically and rapidly increase the amount of Internet traffic to and from enterprise servers. Most of this data is going through the transmission control protocol/Internet protocol (TCP/IP) stack and Ethernet controllers. As a result, Ethernet controllers are experiencing heavy network traffic, which requires more system resources to process network packets. The CPU load increases linearly as a function of network packets processed, diminishing the CPU’s availability for other applications. Because the TCP/IP consumes a significant amount of the host CPU processing cycles, a heavy TCP/IP load may leave few system resources available for other applications. Techniques for reducing the demand on the CPU and lowering the system bottleneck, though, are available. iWARP SOLUTIONS Although researchers have proposed many mechanisms and theories for parallel systems, only a few have resulted in working computing platforms. One of the latest to enter the scene is the Internet Wide Area RDMA Protocol, or iWARP, a joint project by Carnegie Mellon University Corp. -

IBM System Storage DS Storage Manager Version 11.2: Installation and Host Support Guide DDC MEL Events

IBM System Storage DS Storage Manager Version 11.2 Installation and Host Support Guide IBM GA32-2221-05 Note Before using this information and the product it supports, read the information in “Notices” on page 295. This edition applies to version 11 modification 02 of the IBM DS Storage Manager, and to all subsequent releases and modifications until otherwise indicated in new editions. This edition replaces GA32-2221-04. © Copyright IBM Corporation 2012, 2015. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents Figures .............. vii Using the Summary tab ......... 21 Using the Storage and Copy Services tab ... 21 Tables ............... ix Using the Host Mappings tab ....... 24 Using the Hardware tab ......... 25 Using the Setup tab .......... 26 About this document ......... xi Managing multiple software versions..... 26 What’s new in IBM DS Storage Manager version 11.20 ................ xii Chapter 3. Installing Storage Manager 27 Related documentation .......... xiii Preinstallation requirements ......... 27 Storage Manager documentation on the IBM Installing the Storage Manager packages website .............. xiii automatically with the installation wizard .... 28 Storage Manager online help and diagnostics xiv Installing Storage Manager with a console Finding Storage Manager software, controller window in Linux and AIX ........ 31 firmware, and readme files ........ xiv Installing Storage Manager packages manually .. 32 Essential websites for support information ... xv Software installation sequence ....... 32 Getting information, help, and service ..... xvi Installing Storage Manager manually ..... 32 Before you call ............ xvi Uninstalling Storage Manager ........ 33 Using the documentation ........ xvi Uninstalling Storage Manager on a Windows Software service and support ....... xvii operating system ........... 33 Hardware service and support ..... -

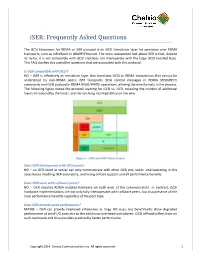

Iser: Frequently Asked Questions

iSER: Frequently Asked Questions The iSCSI Extensions for RDMA or iSER protocol is an iSCSI translation layer for operation over RDMA transports, such as InfiniBand or iWARP/Ethernet. The most unexpected fact about iSER is that, despite its name, it is not compatible with iSCSI and does not interoperate with the large iSCSI installed base. This FAQ clarifies this and other questions that are associated with this protocol. Is iSER compatible with iSCSI? NO – iSER is effectively an emulation layer that translates iSCSI to RDMA transactions that cannot be understood by non-RDMA peers: iSER transports iSCSI control messages in RDMA SEND/RECV commands and iSCSI payload in RDMA READ/WRITE operations, altering the wire formats in the process. The following figure shows the protocol layering for iSCSI vs. iSER, revealing the number of additional layers introduced by the latter, and the resulting incompatibility on the wire. Figure 1 – iSCSI and iSER Protocol Layers Does iSER interoperate with iSCSI peers? NO – an iSER client or server can only communicate with other iSER end nodes. Interoperating in this case means disabling iSER extensions, and losing offload support and all performance benefits. Does iSER work with software peers? NO – iSER requires RDMA enabled hardware on both ends of the communication. In contrast, iSCSI hardware implementations are not only fully interoperable with software peers, but also preserve all the local performance benefits regardless of the peer type. Does iSER provide good performance? MAYBE – iSER can provide improved efficiencies at large I/O sizes, but benchmarks show degraded performance at small I/O sizes due to the additional overheads introduced. -

Designing High-Performance and Scalable Clustered Network Attached Storage with Infiniband

DESIGNING HIGH-PERFORMANCE AND SCALABLE CLUSTERED NETWORK ATTACHED STORAGE WITH INFINIBAND DISSERTATION Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University By Ranjit Noronha, MS * * * * * The Ohio State University 2008 Dissertation Committee: Approved by Dhabaleswar K. Panda, Adviser Ponnuswammy Sadayappan Adviser Feng Qin Graduate Program in Computer Science and Engineering c Copyright by Ranjit Noronha 2008 ABSTRACT The Internet age has exponentially increased the volume of digital media that is being shared and distributed. Broadband Internet has made technologies such as high quality streaming video on demand possible. Large scale supercomputers also consume and cre- ate huge quantities of data. This media and data must be stored, cataloged and retrieved with high-performance. Researching high-performance storage subsystems to meet the I/O demands of applications in modern scenarios is crucial. Advances in microprocessor technology have given rise to relatively cheap off-the-shelf hardware that may be put together as personal computers as well as servers. The servers may be connected together by networking technology to create farms or clusters of work- stations (COW). The evolution of COWs has significantly reduced the cost of ownership of high-performance clusters and has allowed users to build fairly large scale machines based on commodity server hardware. As COWs have evolved, networking technologies like InfiniBand and 10 Gigabit Eth- ernet have also evolved. These networking technologies not only give lower end-to-end latencies, but also allow for better messaging throughput between the nodes. This allows us to connect the clusters with high-performance interconnects at a relatively lower cost. -

We Help Jess Harness the Sun's Energy to Power Her

WE HELP JESS HARNESS THE SUN’S ENERGY TO POWER HER HOME ENERGY MADE FRESH DAILY SUSTAINABILITY REPORT 2015 CONTENTS 02 .................. MANAGING DIRECTOR’S MESSAGE 04 ..................OUR COMPASS 05 ..................WHERE WE OPERATE 06.................. OUR YEAR AT A GLANCE 08.................. BUSINESS STRATEGY 09 ..................OUR PERFORMANCE 10 ..................GLOBAL TRENDS 12 .................. APPROACH TO MATERIALITY 14 .................. ENGAGING WITH OUR STAKEHOLDERS 17 ................... ENERGY DEVELOPMENTS 32 .................. DELIVERING ENERGY 40 .................. MANAGING OUR BUSINESS 60 .................. FUTURE ENERGY SOLUTIONS 64 .................. PHILANTHROPY 67 ................... RATINGS AND BENCHMARKS 68 ..................GLOSSARY DISCOVER MORE ONLINE IMPORTANT INFORMATION Origin has been reporting its sustainability The following Report includes an assessment performance since 2002 via an annual of upstream activities by Australia Pacific LNG, Sustainability Report. in which Origin has a 37.5 per cent shareholding Unless otherwise stated, Origin’s Sustainability (consistent with its holding as at 30 June 2014) Report provides a summary of activities, operated and is the Upstream operator. assets and non-financial performance between This Report does not contain information 1 July 2014 and 30 June 2015. Emissions on the sustainability performance of Contact performance is reported on an operated and Energy, in which Origin held a 53.1 per cent equity basis. Significant events occurring after interest at the close of the reporting period. the close of the period may also be referenced. After the close of the period, Origin divested Origin’s Sustainability Report outlines the its entire interest in Contact Energy. Information Company’s performance against a number regarding Contact Energy can be found of Material Aspects. Origin’s approach to at www.contactenergy.co.nz. 
determining Material Aspects is informed All monetary amounts are in Australian dollars by the Global Reporting Initiative and the unless otherwise stated. -

Dns in Action a Detailed and Practical Guide To

DNS in Action A detailed and practical guide to DNS implementation, configuration, and administration Libor Dostálek Alena Kabelová BIRMINGHAM - MUMBAI DNS in Action A detailed and practical guide to DNS implementation, configuration, and administration Copyright © 2006 Packt Publishing All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the authors, Packt Publishing, nor its dealers or distributors will be held liable for any damages caused or alleged to be caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information. First published: March 2006 Production Reference: 1240206 Published by Packt Publishing Ltd. 32 Lincoln Road Olton Birmingham, B27 6PA, UK. ISBN 1-904811-78-7 www.packtpub.com Cover Design by www.visionwt.com This is an authorized and updated translation from the Czech language. Copyright © Computer Press 2003 Velký průvodce protokoly TCP/IP a systémem DNS. ISBN: 80-722-6675-6. All rights reserved. Credits Authors Development Editor Libor Dostálek Louay Fatoohi Alena Kabelová Indexer Technical Editors Abhishek Shirodkar Darshan Parekh Abhishek Shirodkar Proofreader Chris Smith Editorial Manager Dipali Chittar Production Coordinator Manjiri Nadkarni Cover Designer Helen Wood About the Authors Libor Dostálek was born in 1957 in Prague, Europe. -

Optimizing Dell EMC SC Series Storage for Oracle OLAP Processing

Optimizing Dell EMC SC Series Storage for Oracle OLAP Processing Abstract This paper highlights the advanced features of Dell EMC™ SC Series storage and provides guidance on how they can be leveraged to deliver a cost-effective solution for Oracle® OLAP and DSS deployments. November 2019 Dell EMC Best Practices Revisions Revisions Date Description September 2014 Initial release October 2016 Updated for agnosticism with Dell SC Series all-flash arrays; updated format July 2017 Consolidated best practice information and focus on OLAP environment; updated format November 2019 vVols branding update Acknowledgements Updated by: Mark Tomczik and Henry Wong The information in this publication is provided “as is.” Dell Inc. makes no representations or warranties of any kind with respect to the information in this publication, and specifically disclaims implied warranties of merchantability or fitness for a particular purpose. Use, copying, and distribution of any software described in this publication requires an applicable software license. © 2014–2019 Dell Inc. or its subsidiaries. All Rights Reserved. Dell, EMC, Dell EMC and other trademarks are trademarks of Dell Inc. or its subsidiaries. Other trademarks may be trademarks of their respective owners. Dell believes the information in this document is accurate as of its publication date. The information is subject to change without notice. 2 Optimizing Dell EMC SC Series Storage for Oracle OLAP Processing | 2009-M-BP-O Table of contents Table of contents 1 Introduction .................................................................................................................................................................. -

Global Shipping Transaction Guide

v 2600 or higher Global Shipping Transaction Guide Important Information Payment of this guide may be distributed or disclosed in any form to any third party without written permission of FedEx. This guide is provided to you and its use is You must remit payment in accordance with the FedEx Service Guide, tariff, subject to the terms and conditions of the FedEx PowerShip Placement service agreement or other terms or instructions provided to you by FedEx Agreement. The information in this document may be changed at any time from time to time. You may not withhold payment on any shipments because of without notice. Any conflict between this guide and the FedEx Service Guide equipment failure or for the failure of FedEx to repair or replace any shall be governed by the FedEx Service Guide. equipment. © 2012 FedEx. FedEx and the FedEx logo are registered service marks. All Confidential and Proprietary rights reserved. Unpublished. The information contained in this guide is confidential and proprietary to FedEx Corporate Services, Inc. and its affiliates (collectively “FedEx”). No part FedEx Ship Manager® Software PassPort, Global Shipping Transaction Guide 2 Contents FedEx Home Delivery with Printed Return Shipping Label Output 1 Overview ................................................................................................................ 6 Transaction .............................................................................................................. 30 Features ..................................................................................................................... -

INFOCOM PDF Export File

Mount Alexander Shire Council Community Services Directory Table Of Contents ACCOMMODATION . 1 Emergency Accommodation . 1 Holiday . 1 Hostels . 1 Nursing Homes . 1 Public Housing . 1 Tenancy . 2 AGED AND DISABILITY SERVICES . 2 Aids and Appliances . 2 Intellectual Disabilities . 3 Home Services . 3 Learning Difficulties . 4 Psychiatric/Mental Health . 4 Physical Disabilities . 4 Senior Citizen's Centres . 5 Rehabilitation . 5 Respite Services . 5 ANIMAL WELFARE . 6 Animal Welfare Groups . 6 ANIMALS . 6 Dingos . 6 Dogs . 6 Horses . 7 Pony Clubs . 7 Pigeons . 7 ANTIQUES AND SECONDHAND GOODS . 7 Opportunity Shops . 7 ARTS AND CRAFTS . 7 Dancing . 7 Drama . 7 Drawing . 8 Embroidery . 8 Film . 8 Hobbies . 8 Instruction . 8 Music and Singing . 8 Painting . 9 Quilting . 9 Spinning and Weaving . 9 COMMUNICATIONS . 9 Computers . 9 Fax Transmissions . 10 Languages . 10 Media Newsletters . 11 Postal Communications . 11 Radio . 12 Telephone Services . 12 COMMUNITY ORGANISATIONS . 12 Associations . ..