On Memory Addressing

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

A Microkernel API for Fine-Grained Decomposition

A Microkernel API for Fine-Grained Decomposition Sebastian Reichelt Jan Stoess Frank Bellosa System Architecture Group, University of Karlsruhe, Germany freichelt,stoess,[email protected]

ABSTRACT Microkernel-based operating systems typically require special attention to issues that otherwise arise only in distributed systems. The resulting extra code degrades performance and increases development effort, severely limiting decomposition granularity. We present a new microkernel design that enables OS developers to decompose systems into very fine-grained servers. We avoid the typical obstacles by defining servers as lightweight, passive objects. We replace complex IPC mechanisms by a simple function-call approach, and our passive, module-like server model obviates the need to create threads in every server. Server code is compiled into small self-contained files, which can be loaded into the same address space (for speed) or different address spaces (for safety).

[...] from the microkernel APIs in existence. The need, for instance, to explicitly pass messages between servers, or the need to set up threads and address spaces in every server for parallelism or protection require OS developers to adopt the mindset of a distributed-system programmer rather than to take advantage of their knowledge on traditional OS design. Distributed-system paradigms, though well-understood and suited for physically (and, thus, coarsely) partitioned systems, present obstacles to the fine-grained decomposition required to exploit the benefits of microkernels: First, a lot of development effort must be spent into matching the OS structure to the architecture of the selected microkernel, which also hinders porting existing code from monolithic systems. Second, the more servers exist, a desired property [...]

Virtual Memory

Chapter 4 Virtual Memory Linux processes execute in a virtual environment that makes it appear as if each process had the entire address space of the CPU available to itself. This virtual address space extends from address 0 all the way to the maximum address. On a 32-bit platform, such as IA-32, the maximum address is 2^32 − 1 or 0xffffffff. On a 64-bit platform, such as IA-64, this is 2^64 − 1 or 0xffffffffffffffff. While it is obviously convenient for a process to be able to access such a huge address space, there are really three distinct, but equally important, reasons for using virtual memory. 1. Resource virtualization. On a system with virtual memory, a process does not have to concern itself with the details of how much physical memory is available or which physical memory locations are already in use by some other process. In other words, virtual memory takes a limited physical resource (physical memory) and turns it into an infinite, or at least an abundant, resource (virtual memory). 2. Information isolation. Because each process runs in its own address space, it is not possible for one process to read data that belongs to another process. This improves security because it reduces the risk of one process being able to spy on another process and, e.g., steal a password. 3. Fault isolation. Processes with their own virtual address spaces cannot overwrite each other’s memory. This greatly reduces the risk of a failure in one process triggering a failure in another process. That is, when a process crashes, the problem is generally limited to that process alone and does not cause the entire machine to go down.
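The maximum addresses quoted in the excerpt above follow directly from the address width: with n address bits, the highest address is 2^n − 1. A minimal sketch that checks this arithmetic; the helper name `max_address` is purely illustrative and does not come from the excerpted book.

```python
def max_address(bits: int) -> int:
    """Return the highest address representable with `bits` address bits (2**bits - 1)."""
    return (1 << bits) - 1


# The two widths mentioned in the excerpt: 32-bit (IA-32) and 64-bit (IA-64).
for bits in (32, 64):
    print(f"{bits}-bit maximum address: {max_address(bits):#x}")
```

Running it prints `0xffffffff` for 32 bits and `0xffffffffffffffff` for 64 bits, matching the values given in the text.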

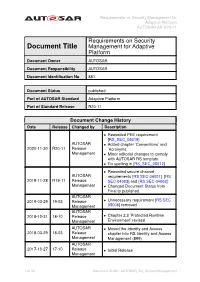

Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11

Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11

| Document Title | Requirements on Security Management for Adaptive Platform |
| --- | --- |
| Document Owner | AUTOSAR |
| Document Responsibility | AUTOSAR |
| Document Identification No | 881 |
| Document Status | published |
| Part of AUTOSAR Standard | Adaptive Platform |
| Part of Standard Release | R20-11 |

Document Change History

| Date | Release | Changed by | Description |
| --- | --- | --- | --- |
| 2020-11-30 | R20-11 | AUTOSAR Release Management | • Reworded PEE requirement [RS_SEC_05019] • Added chapter ’Conventions’ and ’Acronyms’ • Minor editorial changes to comply with AUTOSAR RS template |
| 2019-11-28 | R19-11 | AUTOSAR Release Management | • Fix spelling in [RS_SEC_05012] • Reworded secure channel requirements [RS SEC 04001], [RS SEC 04003] and [RS SEC 04003] • Changed Document Status from Final to published |
| 2019-03-29 | 19-03 | AUTOSAR Release Management | • Unnecessary requirement [RS SEC 05006] removed |
| 2018-10-31 | 18-10 | AUTOSAR Release Management | • Chapter 2.3 ’Protected Runtime Environment’ revised |
| 2018-03-29 | 18-03 | AUTOSAR Release Management | • Moved the Identity and Access chapter into RS Identity and Access Management (899) |
| 2017-10-27 | 17-10 | AUTOSAR Release Management | • Initial Release |

Document ID 881: AUTOSAR_RS_SecurityManagement

Disclaimer This work (specification and/or software implementation) and the material contained in it, as released by AUTOSAR, is for the purpose of information only. AUTOSAR and the companies that have contributed to it shall not be liable for any use of the work. The material contained in this work is protected by copyright and other types of intellectual property rights. The commercial exploitation of the material contained in this work requires a license to such intellectual property rights.

Hardware Configuration with Dynamically-Queried Formal Models

Master’s Thesis Nr. 180 Systems Group, Department of Computer Science, ETH Zurich Hardware Configuration With Dynamically-Queried Formal Models by Daniel Schwyn Supervised by Reto Achermann Dr. David Cock Prof. Dr. Timothy Roscoe April 2017 – October 2017 Abstract Hardware is getting increasingly complex and heterogeneous. With different components having different views of the system, the traditional assumption of unique physical addresses has become an illusion. To adapt to such hardware, an operating system (OS) needs to understand the complex address translation chains and be able to handle the presence of multiple address spaces. This thesis takes a recently proposed model that formally captures these aspects and applies it to hardware configuration in the Barrelfish OS. To this end, I present Sockeye, a domain specific language that uses the model to describe hardware. I then show that code relying on knowledge about the address spaces in a system can be statically generated from these specifications. Furthermore, the model is successfully applied to device management, showing that it can also be used to configure hardware at runtime. The implementation presented here does not rely on any platform specific code and it reduced the amount of such code in Barrelfish’s device manager by over 30%. Applying the model to further hardware configuration tasks is expected to have similar effects. Acknowledgements First of all, I want to thank my advisers Timothy Roscoe, David Cock and Reto Achermann for their guidance and support. Their feedback and critical questions were a valuable contribution to this thesis. I’d also like to thank the rest of the Barrelfish team and other members of the Systems Group at ETH for the interesting discussions during meetings and coffee breaks.

Message Passing for Programming Languages and Operating Systems

Master’s Thesis Nr. 141 Systems Group, Department of Computer Science, ETH Zurich Message Passing for Programming Languages and Operating Systems by Martynas Pumputis Supervised by Prof. Dr. Timothy Roscoe, Dr. Antonios Kornilios Kourtis, Dr. David Cock October 7, 2015 Abstract Message passing as a means of communication has been gaining popularity within domains of concurrent programming languages and operating systems. In this thesis, we discuss how message passing languages can be applied in the context of operating systems which are heavily based on this form of communication. In particular, we port the Go programming language to the Barrelfish OS and integrate the Go communication channels with the messaging infrastructure of Barrelfish. We show that the outcome of the porting and the integration allows us to implement OS services that can take advantage of the easy-to-use concurrency model of Go. Our evaluation based on LevelDB benchmarks shows comparable performance to the Linux port. Meanwhile, the overhead of the messaging integration causes poor performance when compared to the native messaging of Barrelfish, but exposes an easier to use interface, as shown by the example code. Acknowledgments First of all, I would like to thank Timothy Roscoe, Antonios Kornilios Kourtis and David Cock for giving the opportunity to work on the Barrelfish OS project, their supervision, inspirational thoughts and critique. Next, I would like to thank the Barrelfish team for the discussions and the help. In addition, I would like to thank Sebastian Wicki for the conversations we had during the entire period of my Master’s studies.

X79 Extreme11

X79 Extreme11 User Manual Version 1.1 Published June 2013 Copyright©2013 ASRock INC. All rights reserved. Copyright Notice: No part of this manual may be reproduced, transcribed, transmitted, or translated in any language, in any form or by any means, except duplication of documentation by the purchaser for backup purpose, without written consent of ASRock Inc. Products and corporate names appearing in this manual may or may not be registered trademarks or copyrights of their respective companies, and are used only for identification or explanation and to the owners’ benefit, without intent to infringe. Disclaimer: Specifications and information contained in this manual are furnished for informational use only and subject to change without notice, and should not be construed as a commitment by ASRock. ASRock assumes no responsibility for any errors or omissions that may appear in this manual. With respect to the contents of this manual, ASRock does not provide warranty of any kind, either expressed or implied, including but not limited to the implied warranties or conditions of merchantability or fitness for a particular purpose. In no event shall ASRock, its directors, officers, employees, or agents be liable for any indirect, special, incidental, or consequential damages (including damages for loss of profits, loss of business, loss of data, interruption of business and the like), even if ASRock has been advised of the possibility of such damages arising from any defect or error in the manual or product. This device complies with Part 15 of the FCC Rules. Operation is subject to the following two conditions: (1) this device may not cause harmful interference, and (2) this device must accept any interference received, including interference that may cause undesired operation.

DOS Virtualized in the Linux Kernel

DOS Virtualized in the Linux Kernel Robert T. Johnson, III Abstract Due to the heavy dominance of Microsoft Windows® in the desktop market, some members of the software industry believe that new operating systems must be able to run Windows® applications to compete in the marketplace. However, running applications not native to the operating system generally causes their performance to degrade significantly. When the application and the operating system were written to run on the same machine architecture, all of the instructions in the application can still be directly executed under the new operating system. Some will only need to be interpreted differently to provide the required functionality. This paper investigates the feasibility and potential to speed up the performance of such applications by including the support needed to run them directly in the kernel. In order to avoid the impact to the kernel when these applications are not running, the needed support was built as a loadable kernel module. 1 Introduction New operating systems face significant challenges in gaining consumer acceptance in the desktop marketplace. One of the first realizations that must be made is that the majority of this market consists of non-technical users who are unlikely to either understand or have the desire to understand various technical details about why the new operating system is “better” than a competitor’s. This means that such details are extremely unlikely to sway a large amount of users towards the operating system by themselves. The incentive for a consumer to continue using their existing operating system or only upgrade to one that is backwards compatible is also very strong due to the importance of application software.

Run-Time Reconfigurable RTOS for Reconfigurable Systems-On-Chip

Run-time Reconfigurable RTOS for Reconfigurable Systems-on-Chip Dissertation A thesis submitted to the Faculty of Computer Science, Electrical Engineering and Mathematics of the University of Paderborn and to the Graduate Program in Electrical Engineering (PPGEE) of the Federal University of Rio Grande do Sul in partial fulfillment of the requirements for the degree of Dr. rer. nat. and Dr. in Electrical Engineering Marcelo Götz November, 2007 Supervisors: Prof. Dr. rer. nat. Franz J. Rammig, University of Paderborn, Germany Prof. Dr.-Ing. Carlos E. Pereira, Federal University of Rio Grande do Sul, Brazil Public examination in Paderborn, Germany Additional members of examination committee: Prof. Dr. Marco Platzner Prof. Dr. Ulrich Rückert Dr. Mario Porrmann Date: April 23, 2007 Public examination in Porto Alegre, Brazil Additional members of examination committee: Prof. Dr. Flávio Rech Wagner Prof. Dr. Luigi Carro Prof. Dr. Altamiro Amadeu Susin Prof. Dr. Leandro Buss Becker Prof. Dr. Fernando Gehm Moraes Date: October 11, 2007 Acknowledgements This PhD thesis was carried out, in its great majority, at the Department of Computer Science, Electrical Engineering and Mathematics of the University of Paderborn, during my time as a fellow of the working group of Prof. Dr. Franz J. Rammig. Due to a formal agreement between Federal University of Rio Grande do Sul (UFRGS) and University of Paderborn, I had the opportunity to receive a bi-national doctoral degree. Therefore, I had to accomplish, in addition, the PhD Graduate Program in Electrical Engineering of UFRGS. So, I hereby acknowledge, without mentioning everyone personally, all persons involved in making this agreement possible.

Introduction

1 INTRODUCTION A modern computer consists of one or more processors, some main memory, disks, printers, a keyboard, a mouse, a display, network interfaces, and various other input/output devices. All in all, a complex system. If every application programmer had to understand how all these things work in detail, no code would ever get written. Furthermore, managing all these components and using them optimally is an exceedingly challenging job. For this reason, computers are equipped with a layer of software called the operating system, whose job is to provide user programs with a better, simpler, cleaner, model of the computer and to handle managing all the resources just mentioned. Operating systems are the subject of this book. Most readers will have had some experience with an operating system such as Windows, Linux, FreeBSD, or OS X, but appearances can be deceiving. The program that users interact with, usually called the shell when it is text based and the GUI (Graphical User Interface), which is pronounced "gooey", when it uses icons, is actually not part of the operating system, although it uses the operating system to get its work done. A simple overview of the main components under discussion here is given in Fig. 1-1. Here we see the hardware at the bottom. The hardware consists of chips, boards, disks, a keyboard, a monitor, and similar physical objects. On top of the hardware is the software. Most computers have two modes of operation: kernel mode and user mode. The operating system, the most fundamental piece of software, runs in kernel mode (also called supervisor mode).

Strengthening Diversification Defenses by Means of a Non-Readable Code

Strengthening diversification defenses by means of a non-readable code segment Sebastian Österlund Department of Computer Science Vrije Universiteit, Amsterdam, Netherlands Supervised By: H. Bos & C. Giuffrida

Abstract: In this paper we present a new defense against Just-In-Time return-oriented-programming attacks. By making program code non-readable, the assembly of Just-In-Time gadgets by scanning the memory is effectively blocked. Using segmentation on Intel x86 hardware, the implementation of execute-only code can be achieved. We discuss two different ways of implementing such a defense for 32-bit Intel architecture: one for position dependent executables, and one for position independent executables. The first implementation works by splitting the address-space into two mirrored segments. The second implementation creates an execute-only memory-section at the top of the address-space, making it possible to still use the whole address-space. By relying on hardware segmentation the run-time performance overhead of these defenses is minimal.

Keywords: ROP, segmentation, XnR, buffer overflow, memory [...]

[...] hardware segmentation, this approach should theoretically have no run-time performance overhead for Intel x86 architecture, once it has been set up. Both position dependent executables and position independent executables are covered. Furthermore an approach to implement a similar defense on x86_64 using the new MPX [7] instructions is presented. The defense mechanism we present in this paper is of interest, mainly, for use in security of network connected applications (such as servers or web-browsers), since these applications are often the main targets of remote code execution exploits.

II. RETURN-ORIENTED-PROGRAMMING ATTACKS Remote code execution by means of buffer overflows has been a problem for a long time.

Intel® Atom™ Processor E6xx Series Datasheet

Intel® Atom™ Processor E6xx Series Datasheet July 2011 Revision 004US Document Number: 324208-004US INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL’S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. UNLESS OTHERWISE AGREED IN WRITING BY INTEL, THE INTEL PRODUCTS ARE NOT DESIGNED NOR INTENDED FOR ANY APPLICATION IN WHICH THE FAILURE OF THE INTEL PRODUCT COULD CREATE A SITUATION WHERE PERSONAL INJURY OR DEATH MAY OCCUR. Intel may make changes to specifications and product descriptions at any time, without notice. Designers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined.” Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them. The information here is subject to change without notice. Do not finalize a design with this information. The products described in this document may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order.

Adding Support for Vector Instructions to 8051 Architecture

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056 Volume: 05 Issue: 10 | Oct 2018 www.irjet.net p-ISSN: 2395-0072 Adding Support for Vector Instructions to 8051 Architecture Pulkit Gairola1, Akhil Alluri2, Rohan Verma3, Dr. Rajeev Kumar Singh4 1,2,3Student, Dept. of Computer Science, Shiv Nadar University, Uttar Pradesh, India, 4Assistant Dean & Professor, Dept. of Computer Science, Shiv Nadar University, Uttar Pradesh, India

Abstract - The majority of IoT (Internet of Things) devices are meant to just collect data and send it to the cloud for processing. They can be provided with vectorization capabilities to carry out very specific computation work, thus reducing the latency of output. This project is used to demonstrate how to add specialized vectorization capabilities to architectures found in micro-controllers. The datapath of the 8051 is simple enough to be pliable for adding an experimental Vectorization module. We are trying to make changes to an existing scalar processor so that it uses a single instruction to operate on one-dimensional arrays of data called vectors. The significant reduction in the Instruction fetch overhead for vectorizable operations is useful [...]

Some of the features that have made the 8051 popular are:

• 4 KB on chip program memory.
• 128 bytes on chip data memory (RAM)

1.2 Components of 8051[2]

• 4 register banks.
• 128 user defined software flags.
• 8-bit data bus
• 16-bit address bus
• 16 bit timers (usually 2, but may have more, or less).
• 3 internal and 2 external interrupts.
• Bit as well as byte addressable RAM area of 16 bytes.
• Four 8-bit ports, (short models have two 8-bit ports).