Micro-Architectural Threats to Modern Computing Systems

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Commercial Audio

Commercial Audio AUTO DEALERSHIP This 26000 sq ft facility is comprised of four program zones. The showroom zone has background music and paging functionality with EQ for the JBL control 40 sub/sat system providing a frequency response from 32Hz to 20kHz with maximum continuous average SPL of 84.4 dB. The outdoor zone also has background music and paging functionality where most pages will come from the reception microphone. The offices and bathrooms are one zone with music and paging. Each room has its own wall-mounted volume controller. Finally the service area is a paging only zone where most pages come from the service microphone. EQ and paging priorities are set from the DBX ZonePro 641 while power is provided by the Crown CT8150 multichannel amplifier. 120’ 25’ CEILING 80’ S3 S3 S3 S3 SERVICE S102 SERVICE RECEPTION 75W M2 ZC1 ZC3 S102 101W 10’ CEILING S1 S2 S2 S104 S2 ZC3 WOMENS MENS 14’ CEILING OFFICE S105 S2 S1 S1 OFFICE ZC3 AVR M1 S106 S2 ZC1 ZC3 OFFICE ZC3 S1 S1 S107 S2 S103 75W OFFICE ZC3 ZC1 110’ S3 S3 180’ RACK DESCRIPTION Media Source Source Components Dealerships can have many sources, but the primary media source will be to provide background music for the showroom and lot areas. Paging microphones are dbx Zone Pro 641 M commonly used in the showroom reception area and service reception area. Phone systems can also output pages and integrate similarly as a paging mic would ZC1, ZC3 Signal Processing & Routing Crown CT8150 The DBX ZonePro 641 provides both input processing and output processing and signal routing functionality. -

Education Contents

TECHNOLOGY Audio Case Studies & Product Guide Education Contents About HARMAN About Sound Technology Ltd Case Studies • Exeter University • Manchester Metropolitan Business School • University of Leicester • MMU Students’ Union • Oxford Union Debating Chamber • Athlone Institute of Technology • Springfield Community Centre Product Guides • Loudspeakers • Signal Processing and Distribution • Amplificiation • Mixing • Microphones About HARMAN HARMAN Professional Solutions is the world’s largest professional audio, video, lighting, and control products and systems company. It serves the entertainment and enterprise markets with comprehensive systems, including enterprise automation and complete IT solutions for a broad range of applications. HARMAN Professional Solutions brands comprise AKG Acoustics®, AMX®, BSS Audio®, Crown International®, dbx Professional®, DigiTech®, JBL Professional®, Lexicon®, Martin®, Soundcraft® and Studer®. These best- in-class products are designed, manufactured and delivered to a variety of customers, including tour, cinema, retail, corporate, government, education, large venue and hospitality. In addition, HARMAN’s world-class product development team continues to innovate and deliver groundbreaking technologies to meet its customers’ growing needs. For scalable, high-impact communication and entertainment systems, HARMAN Professional Solutions is your single point of contact. About Sound Technology Ltd Sound Technology Ltd is the specialist audio distributor of HARMAN Professional Solutions in the UK and Republic of Ireland. We provide system design, demonstration facilities and servicing of all HARMAN audio products. In this document you’ll find some relevant case studies. For any further information, to speak to our system designers, or to arrange a demo, please call us on 01462 480000. Exeter University The University of Exeter’s stunning multi-million pound Forum project has transformed the heart of the Streatham Campus and provided it with a vi- brant new centrepiece. -

5. Overview of ARM's Cortex-A Series

5. Overview of ARM’s Cortex-A series 5. Overview of ARM’s Cortex-A series (1) 5. Overview of ARM’s Cortex-A series According to the general scope of this Lecture Notes, subsequently we will be concerned only with the Cortex-A series. Key features of ARM’s Cortex-A series Multiprocessor capability Performance classes Word length of the Cortex-A series of the Cortex-A series of the Cortex-A series 5. Overview of ARM’s Cortex-A series (2) Multiprocessor capability of the Cortex-A series Multiprocessor capability of the Cortex-A series Single processor designs, Dual designs with two options A-priory no multiprocessor • a single processor option and multiprocessor designs capability • a multiprocesor capable option Cortex-A8 (2005) Cortex-A9/Cortex-A9 MPCore (2007) Cortex-A7 MPCore (2011) Cortex-A12/Cortex-A12 MPCore (2013) Cortex-A35 (2015) Cortex-A15/Cortex-A15 MPCore (2010) Cortex-A53 (2012) Cortex-A17/Cortex-A17 MPCore (2014) Cortex-A57 (2012) Cortex-A72 (2015) Here we note that in figures or tables we often omit the MPCore tag for the sake of brevity. 5. Overview of ARM’s Cortex-A series (3) Remarks on the interpretation of the term MPCore by ARM • ARM introduced the term MPCore in connection with the announcement of the ARM11 MPCore in 2004 and interpreted it as multicore implementation (actually including up to 4 cores). • Along with the ARM Cortex-A9 MPCore ARM re-interpreted this term such that it indicates now the multiprocessor capability of the processor. 5. Overview of ARM’s Cortex-A series (4) Performance classes of the Cortex-A series -1 [12] Performance classes of the Cortex-A models High-performance models Mainstream models Low-power models Cortex-A15 Cortex-A8 Cortex-A5 Cortex-A57 Cortex-A9 Cortex-A7 Cortex-A72 (Cortex-A12) Cortex-A35 Cortex-A17 Cortex-A53 5. -

Bars & Restaurants

CASE STUDY BARS & RESTAURANTS OPPORTUNITY WONDERS BAR AND GRILL, TEXAS, USA Create a user-friendly system that can E2I Design installed a complete audio solution by HARMAN Professional Solutions at play a wide range of audio sources and Wonders Bar & Grill, a newly opened pub, restaurant, sports bar and live music venue manage volume levels in different areas in downtown Corpus Christi. Owners Dayyan and Darren Wonders hired David Rotter, of the bar with ease. a Systems Integrator at E2I Design, to create a user-friendly system that would enable them to play a wide range of audio sources and manage volume levels in different areas SOLUTION of the bar. After careful consideration, E2I selected a complete HARMAN audio solution E2I selected a complete audio solution made up of dbx controllers, Crown amplification and JBL speakers for their seamless by HARMAN Professional Solutions made integration, intuitive operation and exceptional sound quality. up of dbx controllers, Crown amplification “The owners wanted a state-of-the-art audio system that was very user-friendly, with and JBL speakers for their seamless auto-switching capabilities and independent volume control for different zones in the integration, intuitive operation and bar,” said Rotter. “By partnering with HARMAN, we were able to cover all of our bases for exceptional sound quality. the system—including controllers, amplification and speakers—all from one integrated provider. All of the solutions work together seamlessly, and the HARMAN team was extremely helpful in making sure that the system we created met all of the customer’s needs.” The system E2I Design installed at Wonders Bar & Grill includes a dbx ZonePRO 1260m “With the HARMAN system, digital zone processor, 4 dbx ZC2 wall-mounted zone controllers, 1 dbx ZC3 wall- everyone at the bar can mounted zone controller, 1 Crown DCi 4|600 power amplifier, 8 JBL AWC82 loudspeakers and 1 JBL SRX828SP dual self-powered subwoofer system. -

Si Impact Brochure

Also available MADI-USB USB MADI USB COMBO OPTICAL MADI CAT 5 MADI 32X32 (CAT 5) 32X32 (USB) 64X64 64X64 A complete range of powerful I/O expansion cards Featuring a 32x32 expansion card slot on the rear panel, Si Impact can Optical MADI Dual Cat5 MADI be used in the widest range of applications and integrated seamlessly with existing systems and hardware. MULTIDIGITAL AES CARD (XLR) AES CARD (D-SUB) 32X32 4X4 8X8 A full range of ViSi Connect expansion cards is available for multiple TM ® I/O formats, including MADI and industry standard protocols such as CobraNet Aviom A-Net Rocknet®, CobraNetTM and DanteTM. Soundcraft is committed to the continued development of the ViSi AVIOM A-NET COBRANET BLU LINK 16X16 32X32 16X16 Connect expansion card range, developing new cards as new network AES/EBU AES/EBU D-Type protocols become available. 32-Channel USB Recording included BLU link RockNet®ROCKNET DANTE 64X64 64X64 Stagebox Ready Multi Digital Card DanteTM Remote mixing with your iPad® Available on the App Store, the Soundcraft Si Impact remote iPad® app gives instant hands-on control over all important mixer functions direct from your iPad®. Application examples - • Optimise the front of house mix from anywhere in the room • Set mic gains and 48V from the stage • Adjust monitor levels while standing next to the artist • Adjust channel strip settings remote from the console • Use to extend the fader count of an existing control surface • Allow multiple users on the same console to control their own mixes Laptop not included Soundcraft, Harman International Industries Ltd., Cranborne House, Cranborne Road, Potters Bar, Hertfordshire EN6 3JN, UK T: +44 (0)1707 665000 F: +44 (0)1707 660742 E: [email protected] 40-input Digital Mixing Console Soundcraft USA, 8500 Balboa Boulevard, Northridge, CA 91329, USA T: +1-818-893-8411 F: +1-818-920-3208 E: [email protected] and 32-in/32-out USB Interface www.soundcraft.com ® Part No: 5058401 E & OE 04/2015 with iPad Control #41450 - Si_Impact_Brochure_V5_US_LAUNCH.indd 1-2 20/04/2015 13:17 Walk up. -

Contacting Dbx Technical Support

v1.0 25-Aug-21 My dbx product has developed a fault or I have a question about a product which I have purchased or perhaps intend to purchase. Who do I contact? The information below is applicable to products from dbx. Support for Harman Consumer products can be found at https://harmanaudio.com. Support for Harman Luxury products can be found at https://harmanluxuryaudio.com. USA Customers who have purchased a product in the USA or have a question about a product which they perhaps intend to purchase in the USA are supported directly by Harman Professional. Support contact information can be found at https://dbxpro.com/support/tech_support. This page contains a web-form where you can enter your contact details and query. Outside the USA Support outside of the USA is provided by Harman Professional distribution partners. These partners are responsible for sales, spare parts, repairs, technical support and general product queries. They will be able to provide assistance within local time-zone working hours for the territory. Distribution partner contact information can be obtained from https://dbxpro.com/support/tech_support after selecting your country from the drop-down selection box found at the top of the webpage. If a product develops a fault during the warranty period then please contact the point of sale (retailer or web-store). Warranty information can be found at https://dbxpro.com/warranty. About HARMAN Professional Solutions HARMAN Professional Solutions is the world’s largest professional audio, video, lighting, and control products and systems company. Our brands comprise AKG Acoustics®, AMX®, BSS Audio®, Crown International®, dbx Professional®, DigiTech®, JBL Professional®, Lexicon Pro®, Martin®, and Soundcraft®. -

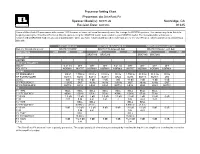

Speaker Model(S): SRX712M Northridge, CA Revision Date: 02/03/05 91325

Processor Setting Chart Processor: dbx DriveRack PA Speaker Model(s): SRX712M Northridge, CA Revision Date: 02/03/05 91325 Users of DriveRack PA processors with version 1.01 firmware or lower, will need to manually enter the tunings for SRX700 systems. The easiest way to do this is to begin by running the DriveRack PA Setup Wizard and selecting the SR4700X model most similar to your SRX700 model. The following table will include a recommended SR4700X model to use as a starting point. Once you have renamed and saved the tuning to one of the User Presets, edit the parameters according to this table. SRX712M Bi-Amp SRX712M Bi-Amp with Sub SRX712M Passive with Sub Run the Wizard and select: SR4702X BIAMP SR4702X Bi-Amp with Sub SR4702X Passive with Sub Output Name LOW MID HIGH LOW MID HIGH LOW MID HIGH SRX718S SRX728S SRX718S SRX728S COMP/LIMIT LIMITER DELAY & POLARITY DELAY 0.21 ms OFF OFF OFF 0.21 ms OFF OFF OFF OFF POLARITY* NORMAL INVERT NORMAL NORMAL NORMAL INVERT NORMAL NORMAL NORMAL XOVER HP FREQUENCY 60HZ 1.70KHz 31.5 Hz 31.5 Hz 90 Hz 1.70KHz 31.5 Hz 31.5 Hz 90 Hz HP FILTER/SLOPE BUT18 BUT6 BUT18 BUT18 LR24 BUT6 BUT18 BUT18 LR6 LEVEL 0dB -10 dB 0 dB -3 dB 0dB -10 dB 0 dB -3 dB 0 dB LP FREQUENCY 1.12KHZ OUT 80 Hz 80 Hz 1.0KHz OUT 80 Hz 80 Hz OUT LP FILTER/SLOPE BUT18 LR12 LR24 LR24 BUT18 LR12 LR24 LR24 BUT18 EQ F1 TYPE BELL BELL BELL BELL BELL BELL BELL BELL F1 FREQUENCY 400Hz 2.5KHz 67 Hz 75 Hz 400Hz 2.5KHz 67 Hz 75 Hz F1 GAIN -2.5 dB -2.5 dB 2 dB 1.5 dB -2.5 dB -2.5 dB 2 dB 1.5 dB 0 dB F1 Q 2.03 0.94 4.41 3.41 2 0.94 4.41 3.41 F2 TYPE BELL BELL BELL BELL F2 FREQUENCY 6.00KHz 42.5 Hz 6.00KHz 42.5 Hz F2 GAIN 0 dB -3.0 dB 1.5 dB 0 dB 0 dB -3.0 dB 1.5 dB 0 dB 0 dB F2 Q 0.94 4.41 0.94 4.41 F3 TYPE BELL BELL F3 FREQUENCY 15.0KHz 15.0KHz F3 GAIN 0 dB +12.0 dB 0 dB 0 dB 0 dB +12.0 dB 0 dB 0 dB 0 dB F3 Q 3.41 3.41 * The DriveRack PA does not have a polarity parameter. -

K92 High Performance Closed-Back Monitoring Headphones

K92 HIGH PERFORMANCE CLOSED-BACK MONITORING HEADPHONES HIGHLIGHTS Professional 40mm Drivers For reference monitor accuracy over a precisely balanced 16Hz–22kHz frequency response Lightweight Design and Self-Adjusting Headband For exceptional comfort over long periods of time Professional Build Quality Gold Hardware, metal construction and closed back design for excellent durability and sound isolation Flexibility Low impedence design and included gold adapter Mix and master your tracks with uninhibited clarity with the AKG K92 over-ear, closed back headphones. Professional-grade 40mm drivers reveal even the subtlest nuances, so you can be confident your mix will translate accurately on any system. Whether you’re fine-tuning track levels within the mix or mastering the final product, the self-adjusting headband and lightweight design will provide hours of comfort. Designed by the company whose mics and headphones have helped create some of the world’s most iconic recordings, the durable K92 is a serious headphone that delivers great sound in the studio and beyond. KEY MESSAGES PROFESSIONAL DRIVERS DESIGNED FOR COMFORT When it comes to audio quality, driver size and weight are critical, especially The exposed headband design keeps the K92 headphones comfortably light, in the lower frequencies. Generous 40mm drivers deliver high sensitivity for without compromising driver size. Breathable, lightweight ear pads encircle your powerful output, plus an extended, balanced frequency response that reveals ears, rather than putting pressure on them. And a single-sided cable gives you every detail of the mix without artifically coloring the sound. freedom to move. To sum it up, these high-performance headphones sound, feel and look great. -

Si Expression Brochure

Technical Data Frequency Response Mic Input to Line Output . +0/-1dB, 20Hz – 20kHz Stereo Input to Master Output . +0.5/-0.5dB, 20Hz – 20kHz Si Expression consoles feature a 64x64 expansion card slot on the rear panel. A full T.H.D. and Noise range of ViSi Connect expansion cards is available for multiple I/O formats. Mic Input (Min Gain) to Bus Output . 0.006% @ 1kHz Soundcraft is committed to the continued development of the ViSi Connect expansion Mic Input (Max Gain) to Bus Output . 0.008% @ 1kHz card range, developing new cards as new network protocols become available. Residual noise, Master Output, no inputs routed, mix fader @0dB . <-88dBu Mic Input E.I.N. (maximum gain) . -126dBu (150 Ω source) Mix noise, masters at unity . < -86dBu 1 input to mix at unity gain . -84dBu CMRR mic @1kHz (max gain) . -80dBu I/O Expansion Cards Crosstalk (@ 1kHz) Channel ON attenuation . <120dB Channel Fader attenuation . <120dB Mic – Mic . -100dB @ 1kHz, -85dB @ 10kHz Line – Line . -100dB @ 1kHz, -85dB @ 10kHz Input Gain Optical MADI Dual Cat5 MADI Mic Gain . -5dB – 58dB integrated pad design 1dB steps Line Trim . -10dB - +16dB EQ HI MID & LO MID . 22Hz – 20kHz, +/-15dB Q 6-0.3 Shelf (HF) . 800Hz – 20kHz, +/-15dB Shelf (LF) . 20Hz – 500Hz, +/-15dB CobraNet™ Aviom A-Net® HPF . 40Hz – 1kHz GEQ . 31Hz – 16kHz 1/3 octave Delay User adjustable delay . 1 sample – 500ms Digital I/O AES/EBU AES/EBU D-Type Converter resolution . 24-bit DSP resolution . 40-bit floating point Latency Mic In to Line Out . <0.8 ms Analogue in to AES out . -

I-Tech Application Guide 1

I-Tech Application Guide 1 Application Guide © 2005 by Crown Audio Inc., 1718 W. Mishawaka Rd., Elkhart, IN 46517-9439 U.S.A. Telephone: 574-294-8000. Fax: 574-294-8329. www.crownaudio.com Trademark Notice: Grounded Bridge™ is a trademark, and Crown® and Macro-Tech® are registered trademarks of Crown International. Other trademarks are the property of their respective owners. 137327-3A Application Guide 09/05 2 I-Tech Application Guide Introduction This application guide provides useful information designed to help you best use your new Crown® I-Tech Series amplifi er. It is designed to complement your amplifi er’s Opera- tion Manual, which describes the specifi c features and specifi cations of your amplifi er. This guide also provides suggestions for matching I-Tech Series amplifi ers to JBL VERTEC® Line Array loudspeaker systems, along with wiring pin assignments for several I-Tech / VerTec combinations. A list of suggested publications and webpages is provided for your convenience in the Appendix section. Please be sure to read all instructions, warnings and cautions contained both in this guide and your amplifi er’s Operation Manual. Application Guide I-Tech Application Guide 3 Table of Contents Introduction .....................................................................................2 1 Power and Thermal Information ..........................................................5 1.1 Output Power ..................................................................................................5 1.2 AC Power Draw and Thermal Dissipation -

Houses of Worship

PARTNER STORY HOUSES OF WORSHIP OPPORTUNITY GSJA BANDENGAN CHURCH, INDONESIA To deliver clear, intelligible sound for Established in 1964 under the auspices of the Church of the Assemblies of God, GSJA sermons and special events, GSJA Bandengan Church has been bringing people together for more than 50 years. Located Bandengan Church hired IMS Indonesia to in the heart of Jakarta, Bandengan Church hosts a variety of events including musical upgrade their existing sound system with a performances, children’s prayer conferences and special events, attracting worshipers premium networked audio solution. from all age groups. The venue features a sprawling ground floor as well as a balcony with extensive seating capacity. In order to ensure pristine sound with even, balanced SOLUTION coverage, Bandengan Church hired AV integrators IMS Indonesia to upgrade their IMS Indonesia equipped Bandengan existing sound system with a cutting-edge networked audio solution featuring JBL Church with a cutting-edge networked loudspeakers, a Soundcraft mixing console and a dbx loudspeaker management system. audio solution featuring JBL loudspeakers, “GSJA Bandengan Church required an upgrade for their existing sound setup,” said a Soundcraft mixing console and a dbx Christian Prasetya, Brand Manager, IMS Indonesia. “Since the church hall is spread loudspeaker management system. out over a fairly large area, the setup had to provide even sound distribution across the worship space. To energize the church services, they needed excellent vocal clarity, reliability and smooth reproduction of sound across all frequencies. To meet these requirements, we chose the JBL VRX series speakers.” We“ were seeking a powerful, IMS Indonesia equipped Bandengan Church with JBL VRX932LAP line array loudspeakers dynamic audio solution that to ensure consistent coverage in every seat for both the ground floor and balcony. -

Owner's Manual in a Safe Place for Future Reference

AV Receiver Owner’s Manual Read the supplied booklet “Safety Brochure” before using the unit. English CONTENTS Accessories . 5 7 Connecting other devices . 33 Connecting an external power amplifier . .33 Connecting recording devices . 33 FEATURES 6 Connecting a device that supports SCENE link playback (remote connection) . 34 Connecting a device compatible with the trigger function . 34 What you can do with the unit . 6 8 Connecting the power cable . 35 Part names and functions . 8 9 Selecting an on-screen menu language . 36 Front panel (RX-V773) . 8 10 Optimizing the speaker settings automatically (YPAO) . 37 Front panel (RX-V673) . 9 Measuring at one listening position (single measure) . 39 Front display (indicators) . 10 Measuring at multiple listening positions (multi measure) (RX-V773 only) . 40 Rear panel (RX-V773) . 11 Checking the measurement results . 41 Rear panel (RX-V673) . 12 Reloading the previous YPAO adjustments . 42 Remote control . 13 Error messages . .43 Warning messages . 44 PREPARATIONS 14 PLAYBACK 45 General setup procedure . 14 1 Placing speakers . 15 Basic playback procedure . 45 Selecting an HDMI output jack (RX-V773 only) . 45 2 Connecting speakers . 19 Selecting the input source and favorite settings with one touch Connecting front speakers that support bi-amp connections . 21 Input/output jacks and cables . 22 (SCENE) . 46 Configuring scene assignments . 46 3 Connecting a TV . 23 Selecting the sound mode . 47 4 Connecting playback devices . 28 Enjoying sound field effects (CINEMA DSP) . 48 Connecting video devices (such as BD/DVD players) . 28 Enjoying unprocessed playback . .50 Connecting audio devices (such as CD players) . 31 Enjoying pure high fidelity sound (Pure Direct) .