Digital Music Input Rendering for Graphical Presentations in SoundStroll (MaxMSP)

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

A Framework for Embedded Digital Musical Instruments

Ivan Franco, Music Technology Area, Schulich School of Music, McGill University, Montreal, Canada. A thesis submitted to McGill University in partial fulfillment of the requirements for the degree of Doctor of Philosophy. © 2019 Ivan Franco. 2019/04/11.

Abstract. Gestural controllers allow musicians to use computers as digital musical instruments (DMIs). The body gestures of the performer are captured by sensors on the controller and sent as digital control data to audio synthesis software. Until now, DMIs have been largely dependent on the computing power of desktop and laptop computers, but the most recent generations of single-board computers have enough processing power to satisfy the requirements of many DMIs. The advantage of those single-board computers over traditional computers is that they are much smaller. They can be easily embedded inside the body of the controller and used to create fully integrated and self-contained DMIs. This dissertation examines various applications of embedded computing technologies in DMIs. First we describe the history of DMIs and then expose some of the limitations associated with the use of general-purpose computers. Next we present a review of different technologies applicable to embedded DMIs and a state of the art of instruments and frameworks. Finally, we propose new technical and conceptual avenues, materialized through the Prynth framework, developed by the author and a team of collaborators during the course of this research. The Prynth framework allows instrument makers to have a solid starting point for the development of their own embedded DMIs.

An Application of Max/MSP in the Field of Live Electro-Acoustic Music

An Application of Max/MSP in the Field of Live Electro-Acoustic Music: A Case Study. Oles Protsidym, Faculty of Music, McGill University, Montreal, August 1999. Thesis submitted to the Faculty of Graduate Studies and Research in partial fulfillment of the requirements for the degree of Master of Arts in Computer Applications in Music. © Oles Protsidym, 1999.

The author has granted a non-exclusive licence allowing the National Library of Canada to reproduce, loan, distribute or sell copies of this thesis in microform, paper or electronic formats. The author retains ownership of the copyright in this thesis. Neither the thesis nor substantial extracts from it may be printed or otherwise reproduced without the author's permission.

For many years the integration of real-time digital audio signal processing with dynamic control has been one of the most significant issues facing live electro-acoustic music. While real-time electro-acoustic music systems began to appear as early as the 1970s, for approximately two decades a lack of portability and prohibitive cost restricted their availability to a limited number of electronic music studios and research centers. The rapid development of computer and music technologies in the 1990s enabled desktop-based real-time digital audio signal processing, making it available for general use.

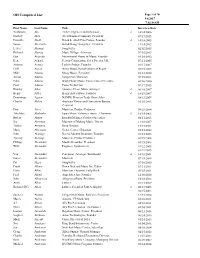

OH Completed List

OH Completed List. Page 1 of 70. 9/6/2017 7:02:48AM

| First Name | Last Name | Title | Interview Date |
| --- | --- | --- | --- |
| Yoshiharu | Abe | TEAC, Engineer and Innovator | d 10/14/2006 |
| Norbert | Abel | Abel Hammer Company, President | 07/17/2015 |
| David L. | Abell | David L. Abell Fine Pianos, Founder | d 10/18/2005 |
| Susan | Aberbach | Hill & Range Songs Inc., President | 11/14/2012 |
| Lester | Abrams | Songwriter | 02/02/2015 |
| Richard | Abreau | Music Village, Advocate | 07/03/2013 |
| Gus | Acevedo | International House of Music, Founder | 01/20/2012 |
| Ken | Achard | Peavey Corporation, Sales Director UK | 07/11/2005 |
| Antonio | Acosta | Luthier Strings, Founder | 01/17/2007 |
| Cliff | Acred | Amro Music, Band Instrument Repair | 07/15/2013 |
| Mike | Adams | Moog Music, President | 01/13/2010 |
| Arthur | Adams | Songwriter, Musician | 09/25/2011 |
| Edna | Adams | World Wide Music, Former Sales Executive | 04/16/2010 |
| Paul | Adams | Piano Technician | 07/17/2015 |
| Hawley | Ades | Shawnee Press, Music Arranger | d 06/10/2007 |
| Henry | Adler | Henry Adler Music, Founder | d 10/19/2007 |
| Dominique | Agnew | NAMM, Director Trade Show Sales | 08/13/2009 |
| Charles | Ahlers | Anaheim Visitor and Convention Bureau, President | 01/25/2013 |
| Don | Airey | Musician, Product Endorser | 09/29/2014 |
| Takehiko | Akaboshi | Japan Music Volunteer Assoc., Chairman | d 10/14/2006 |
| Bulent | Akbay | Istanbul Mehmet, Product Specialist | 04/11/2013 |
| Joy | Akerman | Museum of Making Music, Docent | 11/30/2007 |
| Toshio | Akiyama | Band Director | 12/15/2011 |
| Marty | Albertson | Guitar Center, Chairman | 01/21/2012 |
| John | Aldridge | Not So Modern Drummer, Founder | 01/23/2005 |
| Tommy | Aldridge | Musician, Product Endorser | 01/19/2008 |
| Philipp | Alexander | Musik Alexander, President | 03/15/2008 |
| Will | Alexander | Engineer, Synthesizers | 01/22/2005, 01/22/2015 |
| Van | Alexander | Composer, Arranger, Bandleader | d 10/18/2001 |
| James | Alexander | Musician | 07/15/2015 |
| Pat | Alger | Songwriter | 07/10/2015 |
| Frank | Alkyer | Down Beat and Music Inc, Editor | 03/31/2011 |
| Davie | Allan | Musician, Guitarist, Early Rock | 09/25/2011 |
| Fred | Allard | Amp Sales, Inc, Founder | 12/08/2010 |
| John | Allegrezza | Allegrezza Piano, President | 10/10/2012 |
| Andy | Allen | Luthier | 07/11/2017 |
| Richard (RC) | Allen | Luthier, Friend of Paul A. | |

Opcode Systems : Definition of Opcode Systems and Synonyms of Opcod

http://dictionary.sensagent.com/opcode systems/en-en/

Opcode Systems, Inc. was founded in 1985 by Dave Oppenheim and based in and around Palo Alto, California, USA. Opcode produced MIDI sequencing software for the Mac OS and Microsoft Windows, which would later include digital audio capabilities, as well as audio and MIDI hardware interfaces. Opcode's MIDIMAC sequencer, launched in 1986, was the first commercially available MIDI sequencer for the Macintosh computer and one of the first commercially available music sequencers on any commercial computer platform. At the time Opcode went under, the Studio Vision sequencer was at the front of the pack, with arguably the best MIDI editor written to this day. Their most notable software titles include: Vision (a MIDI-only sequencer); Studio Vision (a full sequencer, including digital audio); Galaxy (a patch editor and librarian); OMS (a MIDI-interface environment); and Max (a graphical development environment). In 1998, Opcode was bought by Gibson Guitar Corporation. Development on Opcode products ceased in 1999. [1] Some of Opcode's ex-employees went on to be part of Apple's Mac OS X Core Audio and MIDI software development.

Detailed History. In 1985, Stanford University graduate Dave Oppenheim founded Opcode. Dave was the majority partner, focusing on Research & Development, with Gary Briber the minority partner focusing on Sales & Marketing. In 1986, two major products were released. One was the MIDIMAC Sequencer, which later became the Opcode Sequencer and, eventually, Vision.

Improvisatory Live Visuals : Playing Images Like a Musical Instrument

Université du Québec à Montréal. Improvisatory Live Visuals: Playing Images Like a Musical Instrument. Thesis submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Art Studies and Practices, by Katherine Liberovskaya. September 2014.

Université du Québec à Montréal, Service des bibliothèques. Notice: This thesis is distributed with respect for the rights of its author, who has signed the form Autorisation de reproduire et de diffuser un travail de recherche de cycles supérieurs (SDU-522 - Rév.01-2006). This authorization stipulates that "in accordance with Article 11 of Regulation No. 8 on graduate studies, [the author] grants the Université du Québec à Montréal a non-exclusive licence to use and publish all or a substantial part of [his or her] research work for educational and non-commercial purposes. More specifically, [the author] authorizes the Université du Québec à Montréal to reproduce, disseminate, lend, distribute or sell copies of [his or her] research work for non-commercial purposes on any medium whatsoever, including the Internet. This licence and this authorization do not entail a waiver by [the author] of [his or her] moral rights or intellectual property rights. Unless otherwise agreed, [the author] retains the freedom to disseminate and commercialize, or not, this work, of which [he or she] holds a copy."

Acknowledgements. This doctoral thesis would never have been possible without the priceless direct and indirect help of a number of people.

Guest Scholar Miller S. Puckette, September 16, 2010, 12:30pm

Brooklyn College Center for Computer Music presents guest scholar Miller S. Puckette, September 16, 2010, 12:30pm. Puckette is the original author of Max, electronic music software that is popular worldwide.

The Brooklyn College Center for Computer Music (www.bc-ccm.org) announces a special guest lecture by renowned computer musician and computer scientist Miller S. Puckette, who will visit Brooklyn College and give a presentation on Thursday, September 16 at 12:30pm in the Woody Tanger Auditorium (Room 150, first floor, Brooklyn College Library). Puckette is best known as the original author of Max, a computer music software application that is now used by thousands of musicians and media artists worldwide.

Puckette describes his talk, "Re-purposing Musical Instruments As Synthesizer Controllers", as follows: "In order to use a computer as a musical instrument, one can either design a new interface (and then have to learn to play it) or fall back on one or more existing instruments and somehow hijack the performer's actions for use controlling the new instrument. I've been working for several years on the second of the two approaches, in particular trying to use the instrumental sound output as a control source for an electronic instrument. This talk is a progress report on what I've been able to do so far using a guitar and a small percussion instrument to control a small but growing collection of synthesis algorithms."

Miller Puckette is Associate Director of the Center for Research in Computing and the Arts (CRCA) at the University of California, San Diego.

Download Complete Oral History List

OH Completed List. Page 1 of 91. 3/9/2020 10:54:03AM

| First Name | Last Name | Title | Interview Date |
| --- | --- | --- | --- |
| Joe | Abbati | Frost School of Music, Professor | 2019-11-17 |
| Yoshiharu | Abe | TEAC, Engineer and Innovator | 2006-10-14 |
| Norbert | Abel | Abel Hammer Company, President | 2015-07-17 |
| David L. | Abell | David L. Abell Fine Pianos, Founder | 2005-10-18 |
| Susan | Aberbach | Hill & Range Songs Inc., President | 2012-11-14 |
| Lester | Abrams | Songwriter | 2015-02-02 |
| Richard | Abreau | Music Village, Advocate | 2013-07-03 |
| Gus | Acevedo | International House of Music, Founder | 2012-01-20 |
| Ken | Achard | Peavey Corporation, Sales Director UK | 2005-07-11 |
| Tony | Acosta | Luthier Strings, Founder | 2007-01-17 |
| Cliff | Acred | Amro Music, Band Instrument Repair | 2013-07-15 |
| Arthur | Adams | Songwriter, Musician | 2011-09-25 |
| Edna | Adams | World Wide Music, Former Sales Executive | 2010-04-16 |
| Mike | Adams | Sound Image, Chief Engineer | 2018-03-28 |
| Paul | Adams | Piano Technician | 2015-07-17 |
| Mike | Adams | Moog Music, President | 2010-01-13 |
| Chris | Adamson | Tour Manager | 2019-01-25 |
| Leo | Adelman | Musician | 2019-05-14 |
| Hawley | Ades | Shawnee Press, Music Arranger | 2007-06-10 |
| Henry | Adler | Henry Adler Music, Founder | 2007-10-19 |
| Dominique | Agnew | NAMM, Director Trade Show Sales | 2009-08-13 |
| Carlos "DJ Quest" | Aguilar | DJ | 2020-01-18 |
| Charles | Ahlers | Anaheim Visitor and Convention Bureau, President | 2013-01-25 |
| Chuck | Ainlay | Recording Engineer | 2019-07-16 |
| Don | Airey | Musician, Product Endorser | 2014-09-29 |
| Takehiko | Akaboshi | Japan Music Volunteer Assoc., Chairman | 2006-10-14 |
| Bulent | Akbay | Istanbul Mehmet, Product Specialist | 2013-04-11 |
| Joy | Akerman | The NAMM Foundation's Museum of Making | 2007-11-30 |